Shivangi Prasad

Postdoctoral researcher, Medium Energy Physics

- Chicago, IL

- Google Scholar

Image Transformations in C++

3 minute read

Published: November 20, 2019

Introduction

Watermarking.

This project was part of the final-week assignment of the Coursera course Object-Oriented Data Structures in C++, taught by Prof. Ulmschneider of the Department of Computer Science at UIUC. It is a well-designed course for people from all backgrounds who want to understand the essential components of C++ code. The goal of this project was to transform a given image by changing the hue, saturation, or luminance of its pixels.

The first part of the assignment was to 'Illinify' the image. Hue refers to the color of a pixel and is defined on a range from 0 to 360. The hue of each pixel is set to either 'Illini Orange', which has a hue of 11, or 'Illini Blue', which has a hue of 216, depending on which of the two the pixel's original hue is closer to.
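The hue rule can be sketched in a few lines. This is written in Python rather than the course's C++, and it makes two assumptions the post does not state: that "closer" is measured around the 0-360 color circle (so a hue of 350 is 21 away from 11), and that a tie goes to orange. The function names are hypothetical.

```python
ILLINI_ORANGE = 11.0
ILLINI_BLUE = 216.0


def hue_distance(a, b):
    """Shortest distance between two hues on the 0-360 color circle (assumed wrap-around)."""
    diff = abs(a - b) % 360.0
    return min(diff, 360.0 - diff)


def illinify_hue(hue):
    """Return whichever Illini hue is closer to the given hue; ties go to orange (assumption)."""
    if hue_distance(hue, ILLINI_ORANGE) <= hue_distance(hue, ILLINI_BLUE):
        return ILLINI_ORANGE
    return ILLINI_BLUE
```

For example, `illinify_hue(200)` gives 216, while `illinify_hue(350)` gives 11 because of the wrap-around.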

The second part of the project was to put a spotlight on the image: the luminance (brightness) of each pixel decreases with increasing radial distance from a central pixel. In this case the luminance was decreased by 0.5% per pixel of Euclidean distance, up to a maximum decrease of 80%.
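A minimal sketch of the spotlight rule, again in Python for illustration. The function name and argument order are hypothetical; the 0.5% per pixel rate and the 80% cap come from the description above.

```python
import math


def spotlight_luminance(lum, x, y, center_x, center_y):
    """Dim a pixel's luminance by 0.5% per pixel of distance from the center, capped at 80%."""
    distance = math.hypot(x - center_x, y - center_y)
    decrease = min(0.005 * distance, 0.8)
    return lum * (1.0 - decrease)
```

A pixel 100 pixels from the center keeps half its luminance, and anything 160 or more pixels away keeps 20%.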

The third part of the project was to put a watermark on the image. This uses an overlay image as a stencil: for every pixel of the Alma image that overlaps an overlay pixel whose luminance is 1, the luminance in the original image is increased by 0.2 (capped at a maximum of 1).
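The watermark step can be sketched on plain 2D lists of luminance values. This is an illustrative Python version, not the course's C++ code. It assumes the overlay is aligned with the top-left corner of the base image and that only the overlapping region is touched; both function names are hypothetical.

```python
def watermark_luminance(base_lum, overlay_lum):
    """Brighten a base pixel by 0.2 (capped at 1.0) where the overlay luminance is exactly 1."""
    if overlay_lum == 1.0:
        return min(base_lum + 0.2, 1.0)
    return base_lum


def apply_watermark(base, overlay):
    """Return a copy of `base` with the stencil applied over the overlapping region."""
    result = [row[:] for row in base]
    for r in range(min(len(base), len(overlay))):
        for c in range(min(len(base[r]), len(overlay[r]))):
            result[r][c] = watermark_luminance(base[r][c], overlay[r][c])
    return result
```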

Project A: Classifying Images with Feature Transformations

Last modified: 2020-10-27 12:50

Status: RELEASED.

Due date : Mon. Oct. 26 at 11:59PM AoE (Anywhere on Earth) (Tue 10/27 at 07:59am in Boston)

- 2020-10-26 : Clarified that Results section of your report should include discussion of your main findings and lessons you learned as well as the required figures (as the rubric always indicated)

- 2020-10-20 : Added turn-in link for the Reflection Form

- 2020-10-07 : Clarification of plots in Figure 1B

Turn-in links :

- PDF report for Problem 1 (8 vs 9): https://www.gradescope.com/courses/173055/assignments/697997/

- PDF report for Problem 2 (Sneaker vs Sandal): https://www.gradescope.com/courses/173055/assignments/698006/

- ZIP file of predictions for Problem 2 leaderboard: https://www.gradescope.com/courses/173055/assignments/697979/

- Reflection on Project A: https://forms.gle/oor7k8sGH4KDfqnU6

This is a four week project with lots of open-ended programming. Get started right away!

Team Formation

In this project, you can work with teams of 2 people, or (if you prefer) individually. Individual teams still need to complete all the parts below. We want to incentivize you to work in pairs.

If you need help finding teammates, please post to our "Finding a Partner for Project A" post on Piazza.

By the start of the second week (by end of day Mon 10/05), you should have identified your partner and signed up here:

- ProjectA Team Formation Form

- Please use your tufts.edu G Suite account. You must provide tufts.edu email addresses.

- Even if you decide to work alone, you should fill this form out acknowledging that.

Work to Complete

As a team, you will work on one structured problem (Problem 1: 8-vs-9) and then one open-ended problem (Problem 2: Sneaker-vs-Sandal).

In Problem 1, you will demonstrate that you know how to train a logistic regression classifier and interpret the results.

In Problem 2, you will practice the development cycle of an ML practitioner:

- Propose a reasonable ML pipeline (feature extraction + classifier)

- Evaluate it and analyze the results carefully

- Revise the pipeline and repeat

For Problem 2, we will maintain a leaderboard on Gradescope. You should periodically submit the predictions of your best model on the test set.

What to Turn In

Each team will prepare one PDF report for Problem 1:

- Prepare a short PDF report (no more than 3 pages).

- This document will be manually graded according to our rubric

- You can use your favorite report-writing tool (Word, Google Docs, LaTeX, ...)

- Should be human-readable. Do not include code. Do NOT just export a Jupyter notebook to PDF.

- Should have each subproblem marked via the in-browser Gradescope annotation tool

Each team will prepare one PDF report for Sneaker-vs-Sandal (Problem 2)

- Prepare a short PDF report (no more than 5 pages).

Each team should submit one ZIP file of test-set predictions for the Sneaker-vs-Sandal Leaderboard (Problem 2):

- yproba1_test.txt : plain text file

- One line per test-set example in data_sneaker_vs_sandal/x_test.csv

- Each line contains a float probability that the relevant example should be classified as a positive example given its features

- Should be loadable into NumPy as a 1D array via this snippet: np.loadtxt('yproba1_test.txt')
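One way to produce a file in this format is `np.savetxt`, which writes one value per line for a 1D array. The sketch below uses a hypothetical helper `save_predictions` and made-up probabilities, and writes to a temporary directory only to show the round trip through `np.loadtxt`.

```python
import os
import tempfile

import numpy as np


def save_predictions(yproba1, path):
    """Write one probability per line so np.loadtxt(path) returns a 1D array."""
    yproba1 = np.asarray(yproba1, dtype=float).ravel()
    if np.any((yproba1 < 0.0) | (yproba1 > 1.0)):
        raise ValueError("probabilities must lie in [0, 1]")
    np.savetxt(path, yproba1)


# Made-up probabilities standing in for real test-set predictions.
demo_proba = [0.1, 0.85, 0.5]
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "yproba1_test.txt")
    save_predictions(demo_proba, demo_path)
    loaded = np.loadtxt(demo_path)
```

It is worth checking that the number of lines matches the number of rows in x_test.csv before zipping the file.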

Multiple submissions to the leaderboard are allowed and even encouraged. By the deadline, make sure the "best" pipeline you design has its predictions submitted.

Problem 1: Logistic Regression for Image Classification of Handwritten Digits

Example 28x28 pixel images of "8s" and "9s". Each image is titled with its associated binary label.

We consider building a classifier to distinguish images of handwritten digits, specifically the digit 8 from the digit 9. You'll use the \(x\) and \(y\) examples provided in CSV files located in the data_digits_8_vs_9_noisy folder of the starter code.

https://github.com/tufts-ml-courses/comp135-20f-assignments/tree/master/projectA/data_digits_8_vs_9_noisy

We extracted this data from the well-known MNIST dataset by LeCun, Cortes, and Burges. We have lightly preprocessed it for usability, and we made the problem slightly more difficult by adding a small amount of random noise to each image.

Your challenge is to build a logistic regression classifier to distinguish '8' from '9'.

Each example image (indexed by integer \(i\) ) has features \(x_i \in \mathbb{R}^F\) and label \(y_i \in \{0, 1\}\) .

- Each row of the provided x_train.csv file is the feature vector \(x_i\) for one image.

- Each row of the provided y_train.csv file is the label \(y_i\) for the corresponding image.

The features \(x_i\) for the \(i\)-th image are the gray-scale pixel intensity values of a 28x28 pixel image. Each pixel's feature value varies between 0.0 (black) and 1.0 (bright white), with shades of gray possible in between. We've reshaped and flattened each 28x28 image so that it has a feature vector \(x_i\) of size 784 (= 28 x 28).

The label \(y_i\) for the \(i\)-th image is a binary value. Here, we've encoded the '8's as a 0 and '9's as a 1.

Preparation and Starter Code

You are given the following predefined labeled datasets:

- "training" set of 9817 total examples of handwritten digits and their labels.

- "validation" set of 1983 total examples, and their labels

- "test" set of 1983 total examples ( but no labels! )

We emphasize that throughout this project, your test set does not have labels available to you. Your goal is to build a classifier that generalizes well.

See the sample code show_images.py to get a sense of how to load these into Python and display the images.

https://github.com/tufts-ml-courses/comp135-20f-assignments/blob/master/projectA/show_images.py

In your PDF report, include the following sections:

1A : Dataset Exploration

In one table, summarize the composition of your training and validation sets.

Include 3 rows:

- total number of examples

- number of positive examples

- fraction of positive examples

You should always make such a table, to understand your data and help explain any trends you are seeing.
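The three rows of this table can be computed directly from a label array. The sketch below uses a hypothetical helper `summarize_labels` and a tiny made-up label vector; in practice you would pass the arrays loaded from y_train.csv and your validation labels.

```python
import numpy as np


def summarize_labels(y):
    """Return the three table rows for one split: total, positives, fraction positive."""
    y = np.asarray(y)
    total = int(y.size)
    positives = int(np.sum(y == 1))
    return {
        "total": total,
        "positive": positives,
        "fraction_positive": positives / total,
    }


# Made-up labels for illustration only.
summary = summarize_labels([0, 1, 1, 0, 1, 0, 0, 1])
```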

1B : Assess Loss and Error vs. Training Iterations

Using sklearn.linear_model.LogisticRegression, you should fit logistic regression models to your training split.

Set C = 1e6 and solver='lbfgs'. Leave other parameters at their default values. Explore what happens when we limit the iterations allowed for the solver to converge on its solution.

For the values i = 1, 2, 3, 4, ... 39, 40, build a logistic regression model with setting max_iter=i . Fit each such model to the training data, and keep track of the following performance metrics on both training and validation sets:

- binary cross entropy (aka log loss)

- error rate (can use sklearn.metrics.zero_one_loss ).

You may safely ignore any warnings about lack of convergence.
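The loop described above might look like the following sketch. It uses a small synthetic dataset from `make_classification` as a stand-in for the digit data, so the numbers it produces say nothing about the real task; the variable names and the `history` dictionary are assumptions made for illustration.

```python
import warnings

from sklearn.datasets import make_classification
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import log_loss, zero_one_loss

# Synthetic stand-in for the training and validation splits.
x_all, y_all = make_classification(n_samples=600, n_features=20, random_state=0)
x_tr, y_tr = x_all[:400], y_all[:400]
x_va, y_va = x_all[400:], y_all[400:]

history = {"tr_loss": [], "va_loss": [], "tr_err": [], "va_err": []}
iters = list(range(1, 41))
for i in iters:
    model = LogisticRegression(C=1e6, solver="lbfgs", max_iter=i)
    with warnings.catch_warnings():
        # Early stopping is the point here, so convergence warnings are silenced.
        warnings.simplefilter("ignore", category=ConvergenceWarning)
        model.fit(x_tr, y_tr)
    for name, x, y in (("tr", x_tr, y_tr), ("va", x_va, y_va)):
        proba1 = model.predict_proba(x)[:, 1]
        history[name + "_loss"].append(log_loss(y, proba1))
        history[name + "_err"].append(zero_one_loss(y, model.predict(x)))
```

Each list in `history` then has one entry per value of `max_iter`, ready to be plotted against `iters`.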

Figure 1B : Produce two plots side by side:

- Left plot should show log loss (y-axis) vs iteration (x-axis), with two lines (one for training, one for validation)

- Right plot should show error rate (y-axis) vs iteration (x-axis), with two lines (one for training, one for validation)

Place these plots into your PDF document, with appropriate captions.

Short Answer 1B : Below the plots, discuss the results you are seeing; what do they show, and why?

1C : Hyperparameter Selection

You should now explore a range of penalty strength values C . You can fix max_iter = 1000 .

Figure 1C : Produce a plot of the error rate as a function of C . Which hyperparameter should you select?
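A hedged sketch of such a search follows. Note that in scikit-learn, C is the inverse of the regularization strength, so smaller values of C mean a stronger penalty. The log-spaced grid, the synthetic data, and the choice to select on validation error are all assumptions for illustration, not requirements from the handout.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import zero_one_loss

# Synthetic stand-in for the training and validation splits.
x_all, y_all = make_classification(n_samples=600, n_features=20, random_state=0)
x_tr, y_tr = x_all[:400], y_all[:400]
x_va, y_va = x_all[400:], y_all[400:]

C_grid = np.logspace(-4, 4, 9)  # assumed candidate values
tr_err, va_err = [], []
for C in C_grid:
    model = LogisticRegression(C=C, solver="lbfgs", max_iter=1000)
    model.fit(x_tr, y_tr)
    tr_err.append(zero_one_loss(y_tr, model.predict(x_tr)))
    va_err.append(zero_one_loss(y_va, model.predict(x_va)))

# Pick the C with the lowest validation error (first one wins on ties).
best_C = float(C_grid[int(np.argmin(va_err))])
```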

1D : Analysis of Mistakes

For the selected model from 1C, we might wonder if there is any pattern to the examples the classifier gets wrong.

Figure 1D : Produce two plots, one consisting of 9 sample images that are false positives on the validation set, and one consisting of 9 false negatives. You can display the images by converting the pixel data using the matplotlib function imshow(), using the Grey colormap, with vmin=0.0 and vmax=1.0. Place each plot into your PDF as a properly captioned figure.

Short Answer 1D : Discuss the results you are seeing in Figure 1D. What kinds of mistakes is the classifier making?
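To pick the images for Figure 1D you first need the indices of the mistakes. A minimal sketch, assuming predictions are made by thresholding the positive-class probability at 0.5; the helper name and the example arrays are hypothetical.

```python
import numpy as np


def find_mistakes(y_true, yproba1, threshold=0.5):
    """Return (false_positive_indices, false_negative_indices) for a binary classifier."""
    y_true = np.asarray(y_true)
    y_hat = (np.asarray(yproba1) >= threshold).astype(int)
    false_pos = np.flatnonzero((y_hat == 1) & (y_true == 0))
    false_neg = np.flatnonzero((y_hat == 0) & (y_true == 1))
    return false_pos, false_neg


# Made-up labels and probabilities for illustration only.
fp_ids, fn_ids = find_mistakes([0, 0, 1, 1, 0], [0.9, 0.2, 0.3, 0.8, 0.6])
```

The first 9 entries of each index array can then be used to select rows of the validation features and reshape each row to 28 x 28 for display.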

1E : Interpretation of Learned Weights

For the selected model from 1C, what has it really learned? One way to understand this model is to visualize the learned weight coefficient that will be applied to each pixel in a 28 x 28 image.

Figure 1E :

Reshape the weight coefficients into a (28 × 28) matrix, corresponding to the pixels of the original images, and plot the result using imshow(), with colormap RdYlBu, vmin=-0.5, and vmax=0.5. Place this plot into your PDF as a properly captioned figure.

Short Answer 1E

- Which pixels have negative weights, so that high-intensity values there correspond to the negative class ('8')?

- Which pixels have positive weights, so that high-intensity values there correspond to the positive class ('9')?

- Why do you think this is the case?
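For a binary problem, a fitted LogisticRegression stores its weights in `coef_` with shape (1, 784) here. The sketch below uses a hand-made stand-in array instead of a fitted model, and assumes the images were flattened in row-major order (NumPy's default), so that feature index `r * 28 + c` maps back to row `r`, column `c`.

```python
import numpy as np

# Stand-in for model.coef_ from a fitted classifier; values are made up.
coef = np.zeros((1, 784))
coef[0, 5 * 28 + 3] = 0.4     # pixel at row 5, column 3
coef[0, 20 * 28 + 10] = -0.4  # pixel at row 20, column 10

# Reshape to image layout; this is the array you would pass to imshow().
weight_image = coef.reshape(28, 28)

pos_rows, pos_cols = np.nonzero(weight_image > 0)
neg_rows, neg_cols = np.nonzero(weight_image < 0)
```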

Problem 2: Sneaker vs Sandal Image Classification

Example 28x28 pixel images of "sneakers" and "sandals". Each image is titled with its associated binary label.

In this open-ended problem, you'll take on another image classification problem: sneakers vs. sandals.

Each input image has the same \(x\) feature representation as the MNIST digits problem above (each example is a 28x28 image, which is reshaped into a 784-dimensional vector). However, now we have images of "sneakers" and "sandals". These are from the larger Fashion MNIST dataset, made public originally by Zalando Research.

Get the data here:

https://github.com/tufts-ml-courses/comp135-20f-assignments/tree/master/projectA/data_sneaker_vs_sandal

Now, we'd like you to build a binary classifier that works on this new task. Can you achieve an error rate that reaches the top of the leaderboard? Can you understand what it takes for an image classifier to be successful on this task?

You'll be evaluated much more on your PROCESS than on your results. A well-designed experiment and careful evaluation will earn more points than getting to a perfect error rate without an ability to justify what you've done.

Your challenge is open-ended: you're not restricted to using only the 784 pixel values as features \(x_n\) for image \(n\).

Instead, you should spend most of your time engineering your own feature transform \(\phi(x_n)\) . You are free to use ANY feature transform you can think of to turn the image into a feature vector.

Ideas for better feature transformations

Be creative! If you're stuck, here are a few ideas to consider:

- Does the total number of pixels that are "on" help distinguish sandals from sneakers?

- How can you identify when multiple pixels are on (bright white) simultaneously?

- How can you capture spatial trends (e.g. across rows or columns) that are relevant to the sneaker vs. sandal problem?

- Can you measure the "holes" (blocks of dark pixels) that are more common within sandals?
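As one possible starting point for the first and third ideas, the sketch below appends a count of "on" pixels plus per-row and per-column mean intensities to the raw pixels. The threshold of 0.5 for "on", the row-major image layout, and the function name are assumptions; whether these features actually help is something you would need to test.

```python
import numpy as np


def extra_features(x_NF, on_threshold=0.5):
    """Append on-pixel count, 28 row means, and 28 column means to each 784-pixel row."""
    x_NF = np.asarray(x_NF, dtype=float)
    images = x_NF.reshape(-1, 28, 28)
    n_on = (images > on_threshold).sum(axis=(1, 2))[:, np.newaxis]
    row_means = images.mean(axis=2)  # one value per image row
    col_means = images.mean(axis=1)  # one value per image column
    return np.hstack([x_NF, n_on, row_means, col_means])


# Two made-up images: one all black, one all white.
x_demo = np.zeros((2, 784))
x_demo[1] = 1.0
feats = extra_features(x_demo)
```

The result has 784 + 1 + 28 + 28 = 841 columns per image.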

Other ideas for improving your performance

You'll need to decide how to do hyperparameter selection. Will you use a fixed validation set? Something else? What candidate values will you choose, and why?

You could explore data augmentation (can you augment your existing training set by transforming existing images in helpful ways? for example, if you flip each image horizontally, would that be a useful way to "double" your training set size?)

You could explore better regularization (try L1 vs L2 penalties for LogisticRegression, or look at alternatives)

You could explore better optimization (does the solver you choose for LogisticRegression matter?)
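The horizontal-flip idea mentioned above can be sketched as follows, assuming each 784-vector is a row-major 28 x 28 image. The function name is hypothetical. If you try this, apply it to the training split only, so that validation scores still reflect unmodified images.

```python
import numpy as np


def augment_with_flips(x_NF, y_N):
    """Return the original examples followed by their left-right mirrored copies."""
    x_NF = np.asarray(x_NF)
    y_N = np.asarray(y_N)
    flipped = x_NF.reshape(-1, 28, 28)[:, :, ::-1].reshape(-1, 784)
    return np.vstack([x_NF, flipped]), np.concatenate([y_N, y_N])


# One made-up image with a single bright pixel in the top-left corner.
x_one = np.zeros((1, 784))
x_one[0, 0] = 1.0
x_aug, y_aug = augment_with_flips(x_one, [1])
```

After the call, the mirrored copy has its bright pixel in the top-right corner (flat index 27).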

Required Experiments

Your report should summarize experiments on at least 3 possible classifier pipelines:

- 0) Baseline: raw pixel features, fed into a Logistic Regression classifier

  - You should use sklearn.linear_model.LogisticRegression

  - You should carefully justify all hyperparameters, and select at least one complexity hyperparameter via grid search

- 1) A feature transform of your own design, fed into a Logistic Regression classifier

  - You should write your own transform functions, or use sklearn.preprocessing

- 2) Another feature transform of your own design, fed into a Logistic Regression classifier or some other classifier (e.g. KNeighborsClassifier)

  - If you choose sklearn.neighbors.KNeighborsClassifier, think carefully about the distance function you use

  - You can use any classifier in sklearn, but you must understand it and be able to talk about it professionally in your report

For all classifiers, we strongly recommend using sklearn Pipelines to manage everything. For simple examples of pipelines, see Part 5 of the day04 lab notebook. For examples tailored to the image classification task, see our starter code.
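As a rough illustration of what such a pipeline can look like (this is not the course's starter code), the sketch below wraps a hand-written transform in `FunctionTransformer` and chains it with `LogisticRegression`. The transform `add_on_count`, the step names, and the random demo data are all made up for the example.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import FunctionTransformer


def add_on_count(x_NF):
    """Append the fraction of pixels brighter than 0.5 as one extra feature."""
    frac_on = (x_NF > 0.5).sum(axis=1, keepdims=True) / x_NF.shape[1]
    return np.hstack([x_NF, frac_on])


pipeline = Pipeline([
    ("features", FunctionTransformer(add_on_count)),
    ("classifier", LogisticRegression(C=1.0, solver="lbfgs", max_iter=1000)),
])

# Random stand-in data; labels depend on overall brightness.
rng = np.random.RandomState(0)
x_demo = rng.rand(200, 784)
y_demo = (x_demo.mean(axis=1) > 0.5).astype(int)

pipeline.fit(x_demo, y_demo)
yproba1 = pipeline.predict_proba(x_demo)[:, 1]
```

Because the transform lives inside the pipeline, the same object can be passed to grid search or cross-validation utilities without re-applying the features by hand.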

Data Usage Restrictions

You should not use any additional Fashion MNIST data from other sources, only that provided for you in the starter code.

Code Usage Restrictions

For this project, any code in our standard environment comp135_2020f_env is fair game.

If you really want to use some other library, you can ask instructors on Piazza. You'll need permission to proceed.

What goes in your report PDF for Problem 2

Your entire report for Problem 2 should be somewhere between 2-5 total pages, including all figures and text.

Section 1: Methods for Sneaker-Sandal

First, in your report, provide 1 paragraph describing your experimental design

- How did you divide the provided labeled set to develop models?

- Did you make a separate "train" or "validation" sets? How did you select the sizes of these sets?

- Did you do cross-validation? Why or why not?

Next, in your report, for each of the 3 methods, provide 1 paragraph of concise but complete description of that method. A strong methods paragraph will answer each of these prompts:

- Describe the feature transformation you tried and why you thought it would work

- Describe your model fitting process: what were parameters, and how were they fit? are there concerns about overfitting? concerns about convergence of the optimization?

- Describe your hyperparameter selection process: what were hyperparameters, and how were they selected? What candidate values were considered? What performance metric did you try to optimize?

Section 2: Results for Sneaker-Sandal

In the results section of your report, please include the following, with appropriate discussion in the main text:

One figure showing evidence of hyperparameter selection to avoid overfitting for your baseline model (you should look at these for all models, but you can just use your baseline model here). Usually, this figure will plot training and heldout error vs one hyperparameter (like we did in HW1).

One figure comparing the training-set and heldout-set performance of your 3 models using an ROC curve (one plot for training, one plot for heldout, each plot shows an ROC line for each of the 3 models).

One figure showing analysis of mistakes (several example false positives and false negatives on heldout set) for your preferred single model and one other baseline or alternative.
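For the ROC figure, `sklearn.metrics.roc_curve` returns the false positive and true positive rates that you would plot as one line per model. A minimal sketch with made-up labels and scores:

```python
import numpy as np
from sklearn.metrics import roc_auc_score, roc_curve

# Made-up heldout labels and predicted probabilities for one model.
y_true = np.array([0, 0, 1, 1])
yproba1 = np.array([0.1, 0.4, 0.35, 0.8])

fpr, tpr, thresholds = roc_curve(y_true, yproba1)
auc = roc_auc_score(y_true, yproba1)
```

Plotting `tpr` against `fpr` for each of the 3 models on the same axes gives one panel; repeat with training-set predictions for the other panel.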

Rubric for Evaluating PDF Report

This should match the rubric outline on Gradescope for the PDF report. If for any reason there is a conflict, the official problem weights on Gradescope will be used.

- 25 points for Problem 1 Report

- 60 points for Problem 2 Report

  - 10 points for experimental design paragraph

  - 6 points for description of Method 0

  - 6 points for description of Method 1

  - 6 points for description of Method 2

  - 10 points for figure(s) showing hyperparameter selection, with discussion

  - 12 points for figure(s) showing ROC curves, with discussion

  - 10 points for figure(s) showing analysis of mistakes, with discussion

- 15 points for Problem 2 Leaderboard

  - 10 points for submission with at least 'bare minimum' satisfactory error rate (as good as our Baseline Method 0)

  - 5 points for submission within 0.02 points of the best error rate

CS 163/164, Spring 2019

Programming Assignment - P8

Image Transforms

Due Monday, Apr. 1st at 6:00 pm

Late Tuesday, Apr. 2nd at 8:00 am

Objectives of this Assignment

- Implement a set of methods that allow a GUI object to use your class,

- instantiate and call a supplied class to read and write images,

- declare and use 2D arrays to store images, and

- manipulate the data in 2D arrays to transform images.

Description

Instructions

Specifications

- Work on your own, as always.

- The name of the source code file must be exactly Transforms.java

- Name the file exactly - upper and lower case matters!

- Comments at the top as shown above.

- Assignments should be implemented using Eclipse.

- Assignments should be implemented using Java, version 1.8.

- Make sure your code runs on machines in the CSB 120 lab.

- Read the syllabus for the late policy.

- We will be checking programs for plagiarism, so please don't copy from anyone else.

Grading Criteria

Transforming and Projecting Images into Class-conditional Generative Networks

4 May 2020 · Minyoung Huh, Richard Zhang, Jun-Yan Zhu, Sylvain Paris, Aaron Hertzmann

We present a method for projecting an input image into the space of a class-conditional generative neural network. We propose a method that optimizes for transformation to counteract the model biases in generative neural networks. Specifically, we demonstrate that one can solve for image translation, scale, and global color transformation, during the projection optimization to address the object-center bias and color bias of a Generative Adversarial Network. This projection process poses a difficult optimization problem, and purely gradient-based optimizations fail to find good solutions. We describe a hybrid optimization strategy that finds good projections by estimating transformations and class parameters. We show the effectiveness of our method on real images and further demonstrate how the corresponding projections lead to better editability of these images.

This course provides a comprehensive introduction to computer vision. Major topics include image processing, detection and recognition, geometry-based and physics-based vision, and video analysis. Students will learn basic concepts of computer vision and gain hands-on experience solving real-life vision problems.

- Sign up for the course Piazza.

- Sign up for an account on this webpage. (The signup code is on Canvas.)

- Carefully read through the Course Info .

The lecture notes have been pieced together from many different people and places. Special thanks to colleagues for sharing their slides: Kris Kitani, Bob Collins, Srinivasa Narashiman, Martial Hebert, Alyosha Efros, Ali Faharadi, Deva Ramanan, Yaser Sheikh, and Todd Zickler. Many thanks also to the following people for making their lecture notes and materials available online: Steve Seitz, Richard Selinsky, Larry Zitnick, Noah Snavely, Lana Lazebnik, Kristen Grauman, Yung-Yu Chuang, Tinne Tuytelaars, Fei-Fei Li, Antonio Torralba, Rob Fergus, David Claus, and Dan Jurafsky.

Computer Graphics, Spring 2005

Assignment 1: Image Processing

Due on Sunday, Feb 20 at 11:59 PM

In this assignment you will create a simple image processing program. The operations that you implement will be mostly filters which take an input image, process the image, and produce an output image.

Getting Started

You should use the following skeleton code (1.zip or 1.tar.gz) as a starting point for your assignment. We provide you with several files, but you should mainly change image.cpp.

- main.cpp: Parses the command line arguments, and calls the appropriate image functions.

- image.[cpp/h]: Image processing.

- pixel.[cpp/h]: Pixel processing.

- vector.[cpp/h]: Simple 2D vector class you may find useful.

- bmp.[cpp/h]: Functions to read and write Windows BMP files.

- Makefile: A Makefile suitable for UNIX platforms.

- visual.dsp: Project file suitable for Visual C++ on Windows 2000 platform.

You can find test images at /u/cos426/images (on the OIT accounts, such as arizona.princeton.edu).

After you copy the provided files to your directory, the first thing to do is compile the program. If you are developing on a Windows machine, double click on image.dsw and select "build" from the build menu. If you are developing on a UNIX machine, type make. In either case, an executable called image (or image.exe) will be created.

How the Program Works

The user interface for this assignment was kept as simple as possible, so you can concentrate on the image processing issues. The program runs on the command line. It reads an image from the standard input, processes the image using the filters specified by the command line arguments, and writes the resulting image to the standard output. For example, to increase the brightness of the image in.bmp by 10%, and save the result in the image out.bmp, you would type:

% image -brightness 0.1 < in.bmp > out.bmp

% image -help

% image -contrast 0.8 -scale 0.5 0.5 < in.bmp > out.bmp

% image -contrast 0.8 < in.bmp | image -scale 0.5 0.5 > out.bmp

% image -contrast 0.8 < in.bmp | image -scale 0.5 0.5 | xv -

What You Have to Do

The assignment is worth 20 points. The following is a list of features that you may implement (listed roughly from easiest to hardest). The number in front of each feature is how many points the feature is worth. The features marked "(required)" must be implemented; the other ones are optional. Refer to this web page for more details on the implementation of each filter and example output images.

- (1/2) Random noise: Add noise to an image.

- (1/2) Brighten (required): Individually scale the RGB channels of an image.

- (1/2) Contrast (required): Change the contrast of an image. See Graphica Obscura.

- (1/2) Saturation: Change the saturation of an image. See Graphica Obscura.

- (1/2) Crop: Extract a subimage specified by two corners.

- (1/2) Extract Channel: Leave specified channel intact and set all others to zero.

- (1) Transition (required): Create 90 images of a transition between yourself and a fellow classmate. These images will be taken on the first and second day of lectures and each student will be assigned a pair of images by the TA over e-mail. We recommend implementing a standard cross-fade first and later (i.e. after completing the required components) replacing the fade with the fancier Beier-Neeley morph (see below) if you desire.

- (1) Random dither: Convert an image to a given number of bits per channel, using a random threshold.

- (1) Quantize (required): Change the number of bits per channel of an image, using simple rounding.

- (1) Composite (required): Compose one image with a second image, using a third image as a matte.

- (1) (required) Create a composite image of yourself and a famous person.

- (2) Blur (required): Blur an image by convolving it with a Gaussian low-pass filter.

- (2) Edge detect: Detect edges in an image by convolving it with an edge detection kernel.

- (2) Ordered dither: Convert an image to a given number of bits per channel, using a 4x4 ordered dithering matrix.

- (2) Floyd-Steinberg dither (required): Convert an image to a given number of bits per channel, using dithering with error diffusion.

- (2) Scale (required): Scale an image up or down by a real valued factor.

- (2) Rotate: Rotate an image by a given angle.

- (2) Fun (required): Warp an image using a non-linear mapping of your choice (examples are fisheye, sine, bulge, swirl).

- (3) Morph: Morph two images using the method in Beier & Neeley's "Feature-based Image Metamorphosis." We also provide a stand-alone utility (only runs under Win32) for selecting line correspondences; these are input to the morph. See this page for details.

- (up to 3) Nonphotorealism: Implement any non-trivial painterly filter. For inspiration, take a look at the effects available in programs like xv, PhotoShop, and Image Composer (e.g., impressionist, charcoal, stained glass, etc.). The points awarded for this feature will depend on the creativity and difficulty of the filter. At most one such filter will receive points.

For any feature that involves resampling (i.e., scale, rotate, "fun," and morph), you have to provide three sampling methods: point sampling, bilinear sampling, and Gaussian sampling.

By implementing all the required features, you get 13 points. There are many ways to get more points: implementing the optional features listed above; (1) submitting one or more images for the art contest; (1) submitting a .mpeg or .gif movie animating the results of one or more filters with continuously varying parameters (e.g., use the makemovie command on the SGIs); (2) winning the art contest. For images or movies that you submit, you also have to submit the sequence of commands used to create them, otherwise they will not be considered valid.

It is possible to get more than 20 points. However, after 20 points, each point is divided by 2, and after 22 points, each point is divided by 4. If your raw score is 19, your final score will be 19. If the raw score is 23, you'll get 21.25. For a raw score of 26, you'll get 22.
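The scoring rule can be written out as a small function. This sketch reads the thresholds as applying to the raw score (full credit up to 20, half credit for raw points between 20 and 22, quarter credit beyond 22), which is the reading that reproduces the three worked examples in the handout. The function name is hypothetical.

```python
def final_score(raw):
    """Apply the diminishing-returns rule: full credit to 20, half to 22, quarter after."""
    score = min(raw, 20.0)
    if raw > 20.0:
        score += (min(raw, 22.0) - 20.0) / 2.0
    if raw > 22.0:
        score += (raw - 22.0) / 4.0
    return score
```

For a raw score of 23 this gives 20 + 2/2 + 1/4 = 21.25, matching the handout.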

What to Submit

You should submit one archive (zip or tar file) containing: the complete source code with a Visual C project file; the .bmp images (sources, masks, and final) for the composite feature; the .mpeg movie for the movie feature (optional); the images for the art contest (optional); and a writeup. The writeup should be an HTML document called assignment1.html, which may include other documents or pictures. It should be brief, describing what you have implemented, what works and what doesn't, how you created the composite image, how you created the art contest images, and instructions on how to run the fun filters you have implemented. Make sure the source code compiles on the workstations in Friend 017. If it doesn't, you will have to attend a grading session with a TA, and your grade will suffer. Always remember the late policy and the collaboration policy.

A few hints:

- Do the simplest filters first!

- Look at the example pages here and here.

- There are functions to manipulate pixel components and pixels in image.[cpp/h]. You may find them helpful while writing your filters.

- There are functions to manipulate 2D vectors in vector.[cpp/h]. You may find them helpful while writing the morphing functions.

- Send mail to the cos426 staff.

- Stay tuned for more hints.

The compose image parameters don't make sense. Why?

We are using the PixelOver operator for composing images. Unfortunately, the bmp format doesn't store alpha values, so we need a way of getting alpha values for both the top and bottom image. The way we are doing this is by loading another image and treating its blue channel as the alpha value (see the function SetImageMask in main.cpp). So we need 4 images: top, top_mask, bottom, bottom_mask. top is the current image that's already loaded, so the ComposeImage option takes the remaining 3 filenames as input. In the parameter section of main.cpp these 4 images are put together to create two images (main.cpp, lines 148-170) with the alpha values filled in, which are then passed to the ComposeImage function that you write in image.cpp. The three parameters of ComposeImage are stylistic; you could get away with two, or return an image pointer, or something equivalent.

For the quantization function, can we safely assume that the image channels for input are always 8 bit and not any greater?

Yes.

For the quantization function, should we assume that the number of bits to which we're quantizing is always a multiple of 3?

No. The parameter is the number of bits per channel, not per pixel.

I get error messages and a core dump when the program tries to read in my bmp files. What should I do?

Make sure your files are 24-bit, uncompressed bitmaps. The provided code does not read any other kind of files. If you are using xv: right-click to open the xv controls window, then click on the 24/8 Bit menu-button, then select the 24-bit mode option. Alt-8 toggles between 8-bit and 24-bit mode. Under windows, the error msg: Assertion failed: bmih.biSizeImage == (DWORD) lineLength * (DWORD) bmih.biHeight, file c:\temp\one\1\bmp.cpp, line 252

Free Image Transformation

- Download source - 29.93 KB

Introduction

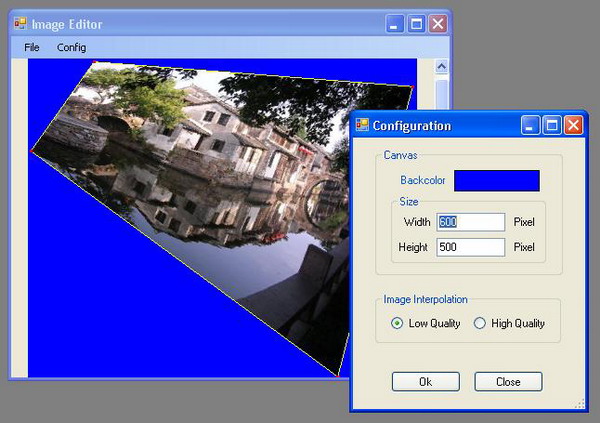

I have written a small but powerful C# application that can scale, rotate, skew and distort an image. The program includes a user control, Canvas, and a class, FreeTransform. Canvas always keeps the picture centered in the window and lets the user zoom the image with the mouse wheel. You can pick up a corner of the picture with the left mouse button and move it freely within Canvas. The image transformation is done by the class FreeTransform: once you set its Bitmap and FourCorners, you can get the transformed Bitmap. If you want a higher-quality picture, set IsBilinearInterpolation to true.

How does it work?

The following diagram demonstrates the key to this method:

The shortest distances of point P to image borders are w1 , w2 , h1 and h2 . The position of point P on the original image is supposed to be at:

Then the colors at...

... on the original image are placed at point P, which gives the result. To calculate the distances, the vector cross product was used:
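The distance calculation can be sketched as follows. The point-to-line distance via the cross product is standard geometry. The mapping in `sourcePosition` is an assumption made for illustration, since the article's own formula is not reproduced here: it takes w1, w2, h1 and h2 to be the distances to the left, right, top and bottom edges and uses them as proportions of the source width and height. All type and function names are hypothetical and are not taken from the FreeTransform class.

```cpp
#include <cassert>
#include <cmath>

struct Point {
    double x, y;
};

// Corners of the distorted image, named by the source corner they came from.
struct Quad {
    Point topLeft, topRight, bottomRight, bottomLeft;
};

// z-component of the cross product (b - a) x (p - a).
double cross(const Point& a, const Point& b, const Point& p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Shortest distance from p to the line through a and b: |cross| / |b - a|.
double distanceToEdge(const Point& a, const Point& b, const Point& p) {
    const double length = std::hypot(b.x - a.x, b.y - a.y);
    assert(length > 0.0);
    return std::fabs(cross(a, b, p)) / length;
}

// ASSUMED mapping, not confirmed by the article: the position on the
// original image is proportional to the distance ratios w1 : w2 and h1 : h2.
Point sourcePosition(const Quad& q, const Point& p,
                     double srcWidth, double srcHeight) {
    const double w1 = distanceToEdge(q.topLeft, q.bottomLeft, p);      // left
    const double w2 = distanceToEdge(q.topRight, q.bottomRight, p);    // right
    const double h1 = distanceToEdge(q.topLeft, q.topRight, p);        // top
    const double h2 = distanceToEdge(q.bottomLeft, q.bottomRight, p);  // bottom
    assert(w1 + w2 > 0.0 && h1 + h2 > 0.0);
    return {srcWidth * w1 / (w1 + w2), srcHeight * h1 / (h1 + h2)};
}
```

For an undistorted rectangle this reduces to plain scaling, which is a quick sanity check on the distance code. For a general quadrilateral, the colors near the returned position would then be sampled, optionally with bilinear interpolation.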

Thanks for trying it!

- 2nd May, 2009: Initial post

- 8th May, 2009: Added Config and Save functions

This article, along with any associated source code and files, is licensed under The Code Project Open License (CPOL)

Image Transform project from University of Illinois through Coursera. MIT license.

Hello guys, you can get the solutions for the Coursera certification course Object-Oriented Data Structures in C++ here. Here is the Week 4 Ima...

This is the final assignment of the "Object-Oriented Data Structures in C++" course (Coursera, University of Illinois) - FediSalhi/Programming-Assignment-Image-Transfrom-Project

Image Transform project from University of Illinois through Coursera - aamarin/coursera_img_transform_project.

This is a four week project with lots of open-ended programming. Get started right away! Team Formation. In this project, you can work with teams of 2 people, or (if you prefer) individually. Individual teams still need to complete all the parts below. We want to incentivize you to work in pairs.

The purpose of the assignment is to write a Java class that can be called by a user interface program to unscramble images in the Portable GreyMap (PGM) format. To do this you need to write an object called Transform that inherits from an interface and implements all methods in that interface. It also instantiates and calls methods in ...

Image Reflection. Image reflection is used to flip the image vertically or horizontally. For reflection along the x-axis, we set the value of Sy to -1 and Sx to 1, and vice versa for the y-axis reflection.

Python3:

    import numpy as np
    import cv2 as cv
    img = cv.imread('girlImage.jpg', 0)
    rows, cols = img.shape
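The same Sx/Sy convention can be sketched in C++ without any library. The `GrayImage` struct and the `reflect` function are hypothetical names for this example. The sketch applies the reflection by index arithmetic instead of a transformation matrix, which also takes care of the translation needed to keep the flipped image inside the frame.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major grayscale image; a hypothetical container for this sketch.
struct GrayImage {
    std::size_t rows, cols;
    std::vector<unsigned char> data;  // pixel (x, y) lives at data[y * cols + x]
};

// sx and sy are each +1 (keep) or -1 (mirror).
// sy == -1 reflects along the x-axis (vertical flip);
// sx == -1 reflects along the y-axis (horizontal flip).
GrayImage reflect(const GrayImage& src, int sx, int sy) {
    assert((sx == 1 || sx == -1) && (sy == 1 || sy == -1));
    assert(src.data.size() == src.rows * src.cols);
    GrayImage dst{src.rows, src.cols,
                  std::vector<unsigned char>(src.data.size())};
    for (std::size_t y = 0; y < src.rows; ++y) {
        for (std::size_t x = 0; x < src.cols; ++x) {
            // Mirrored coordinates are shifted back into range 0..size-1.
            const std::size_t srcX = (sx == 1) ? x : src.cols - 1 - x;
            const std::size_t srcY = (sy == 1) ? y : src.rows - 1 - y;
            dst.data[y * src.cols + x] = src.data[srcY * src.cols + srcX];
        }
    }
    return dst;
}
```

Calling `reflect(img, 1, -1)` swaps the top and bottom rows, and `reflect(img, -1, 1)` swaps the left and right columns.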

The purpose of this assignment is to give you some more experience with ppm images using command-line arguments. It also will give you more practice with creating a somewhat large multi-file program from scratch, using I/O functions, dynamically allocating memory for an array of structs, and using a makefile.

6. Apply Shear Transform to the Image: Shearing skews the image, shifting it in a direction while keeping one axis fixed. Here, we apply a shear transformation with a factor of ...

Project Documentation: MP1 Image Transform.pdf. Source Files Without Solution: MP1 Image Transform.zip. This project is a study of image processing applications in C++. The main goal of the project is to gain practice with image transformation filters, classes and multi-file coding. HSLAPixel.cpp, HSLAPixel.h and ImageTransform.cpp files are ...

2 code implementations in PyTorch. We present a method for projecting an input image into the space of a class-conditional generative neural network. We propose a method that optimizes for transformation to counteract the model biases in generative neural networks. Specifically, we demonstrate that one can solve for image translation, scale, and global color transformation, during the ...

There are 3 modules in this course. This course will walk you through a hands-on project suitable for a portfolio. You will be introduced to third-party APIs and will be shown how to manipulate images using the Python imaging library (pillow), how to apply optical character recognition to images to recognize text (tesseract and pytesseract). By ...

Implement a function output_image = predictlines( canny_image, hough_image, xgradient_image, ygradient_image, alpha, beta, gamma) that combines the Canny Edge and Hough images into a final output image containing larger gray values where lines are predicted with more confidence. There are many ways to do this, but you should at least implement ...

Computer Vision (CMU 16-385) This course provides a comprehensive introduction to computer vision. Major topics include image processing, detection and recognition, geometry-based and physics-based vision and video analysis. Students will learn basic concepts of computer vision as well as hands on experience to solve real-life vision problems.

Getting Started. You should use the following skeleton code (1.zip or 1.tar.gz) as a starting point for your assignment. We provide you with several files, but you should mainly change image.cpp.

- main.cpp: Parses the command line arguments, and calls the appropriate image functions.

- image.[cpp/h]: Image processing.

Image-Transform-Project. This project was the last programming assignment in Coursera's Object-Oriented Data Structures in C++ course. The project is about manipulating images with specific functions such as grayscale, illinify, etc.

The goal of this project is to make image transformation filters. Original input: Filter 1: Illinify. This filter transforms all hue values to either blue or orange, depending on which they're closer to ...
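The hue selection in the Illinify filter can be sketched as below. The hue values 11 (Illini orange) and 216 (Illini blue) are the ones quoted in the blog write-up earlier in this document. Measuring "closer" as circular distance on the 0 to 360 hue wheel is an assumption, and the function names are hypothetical rather than taken from the course's ImageTransform.cpp.

```cpp
#include <cassert>
#include <cmath>

// Shortest distance between two hues on the 0-360 degree color wheel,
// so that hue 350 counts as 21 degrees away from hue 11, not 339.
double hueDistance(double a, double b) {
    const double d = std::fabs(a - b);
    return std::fmin(d, 360.0 - d);
}

// Return whichever target hue is closer to the input hue.
// Ties go to orange; that choice is arbitrary in this sketch.
double illinifyHue(double hue) {
    assert(hue >= 0.0 && hue <= 360.0);
    const double kIlliniOrange = 11.0;
    const double kIlliniBlue = 216.0;
    return hueDistance(hue, kIlliniOrange) <= hueDistance(hue, kIlliniBlue)
               ? kIlliniOrange
               : kIlliniBlue;
}
```

Applying the filter to an image then amounts to replacing the hue of every pixel with `illinifyHue(pixel hue)` while leaving saturation and luminance untouched.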

This repository contains my programming assignments for the specialization. Topics covered by this Specialization include basic object-oriented programming, the analysis of asymptotic algorithmic run times, and the implementation of basic data structures including arrays, hash tables, linked lists, trees, heaps and graphs, as well as algorithms for traversals, rebalancing and shortest paths.