What Is the Credit Assignment Problem?

Last updated: March 18, 2024

- Machine Learning

- Reinforcement Learning


1. Overview

In this tutorial, we’ll discuss a classic problem in reinforcement learning: the credit assignment problem. We’ll present an example that demonstrates the problem.

Finally, we’ll highlight some approaches to the credit assignment problem.

2. Basics of Reinforcement Learning

Reinforcement learning (RL) is a subfield of machine learning that studies how an agent can learn to make decisions on its own in an environment so as to maximize the cumulative reward. It’s inspired by the way animals learn by trial and error.

In RL, an agent takes actions in an environment. In response to each action, the environment gives the agent a reward and moves it to a new state, and the agent repeats this process from there. The reward can be positive or negative, depending on the action the agent took.

The goal of the agent in reinforcement learning is to learn an optimal policy that maximizes the cumulative reward over time. This is typically done iteratively: the agent interacts with the environment, learns from experience, and updates its policy to improve its decisions.
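The interaction loop described above can be sketched in a few lines of Python. This is a minimal illustration built on our own assumptions. `Corridor`, `step`, and `run_episode` are hypothetical names, not a library API. The environment is a five-cell corridor that rewards only the final move, and the policy is fixed rather than learned.

```python
# Hypothetical toy environment: a 1-D corridor of cells 0..length-1.
# The agent starts in cell 0; entering the last cell yields +10 and ends the episode.
class Corridor:
    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        """Apply action -1 (left) or +1 (right); return (next_state, reward, done)."""
        # Walls: the agent cannot move outside the corridor.
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 10.0 if done else 0.0
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Let the policy act until the episode ends; return (state, action, reward) tuples."""
    trajectory = []
    state = env.reset()
    for _ in range(max_steps):
        action = policy(state)
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory


# A fixed (non-learning) policy that always moves right.
episode = run_episode(Corridor(), lambda state: 1)
print(episode)  # [(0, 1, 0.0), (1, 1, 0.0), (2, 1, 0.0), (3, 1, 10.0)]
```

Only the last transition carries a non-zero reward. Nothing in the trajectory itself says how much each earlier move contributed. A learning agent would use trajectories like this one to update its policy.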

3. Credit Assignment Problem

The credit assignment problem (CAP) is a fundamental challenge in reinforcement learning. It arises when an agent receives a reward and must determine which of its previous actions led to that reward.

In reinforcement learning, an agent takes a sequence of actions in an environment to maximize the overall reward. The agent updates its policy based on feedback from the environment. This feedback typically takes the form of a scalar reward indicating the quality of the agent’s actions.

The credit assignment problem is the problem of measuring how much an action taken by an agent influences its future rewards. The aim is to use this measure to steer the agent toward actions that maximize the reward.

However, in many cases, the reward signal from the environment doesn’t provide direct information about which specific actions the agent should continue or avoid. This can make it difficult for the agent to build an effective policy.

Additionally, there are situations where the agent takes a sequence of actions and receives the reward signal only at the end of the sequence. In these cases, the agent must determine which of its previous actions contributed positively to the final reward.

This is difficult because the final reward may be the result of a long sequence of actions, so the impact of any particular action on the overall reward is hard to discern.
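One common convention, not described in this article, spreads a delayed reward over earlier actions with the discounted return G_t = r_{t+1} + γ·r_{t+2} + γ²·r_{t+3} + …, where γ is a discount factor between 0 and 1. The sketch below computes this return for every step. The helper `discounted_returns` and the value of `gamma` are our own illustrative choices. Discounting only weights credit by how far an action is from the reward. It says nothing about whether the action was actually good, so on its own it doesn't solve the credit assignment problem.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t for every step t, where rewards[t] is received after the action at step t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Sweep backwards so each return reuses the one after it: G_t = rewards[t] + gamma * G_{t+1}
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Five actions, with the only reward arriving after the last one.
print(discounted_returns([0, 0, 0, 0, 10], gamma=0.5))  # [0.625, 1.25, 2.5, 5.0, 10.0]
```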

4. Example

Let’s take a practical example to demonstrate the credit assignment problem.

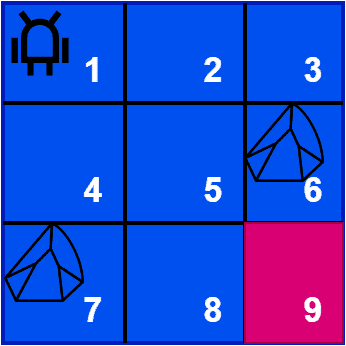

Suppose an agent is playing a game where it must navigate a maze to reach the goal state. We place the agent in the top-left corner of the maze and set the goal state in the bottom-right corner. The agent can move up, down, left, right, or diagonally. However, it can’t move through the states containing stones.

As the agent explores the maze, it receives a reward of +10 for reaching the goal state. If it hits a stone, we penalize the action with a reward of -10. The agent’s goal is to learn from these rewards and build an optimal policy that maximizes the cumulative reward over time.
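The maze itself appears only in the figure. The following sketch therefore uses a made-up 4×4 layout (`S` = start, `G` = goal, `X` = stone) to encode the rules above: eight moves including diagonals, +10 for reaching the goal, and -10 for hitting a stone. Two details are our own assumptions. A move into a stone leaves the agent where it was, and a move off the grid is ignored with zero reward. `LAYOUT`, `ACTIONS`, and `step` are hypothetical names.

```python
# Hypothetical 4x4 maze standing in for the figure: S = start, G = goal, X = stone.
LAYOUT = [
    "S..X",
    ".X..",
    "..X.",
    "...G",
]

# Eight moves: (row change, column change).
ACTIONS = {
    "up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1),
    "up_left": (-1, -1), "up_right": (-1, 1),
    "down_left": (1, -1), "down_right": (1, 1),
}


def step(state, action):
    """Apply one move from state (row, col); return (next_state, reward, done)."""
    row, col = state
    d_row, d_col = ACTIONS[action]
    new_row, new_col = row + d_row, col + d_col
    # Assumption: moving off the grid leaves the agent in place with no reward.
    if not (0 <= new_row < len(LAYOUT) and 0 <= new_col < len(LAYOUT[0])):
        return state, 0.0, False
    cell = LAYOUT[new_row][new_col]
    if cell == "X":
        # Hitting a stone is penalized; the agent stays where it was (assumption).
        return state, -10.0, False
    if cell == "G":
        return (new_row, new_col), 10.0, True
    return (new_row, new_col), 0.0, False


state, total = (0, 0), 0.0
for action in ["down", "down_right", "down_right", "right"]:
    state, reward, done = step(state, action)
    total += reward
print(state, total, done)  # (3, 3) 10.0 True
```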

The credit assignment problem arises when the agent reaches the goal after several steps. The agent receives the +10 reward only when it reaches the goal state, so it’s not clear which of its actions are responsible for the reward. For example, suppose the agent took a long and winding path to reach the goal. In that case, we need to determine which actions along the path should receive credit for the reward.

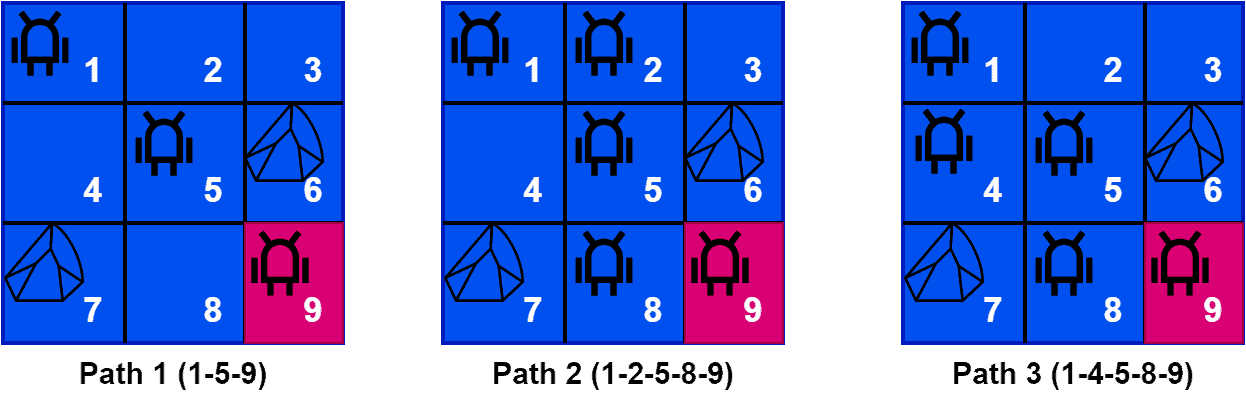

It’s also hard to decide whether to credit only the last action that took the agent to the goal or all the actions that led up to it. Let’s look at some paths that lead the agent to the goal state.

As we can see, the agent can reach the goal state by three different paths, which makes it challenging to measure the influence of each action. The best path to the goal state is path 1.

Hence, the diagonal move from state 1 to state 5 has a greater positive impact than any other action available in state 1. This is the quantity we want to measure, so that the agent can learn an optimal policy such as path 1 in this example.
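We can make "impact" concrete by comparing discounted returns, a standard RL quantity that the article doesn’t use explicitly. Assume a discount factor of 0.9, a deterministic maze, and a single +10 reward at the goal. Under those assumptions, the value of the start state along a path shrinks with every extra step, so the first move of the shortest path earns the most credit. The path lengths below are hypothetical because the actual paths appear only in the figure. `return_from_start` is an illustrative helper.

```python
def return_from_start(path_length, goal_reward=10.0, gamma=0.9):
    """Discounted return at the start of a path whose only reward is the goal reward.

    The goal reward arrives after the last of path_length actions, so it is
    discounted path_length - 1 times.
    """
    return goal_reward * gamma ** (path_length - 1)


# Hypothetical path lengths (number of moves); the real paths are in the figure.
paths = {"path 1": 4, "path 2": 6, "path 3": 9}
for name, length in paths.items():
    print(name, round(return_from_start(length), 3))
```

The shortest path yields the highest return from state 1, so a value-based learner would credit its first move more than the first moves of the longer paths. Any stone penalties collected along a path would lower its return further.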

5. Solutions

The credit assignment problem is a central challenge in reinforcement learning. Here, we’ll present three popular approaches to it: temporal difference (TD) learning, Monte Carlo methods, and eligibility traces.

TD learning is a popular family of RL methods that uses bootstrapping to assign credit to past actions. At each time step, it updates the value estimate of the current state using the TD error. The TD error is the difference between a target and the current estimate, where the target is the reward just received plus the discounted value estimate of the next state. Because it bootstraps from the predicted values of future states, TD learning can propagate credit back to earlier actions even when the reward is delayed.
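Here is a minimal tabular TD(0) sketch. It assumes a hypothetical five-state chain in which the agent always moves right and only the final transition is rewarded (+10). `td0_chain`, `alpha` (learning rate), and `gamma` (discount factor) are illustrative names and values, not taken from the article.

```python
def td0_chain(n_states=5, goal_reward=10.0, alpha=0.5, gamma=0.9, episodes=200):
    """Tabular TD(0) value prediction on a hypothetical chain.

    States are 0..n_states-1, the agent always moves right, and the last state
    is terminal. Only the move into the terminal state is rewarded.
    """
    V = [0.0] * n_states  # the terminal state's value stays 0
    for _ in range(episodes):
        s = 0
        while s != n_states - 1:
            s_next = s + 1
            r = goal_reward if s_next == n_states - 1 else 0.0
            # TD error: bootstrapped target (reward + discounted next value) minus estimate
            td_error = r + gamma * V[s_next] - V[s]
            V[s] += alpha * td_error
            s = s_next
    return V


print(td0_chain(episodes=1))  # [0.0, 0.0, 0.0, 5.0, 0.0]
print([round(v, 3) for v in td0_chain()])
```

After one episode, only the state right before the goal has changed. Over many episodes, the estimates converge toward 10·γ^k for a state k steps before the rewarded transition (7.29, 8.1, 9.0, and 10.0 here). Through bootstrapping, the delayed reward is gradually credited to earlier states.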

Monte Carlo methods are a class of RL algorithms that use complete episodes of experience to assign credit to past actions. They estimate the value of a state by averaging the returns observed across the episodes that visit it. A return is the cumulative reward obtained from that state until the end of the episode. Each return includes every reward that followed the state, so Monte Carlo methods can assign credit to the actions that led up to a reward, even when the reward is delayed.
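A first-visit Monte Carlo estimator can be sketched as follows. The episodes, state names, and discount factor of 0.5 are made up for illustration and chosen so the numbers come out exact. Each episode is a list of `(state, reward)` pairs, where the reward is the one received after leaving that state. `first_visit_mc` is a hypothetical helper name.

```python
from collections import defaultdict


def first_visit_mc(episodes, gamma=0.9):
    """Estimate V(s) as the average return following the first visit to s in each episode.

    Each episode is a list of (state, reward) pairs, where reward is the one
    received after leaving that state.
    """
    returns_sum = defaultdict(float)
    returns_count = defaultdict(int)
    for episode in episodes:
        # Walk backwards so each return reuses the next one: G_t = r_{t+1} + gamma * G_{t+1}
        G = 0.0
        returns = []
        for state, reward in reversed(episode):
            G = reward + gamma * G
            returns.append((state, G))
        returns.reverse()
        seen = set()
        for state, G_t in returns:
            if state in seen:
                continue  # first-visit: only the earliest occurrence in an episode counts
            seen.add(state)
            returns_sum[state] += G_t
            returns_count[state] += 1
    return {s: returns_sum[s] / returns_count[s] for s in returns_sum}


episode_1 = [("A", 0.0), ("B", 0.0), ("C", 10.0)]  # reaches the goal
episode_2 = [("A", 0.0), ("C", -10.0)]              # hits a penalty
print(first_visit_mc([episode_1, episode_2], gamma=0.5))  # {'A': -1.25, 'B': 5.0, 'C': 0.0}
```

State `A` gets credit from both episodes. It receives a positive return when it led to the goal and a negative one when it led to a penalty, and the average balances the two.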

Eligibility traces assign credit to past actions based on how recently they occurred. They keep a trace for each recently visited state-action pair and decay it at every time step. When a new TD error arrives, every pair is updated in proportion to its trace. Pairs that occurred several steps earlier therefore still receive credit, just with a smaller weight than more recent ones.
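The sketch below shows accumulating eligibility traces, a simple form of TD(λ). It tracks state values rather than state-action pairs to keep things short. The setting is a hypothetical five-state chain where the agent always moves right and only the final transition is rewarded (+10). `td_lambda_chain` and the values of `alpha`, `gamma`, and `lam` (λ, the trace-decay rate) are illustrative assumptions.

```python
def td_lambda_chain(n_states=5, goal_reward=10.0, alpha=0.1, gamma=0.9, lam=0.8, episodes=500):
    """Tabular TD(lambda) with accumulating eligibility traces on a hypothetical chain.

    States are 0..n_states-1, the agent always moves right, and the last state
    is terminal. Only the move into the terminal state is rewarded.
    """
    V = [0.0] * n_states
    for _ in range(episodes):
        traces = [0.0] * n_states  # one eligibility trace per state, reset each episode
        s = 0
        while s != n_states - 1:
            s_next = s + 1
            r = goal_reward if s_next == n_states - 1 else 0.0
            td_error = r + gamma * V[s_next] - V[s]
            traces[s] += 1.0  # accumulating trace: the current state becomes eligible
            for i in range(n_states):
                # Every state is updated in proportion to its trace...
                V[i] += alpha * td_error * traces[i]
                # ...and then its trace decays by gamma * lambda.
                traces[i] *= gamma * lam
            s = s_next
    return V


print([round(v, 3) for v in td_lambda_chain(episodes=1)])  # [0.373, 0.518, 0.72, 1.0, 0.0]
```

Unlike one-step TD, a single rewarded transition already updates every earlier state in the episode. The weights decay by a factor of γλ for each step back in time.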

6. Conclusion

In this tutorial, we discussed the credit assignment problem in reinforcement learning with an example. Finally, we presented three popular approaches to the problem.


What is the "credit assignment" problem in Machine Learning and Deep Learning?

I was watching a very interesting video in which Yoshua Bengio brainstorms with his students. In the video, they seem to distinguish between "credit assignment", gradient descent, and back-propagation. From the conversation, it seems that the credit assignment problem is associated with "backprop" rather than with gradient descent. I was trying to understand why. What would help is a very clear definition of "credit assignment" (especially in the context of Deep Learning and Neural Networks).

What is "the credit assignment problem"?

And how is it related to training/learning and optimization in Machine Learning (especially in Deep Learning)?

From the discussion, I would have defined it as:

The function that computes the value(s) used to update the weights. How this value is used is the training algorithm, but the credit assignment is the function that processes the weights (and perhaps something else) to produce the values that will later be used to update the weights.

That is how I currently understand it, but to my surprise I couldn't find a clear definition on the internet. A more precise definition might be extracted from the various sources I found online:

https://www.youtube.com/watch?v=g9V-MHxSCcs

How Auto-Encoders Could Provide Credit Assignment in Deep Networks via Target Propagation https://arxiv.org/pdf/1407.7906.pdf

Yoshua Bengio – Credit assignment: beyond backpropagation ( https://www.youtube.com/watch?v=z1GkvjCP7XA )

Learning to solve the credit assignment problem ( https://arxiv.org/pdf/1906.00889.pdf )

Also, according to a Quora question, it is specific to reinforcement learning (RL). From listening to Yoshua Bengio's talks, that seems to be false. Can someone clarify how it differs from the RL case?

Cross-posted:

https://forums.fast.ai/t/what-is-the-credit-assignment-problem-in-deep-learning/52363

https://www.reddit.com/r/MachineLearning/comments/cp54zi/what_is_the_credit_assignment_problem_in_machine/

https://www.quora.com/What-means-credit-assignment-when-talking-about-learning-in-neural-networks

- machine-learning

- neural-networks


Perhaps this should be rephrased as "attribution", but in many RL models, the signal that comprises the reinforcement (e.g. the error in the reward prediction for TD) does not assign any single action "credit" for that reward. Was it the right context, but wrong decision? Or the wrong context, but correct decision? Which specific action in a temporal sequence was the right one?

Similarly, in NN, where you have hidden layers, the output does not specify what node or pixel or element or layer or operation improved the model, so you don't necessarily know what needs tuning -- for example, the detectors (pooling & reshaping, activation, etc.) or the weight assignment (part of back propagation). This is distinct from many supervised learning methods, especially tree-based methods, where each decision tells you exactly what lift was given to the distribution segregation (in classification, for example). Part of understanding the credit problem is explored in "explainable AI", where we are breaking down all of the outputs to determine how the final decision was made. This is by either logging and reviewing at various stages (tensorboard, loss function tracking, weight visualizations, layer unrolling, etc.), or by comparing/reducing to other methods (ODEs, Bayesian, GLRM, etc.).

If this is the type of answer you're looking for, comment and I'll wrangle up some references.

- Could you please add some references you consider significant within this context? Thank you very much! – Penelope Benenati, commented Jun 14, 2021 at 15:04

I haven't been able to find an explicit definition of CAP. However, we can read some academic articles and get a good sense of what's going on.

Jürgen Schmidhuber provides an indication of what CAP is in "Deep Learning in Neural Networks: An Overview":

Which modifiable components of a learning system are responsible for its success or failure? What changes to them improve performance? This has been called the fundamental credit assignment problem (Minsky, 1963).

This is not a definition, but it is a strong indication that the credit assignment problem pertains to how a machine learning model interpreted the input to give a correct or incorrect prediction.

From this description, it is clear that the credit assignment problem is not unique to reinforcement learning because it is difficult to interpret the decision-making logic that caused any modern machine learning model (e.g. deep neural network, gradient boosted tree, SVM) to reach its conclusion. Why are CNNs for image classification more sensitive to imperceptible noise than to what humans perceive as the semantically obvious content of an image? For a deep FFN, it's difficult to separate the effect of a single feature or interpret a structured decision from a neural network output because all input features participate in all parts of the network. For an RBF SVM, all features are used all at once. For a tree-based model, splits deep in the tree are conditional on splits earlier in the tree; boosted trees also depend on the results of all previous trees. In each case, this is an almost overwhelming amount of context to consider when attempting an interpretation of how the model works.

CAP is related to backprop in a very general sense because if we knew which neurons caused a good/bad decision, then we could leverage that information when making weight updates to the network.

However, we can distinguish CAP from backpropagation and gradient descent because using the gradient is just a specific choice of how to assign credit to neurons (follow the gradient backwards from the loss and update those parameters proportionally). Purely in the abstract, imagine that we had some magical alternative to gradient information and the backpropagation algorithm and we could use that information to adjust neural network parameters instead. This magical method would still have to solve the CAP in some way because we would want to update the model according to whether or not specific neurons or layers made good or bad decisions, and this method need not depend on the gradient (because it's magical -- I have no idea how it would work or what it would do).
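To make the distinction concrete, here is a minimal sketch of two ways to assign credit to the weights of a tiny hypothetical network. The network has two tanh hidden units, a squared-error loss, and arbitrary weights; none of this comes from the sources above. `backprop` follows the gradient backwards from the loss via the chain rule. `perturbation_grad` does not use backpropagation at all: it measures each weight's influence by nudging it and observing the change in loss. Both answer the same credit assignment question. The perturbation approach needs two extra forward passes per weight, whereas backprop obtains every weight's credit from one backward pass.

```python
import math


def forward(params, x):
    """Tiny hypothetical network: input x -> two tanh hidden units -> one linear output."""
    w1, w2, v1, v2 = params
    h1, h2 = math.tanh(w1 * x), math.tanh(w2 * x)
    return h1, h2, v1 * h1 + v2 * h2


def loss(params, x, y):
    """Squared-error loss 0.5 * (output - target)^2."""
    _, _, out = forward(params, x)
    return 0.5 * (out - y) ** 2


def backprop(params, x, y):
    """Credit each weight with dLoss/dweight, computed by the chain rule."""
    _, _, v1, v2 = params
    h1, h2, out = forward(params, x)
    d_out = out - y                      # dLoss/d(out)
    d_v1, d_v2 = d_out * h1, d_out * h2  # blame for the output weights
    d_h1, d_h2 = d_out * v1, d_out * v2  # error passed back to each hidden unit
    d_w1 = d_h1 * (1 - h1 ** 2) * x      # tanh'(a) = 1 - tanh(a)^2
    d_w2 = d_h2 * (1 - h2 ** 2) * x
    return [d_w1, d_w2, d_v1, d_v2]


def perturbation_grad(params, x, y, eps=1e-6):
    """Estimate each weight's influence by nudging it and re-running the network."""
    grads = []
    for i in range(len(params)):
        plus, minus = list(params), list(params)
        plus[i] += eps
        minus[i] -= eps
        grads.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grads


params, x, y = [0.5, -0.3, 0.8, 0.2], 1.5, 1.0
print(backprop(params, x, y))
print(perturbation_grad(params, x, y))  # should closely match the line above
```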

Additionally, CAP is especially important to reinforcement learning because a good reinforcement learning method would have a strong understanding of how each action influences the outcome. For a game like chess, you only receive the win/loss signal at the end of the game. This implies that you need to understand how each move contributed to the outcome, both in a positive sense ("I won because I took a key piece on the fifth turn") and a negative sense ("I won because I spotted a trap and didn't lose a rook on the sixth turn"). Reasoning about the long chain of decisions that cause an outcome in a reinforcement learning setting is what Minsky 1963 is talking about. CAP is about understanding how each choice contributed to the outcome. That's hard to understand in chess because on each turn you can choose among a large number of moves, each of which can contribute to winning or losing.

I no longer have access to a university library, but Schmidhuber's Minsky reference is a collected volume (Minsky, M. (1963). Steps toward artificial intelligence. In Feigenbaum, E. and Feldman, J., editors, Computers and Thought, pages 406–450. McGraw-Hill, New York.). It appears to reproduce an essay Minsky published earlier (1960) elsewhere (Proceedings of the IRE), under the same title. This essay includes a summary of "Learning Systems". From the context, he is clearly writing about what we now call reinforcement learning, and he illustrates the problem with an example of a reinforcement learning problem from that era.

An important example of comparative failure in this credit-assignment matter is provided by the program of Friedberg [53], [54] to solve program-writing problems. The problem here is to write programs for a (simulated) very simple digital computer. A simple problem is assigned, e.g., "compute the AND of two bits in storage and put the result in an assigned location." A generating device produces a random (64-instruction) program. The program is run and its success or failure is noted. The success information is used to reinforce individual instructions (in fixed locations) so that each success tends to increase the chance that the instructions of successful programs will appear in later trials. (We lack space for details of how this is done.) Thus the program tries to find "good" instructions, more or less independently, for each location in program memory. The machine did learn to solve some extremely simple problems. But it took of the order of 1000 times longer than pure chance would expect. In part I of [54], this failure is discussed and attributed in part to what we called (Section I-C) the "Mesa phenomenon." In changing just one instruction at a time, the machine had not taken large enough steps in its search through program space. The second paper goes on to discuss a sequence of modifications in the program generator and its reinforcement operators. With these, and with some "priming" (starting the machine off on the right track with some useful instructions), the system came to be only a little worse than chance. The authors of [54] conclude that with these improvements "the generally superior performance of those machines with a success-number reinforcement mechanism over those without does serve to indicate that such a mechanism can provide a basis for constructing a learning machine." I disagree with this conclusion.
It seems to me that each of the "improvements" can be interpreted as serving only to increase the step size of the search, that is, the randomness of the mechanism; this helps to avoid the "mesa" phenomenon and thus approach chance behaviour. But it certainly does not show that the "learning mechanism" is working--one would want at least to see some better-than-chance results before arguing this point. The trouble, it seems, is with credit-assignment. The credit for a working program can only be assigned to functional groups of instructions, e.g., subroutines, and as these operate in hierarchies, we should not expect individual instruction reinforcement to work well. (See the introduction to [53] for a thoughtful discussion of the plausibility of the scheme.) It seems surprising that it was not recognized in [54] that the doubts raised earlier were probably justified. In the last section of [54], we see some real success obtained by breaking the problem into parts and solving them sequentially. This successful demonstration using division into subproblems does not use any reinforcement mechanism at all. Some experiments of similar nature are reported in [94].

(Minsky does not explicitly define CAP either.)

An excerpt from Box 1 in the article "A deep learning framework for neuroscience" by Blake A. Richards et al. (Yoshua Bengio is among the authors):

The concept of credit assignment refers to the problem of determining how much ‘credit’ or ‘blame’ a given neuron or synapse should get for a given outcome. More specifically, it is a way of determining how each parameter in the system (for example, each synaptic weight) should change to ensure that $\Delta F \ge 0$. In its simplest form, the credit assignment problem refers to the difficulty of assigning credit in complex networks. Updating weights using the gradient of the objective function, $\nabla_W F(W)$, has proven to be an excellent means of solving the credit assignment problem in ANNs. A question that systems neuroscience faces is whether the brain also approximates something like gradient-based methods.

Here, $F$ is the objective function, $W$ are the synaptic weights, and $\Delta F = F(W+\Delta W)-F(W)$.
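For a small step along the gradient, the box's condition $\Delta F \ge 0$ follows from a first-order Taylor expansion. Assume an update $\Delta W = \eta \nabla_W F(W)$ with step size $\eta > 0$; this is gradient ascent, because $F$ is an objective to be increased. The symbol $\eta$ is our own notation, not the article's:

$$\Delta F = F\big(W + \eta\nabla_W F(W)\big) - F(W) \approx \eta\,\nabla_W F(W)^\top \nabla_W F(W) = \eta\,\lVert \nabla_W F(W) \rVert^2 \ge 0$$

This holds only to first order. With a large $\eta$, the neglected higher-order terms can make $\Delta F$ negative, so in practice the step size must be small enough for the approximation to be accurate.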



Book contents

- Frontmatter

- Acknowledgments

- 1 Introduction

- 2 The biology of neural networks: a few features for the sake of non-biologists

- 3 The dynamics of neural networks: a stochastic approach

- 4 Hebbian models of associative memory

- 5 Temporal sequences of patterns

- 6 The problem of learning in neural networks

- 7 Learning dynamics in ‘visible’ neural networks

- 8 Solving the problem of credit assignment

- 9 Self-organization

- 10 Neurocomputation

- 11 Neurocomputers

- 12 A critical view of the modeling of neural networks

8 - Solving the problem of credit assignment

Published online by Cambridge University Press: 30 November 2009

The architectures of the neural networks we considered in Chapter 7 are made exclusively of visible units. During the learning stage, the states of all neurons are entirely determined by the set of patterns to be memorized. They are so to speak pinned and the relaxation dynamics plays no role in the evolution of synaptic efficacies. How to deal with more general systems is not a simple problem. Endowing a neural network with hidden units amounts to adding many degrees of freedom to the system, which leaves room for ‘internal representations’ of the outside world. The building of learning algorithms that make general neural networks able to set up efficient internal representations is a challenge which has not yet been fully satisfactorily taken up. Pragmatic approaches have been made, however, mainly using the so-called back-propagation algorithm. We owe the current excitement about neural networks to the surprising successes that have been obtained so far by calling upon that technique: in some cases the neural networks seem to extract the unexpressed rules that are hidden in sets of raw data. But for the moment we really understand neither the reasons for this success nor those for the (generally unpublished) failures.

The back-propagation algorithm

A direct derivation

To solve the credit assignment problem is to devise means of building relevant internal representations; that is to say, to decide which state $I^{\mu,\mathrm{hid}}$ of hidden units is to be associated with a given pattern $I^{\mu,\mathrm{vis}}$ of visible units.


- Solving the problem of credit assignment

- Pierre Peretto

- Book: An Introduction to the Modeling of Neural Networks

- Online publication: 30 November 2009

- Chapter DOI: https://doi.org/10.1017/CBO9780511622793.009


Credit Assignment in Neural Networks through Deep Feedback Control

The success of deep learning sparked interest in whether the brain learns by using similar techniques for assigning credit to each synaptic weight for its contribution to the network output. However, the majority of current attempts at biologically-plausible learning methods are either non-local in time, require highly specific connectivity motifs, or have no clear link to any known mathematical optimization method. Here, we introduce Deep Feedback Control (DFC), a new learning method that uses a feedback controller to drive a deep neural network to match a desired output target and whose control signal can be used for credit assignment. The resulting learning rule is fully local in space and time and approximates Gauss-Newton optimization for a wide range of feedback connectivity patterns. To further underline its biological plausibility, we relate DFC to a multi-compartment model of cortical pyramidal neurons with a local voltage-dependent synaptic plasticity rule, consistent with recent theories of dendritic processing. By combining dynamical system theory with mathematical optimization theory, we provide a strong theoretical foundation for DFC that we corroborate with detailed results on toy experiments and standard computer-vision benchmarks.

1 Introduction

The error backpropagation (BP) algorithm [1, 2, 3] is currently the gold standard to perform credit assignment (CA) in deep neural networks. Although deep learning was inspired by biological neural networks, an exact mapping of BP onto biology to explain learning in the brain leads to several inconsistencies with experimental results that are not yet fully addressed [4, 5, 6]. First, BP requires an exact symmetry between the weights of the forward and feedback pathways [5, 6], also called the weight transport problem. Another issue of relevance is that, in biological networks, feedback also changes each neuron’s activation and thus its immediate output [7, 8], which does not occur in BP.

Lillicrap et al. [9] convincingly showed that the weight transport problem can be sidestepped in modest supervised learning problems by using random feedback connections. However, follow-up studies indicated that random feedback paths cannot provide precise CA in more complex problems [10, 11, 12, 13], which can be mitigated by learning feedback weights that align with the forward pathway [14, 15, 16, 17, 18] or approximate its inverse [19, 20, 21, 22]. However, this precise alignment imposes strict constraints on the feedback weights, whereas more flexible constraints could provide the freedom to use feedback also for other purposes besides learning, such as attention and prediction [8].

A complementary line of research proposes models of cortical microcircuits which propagate CA signals through the network using dynamic feedback [23, 24, 25] or multiplexed neural codes [26], thereby directly influencing neural activations with feedback. However, these models introduce highly specific connectivity motifs and tightly coordinated plasticity mechanisms. Whether these constraints can be fulfilled by cortical networks is an interesting experimental question. Another line of work uses adaptive control theory [27] to derive learning rules for non-hierarchical recurrent neural networks (RNNs) based on error feedback, which drives neural activity to track a reference output [28, 29, 30, 31]. These methods have so far only been used to train single-layer RNNs with fixed output and feedback weights, making it unclear whether they can be extended to deep neural networks. Finally, two recent studies [32, 33] use error feedback in a dynamical setting to invert the forward pathway, thereby enabling errors to flow backward. These approaches rely on a learning rule that is non-local in time and it remains unclear whether they approximate any known optimization method. Addressing the latter, two recent studies take a first step by relating learned (non-dynamical) inverses of the forward pathway [21] and iterative inverses restricted to invertible networks [22] to approximate Gauss-Newton optimization.

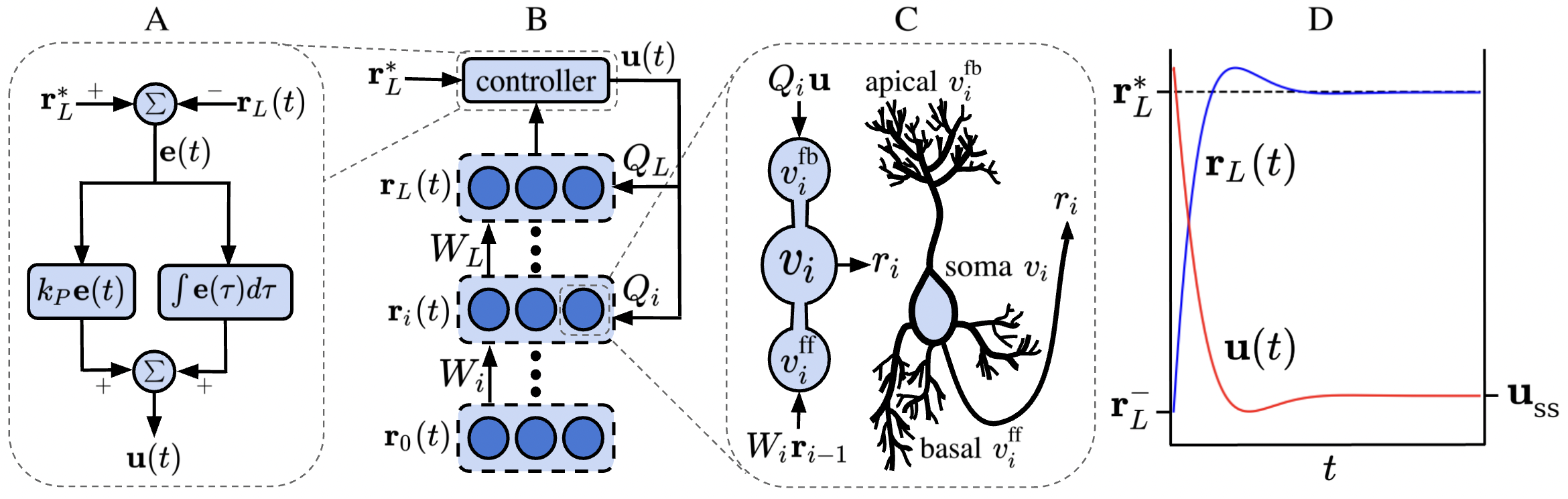

Inspired by the Dynamic Inversion method [32], we introduce Deep Feedback Control (DFC), a new biologically-plausible CA method that addresses the above-mentioned limitations and extends the control theory approach to learning [28, 29, 30, 31] to deep neural networks. DFC uses a feedback controller that drives a deep neural network to match a desired output target. For learning, DFC then simply uses the dynamic change in the neuron activations to update their synaptic weights, resulting in a learning rule fully local in space and time. We show that DFC approximates Gauss-Newton (GN) optimization and therefore provides a fundamentally different approach to CA compared to BP. Furthermore, DFC does not require precise alignment between forward and feedback weights, nor does it rely on highly specific connectivity motifs. Interestingly, the neuron model used by DFC can be closely connected to recent multi-compartment models of cortical pyramidal neurons. Finally, we provide detailed experimental results, corroborating our theoretical contributions and showing that DFC does principled CA on standard computer-vision benchmarks in a way that fundamentally differs from standard BP.

2 The Deep Feedback Control method

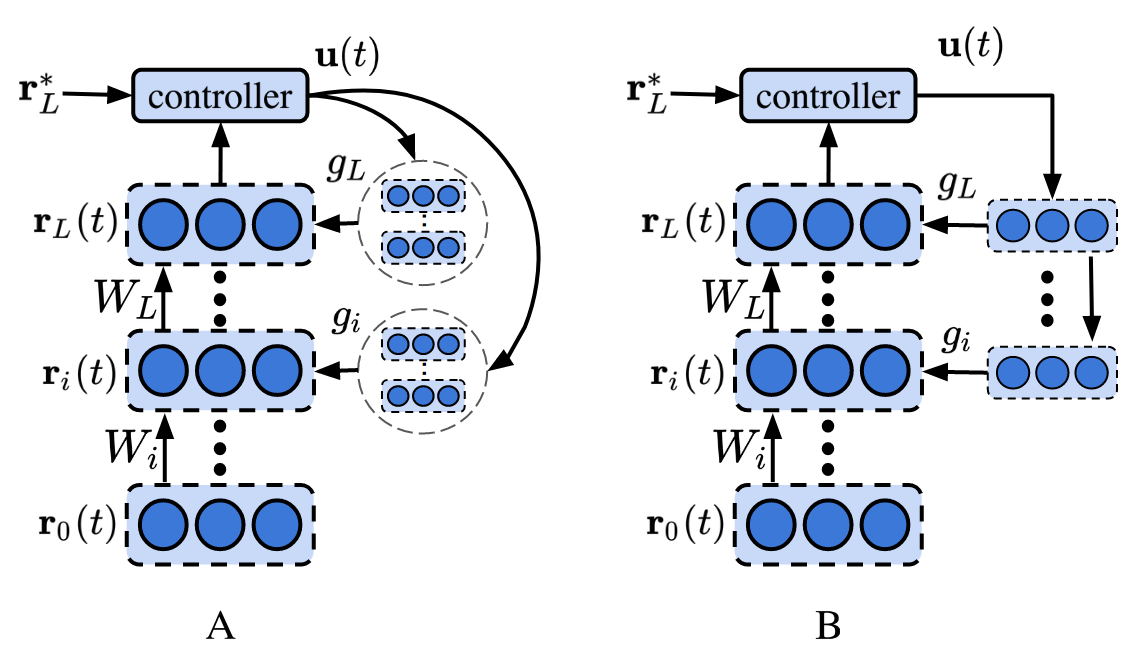

Here, we introduce the core parts of DFC. In contrast to conventional feedforward neural network models, DFC makes use of a dynamical neuron model (Section 2.1). We use a feedback controller to drive the neurons of the network to match a desired output target (Section 2.2), while simultaneously updating the synaptic weights using the change in neuronal activities (Section 2.3). This combination of dynamical neurons and controller leads to a simple but powerful learning method that is linked to GN optimization and offers a flexible range of feedback connectivity (see Section 3).

2.1 Neuron and network dynamics

The first main component of DFC is a dynamical multilayer network, in which every neuron integrates its forward and feedback inputs according to the following dynamics:

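$$\tau_v \frac{d}{dt}\mathbf{v}_i(t) = -\mathbf{v}_i(t) + W_i\,\phi\big(\mathbf{v}_{i-1}(t)\big) + Q_i\,\mathbf{u}(t), \qquad 1 \le i \le L, \tag{1}$$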

with $\mathbf{v}_i$ a vector containing the pre-nonlinearity activations of the neurons in layer $i$, $W_i$ the forward weight matrix, $\phi$ a smooth nonlinearity, $\mathbf{u}$ a feedback input, $Q_i$ the feedback weight matrix, and $\tau_v$ a time constant. See Fig. 1B for a schematic representation of the network. To simplify notation, we define $\mathbf{r}_i = \phi(\mathbf{v}_i)$ as the post-nonlinearity activations of layer $i$. The input $\mathbf{r}_0$ (which takes the place of $\phi(\mathbf{v}_0)$ for $i = 1$) remains fixed throughout the dynamics (1). Note that in the absence of feedback, i.e., $\mathbf{u} = 0$, the equilibrium state of the network dynamics (1) corresponds to a conventional multilayer feedforward network state, which we denote with superscript '-':

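$$\mathbf{v}_i^- = W_i\,\mathbf{r}_{i-1}^-, \qquad \mathbf{r}_i^- = \phi(\mathbf{v}_i^-), \qquad 1 \le i \le L, \qquad \mathbf{r}_0^- = \mathbf{r}_0. \tag{2}$$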
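As a minimal sketch of (1) and (2), the NumPy code below integrates (1) with the forward Euler method for a small tanh network without feedback ($\mathbf{u} = 0$) and checks that it settles at the feedforward state (2). The layer sizes, random weights, step size `dt`, and the helper names `simulate_network` and `feedforward` are illustrative assumptions, not part of the method.

```python
import numpy as np

def simulate_network(Ws, r0, Qs=None, u=None, tau_v=1.0, dt=0.05, steps=2000, phi=np.tanh):
    """Forward-Euler integration of eq. (1), starting from v_i = 0 in every layer."""
    vs = [np.zeros(W.shape[0]) for W in Ws]
    for _ in range(steps):
        r_prev = r0                                   # the input r_0 stays fixed
        new_vs = []
        for i, W in enumerate(Ws):
            feedback = Qs[i] @ u if u is not None else 0.0
            dv = (-vs[i] + W @ r_prev + feedback) / tau_v
            new_vs.append(vs[i] + dt * dv)
            r_prev = phi(vs[i])                       # rates of layer i at the current time step
        vs = new_vs
    return vs

def feedforward(Ws, r0, phi=np.tanh):
    """Eq. (2): v_i^- = W_i r_{i-1}^-, r_i^- = phi(v_i^-)."""
    vs, r = [], r0
    for W in Ws:
        vs.append(W @ r)
        r = phi(vs[-1])
    return vs

rng = np.random.default_rng(0)
sizes = [4, 5, 5, 3]                                  # input, two hidden layers, output
Ws = [rng.normal(scale=0.5, size=(m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
r0 = rng.normal(size=sizes[0])

v_dynamic = simulate_network(Ws, r0)                  # u = 0: no feedback
v_feedforward = feedforward(Ws, r0)
```

Passing nonzero `Qs` and `u` to the same function integrates the controlled dynamics instead, whose equilibrium is shifted by the feedback input.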

2.2 Feedback controller

The second core component of DFC is a feedback controller, which is only active during learning. Instead of a single backward pass for providing feedback, DFC uses a feedback controller to continuously drive the network to an output target $\mathbf{r}^*_L$ (see Fig. 1D). Following the Target Propagation framework [20, 21, 22], we define $\mathbf{r}^*_L$ as the feedforward output nudged towards lower loss:

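$$\mathbf{r}^*_L \triangleq \mathbf{r}^-_L - \lambda \left.\frac{\partial \mathcal{L}}{\partial \mathbf{r}_L}\right|_{\mathbf{r}_L = \mathbf{r}^-_L}, \tag{3}$$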

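with $\lambda$ a stepsize and $\mathcal{L}$ the loss. For an $L^2$ loss $\mathcal{L} = \|\mathbf{y} - \mathbf{r}_L\|_2^2$ with label $\mathbf{y}$, this gives $\mathbf{r}_L^* = (1-2\lambda)\mathbf{r}_L^- + 2\lambda\mathbf{y}$.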
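The output target (3) only needs the feedforward output and the loss gradient. A minimal sketch, assuming the $L^2$ loss $\|\mathbf{y} - \mathbf{r}_L\|_2^2$ and made-up vectors (the function names are hypothetical), reproduces the closed form for the $L^2$ case and shows that $\lambda = 0.5$ clamps the target to the label:

```python
import numpy as np

def output_target(r_ff, y, lam, grad_loss):
    """Eq. (3): nudge the feedforward output r_L^- a step lam down the loss gradient."""
    return r_ff - lam * grad_loss(r_ff, y)

def l2_grad(r, y):
    """Gradient of the L2 loss ||y - r||_2^2 with respect to r."""
    return -2.0 * (y - r)

r_ff = np.array([0.2, -0.5, 0.9])      # hypothetical feedforward output r_L^-
y = np.array([1.0, 0.0, 0.0])          # hypothetical label
lam = 0.1

r_star = output_target(r_ff, y, lam, l2_grad)
closed_form = (1 - 2 * lam) * r_ff + 2 * lam * y
```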

The feedback controller produces a feedback signal $\mathbf{u}(t)$ to drive the network output $\mathbf{r}_L(t)$ towards its target $\mathbf{r}_L^*$, using the control error $\mathbf{e}(t) \triangleq \mathbf{r}^*_L - \mathbf{r}_L(t)$. A standard approach to designing a feedback controller is the Proportional-Integral-Derivative (PID) framework [34]. While DFC is compatible with various controller types, such as a full PID controller or a pure proportional controller (see Appendix A.8), we use a PI controller for a combination of simplicity and good performance, resulting in the following controller dynamics (see also Fig. 1A):

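$$\mathbf{u}(t) = \mathbf{u}^{\text{int}}(t) + K_P\,\mathbf{e}(t), \qquad \tau_u \frac{d}{dt}\mathbf{u}^{\text{int}}(t) = K_I\,\mathbf{e}(t) - \alpha\,\mathbf{u}^{\text{int}}(t), \tag{4}$$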

where a leakage term with strength $\alpha$ is added to constrain the magnitude of $\mathbf{u}^{\text{int}}$. For mathematical simplicity, we take the control matrices to be $K_I = I$ and $K_P = k_p I$, with $k_p \geq 0$ the proportional control constant. This PI controller adds a leaky integration of the error, $\mathbf{u}^{\text{int}}$, to a scaled version of the error, $k_p\mathbf{e}$, which could be implemented by a dedicated neural microcircuit (for a discussion, see App. I). Drawing inspiration from the Target Propagation framework [19, 20, 21, 22] and the Dynamic Inversion framework [32], one can think of the controller and network dynamics as performing a dynamic inversion of the output target $\mathbf{r}_L^*$ towards the hidden layers, as the controller dynamically changes the activation of the hidden layers until the output target is reached.
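To make the PI controller (4) concrete, here is a sketch under simplifying assumptions: the network is replaced by its linearization with instantaneous dynamics ($\tau_v \to 0$), so that $\mathbf{r}_L = \mathbf{r}_L^- + JQ\mathbf{u}$ and $\mathbf{e} = \boldsymbol{\delta}_L - JQ\mathbf{u}$ with $\boldsymbol{\delta}_L = \mathbf{r}_L^* - \mathbf{r}_L^-$; $J$ is a random stand-in matrix and $Q = J^T$ is a hypothetical choice of feedback weights. After convergence, the control error settles at $\mathbf{e} = \tilde{\alpha}\mathbf{u}$ with $\tilde{\alpha} = \alpha/(1 + \alpha k_p)$, and $\mathbf{u}$ equals $(JQ + \tilde{\alpha}I)^{-1}\boldsymbol{\delta}_L$, the steady state stated in Lemma 1 (Section 3.1).

```python
import numpy as np

rng = np.random.default_rng(0)
n_out, n_v = 3, 8
J = rng.normal(size=(n_out, n_v))              # stand-in for the network Jacobian J
Q = J.T                                        # hypothetical feedback weights
delta_L = rng.normal(scale=0.1, size=n_out)    # output error r_L^* - r_L^-

alpha, k_p, tau_u, dt = 0.1, 0.5, 1.0, 0.01
JQ = J @ Q
u_int = np.zeros(n_out)
for _ in range(20000):
    # Linearized network with tau_v -> 0: e = delta_L - JQ u, with u = u_int + k_p e.
    # Solving for e gives (I + k_p JQ) e = delta_L - JQ u_int.
    e = np.linalg.solve(np.eye(n_out) + k_p * JQ, delta_L - JQ @ u_int)
    u = u_int + k_p * e                                   # eq. (4): integral + proportional part
    u_int = u_int + dt / tau_u * (e - alpha * u_int)      # eq. (4): leaky integrator

alpha_tilde = alpha / (1 + alpha * k_p)
u_lemma1 = np.linalg.solve(JQ + alpha_tilde * np.eye(n_out), delta_L)
```

The leak $\alpha$ keeps a small residual error at steady state, which is where the damping constant $\tilde{\alpha}$ comes from.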

2.3 Forward weight updates

The update rule for the feedforward weights has the form:

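$$\tau_W \frac{d}{dt}W_i(t) = \Big(\phi\big(\mathbf{v}_i(t)\big) - \phi\big(W_i\,\mathbf{r}_{i-1}(t)\big)\Big)\,\mathbf{r}_{i-1}(t)^T. \tag{5}$$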

This learning rule simply compares the neuron's controlled activation to its current feedforward input and is thus local in space and time. It is most naturally interpreted by dividing the neuron into the central compartment $\mathbf{v}_i$ from (1) and a feedforward compartment $\mathbf{v}_i^{\text{ff}} \triangleq W_i\mathbf{r}_{i-1}$ that integrates the feedforward input. In this view, the forward weight dynamics (5) represent a delta rule using the difference between the actual firing rate of the neuron, $\phi(\mathbf{v}_i)$, and its estimated firing rate based on the feedforward inputs, $\phi(\mathbf{v}_i^{\text{ff}})$. Note that we assume $\tau_W$ to be a large time constant, such that the network (1) and controller dynamics (4) are not influenced by the weight dynamics, i.e., the weights are considered fixed on the timescale of the controller and network dynamics.
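Because (5) only uses quantities available at the neuron and its synapses, it fits in a few lines. The sketch below (function name and inputs are illustrative assumptions) evaluates its right-hand side, i.e. $\tau_W \frac{d}{dt}W_i$, for a layer at steady state, where (1) gives $\mathbf{v}_i = \mathbf{v}_i^{\text{ff}} + Q_i\mathbf{u}$: without feedback the update vanishes, and a neuron that receives no feedback keeps its weights.

```python
import numpy as np

def forward_weight_drive(W_i, v_i, r_prev, phi=np.tanh):
    """Right-hand side of eq. (5), i.e. tau_W * dW_i/dt, from layer-local signals only.

    v_i    : central (somatic) compartment, driven by forward input and feedback
    r_prev : presynaptic rates r_{i-1}
    """
    v_ff = W_i @ r_prev                                  # feedforward compartment v_i^ff
    return np.outer(phi(v_i) - phi(v_ff), r_prev)        # postsynaptic delta times presynaptic rate

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 3))
r_prev = rng.normal(size=3)
feedback = np.array([0.1, 0.0, -0.2, 0.05])              # hypothetical feedback input Q_i u

dW_no_feedback = forward_weight_drive(W, W @ r_prev, r_prev)
dW_feedback = forward_weight_drive(W, W @ r_prev + feedback, r_prev)   # v_i = v_i^ff + Q_i u
```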

In Section 5, we show how the feedback weights $Q_i$ can also be learned locally in time and space to support the stability of the network dynamics and the learning of $W_i$. This feedback learning rule needs a feedback compartment $\mathbf{v}_i^{\text{fb}} \triangleq Q_i\mathbf{u}$, leading to the three-compartment neuron schematized in Fig. 1C, inspired by recent multi-compartment models of the pyramidal neuron (see Discussion). Now that we have introduced the DFC model, we will show that (i) the weight updates (5) can properly optimize a loss function (Section 3), (ii) the resulting dynamical system is stable under certain conditions (Section 4), and (iii) learning the feedback weights facilitates (i) and (ii) (Section 5).

3 Learning theory

To understand how DFC optimizes the feedforward mapping (2) on a given loss function, we link the weight updates (5) to mathematical optimization theory. We start by showing that DFC dynamically inverts the output error to the hidden layers (Section 3.1), which we link to GN optimization under flexible constraints on the feedback weights $Q_i$ and on the layer activations (Section 3.2). In Section 3.3, we relax some of these constraints and show that DFC still does principled optimization by using minimum norm (MN) updates for $W_i$. Throughout this section, we assume stable dynamics, which we investigate in more detail in Section 4. All theoretical results of this section are tailored towards a PI controller, and they can be easily extended to pure proportional or integral control (see App. A.8).

3.1 DFC dynamically inverts the output error

Lemma 1.

Assuming stable dynamics, a small target stepsize $\lambda$, and fixed $W_i$ and $Q_i$, the steady-state solutions of the dynamical systems (1) and (4) can be approximated by:

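$$\mathbf{u}_{\mathrm{ss}} = \big(JQ + \tilde{\alpha}I\big)^{-1}\boldsymbol{\delta}_L + \mathcal{O}(\lambda^2), \qquad \mathbf{v}_{\mathrm{ss}} = \mathbf{v}^{\text{ff}}_{\mathrm{ss}} + Q\big(JQ + \tilde{\alpha}I\big)^{-1}\boldsymbol{\delta}_L + \mathcal{O}(\lambda^2), \tag{6}$$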

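with $\mathbf{v}$ and $Q$ the concatenations of all $\mathbf{v}_i$ and $Q_i$, $J \triangleq \left[\frac{\partial \mathbf{r}^-_L}{\partial \mathbf{v}_1}, \dots, \frac{\partial \mathbf{r}^-_L}{\partial \mathbf{v}_L}\right]\Big|_{\mathbf{v}=\mathbf{v}^-}$ the network Jacobian evaluated at the feedforward state, $\boldsymbol{\delta}_L \triangleq \mathbf{r}_L^* - \mathbf{r}_L^-$ the output error, and $\tilde{\alpha} = \alpha/(1 + \alpha k_p)$.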

Intuitively, the controller searches for an activation change $\Delta\mathbf{v}$, with $\mathbf{v}_{\mathrm{ss}} = \mathbf{v}^{\text{ff}}_{\mathrm{ss}} + \Delta\mathbf{v}$, such that the steady-state network output equals its target $\mathbf{r}_L^*$. With linearized network dynamics, this amounts to solving the linear system $J\Delta\mathbf{v} = \boldsymbol{\delta}_L$. As $\Delta\mathbf{v}$ is of much higher dimension than $\boldsymbol{\delta}_L$, this system is underdetermined and has infinitely many solutions. Constraining the solution to the column space of $Q$ leads to the unique solution $\Delta\mathbf{v} = Q(JQ)^{-1}\boldsymbol{\delta}_L$, which is the steady-state solution of Lemma 1 without the small damping constant $\tilde{\alpha}$. Hence, similar to Podlaski and Machens [32], through an interplay between the network and controller dynamics, the controller dynamically inverts the output error $\boldsymbol{\delta}_L$ to produce feedback that exactly drives the network output to its desired target.
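The column-space argument can be checked numerically. In this sketch, $J$ and $Q$ are random stand-ins (assumed to make $JQ$ invertible), not quantities taken from a trained network. $\Delta\mathbf{v} = Q(JQ)^{-1}\boldsymbol{\delta}_L$ solves $J\Delta\mathbf{v} = \boldsymbol{\delta}_L$ and lies in the column space of $Q$, whereas the minimum-norm solution $J^\dagger\boldsymbol{\delta}_L$ is, in general, a different and shorter solution.

```python
import numpy as np

rng = np.random.default_rng(0)
n_out, n_v = 3, 10
J = rng.normal(size=(n_out, n_v))          # stand-in network Jacobian (full row rank)
Q = rng.normal(size=(n_v, n_out))          # arbitrary feedback weights, JQ invertible
delta_L = rng.normal(size=n_out)

dv = Q @ np.linalg.solve(J @ Q, delta_L)   # Delta v = Q (JQ)^{-1} delta_L

# dv solves the underdetermined linear system J dv = delta_L ...
residual = J @ dv - delta_L
# ... and lies in the column space of Q (a least-squares fit through Q is exact).
coeffs, *_ = np.linalg.lstsq(Q, dv, rcond=None)
# The minimum-norm solution J^+ delta_L is another exact solution with the smallest norm.
dv_mn = np.linalg.pinv(J) @ delta_L
```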

3.2 DFC approximates Gauss-Newton optimization

To understand the optimization characteristics of DFC, we show that under flexible conditions on $Q_i$ and the layer activations, DFC approximates GN optimization. We first briefly review GN optimization and introduce two conditions needed for the main theorem.

Gauss-Newton optimization [35] is an approximate second-order optimization method used in nonlinear least-squares regression. The GN update for the model parameters $\boldsymbol{\theta}$ is computed as:

$$\Delta\boldsymbol{\theta} = J_\theta^\dagger\,\mathbf{e}_L, \tag{7}$$

with $J_\theta$ the Jacobian of the model output w.r.t. $\boldsymbol{\theta}$ concatenated for all minibatch samples, $J^\dagger_\theta$ its Moore-Penrose pseudoinverse, and $\mathbf{e}_L$ the output errors.
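Equation (7) maps directly onto a pseudoinverse call. In this sketch, `J_theta` is a random stand-in for a single sample (minibatch size 1) with more parameters than outputs, and `gauss_newton_step` is a hypothetical helper. The GN step fits the output error exactly, and adding any null-space direction gives another exact fit with a larger norm, which is the minimum-norm property used in Section 3.3.

```python
import numpy as np

def gauss_newton_step(J_theta, e_L):
    """Eq. (7): Delta theta = J_theta^+ e_L."""
    return np.linalg.pinv(J_theta) @ e_L

rng = np.random.default_rng(0)
J_theta = rng.normal(size=(2, 6))          # 2 outputs (one sample), 6 parameters
e_L = np.array([0.3, -0.1])                # hypothetical output error
step = gauss_newton_step(J_theta, e_L)

# The last right-singular vector lies in the null space of J_theta (rank 2 < 6).
null_vec = np.linalg.svd(J_theta)[2][-1]
other = step + 0.5 * null_vec              # another exact solution of J_theta d = e_L
```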

Condition 1.

Each layer of the network, except for the output layer, has the same activation norm:

$$\|\mathbf{r}_i\|_2 = \|\mathbf{r}_j\|_2, \qquad \forall\, i, j \in \{0, \dots, L-1\}. \tag{8}$$

Note that this condition considers a statistic $\|\mathbf{r}_i\|_2$ of a whole layer and does not impose specific constraints on individual neural firing rates. It can be interpreted as each layer, except the output layer, having the same 'energy budget' for firing.

Condition 2.

The column space of $Q$ is equal to the row space of $J$.

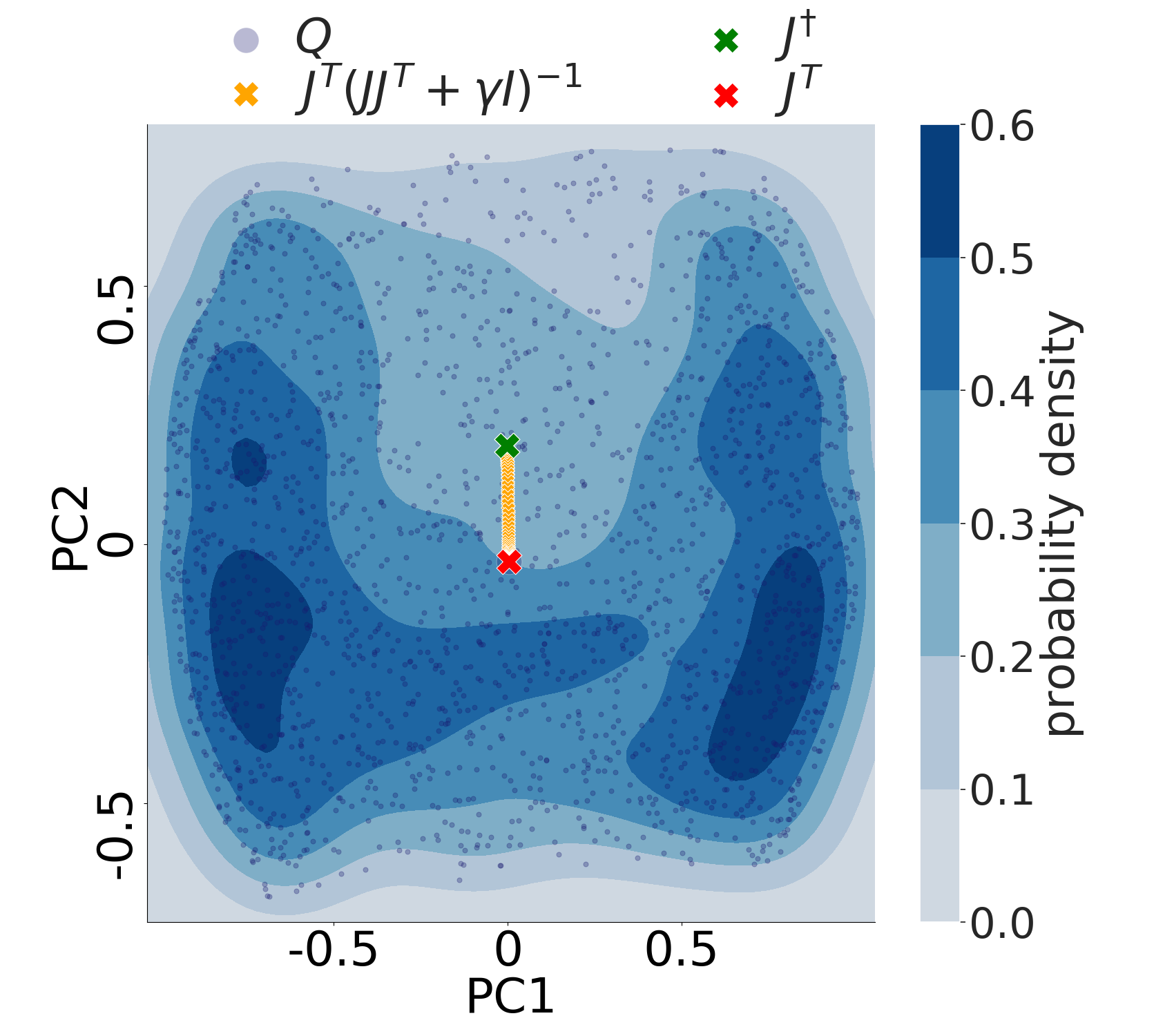

This more abstract condition imposes a flexible constraint on the feedback weights $Q_i$ which generalizes common learning rules with direct feedback connections [16, 21]. For instance, besides $Q = J^T$ (BP; [16]) and $Q = J^\dagger$ [21], many other instances of $Q$ which have not yet been explored in the literature fulfill Condition 2 (see Fig. 2), hence leading to principled optimization (see Theorem 2). With these conditions in place, we are ready to state the main theorem of this section (full proof in App. A).
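Condition 2 can be tested with a rank check: two matrices span the same column space exactly when stacking them side by side does not increase the rank. The sketch below uses a random stand-in $J$ and a few hypothetical choices of $Q$ (including $J^T M$ for an arbitrary invertible $M$). For every $Q$ that passes the check, the dynamic inversion $Q(JQ)^{-1}$ coincides with $J^\dagger$, while a random $Q$ fails both tests.

```python
import numpy as np

def same_column_space(A, B, tol=1e-8):
    """span(A) == span(B) iff rank(A) == rank(B) == rank([A, B])."""
    def rank(M):
        return np.linalg.matrix_rank(M, tol=tol)
    return bool(rank(A) == rank(B) == rank(np.hstack([A, B])))

rng = np.random.default_rng(0)
J = rng.normal(size=(3, 10))                 # stand-in Jacobian, full row rank
M = rng.normal(size=(3, 3))                  # hypothetical invertible mixing matrix
J_pinv = np.linalg.pinv(J)

candidates = {
    "J^T": J.T,
    "pinv(J)": J_pinv,
    "J^T M": J.T @ M,
    "random": rng.normal(size=(10, 3)),
}
results = {}
for name, Q in candidates.items():
    condition_2 = same_column_space(Q, J.T)            # row space of J = column space of J^T
    inversion = Q @ np.linalg.inv(J @ Q)               # dynamic inversion Q (JQ)^{-1}
    results[name] = (condition_2, bool(np.allclose(inversion, J_pinv)))
```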

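Theorem 2.

Assuming Conditions 1 and 2 hold, $J$ has full rank, and an $L^2$ loss is used, then for $\lambda, \alpha \to 0$ the following steady-state (ss) updates for the forward weights,

$$\Delta W_i = \eta\,\big(\mathbf{v}_{i,\mathrm{ss}} - \mathbf{v}^{\text{ff}}_{i,\mathrm{ss}}\big)\,\mathbf{r}_{i-1,\mathrm{ss}}^T, \tag{9}$$

with $\eta$ a stepsize parameter, align with the weight updates for $W_i$ for the feedforward network (2) prescribed by the GN optimization method with a minibatch size of 1.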

In this theorem, we need Condition 2 such that the dynamical inversion $Q(JQ)^{-1}$ (6) equals the pseudoinverse of $J$, and we need Condition 1 to extend this pseudoinverse to the Jacobian of the output w.r.t. the network weights, as in eq. (7). Theorem 2 links the DFC method to GN optimization, thereby showing that it does principled optimization while being fundamentally different from BP. In contrast to recent work that connects target propagation to GN [21, 22], we do not need to approximate the GN curvature matrix by a block-diagonal matrix but use the full curvature instead. Hence, one can use Theorem 2 in Cai et al. [36] to obtain convergence results for this setting of GN with a minibatch size of 1 in highly overparameterized networks. Strikingly, the feedback path of DFC does not need to align with the forward path or its inverse to provide weight updates that are optimally aligned with GN, as long as it satisfies the flexible Condition 2 (see Fig. 2).
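Theorem 2 can be illustrated numerically at the level of steady-state quantities. This sketch does not simulate DFC: it takes random stand-in Jacobian blocks $J_i = \partial\mathbf{r}_L/\partial\mathbf{v}_i$ and presynaptic rates $\mathbf{r}_{i-1}$, assumes Condition 2 and $\lambda, \alpha \to 0$ so that $\Delta\mathbf{v} = J^\dagger\boldsymbol{\delta}_L$, builds the update (9) with $\eta = 1$, and compares it with the GN step (7) on the flattened weights, using $\partial\mathbf{r}_L/\partial\,\mathrm{vec}(W_i) = J_i(I \otimes \mathbf{r}_{i-1}^T)$ for row-major flattening. With equal presynaptic norms (Condition 1) the two updates are parallel; rescaling the layers breaks the alignment.

```python
import numpy as np

def dfc_gn_cosine(Js, rs, delta_L):
    """Cosine between the steady-state DFC update (9) and the GN update (7)."""
    J = np.hstack(Js)                                        # J = [J_1, ..., J_L]
    dv = np.linalg.pinv(J) @ delta_L                         # Delta v = J^+ delta_L (Condition 2)
    splits = np.cumsum([J_i.shape[1] for J_i in Js])[:-1]
    dfc = np.concatenate([np.outer(dv_i, r).ravel()          # Delta W_i = Delta v_i r_{i-1}^T
                          for dv_i, r in zip(np.split(dv, splits), rs)])
    J_theta = np.hstack([J_i @ np.kron(np.eye(J_i.shape[1]), r[None, :])
                         for J_i, r in zip(Js, rs)])         # d r_L / d vec(W_i), row-major vec
    gn = np.linalg.pinv(J_theta) @ delta_L                   # eq. (7)
    return float(dfc @ gn / (np.linalg.norm(dfc) * np.linalg.norm(gn)))

rng = np.random.default_rng(0)
sizes = [4, 5, 5, 2]                                         # widths of r_0, ..., r_L
Js = [rng.normal(size=(sizes[-1], n)) for n in sizes[1:]]   # stand-ins for J_i
rs = [rng.normal(size=n) for n in sizes[:-1]]                # stand-ins for r_{i-1}
delta_L = rng.normal(size=sizes[-1])

rs_equal = [r / np.linalg.norm(r) for r in rs]                      # Condition 1 holds
rs_unequal = [s * r for s, r in zip([1.0, 3.0, 0.5], rs_equal)]     # Condition 1 violated
cos_equal = dfc_gn_cosine(Js, rs_equal, delta_L)
cos_unequal = dfc_gn_cosine(Js, rs_unequal, delta_L)
```

The equal-norm case works because $J_\theta J_\theta^T = \sum_i \|\mathbf{r}_{i-1}\|_2^2 J_i J_i^T$ reduces to a multiple of $JJ^T$ under Condition 1.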

The steady-state updates (9) used in Theorem 2 differ from the actual updates (5) in two subtle ways. First, the plasticity rule (5) applies the nonlinearity $\phi$ to the compartment activations, whereas in Theorem 2 this nonlinearity is not included. There are two reasons for this: (i) the use of $\phi$ in (5) can be linked to specific biophysical mechanisms in the pyramidal cell [37] (see Discussion), and (ii) using $\phi$ ensures that saturated neurons do not update their forward weights, which leads to better performance (see App. A.6). Second, in Theorem 2 the weights are only updated at steady state, whereas in (5) they are continuously updated during the dynamics of the network and controller. Before settling rapidly, the dynamics oscillate around the steady-state value (see Fig. 1D); hence, the accumulated continuous updates (5) will be approximately equal to their steady-state equivalent, since the oscillations approximately cancel each other out and the steady state is quickly reached (see Section 6.1 and App. A.7). Theorem 2 needs an $L^2$ loss function and Conditions 1 and 2 to hold for linking DFC with GN. In the following subsection, we relax these assumptions and show that DFC still does principled optimization.

3.3 DFC uses weighted minimum norm updates

GN optimization with a minibatch size of 1 is equivalent to MN updates [21], i.e., it computes the smallest possible weight update such that the network exactly reaches the current output target after the update. These MN updates can be generalized to weighted MN updates for targets computed with arbitrary loss functions. The following theorem shows the connection between DFC and these weighted MN updates, while removing the need for Condition 1 and an $L^2$ loss (full proof in App. A).

Theorem 3.

| (10) |

with $\mathbf{r}^{-(m+1)}_L$ the network output without feedback after the weight update.

Theorem 3 shows that Condition 2 enables the controller to drive the network towards its target $\mathbf{r}_L^*$ with MN activation changes, $\Delta\mathbf{v} = \mathbf{v} - \mathbf{v}^{\text{ff}}$, which, combined with the steady-state weight update (9), result in weighted MN updates $\Delta W_i$ (see also App. A.4). When the feedback weights do not have the correct column space, the weight updates will not be MN. Nevertheless, the following proposition shows that the weight updates still follow a descent direction given arbitrary feedback weights.

Proposition 4.

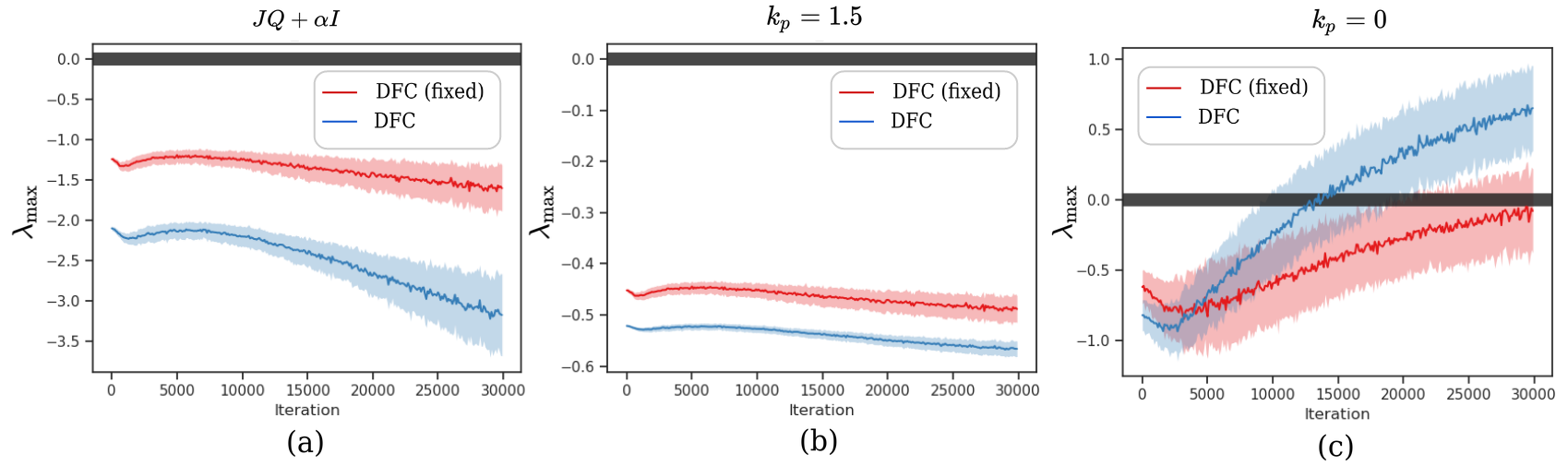

4 Stability of DFC

Until now, we assumed that the network dynamics are stable, which is necessary for DFC, as an unstable network will diverge, making learning impossible. In this section, we investigate the conditions on the feedback weights $Q_i$ necessary for stability. To gain intuition, we linearize the network around its feedforward values, assume a separation of timescales between the controller and the network ($\tau_u \gg \tau_v$), and only consider integral control ($k_p = 0$). This results in the following dynamics (see App. B for the derivation):

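$$\tau_u \frac{d}{dt}\mathbf{u}(t) = -\big(JQ + \alpha I\big)\,\mathbf{u}(t) + \boldsymbol{\delta}_L. \tag{11}$$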

Hence, in this simplified case, the local stability of the network around the equilibrium point depends on the eigenvalues of $JQ$, which is formalized in the following condition and proposition.

Condition 3.

Given the network Jacobian evaluated at the steady state, $J_{\mathrm{ss}} \triangleq \left[\frac{\partial\mathbf{r}^-_L}{\partial\mathbf{v}_1}, \dots, \frac{\partial\mathbf{r}^-_L}{\partial\mathbf{v}_L}\right]\Big|_{\mathbf{v}=\mathbf{v}_{\mathrm{ss}}}$, the real parts of the eigenvalues of $J_{\mathrm{ss}}Q$ are all greater than $-\alpha$.

Proposition 5.

Assuming $\tau_u \gg \tau_v$ and $k_p = 0$, the network and controller dynamics are locally asymptotically stable around their equilibrium iff Condition 3 holds.

This proposition follows directly from Lyapunov's Indirect Method [38]. In the more general case where $\tau_v$ is not negligible and $k_p > 0$, the stability criteria quickly become less interpretable (see App. B). However, experimentally, we see that Condition 3 is a good proxy condition for guaranteeing stability in this general case (see Section 6 and App. B).
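Condition 3 and the simplified dynamics (11) can be checked side by side. In this sketch, $J$ is a random stand-in, $Q = J^T$ makes $JQ$ positive semi-definite (so Condition 3 holds), and $Q = -J^T$ is a deliberately bad, hypothetical choice that violates it. Forward-Euler integration of (11) converges to $(JQ + \alpha I)^{-1}\boldsymbol{\delta}_L$ in the first case and diverges in the second.

```python
import numpy as np

def condition_3_holds(J_ss, Q, alpha):
    """Condition 3: every eigenvalue of J_ss Q has real part greater than -alpha."""
    return bool(np.all(np.linalg.eigvals(J_ss @ Q).real > -alpha))

def simulate_eq11(J, Q, delta_L, alpha, tau_u=1.0, dt=0.01, steps=10000):
    """Forward-Euler integration of eq. (11), starting from u = 0."""
    A = J @ Q + alpha * np.eye(len(delta_L))
    u = np.zeros_like(delta_L)
    for _ in range(steps):
        u = u + dt / tau_u * (-A @ u + delta_L)
    return u

rng = np.random.default_rng(0)
J = rng.normal(size=(3, 10))              # stand-in Jacobian
delta_L = rng.normal(size=3)
alpha = 0.1

Q_good = J.T                              # JQ = J J^T is positive semi-definite
Q_bad = -J.T                              # hypothetical sign-flipped feedback
u_good = simulate_eq11(J, Q_good, delta_L, alpha)
u_bad = simulate_eq11(J, Q_bad, delta_L, alpha, steps=200)
u_expected = np.linalg.solve(J @ Q_good + alpha * np.eye(3), delta_L)
```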

5 Learning the feedback weights

Conditions 2 and 3 emphasize the importance of the feedback weights for enabling efficient learning and for ensuring stability of the network dynamics, respectively. As the forward weights, and hence the network Jacobian $J$, change during training, the set of feedback configurations that satisfy Conditions 2 and 3 also changes. This creates the need to adapt the feedback weights accordingly to ensure efficient learning and network stability. We solve this challenge by learning the feedback weights, such that they can adapt to the changing network during training. We separate forward and feedback weight training in alternating wake-sleep phases [39]. Note that in practice, a fast alternation between the two phases is not required (see Section 6).

Inspired by the Weight Mirror method [14], we learn the feedback weights by inserting independent zero-mean noise $\boldsymbol{\epsilon}$ in the system dynamics:

| (12) |

The noise fluctuations propagated to the output carry information about the network Jacobian $J$. To let $\mathbf{e}$, and hence $\mathbf{u}$, incorporate this noise information, we set the output target $\mathbf{r}^*_L$ to the average network output $\mathbf{r}_L^-$. As the network is continuously perturbed by noise, the controller will try to counteract the noise and regulate the network towards the output target $\mathbf{r}_L^-$. The feedback weights can then be trained with a simple anti-Hebbian plasticity rule with weight decay, which is local in space and time:

| (13) |

Here, $\mathbf{v}^{\text{fb}}_i = Q_i\mathbf{u} + \sigma_{\text{fb}}\boldsymbol{\epsilon}_i^{\text{fb}}$ denotes the feedback compartment, which now also receives noise. The correlation between the noise in $\mathbf{v}^{\text{fb}}_i$ and the noise fluctuations in $\mathbf{u}$ provides the teaching signal for $Q_i$. Theorem 6 shows, under simplifying assumptions, that the feedback learning rule (13) drives $Q_i$ to satisfy Conditions 2 and 3 (see App. C for the full theorem and its proof).

Theorem 6 (Short version).

Assume a separation of timescales $\tau_v \ll \tau_u \ll \tau_Q$, large $\alpha$, $k_p = 0$, $\mathbf{r}_L^* = \mathbf{r}_L^-$, and that Condition 3 holds. Then, for a fixed input sample and $\sigma \rightarrow 0$, the first moment of $Q$ converges approximately to:

$$\mathbb{E}[Q_{\mathrm{ss}}] = J^T\big(JJ^T + \gamma I\big)^{-1}, \tag{16}$$

for some $\gamma > 0$. Furthermore, $\mathbb{E}[Q_{\mathrm{ss}}]$ satisfies Conditions 2 and 3, even if $\alpha = 0$ in the latter.

Theorem 6 shows that, under simplifying assumptions, $Q$ converges towards a damped pseudoinverse of $J$, which satisfies Conditions 2 and 3. Empirically, we see that this also approximately holds in more general settings where $\tau_v$ is not negligible, $k_p > 0$, and $\alpha$ is small (see Section 6 and App. C).

For varying input samples, $Q$ approximately converges to the average of $J^T(JJ^T + \gamma I)^{-1}$ over many samples.
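The fixed point (16) can be checked against Conditions 2 and 3 directly. This sketch does not simulate the noisy learning rule (13); it only evaluates the damped pseudoinverse for a random stand-in $J$ with an assumed $\gamma = 0.1$. It verifies the column space, checks that the eigenvalues of $JQ$ lie in $(0, 1)$ so that Condition 3 holds even for $\alpha = 0$, and shows that the damped pseudoinverse approaches $J^\dagger$ as $\gamma \to 0$.

```python
import numpy as np

def damped_pinv(J, gamma):
    """Eq. (16): J^T (J J^T + gamma I)^{-1}."""
    return J.T @ np.linalg.inv(J @ J.T + gamma * np.eye(J.shape[0]))

def rank(A):
    return np.linalg.matrix_rank(A, tol=1e-8)

rng = np.random.default_rng(0)
J = rng.normal(size=(3, 10))                      # stand-in Jacobian, full row rank
Q_ss = damped_pinv(J, gamma=0.1)

# Condition 2: Q_ss = J^T M with M invertible, so its column space is the row space of J.
condition_2 = bool(rank(Q_ss) == rank(J.T) == rank(np.hstack([Q_ss, J.T])))

# Condition 3 with alpha = 0: the eigenvalues of J Q_ss are s / (s + gamma), where s > 0
# are the eigenvalues of J J^T, so they lie strictly between 0 and 1.
eigenvalues = np.linalg.eigvals(J @ Q_ss)

# For gamma -> 0 the damped pseudoinverse approaches the Moore-Penrose pseudoinverse.
gap = np.linalg.norm(damped_pinv(J, 1e-9) - np.linalg.pinv(J))
```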

6 Experiments

6.1 Empirical verification of the theory

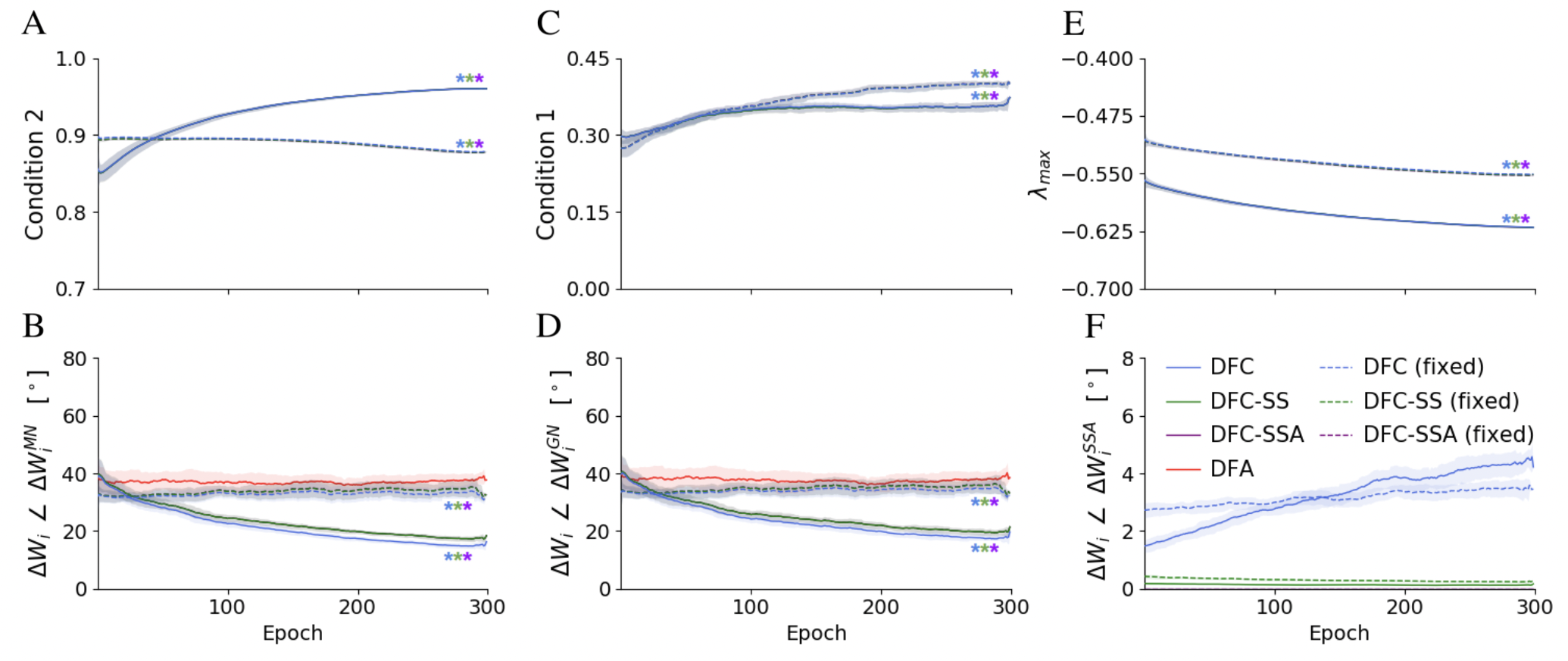

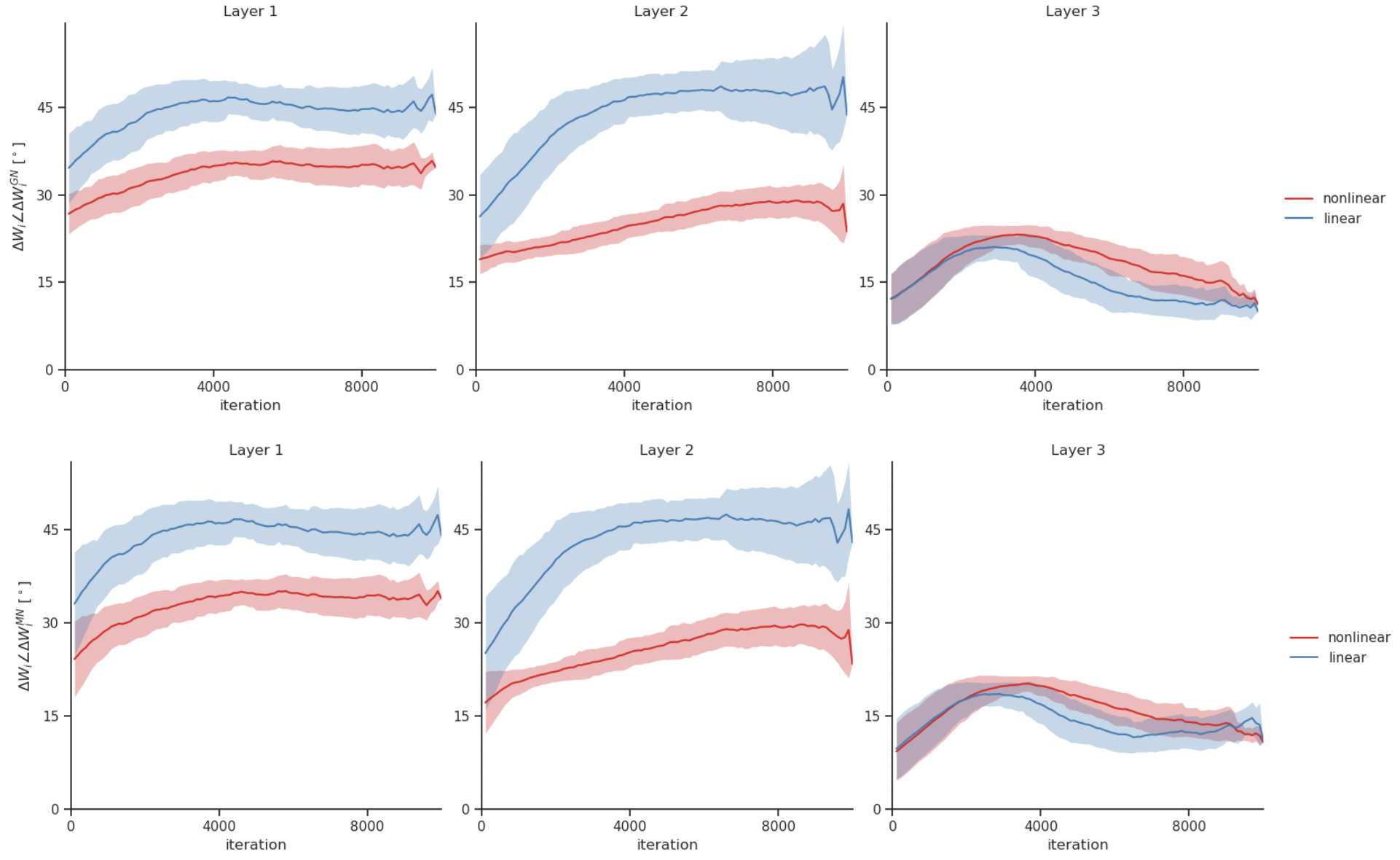

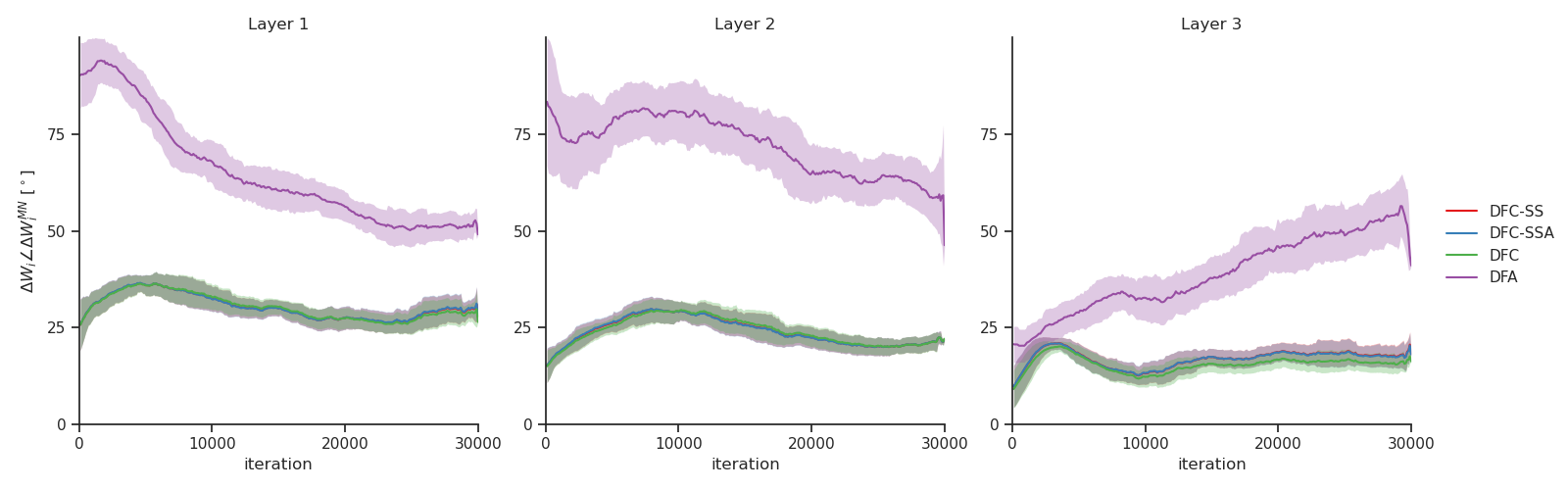

Figure 3 visualizes the theoretical results of Theorems 2 and 3 and Conditions 1, 2 and 3 in an empirical setting of nonlinear student-teacher regression, where a randomly initialized teacher network generates synthetic training data for a student network. We see that Condition 2 is approximately satisfied for all DFC variants that learn their feedback weights (Fig. 3A), leading to close alignment with the ideal weighted MN updates of Theorem 3 (Fig. 3B). For nonlinear networks and linear direct feedback, it is in general not possible to perfectly satisfy Condition 2, as the network Jacobian $J$ varies for each data sample while $Q_i$ remains the same. However, the results indicate that feedback learning finds a configuration for $Q_i$ that approximately satisfies Condition 2 for all data samples. When the feedback weights are fixed, Condition 2 is approximately satisfied at the beginning of training due to a good initialization. However, as the network changes during training, Condition 2 degrades modestly, which results in worse alignment compared to DFC with trained feedback weights (Fig. 3B).

To obtain GN updates, both Conditions 1 and 2 need to be satisfied. Although we do not enforce Condition 1 during training, we see in Fig. 3C that it is roughly satisfied, which can be explained by the saturating properties of the $\tanh$ nonlinearity. This is reflected in the alignment with the ideal GN updates in Fig. 3D, which follows the same trend as the alignment with the MN updates. Fig. 3E shows that all DFC variants remain stable throughout training, even when the feedback weights are fixed. In App. B, we indicate that Condition 3 is a good proxy for the stability shown in Fig. 3E. Finally, we see in Fig. 3F that the weight updates of DFC and DFC-SS align well with the analytical steady-state solution of Lemma 1, confirming that our learning theory of Section 3 applies to the continuous weight updates (5) of DFC.

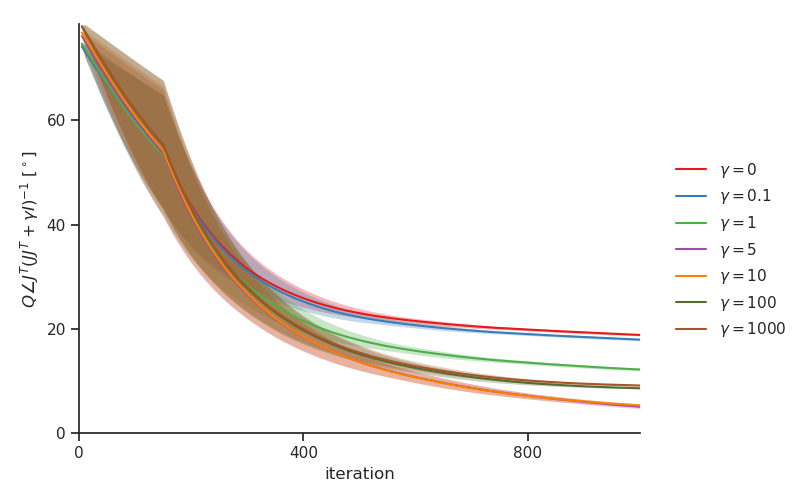

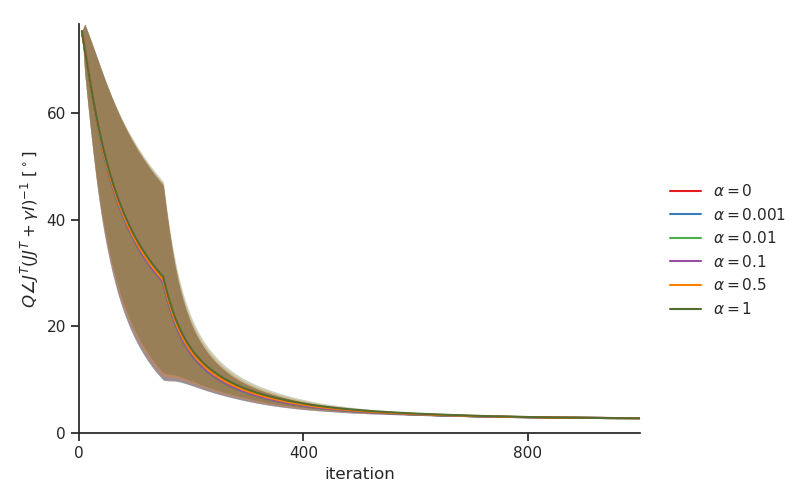

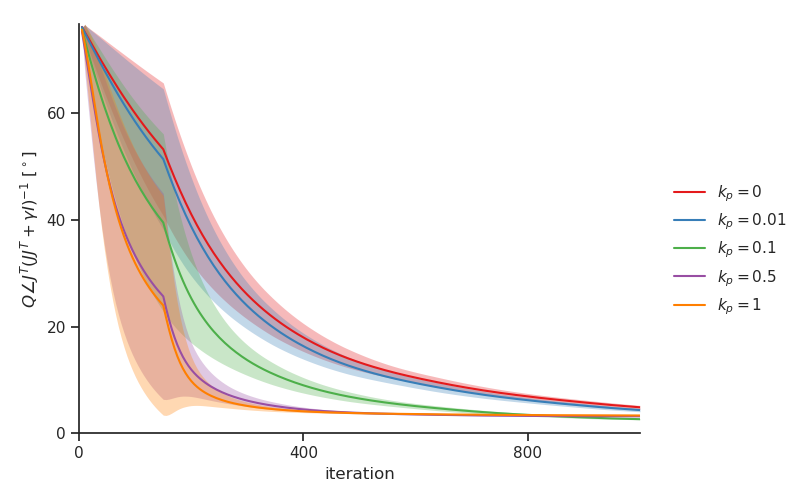

In Fig. 4, we show that the alignment with MN updates remains robust for $\lambda \in [10^{-3}, 10^{-1}]$ and $\alpha \in [10^{-4}, 10^{-1}]$, highlighting that our theory explains the behavior of DFC robustly even when $\lambda$ and $\alpha$ are not close to the zero limit assumed by the theory. When we clamp the output target to the label ($\lambda = 0.5$), the alignment with the MN updates decreases as expected (see Fig. 4), because the linearization of Lemma 1 becomes less accurate and the strong feedback changes the neural activations more significantly, thereby changing the presynaptic factor of the update rules (cf. eq. (9)). However, performance results on MNIST, provided in Table 2, show that the performance of DFC remains robust for a wide range of values of $\lambda$ and $\alpha$, including $\lambda = 0.5$, suggesting that DFC can also provide principled CA in this setting of strong feedback, which motivates future work on a complementary theory for DFC focused on this extreme case.

![Uncaptioned image](https://ar5iv.labs.arxiv.org/html/2106.07887/assets/figures/gnt_lambda_alpha_robustness_angle_alignment.png)

Figure 4: Comparison of the alignment between the DFC weight updates and the MN updates for variable values of $\lambda$ (A) and $\alpha$ (B), when performing the nonlinear student-teacher regression task described in Fig. 3. Stars indicate overlapping plots.

6.2 Performance of DFC on computer vision benchmarks

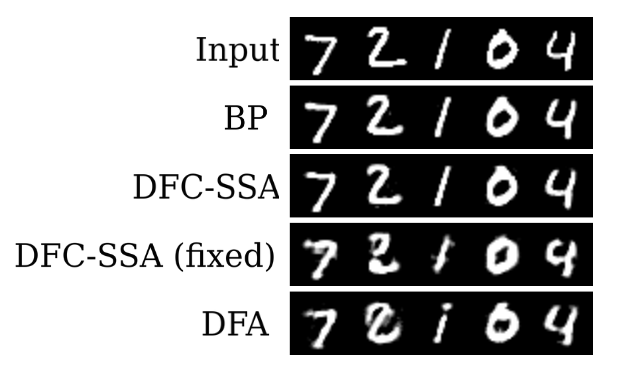

The classification results on MNIST and Fashion-MNIST (Table 1) show that the performance of DFC and its variants, but also of its controls, lies close to the performance of BP, indicating that they perform proper CA in these tasks. To see significant differences between the methods, we consider the more challenging task of training an autoencoder on MNIST, where it is known that DFA fails to provide precise CA [9, 16, 32]. The results in Table 1 show that the DFC variants with trained feedback weights clearly outperform DFA and perform close to BP. The low performance of the DFC variants with fixed feedback weights shows the importance of learning the feedback weights continuously during training to satisfy Condition 2. Finally, to disentangle optimization performance from implicit regularization mechanisms, which both influence the test performance, we investigate the performance of all methods in minimizing the training loss of MNIST (for this experiment, we used separate hyperparameter configurations, selected for minimizing the training loss). The results in Table 1 show improved performance of the DFC method with trained feedback weights compared to BP and controls, suggesting that the approximate MN updates of DFC can descend the loss landscape faster for this simple dataset.

| Method | MNIST | Fashion-MNIST | MNIST-autoencoder | MNIST (train loss) |
|---|---|---|---|---|
| BP | | | | |
| DFC | | | | |
| DFC-SSA | | | | |
| DFC-SS | | | | |
| DFC (fixed) | | | | |
| DFC-SSA (fixed) | | | | |
| DFC-SS (fixed) | | | | |
| DFA | | | | |


7 Discussion

We introduced DFC as an alternative biologically-plausible learning method for deep neural networks. DFC uses error feedback to drive the network activations to a desired output target. This process generates a neuron-specific learning signal which can be used to learn both forward and feedback weights locally in time and space. In contrast to other recent methods that learn the feedback weights and aim to approximate BP [14, 15, 16, 17, 26], we show that DFC approximates GN optimization, making it fundamentally different from BP approximations.

DFC is optimal – i.e., Conditions 2 and 3 are satisfied – for a wide range of feedback connectivity strengths. Thus, we prove that principled learning can be achieved with local rules and without symmetric feedforward and feedback connectivity by leveraging the network dynamics. This finding has interesting implications for experimental neuroscientific research looking for precise patterns of symmetric connectivity in the brain. Moreover, from a computational standpoint, the flexibility that stems from Conditions 2 and 3 might be relevant for other mechanisms besides learning, such as attention and prediction [8].

To present DFC in its simplest form, we used direct feedback mappings from the output controller to all hidden layers. Although numerous anatomical studies of the mammalian neocortex reported the occurrence of such direct feedback connections [45, 46], it is unlikely that all feedback pathways are direct. We note that DFC is also compatible with other feedback mappings, such as layerwise connections or separate feedback pathways with multiple layers of neurons (see App. H).

Interestingly, the three-compartment neuron is closely linked to recent multi-compartment models of the cortical pyramidal neuron [23, 25, 26, 47]. In the terminology of these models, our central, feedforward, and feedback compartments correspond to the somatic, basal dendritic, and apical dendritic compartments of pyramidal neurons, respectively (see Fig. 1C). In line with DFC, experimental observations [48, 49] suggest that feedforward connections converge onto the basal compartment and feedback connections onto the apical compartment. Moreover, our plasticity rule for the forward weights (5) belongs to a class of dendritic predictive plasticity rules for which a biological implementation based on backpropagating action potentials has been put forward [37].

Limitations and future work. In practice, the forward weight updates are not exactly equal to GN or MN updates (Theorems 2 and 3), due to (i) the nonlinearity $\phi$ in the weight update rule (5), (ii) non-infinitesimal values for $\alpha$ and $\lambda$, (iii) limited training iterations for the feedback weights, and (iv) the limited capacity of linear feedback mappings to satisfy Condition 2 for each data sample. Figs. 3 and 4 and Table 2 show that DFC approximates the theory well in practice and has robust performance; however, future work can improve the results further by investigating new feedback architectures (see App. H). We note that, even though GN optimization has desirable approximate second-order optimization properties, it is presently unclear whether these second-order characteristics translate to our setting with a minibatch size of 1. Currently, our proposed feedback learning rule (13) aims to approximate one specific configuration and hence does not capitalize on the increased flexibility of DFC and Condition 2. Therefore, an interesting future direction is to design more flexible feedback learning rules that aim to satisfy Conditions 2 and 3 without targeting one specific configuration. Furthermore, DFC needs two separate phases for training the forward weights and feedback weights. Interestingly, if the feedback plasticity rule (13) uses a high-pass filtered version of the presynaptic input $\mathbf{u}$, both phases can be merged into one, with plasticity always on for both forward and feedback weights (see App. C.3). Finally, as DFC is dynamical in nature, it is costly to simulate on commonly used hardware for deep learning, which prevents us from testing DFC on large-scale problems such as those considered by Bartunov et al. [10]. A promising alternative is to implement DFC on analog hardware, where the dynamics of DFC can correspond to real physical processes on a chip. This would not only make DFC resource-efficient, but also position DFC as an interesting training method for analog implementations of deep neural networks, commonly used in Edge AI and other applications where low energy consumption is key [50, 51].

To conclude, we show that DFC can provide principled CA in deep neural networks by actively using error feedback to drive neural activations. The flexible requirements for feedback mappings, combined with the strong link between DFC and GN, underline that it is possible to do principled CA in neural networks without adhering to the symmetric layer-wise feedback structure imposed by BP.

Acknowledgments and Disclosure of Funding

This work was supported by the Swiss National Science Foundation (B.F.G. CRSII5-173721 and 315230_189251), ETH project funding (B.F.G. ETH-20 19-01), the Human Frontiers Science Program (RGY0072/2019) and funding from the Swiss Data Science Center (B.F.G, C17-18, J. v. O. P18-03). João Sacramento was supported by an Ambizione grant (PZ00P3_186027) from the Swiss National Science Foundation. Pau Vilimelis Aceituno was supported by an ETH Zürich Postdoc fellowship. Javier García Ordóñez received support from La Caixa Foundation through the Postgraduate Studies in Europe scholarship. We would like to thank Anh Duong Vo and Nicolas Zucchet for feedback, William Podlaski, Jean-Pascal Pfister and Aditya Gilra for insightful discussions, and Simone Surace for his detailed feedback on Appendix C.1.

- Rumelhart et al. [1986] David E Rumelhart, Geoffrey E Hinton, and Ronald J Williams. Learning representations by back-propagating errors. Nature, 323(6088):533, 1986.

- Werbos [1982] Paul J Werbos. Applications of advances in nonlinear sensitivity analysis. In System Modeling and Optimization, pages 762–770. Springer, 1982.

- Linnainmaa [1970] Seppo Linnainmaa. The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master’s Thesis (in Finnish), Univ. Helsinki, pages 6–7, 1970.

- Crick [1989] Francis Crick. The recent excitement about neural networks. Nature, 337(6203):129–132, 1989.

- Grossberg [1987] Stephen Grossberg. Competitive learning: From interactive activation to adaptive resonance. Cognitive Science, 11(1):23–63, 1987.

- Lillicrap et al. [2020] Timothy P Lillicrap, Adam Santoro, Luke Marris, Colin J Akerman, and Geoffrey Hinton. Backpropagation and the brain. Nature Reviews Neuroscience, pages 1–12, 2020.

- Larkum et al. [2009] Matthew E Larkum, Thomas Nevian, Maya Sandler, Alon Polsky, and Jackie Schiller. Synaptic integration in tuft dendrites of layer 5 pyramidal neurons: a new unifying principle. Science, 325(5941):756–760, 2009.

- Gilbert and Li [2013] Charles D Gilbert and Wu Li. Top-down influences on visual processing. Nature Reviews Neuroscience, 14(5):350–363, 2013.

- Lillicrap et al. [2016] Timothy P Lillicrap, Daniel Cownden, Douglas B Tweed, and Colin J Akerman. Random synaptic feedback weights support error backpropagation for deep learning. Nature Communications, 7:13276, 2016.

- Bartunov et al. [2018] Sergey Bartunov, Adam Santoro, Blake Richards, Luke Marris, Geoffrey E Hinton, and Timothy Lillicrap. Assessing the scalability of biologically-motivated deep learning algorithms and architectures. In Advances in Neural Information Processing Systems 31, pages 9368–9378, 2018.

- Launay et al. [2019] Julien Launay, Iacopo Poli, and Florent Krzakala. Principled training of neural networks with direct feedback alignment. arXiv preprint arXiv:1906.04554, 2019.

- Moskovitz et al. [2018] Theodore H Moskovitz, Ashok Litwin-Kumar, and LF Abbott. Feedback alignment in deep convolutional networks. arXiv preprint arXiv:1812.06488, 2018.

- Crafton et al. [2019] Brian Alexander Crafton, Abhinav Parihar, Evan Gebhardt, and Arijit Raychowdhury. Direct feedback alignment with sparse connections for local learning. Frontiers in Neuroscience, 13:525, 2019.

- Akrout et al. [2019] Mohamed Akrout, Collin Wilson, Peter Humphreys, Timothy Lillicrap, and Douglas B Tweed. Deep learning without weight transport. In Advances in Neural Information Processing Systems 32, pages 974–982, 2019.

- Kunin et al. [2020] Daniel Kunin, Aran Nayebi, Javier Sagastuy-Brena, Surya Ganguli, Jonathan Bloom, and Daniel Yamins. Two routes to scalable credit assignment without weight symmetry. In International Conference on Machine Learning, pages 5511–5521. PMLR, 2020.

- Lansdell et al. [2020] Benjamin James Lansdell, Prashanth Prakash, and Konrad Paul Kording. Learning to solve the credit assignment problem. In International Conference on Learning Representations, 2020.

- Guerguiev et al. [2020] Jordan Guerguiev, Konrad Kording, and Blake Richards. Spike-based causal inference for weight alignment. In International Conference on Learning Representations, 2020.

- Golkar et al. [2020] Siavash Golkar, David Lipshutz, Yanis Bahroun, Anirvan M. Sengupta, and Dmitri B. Chklovskii. A biologically plausible neural network for local supervision in cortical microcircuits, 2020.

- Bengio [2014] Yoshua Bengio. How auto-encoders could provide credit assignment in deep networks via target propagation. arXiv preprint arXiv:1407.7906, 2014.

- Lee et al. [2015] Dong-Hyun Lee, Saizheng Zhang, Asja Fischer, and Yoshua Bengio. Difference target propagation. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pages 498–515. Springer, 2015.

- Meulemans et al. [2020] Alexander Meulemans, Francesco Carzaniga, Johan Suykens, João Sacramento, and Benjamin F. Grewe. A theoretical framework for target propagation. Advances in Neural Information Processing Systems, 33:20024–20036, 2020.

- Bengio [2020] Yoshua Bengio. Deriving differential target propagation from iterating approximate inverses. arXiv preprint arXiv:2007.15139, 2020.

- Sacramento et al. [2018] João Sacramento, Rui Ponte Costa, Yoshua Bengio, and Walter Senn. Dendritic cortical microcircuits approximate the backpropagation algorithm. In Advances in Neural Information Processing Systems 31, pages 8721–8732, 2018.

- Whittington and Bogacz [2017] James CR Whittington and Rafal Bogacz. An approximation of the error backpropagation algorithm in a predictive coding network with local Hebbian synaptic plasticity. Neural Computation, 29(5):1229–1262, 2017.

- Guerguiev et al. [2017] Jordan Guerguiev, Timothy P Lillicrap, and Blake A Richards. Towards deep learning with segregated dendrites. eLife, 6:e22901, 2017.

- Payeur et al. [2021] Alexandre Payeur, Jordan Guerguiev, Friedemann Zenke, Blake Richards, and Richard Naud. Burst-dependent synaptic plasticity can coordinate learning in hierarchical circuits. Nature Neuroscience, 24(5):1546, 2021.

- Slotine et al. [1991] Jean-Jacques E Slotine, Weiping Li, et al. Applied nonlinear control, volume 199. Prentice Hall, Englewood Cliffs, NJ, 1991.

- Gilra and Gerstner [2017] Aditya Gilra and Wulfram Gerstner. Predicting non-linear dynamics by stable local learning in a recurrent spiking neural network. eLife, 6:e28295, 2017.

- Denève et al. [2017] Sophie Denève, Alireza Alemi, and Ralph Bourdoukan. The brain as an efficient and robust adaptive learner. Neuron, 94(5):969–977, 2017.

- Alemi et al. [2018] Alireza Alemi, Christian Machens, Sophie Denève, and Jean-Jacques Slotine. Learning arbitrary dynamics in efficient, balanced spiking networks using local plasticity rules. AAAI Conference on Artificial Intelligence (AAAI), 2018.

- Bourdoukan and Deneve [2015] Ralph Bourdoukan and Sophie Deneve. Enforcing balance allows local supervised learning in spiking recurrent networks. Advances in Neural Information Processing Systems, 28:982–990, 2015.

- Podlaski and Machens [2020] William F Podlaski and Christian K Machens. Biological credit assignment through dynamic inversion of feedforward networks. Advances in Neural Information Processing Systems 33, 2020.

- Kohan et al. [2018] Adam A Kohan, Edward A Rietman, and Hava T Siegelmann. Error forward-propagation: Reusing feedforward connections to propagate errors in deep learning. arXiv preprint arXiv:1808.03357, 2018.

- Franklin et al. [2015] Gene F Franklin, J David Powell, and Abbas Emami-Naeini. Feedback control of dynamic systems. Pearson, London, 2015.

- Gauss [1809] Carl Friedrich Gauss. Theoria motus corporum coelestium in sectionibus conicis solem ambientium, volume 7. Perthes et Besser, 1809.

- Cai et al. [2019] Tianle Cai, Ruiqi Gao, Jikai Hou, Siyu Chen, Dong Wang, Di He, Zhihua Zhang, and Liwei Wang. A Gram-Gauss-Newton method learning overparameterized deep neural networks for regression problems. arXiv preprint arXiv:1905.11675, 2019.

- Urbanczik and Senn [2014] Robert Urbanczik and Walter Senn. Learning by the dendritic prediction of somatic spiking. Neuron, 81(3):521–528, 2014.

- Lyapunov [1992] A. M. Lyapunov. The general problem of the stability of motion. International Journal of Control, 55(3):531–534, 1992. doi: 10.1080/00207179208934253.

- Hinton et al. [1995] Geoffrey E Hinton, Peter Dayan, Brendan J Frey, and Radford M Neal. The "wake-sleep" algorithm for unsupervised neural networks. Science, 268(5214):1158–1161, 1995.

- LeCun [1998] Yann LeCun. The MNIST database of handwritten digits. http://yann.lecun.com/exdb/mnist/, 1998.

- Xiao et al. [2017] Han Xiao, Kashif Rasul, and Roland Vollgraf. Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747, 2017.

- Nøkland [2016] Arild Nøkland. Direct feedback alignment provides learning in deep neural networks. In Advances in Neural Information Processing Systems, pages 1037–1045, 2016.

- Särkkä and Solin [2019] Simo Särkkä and Arno Solin. Applied stochastic differential equations, volume 10. Cambridge University Press, 2019.

- Kingma and Ba [2014] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2014.

- Ungerleider et al. [2008] Leslie G Ungerleider, Thelma W Galkin, Robert Desimone, and Ricardo Gattass. Cortical connections of area V4 in the macaque. Cerebral Cortex, 18(3):477–499, 2008.

- Rockland and Van Hoesen [1994] Kathleen S Rockland and Gary W Van Hoesen. Direct temporal-occipital feedback connections to striate cortex (V1) in the macaque monkey. Cerebral Cortex, 4(3):300–313, 1994.

- Richards and Lillicrap [2019] Blake A Richards and Timothy P Lillicrap. Dendritic solutions to the credit assignment problem. Current Opinion in Neurobiology, 54:28–36, 2019.

- Larkum [2013] Matthew Larkum. A cellular mechanism for cortical associations: an organizing principle for the cerebral cortex. Trends in Neurosciences, 36(3):141–151, 2013.

- Spruston [2008] Nelson Spruston. Pyramidal neurons: dendritic structure and synaptic integration. Nature Reviews Neuroscience, 9(3):206–221, 2008.

- Xiao et al. [2020] T Patrick Xiao, Christopher H Bennett, Ben Feinberg, Sapan Agarwal, and Matthew J Marinella. Analog architectures for neural network acceleration based on non-volatile memory. Applied Physics Reviews, 7(3):031301, 2020.

- Misra and Saha [2010] Janardan Misra and Indranil Saha. Artificial neural networks in hardware: A survey of two decades of progress. Neurocomputing, 74(1-3):239–255, 2010.

- Moore [1920] Eliakim H Moore. On the reciprocal of the general algebraic matrix. Bull. Am. Math. Soc., 26:394–395, 1920.

- Penrose [1955] Roger Penrose. A generalized inverse for matrices. In Mathematical Proceedings of the Cambridge Philosophical Society, volume 51, pages 406–413. Cambridge University Press, 1955.

- Levenberg [1944] Kenneth Levenberg. A method for the solution of certain non-linear problems in least squares. Quarterly of Applied Mathematics, 2(2):164–168, 1944.

- Campbell and Meyer [2009] Stephen L Campbell and Carl D Meyer. Generalized inverses of linear transformations. SIAM, 2009.

- Schraudolph [2002] Nicol N Schraudolph. Fast curvature matrix-vector products for second-order gradient descent. Neural Computation, 14(7):1723–1738, 2002.

- Zhang et al. [2019] Guodong Zhang, James Martens, and Roger B Grosse. Fast convergence of natural gradient descent for over-parameterized neural networks. In Advances in Neural Information Processing Systems 32, pages 8080–8091, 2019.

- Seung [1996] H Sebastian Seung. How the brain keeps the eyes still. Proceedings of the National Academy of Sciences, 93(23):13339–13344, 1996.

- Koulakov et al. [2002] Alexei A Koulakov, Sridhar Raghavachari, Adam Kepecs, and John E Lisman. Model for a robust neural integrator. Nature Neuroscience, 5(8):775–782, 2002.

- Goldman et al. [2003] Mark S Goldman, Joseph H Levine, Guy Major, David W Tank, and HS Seung. Robust persistent neural activity in a model integrator with multiple hysteretic dendrites per neuron. Cerebral Cortex, 13(11):1185–1195, 2003.

- Goldman et al. [2010] Mark S Goldman, A Compte, and Xiao-Jing Wang. Neural integrator models. Encyclopedia of Neuroscience, pages 165–178, 2010.

- Lim and Goldman [2013] Sukbin Lim and Mark S Goldman. Balanced cortical microcircuitry for maintaining information in working memory. Nature Neuroscience, 16(9):1306–1314, 2013.

- Bejarano et al. [2018] D Bejarano, Eduardo Ibargüen-Mondragón, and Enith Amanda Gómez-Hernández. A stability test for non linear systems of ordinary differential equations based on the Gershgorin circles. Contemporary Engineering Sciences, 11(91):4541–4548, 2018.

- Martens and Grosse [2015] James Martens and Roger Grosse. Optimizing neural networks with Kronecker-factored approximate curvature. In Proceedings of the 32nd International Conference on Machine Learning, pages 2408–2417, 2015.

- Botev et al. [2017] Aleksandar Botev, Hippolyt Ritter, and David Barber. Practical Gauss-Newton optimisation for deep learning. In Proceedings of the 34th International Conference on Machine Learning, pages 557–565. JMLR.org, 2017.

- Glorot and Bengio [2010] Xavier Glorot and Yoshua Bengio. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, pages 249–256. JMLR Workshop and Conference Proceedings, 2010.

- Paszke et al. [2017] Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in PyTorch. 2017.

- Bergstra et al. [2011] James S Bergstra, Rémi Bardenet, Yoshua Bengio, and Balázs Kégl. Algorithms for hyper-parameter optimization. In Advances in Neural Information Processing Systems, pages 2546–2554, 2011.

- Bergstra et al. [2013] James Bergstra, Dan Yamins, and David D Cox. Hyperopt: A Python library for optimizing the hyperparameters of machine learning algorithms. In Proceedings of the 12th Python in Science Conference, pages 13–20. Citeseer, 2013.

- Liaw et al. [2018] Richard Liaw, Eric Liang, Robert Nishihara, Philipp Moritz, Joseph E Gonzalez, and Ion Stoica. Tune: A research platform for distributed model selection and training. arXiv preprint arXiv:1807.05118, 2018.

- Paszke et al. [2019] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32, pages 8024–8035. Curran Associates, Inc., 2019.

- Silver [2010] R Angus Silver. Neuronal arithmetic. Nature Reviews Neuroscience, 11(7):474–489, 2010.

- Ferguson and Cardin [2020] Katie A Ferguson and Jessica A Cardin. Mechanisms underlying gain modulation in the cortex. Nature Reviews Neuroscience, 21(2):80–92, 2020.

- Larkum et al. [2004] Matthew E Larkum, Walter Senn, and Hans-R Lüscher. Top-down dendritic input increases the gain of layer 5 pyramidal neurons. Cerebral Cortex, 14(10):1059–1070, 2004.

- Naud and Sprekeler [2017] Richard Naud and Henning Sprekeler. Burst ensemble multiplexing: A neural code connecting dendritic spikes with microcircuits. bioRxiv, page 143636, 2017.

- Bengio et al. [2015] Yoshua Bengio, Dong-Hyun Lee, Jorg Bornschein, Thomas Mesnard, and Zhouhan Lin. Towards biologically plausible deep learning. arXiv preprint arXiv:1502.04156, 2015.

Supplementary Material

Alexander Meulemans∗, Matilde Tristany Farinha∗, Javier García Ordóñez, Pau Vilimelis Aceituno, João Sacramento, Benjamin F. Grewe

Institute of Neuroinformatics, University of Zürich and ETH Zürich

Appendix A: Proofs and extra information for Section 3: Learning theory

A.1 Linearized dynamics and fixed points

In this section, we linearize the network dynamics around the feedforward voltage levels $\mathbf{v}_{i}^{-}$ (i.e., the equilibrium of the network when no feedback is present) and study the equilibrium points resulting from the feedback input from the controller.
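The idea of linearizing around $\mathbf{v}_{i}^{-}$ can be illustrated numerically on a toy network. The sketch below rests on assumptions that are not taken from this appendix: it assumes leaky-integrator layers $\tau \frac{d\mathbf{v}_i}{dt} = -\mathbf{v}_i + W_i \phi(\mathbf{v}_{i-1}) + Q_i \mathbf{u}$ with $\phi = \tanh$, a two-layer network whose first layer receives the input $\mathbf{x}$ directly, and a constant feedback signal $\mathbf{u}$ standing in for the controller's own dynamics. The helper names (`feedforward_equilibrium`, `simulate_equilibrium`, `linearized_equilibrium`) and all weights are hypothetical. It compares the fixed point reached by integrating the full nonlinear dynamics with the first-order (Taylor) prediction obtained by linearizing around the feedforward equilibrium.

```python
import math

# Toy model (an assumption for illustration, not the paper's exact equations):
#   tau * dv1/dt = -v1 + W1 x        + Q1 u
#   tau * dv2/dt = -v2 + W2 tanh(v1) + Q2 u
# A constant feedback input u stands in for the controller output.


def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * a for m, a in zip(row, v)) for row in M]


def add(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(parts) for parts in zip(*vectors)]


def feedforward_equilibrium(W1, W2, x):
    """Fixed point without feedback (u = 0): the voltages v1^-, v2^-."""
    v1 = matvec(W1, x)
    v2 = matvec(W2, [math.tanh(a) for a in v1])
    return v1, v2


def simulate_equilibrium(W1, W2, Q1, Q2, x, u, tau=1.0, dt=0.01, steps=5000):
    """Forward-Euler integration of the nonlinear dynamics, starting from v^-."""
    v1, v2 = feedforward_equilibrium(W1, W2, x)
    for _ in range(steps):
        dv1 = add([-a for a in v1], matvec(W1, x), matvec(Q1, u))
        dv2 = add([-a for a in v2], matvec(W2, [math.tanh(a) for a in v1]), matvec(Q2, u))
        v1 = [a + dt / tau * d for a, d in zip(v1, dv1)]
        v2 = [a + dt / tau * d for a, d in zip(v2, dv2)]
    return v1, v2


def linearized_equilibrium(W1, W2, Q1, Q2, x, u):
    """First-order (Taylor) prediction of the shifted fixed point around v^-."""
    v1m, v2m = feedforward_equilibrium(W1, W2, x)
    dv1 = matvec(Q1, u)  # exact here: layer 1 is linear in u
    slope = [1.0 - math.tanh(a) ** 2 for a in v1m]  # tanh'(v1^-)
    dv2 = add(matvec(W2, [s * d for s, d in zip(slope, dv1)]), matvec(Q2, u))
    return add(v1m, dv1), add(v2m, dv2)


# Hypothetical example weights: 2 inputs, 2 hidden units, 1 output, 1 feedback channel.
W1 = [[0.5, -0.3], [0.2, 0.4]]
W2 = [[1.0, -0.5]]
Q1 = [[1.0], [0.5]]
Q2 = [[0.2]]
x = [1.0, 0.5]

for u in ([0.01], [0.001]):
    _, sim2 = simulate_equilibrium(W1, W2, Q1, Q2, x, u)
    _, lin2 = linearized_equilibrium(W1, W2, Q1, Q2, x, u)
    print(u, abs(sim2[0] - lin2[0]))  # gap shrinks roughly as |u|^2
```

In this toy setting the first-layer fixed points coincide, because that layer is linear in $\mathbf{u}$, while the output-layer gap is second order in $\mathbf{u}$. Small feedback inputs are therefore the regime in which a linearized analysis of the equilibria is expected to be accurate.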

First, we introduce some shorthand notations:

| (17) | ||||

| (18) | ||||

| (19) | ||||

| (20) | ||||

| (21) | ||||