A Survey of Sentiment Analysis: Approaches, Datasets, and Future Research

1. Introduction

- A comprehensive overview of the state-of-the-art studies on sentiment analysis, which are categorized as conventional machine learning, deep learning, and ensemble learning, with a focus on the preprocessing techniques, feature extraction methods, classification methods, and datasets used, as well as the experimental results.

- An in-depth discussion of the commonly used sentiment analysis datasets and their challenges, as well as a discussion about the limitations of the current works and the potential for future research in this field.

2. Sentiment Analysis Algorithms

2.1. Machine Learning Approach

2.2. Deep Learning Approach

3. Ensemble Learning Approach

4. Sentiment Analysis Datasets

4.1. Internet Movie Database (IMDb)

4.2. Twitter US Airline Sentiment

4.3. Sentiment140

4.4. SemEval-2017 Task 4

5. Limitations and Future Research Prospects

- Poorly Structured and Sarcastic Texts: Many sentiment analysis methods rely on structured and grammatically correct text, which can lead to inaccuracies in analyzing informal and poorly structured texts, such as social media posts, slang, and sarcastic comments. This is because the sentiments expressed in these types of texts can be subtle and require contextual understanding beyond surface-level analysis.

- Coarse-Grained Sentiment Analysis: Although positive, negative, and neutral classes are commonly used in sentiment analysis, they may not capture the full range of emotions and intensities that a person can express. Fine-grained sentiment analysis, which categorizes emotions into more specific categories such as happy, sad, angry, or surprised, can provide more nuanced insights into the sentiment expressed in a text.

- Lack of Cultural Awareness: Sentiment analysis models trained on data from a specific language or culture may not accurately capture the sentiments expressed in texts from other languages or cultures. This is because the use of language, idioms, and expressions can vary widely across cultures, and a sentiment analysis model trained on one culture may not be effective in analyzing sentiment in another culture.

- Dependence on Annotated Data: Sentiment analysis algorithms often rely on annotated data, where humans manually label the sentiment of a text. However, collecting and labeling a large dataset can be time-consuming and resource-intensive, which can limit the scope of analysis to a specific domain or language.

- Shortcomings of Word Embeddings: Word embeddings, which are a popular technique used in deep learning-based sentiment analysis, can be limited in capturing the complex relationships between words and their meanings in a text. This can result in a model that does not accurately represent the sentiment expressed in a text, leading to inaccuracies in analysis.

- Bias in Training Data: The training data used to train a sentiment analysis model can be biased, which can impact the model’s accuracy and generalization to new data. For example, a dataset that is predominantly composed of texts from one gender or race can lead to a model that is biased toward that group, resulting in inaccurate predictions for texts from other groups.

- Fine-Grained Sentiment Analysis: Current sentiment analysis models mainly classify sentiment into three coarse classes: positive, negative, and neutral. There is a need to extend this to fine-grained sentiment analysis, which distinguishes emotional intensities such as strongly positive, positive, neutral, negative, and strongly negative. Researchers can explore various deep learning architectures and techniques for this task. One such approach is to use hierarchical attention networks, which can capture the sentiment expressed in different parts of a text at different levels of granularity.

- Sentiment Quantification: Sentiment quantification is an important application of sentiment analysis. It involves computing the polarity distribution for each topic to aid strategic decision making. Researchers can develop more advanced models that accurately capture the sentiment distribution across topics. One way to achieve this is to use topic modeling to identify the underlying topics in a corpus and then apply sentiment analysis to compute the sentiment distribution for each topic.

- Handling Ambiguous and Sarcastic Texts: Sentiment analysis models face challenges in accurately detecting sentiment in ambiguous and sarcastic texts. Researchers can explore the use of reinforcement learning techniques to train models that can handle ambiguous and sarcastic texts. This involves developing models that can learn from feedback and adapt their predictions accordingly.

- Cross-lingual Sentiment Analysis: Currently, sentiment analysis models are primarily trained on English text. However, there is a growing need for sentiment analysis models that can work across multiple languages. Cross-lingual sentiment analysis would help to better understand the sentiment expressed in different languages, making sentiment analysis accessible to a larger audience. Researchers can explore the use of transfer learning techniques to develop sentiment analysis models that can work across multiple languages. One approach is to pretrain models on large multilingual corpora and then fine-tune them for sentiment analysis tasks in specific languages.

- Sentiment Analysis in Social Media: Social media platforms generate huge amounts of data every day, making it difficult to manually process the data. Researchers can explore the use of domain-specific embeddings that are trained on social media text to improve the accuracy of sentiment analysis models. They can also develop models that can handle noisy or short social media text by incorporating contextual information and leveraging user interactions.
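The sentiment quantification idea in the list above (assign each text a topic, then compute the polarity distribution per topic) can be sketched in a few lines. This is only an illustration: the topic names, the labels, and the `polarity_distribution` helper are hypothetical, and the topic and sentiment of each text are assumed to have been predicted already by separate models.

```python
from collections import Counter, defaultdict


def polarity_distribution(records):
    """Map each topic to the fraction of texts carrying each sentiment label.

    records: iterable of (topic, label) pairs, one per text.
    """
    counts = defaultdict(Counter)
    for topic, label in records:
        counts[topic][label] += 1
    return {
        topic: {label: n / sum(c.values()) for label, n in c.items()}
        for topic, c in counts.items()
    }


# Hypothetical (topic, predicted sentiment) pairs for six texts.
records = [
    ("delays", "negative"),
    ("delays", "negative"),
    ("delays", "neutral"),
    ("delays", "positive"),
    ("service", "positive"),
    ("service", "negative"),
]

dist = polarity_distribution(records)
for topic, shares in dist.items():
    print(topic, shares)
```

The output is one distribution per topic rather than one label per text, which is the quantity a decision maker would typically compare across topics.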

6. Conclusions


- Ligthart, A.; Catal, C.; Tekinerdogan, B. Systematic reviews in sentiment analysis: A tertiary study. Artif. Intell. Rev. 2021, 54, 4997–5053.

- Dang, N.C.; Moreno-García, M.N.; De la Prieta, F. Sentiment analysis based on deep learning: A comparative study. Electronics 2020, 9, 483.

- Chakriswaran, P.; Vincent, D.R.; Srinivasan, K.; Sharma, V.; Chang, C.Y.; Reina, D.G. Emotion AI-driven sentiment analysis: A survey, future research directions, and open issues. Appl. Sci. 2019, 9, 5462.

- Jung, Y.G.; Kim, K.T.; Lee, B.; Youn, H.Y. Enhanced Naive Bayes classifier for real-time sentiment analysis with SparkR. In Proceedings of the 2016 IEEE International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 19–21 October 2016; pp. 141–146.

- Athindran, N.S.; Manikandaraj, S.; Kamaleshwar, R. Comparative analysis of customer sentiments on competing brands using hybrid model approach. In Proceedings of the 2018 IEEE 3rd International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 15–16 November 2018; pp. 348–353.

- Vanaja, S.; Belwal, M. Aspect-level sentiment analysis on e-commerce data. In Proceedings of the 2018 IEEE International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 11–12 July 2018; pp. 1275–1279.

- Iqbal, N.; Chowdhury, A.M.; Ahsan, T. Enhancing the performance of sentiment analysis by using different feature combinations. In Proceedings of the 2018 IEEE International Conference on Computer, Communication, Chemical, Material and Electronic Engineering (IC4ME2), Rajshahi, Bangladesh, 8–9 February 2018; pp. 1–4.

- Rathi, M.; Malik, A.; Varshney, D.; Sharma, R.; Mendiratta, S. Sentiment analysis of tweets using machine learning approach. In Proceedings of the 2018 IEEE Eleventh International Conference on Contemporary Computing (IC3), Noida, India, 2–4 August 2018; pp. 1–3.

- Tariyal, A.; Goyal, S.; Tantububay, N. Sentiment Analysis of Tweets Using Various Machine Learning Techniques. In Proceedings of the 2018 IEEE International Conference on Advanced Computation and Telecommunication (ICACAT), Bhopal, India, 28–29 December 2018; pp. 1–5.

- Hemakala, T.; Santhoshkumar, S. Advanced classification method of twitter data using sentiment analysis for airline service. Int. J. Comput. Sci. Eng. 2018, 6, 331–335.

- Rahat, A.M.; Kahir, A.; Masum, A.K.M. Comparison of Naive Bayes and SVM Algorithm based on sentiment analysis using review dataset. In Proceedings of the 2019 IEEE 8th International Conference System Modeling and Advancement in Research Trends (SMART), Moradabad, India, 22–23 November 2019; pp. 266–270.

- Makhmudah, U.; Bukhori, S.; Putra, J.A.; Yudha, B.A.B. Sentiment Analysis of Indonesian Homosexual Tweets Using Support Vector Machine Method. In Proceedings of the 2019 IEEE International Conference on Computer Science, Information Technology, and Electrical Engineering (ICOMITEE), Jember, Indonesia, 16–17 October 2019; pp. 183–186.

- Wongkar, M.; Angdresey, A. Sentiment analysis using Naive Bayes Algorithm of the data crawler: Twitter. In Proceedings of the 2019 IEEE Fourth International Conference on Informatics and Computing (ICIC), Semarang, Indonesia, 16–17 October 2019; pp. 1–5.

- Madhuri, D.K. A machine learning based framework for sentiment classification: Indian railways case study. Int. J. Innov. Technol. Explor. Eng. (IJITEE) 2019, 8, 441–445.

- Gupta, A.; Singh, A.; Pandita, I.; Parashar, H. Sentiment analysis of Twitter posts using machine learning algorithms. In Proceedings of the 2019 IEEE 6th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 13–15 March 2019; pp. 980–983.

- Prabhakar, E.; Santhosh, M.; Krishnan, A.H.; Kumar, T.; Sudhakar, R. Sentiment analysis of US Airline Twitter data using new AdaBoost approach. Int. J. Eng. Res. Technol. (IJERT) 2019, 7, 1–6.

- Hourrane, O.; Idrissi, N. Sentiment Classification on Movie Reviews and Twitter: An Experimental Study of Supervised Learning Models. In Proceedings of the 2019 IEEE 1st International Conference on Smart Systems and Data Science (ICSSD), Rabat, Morocco, 3–4 October 2019; pp. 1–6.

- AlSalman, H. An improved approach for sentiment analysis of arabic tweets in twitter social media. In Proceedings of the 2020 IEEE 3rd International Conference on Computer Applications & Information Security (ICCAIS), Riyadh, Saudi Arabia, 19–21 March 2020; pp. 1–4.

- Saad, A.I. Opinion Mining on US Airline Twitter Data Using Machine Learning Techniques. In Proceedings of the 2020 IEEE 16th International Computer Engineering Conference (ICENCO), Cairo, Egypt, 29–30 December 2020; pp. 59–63.

- Alzyout, M.; Bashabsheh, E.A.; Najadat, H.; Alaiad, A. Sentiment Analysis of Arabic Tweets about Violence Against Women using Machine Learning. In Proceedings of the 2021 IEEE 12th International Conference on Information and Communication Systems (ICICS), Valencia, Spain, 24–26 May 2021; pp. 171–176.

- Jemai, F.; Hayouni, M.; Baccar, S. Sentiment Analysis Using Machine Learning Algorithms. In Proceedings of the 2021 IEEE International Wireless Communications and Mobile Computing (IWCMC), Harbin, China, 28 June–2 July 2021; pp. 775–779.

- Ramadhani, A.M.; Goo, H.S. Twitter sentiment analysis using deep learning methods. In Proceedings of the 2017 IEEE 7th International Annual Engineering Seminar (InAES), Yogyakarta, Indonesia, 1–2 August 2017; pp. 1–4.

- Demirci, G.M.; Keskin, Ş.R.; Doğan, G. Sentiment analysis in Turkish with deep learning. In Proceedings of the 2019 IEEE International Conference on Big Data, Honolulu, HI, USA, 29–31 May 2019; pp. 2215–2221.

- Raza, G.M.; Butt, Z.S.; Latif, S.; Wahid, A. Sentiment Analysis on COVID Tweets: An Experimental Analysis on the Impact of Count Vectorizer and TF-IDF on Sentiment Predictions using Deep Learning Models. In Proceedings of the 2021 IEEE International Conference on Digital Futures and Transformative Technologies (ICoDT2), Islamabad, Pakistan, 20–21 May 2021; pp. 1–6.

- Dholpuria, T.; Rana, Y.; Agrawal, C. A sentiment analysis approach through deep learning for a movie review. In Proceedings of the 2018 IEEE 8th International Conference on Communication Systems and Network Technologies (CSNT), Bhopal, India, 24–26 November 2018; pp. 173–181.

- Harjule, P.; Gurjar, A.; Seth, H.; Thakur, P. Text classification on Twitter data. In Proceedings of the 2020 IEEE 3rd International Conference on Emerging Technologies in Computer Engineering: Machine Learning and Internet of Things (ICETCE), Jaipur, India, 7–8 February 2020; pp. 160–164.

- Uddin, A.H.; Bapery, D.; Arif, A.S.M. Depression Analysis from Social Media Data in Bangla Language using Long Short Term Memory (LSTM) Recurrent Neural Network Technique. In Proceedings of the 2019 IEEE International Conference on Computer, Communication, Chemical, Materials and Electronic Engineering (IC4ME2), Rajshahi, Bangladesh, 11–12 July 2019; pp. 1–4.

- Alahmary, R.M.; Al-Dossari, H.Z.; Emam, A.Z. Sentiment analysis of Saudi dialect using deep learning techniques. In Proceedings of the 2019 IEEE International Conference on Electronics, Information, and Communication (ICEIC), Auckland, New Zealand, 22–25 January 2019; pp. 1–6.

- Yang, Y. Convolutional neural networks with recurrent neural filters. arXiv 2018, arXiv:1808.09315.

- Goularas, D.; Kamis, S. Evaluation of deep learning techniques in sentiment analysis from Twitter data. In Proceedings of the 2019 IEEE International Conference on Deep Learning and Machine Learning in Emerging Applications (Deep-ML), Istanbul, Turkey, 26–28 August 2019; pp. 12–17.

- Hossain, N.; Bhuiyan, M.R.; Tumpa, Z.N.; Hossain, S.A. Sentiment analysis of restaurant reviews using combined CNN-LSTM. In Proceedings of the 2020 IEEE 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; pp. 1–5.

- Tyagi, V.; Kumar, A.; Das, S. Sentiment Analysis on Twitter Data Using Deep Learning approach. In Proceedings of the 2020 IEEE 2nd International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida, India, 18–19 December 2020; pp. 187–190.

- Rhanoui, M.; Mikram, M.; Yousfi, S.; Barzali, S. A CNN-BiLSTM model for document-level sentiment analysis. Mach. Learn. Knowl. Extr. 2019, 1, 832–847.

- Jang, B.; Kim, M.; Harerimana, G.; Kang, S.U.; Kim, J.W. Bi-LSTM model to increase accuracy in text classification: Combining Word2vec CNN and attention mechanism. Appl. Sci. 2020, 10, 5841.

- Chundi, R.; Hulipalled, V.R.; Simha, J. SAEKCS: Sentiment analysis for English–Kannada code switchtext using deep learning techniques. In Proceedings of the 2020 IEEE International Conference on Smart Technologies in Computing, Electrical and Electronics (ICSTCEE), Bengaluru, India, 10–11 July 2020; pp. 327–331.

- Thinh, N.K.; Nga, C.H.; Lee, Y.S.; Wu, M.L.; Chang, P.C.; Wang, J.C. Sentiment Analysis Using Residual Learning with Simplified CNN Extractor. In Proceedings of the 2019 IEEE International Symposium on Multimedia (ISM), San Diego, CA, USA, 9–11 December 2019; pp. 335–3353.

- Janardhana, D.; Vijay, C.; Swamy, G.J.; Ganaraj, K. Feature Enhancement Based Text Sentiment Classification using Deep Learning Model. In Proceedings of the 2020 IEEE 5th International Conference on Computing, Communication and Security (ICCCS), Bihar, India, 14–16 October 2020; pp. 1–6.

- Chowdhury, S.; Rahman, M.L.; Ali, S.N.; Alam, M.J. A RNN Based Parallel Deep Learning Framework for Detecting Sentiment Polarity from Twitter Derived Textual Data. In Proceedings of the 2020 IEEE 11th International Conference on Electrical and Computer Engineering (ICECE), Dhaka, Bangladesh, 17–19 December 2020; pp. 9–12.

- Vimali, J.; Murugan, S. A Text Based Sentiment Analysis Model using Bi-directional LSTM Networks. In Proceedings of the 2021 IEEE 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; pp. 1652–1658.

- Anbukkarasi, S.; Varadhaganapathy, S. Analyzing Sentiment in Tamil Tweets using Deep Neural Network. In Proceedings of the 2020 IEEE Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 449–453.

- Kumar, D.A.; Chinnalagu, A. Sentiment and Emotion in Social Media COVID-19 Conversations: SAB-LSTM Approach. In Proceedings of the 2020 IEEE 9th International Conference System Modeling and Advancement in Research Trends (SMART), Moradabad, India, 4–5 December 2020; pp. 463–467.

- Hossen, M.S.; Jony, A.H.; Tabassum, T.; Islam, M.T.; Rahman, M.M.; Khatun, T. Hotel review analysis for the prediction of business using deep learning approach. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 1489–1494.

- Younas, A.; Nasim, R.; Ali, S.; Wang, G.; Qi, F. Sentiment Analysis of Code-Mixed Roman Urdu-English Social Media Text using Deep Learning Approaches. In Proceedings of the 2020 IEEE 23rd International Conference on Computational Science and Engineering (CSE), Dubai, United Arab Emirates, 12–13 December 2020; pp. 66–71.

- Dhola, K.; Saradva, M. A Comparative Evaluation of Traditional Machine Learning and Deep Learning Classification Techniques for Sentiment Analysis. In Proceedings of the 2021 IEEE 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Uttar Pradesh, India, 28–29 January 2021; pp. 932–936.

- Tan, K.L.; Lee, C.P.; Anbananthen, K.S.M.; Lim, K.M. RoBERTa-LSTM: A Hybrid Model for Sentiment Analysis with Transformer and Recurrent Neural Network. IEEE Access 2022, 10, 21517–21525.

- Kokab, S.T.; Asghar, S.; Naz, S. Transformer-based deep learning models for the sentiment analysis of social media data. Array 2022, 14, 100157.

- AlBadani, B.; Shi, R.; Dong, J.; Al-Sabri, R.; Moctard, O.B. Transformer-based graph convolutional network for sentiment analysis. Appl. Sci. 2022, 12, 1316.

- Tiwari, D.; Nagpal, B. KEAHT: A knowledge-enriched attention-based hybrid transformer model for social sentiment analysis. New Gener. Comput. 2022, 40, 1165–1202.

- Tesfagergish, S.G.; Kapočiūtė-Dzikienė, J.; Damaševičius, R. Zero-shot emotion detection for semi-supervised sentiment analysis using sentence transformers and ensemble learning. Appl. Sci. 2022, 12, 8662.

- Maghsoudi, A.; Nowakowski, S.; Agrawal, R.; Sharafkhaneh, A.; Kunik, M.E.; Naik, A.D.; Xu, H.; Razjouyan, J. Sentiment Analysis of Insomnia-Related Tweets via a Combination of Transformers Using Dempster-Shafer Theory: Pre–and Peri–COVID-19 Pandemic Retrospective Study. J. Med. Internet Res. 2022, 24, e41517.

- Jing, H.; Yang, C. Chinese text sentiment analysis based on transformer model. In Proceedings of the 2022 IEEE 3rd International Conference on Electronic Communication and Artificial Intelligence (IWECAI), Sanya, China, 14–16 January 2022; pp. 185–189.

- Alrehili, A.; Albalawi, K. Sentiment analysis of customer reviews using ensemble method. In Proceedings of the 2019 IEEE International Conference on Computer and Information Sciences (ICCIS), Aljouf, Saudi Arabia, 3–4 April 2019; pp. 1–6.

- Bian, W.; Wang, C.; Ye, Z.; Yan, L. Emotional Text Analysis Based on Ensemble Learning of Three Different Classification Algorithms. In Proceedings of the 2019 IEEE 10th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Metz, France, 18–21 September 2019; Volume 2, pp. 938–941.

- Gifari, M.K.; Lhaksmana, K.M.; Dwifebri, P.M. Sentiment Analysis on Movie Review using Ensemble Stacking Model. In Proceedings of the 2021 IEEE International Conference Advancement in Data Science, E-learning and Information Systems (ICADEIS), Bali, Indonesia, 13–14 October 2021; pp. 1–5.

- Parveen, R.; Shrivastava, N.; Tripathi, P. Sentiment Classification of Movie Reviews by Supervised Machine Learning Approaches Using Ensemble Learning & Voted Algorithm. In Proceedings of the IEEE 2nd International Conference on Data, Engineering and Applications (IDEA), Bhopal, India, 28–29 February 2020; pp. 1–6.

- Aziz, R.H.H.; Dimililer, N. Twitter Sentiment Analysis using an Ensemble Weighted Majority Vote Classifier. In Proceedings of the 2020 IEEE International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 23–24 December 2020; pp. 103–109.

- Varshney, C.J.; Sharma, A.; Yadav, D.P. Sentiment analysis using ensemble classification technique. In Proceedings of the 2020 IEEE Students Conference on Engineering & Systems (SCES), Prayagraj, India, 10–12 July 2020; pp. 1–6.

- Athar, A.; Ali, S.; Sheeraz, M.M.; Bhattachariee, S.; Kim, H.C. Sentimental Analysis of Movie Reviews using Soft Voting Ensemble-based Machine Learning. In Proceedings of the 2021 IEEE Eighth International Conference on Social Network Analysis, Management and Security (SNAMS), Gandia, Spain, 6–9 December 2021; pp. 1–5.

- Nguyen, H.Q.; Nguyen, Q.U. An ensemble of shallow and deep learning algorithms for Vietnamese Sentiment Analysis. In Proceedings of the 2018 IEEE 5th NAFOSTED Conference on Information and Computer Science (NICS), Ho Chi Minh City, Vietnam, 23–24 November 2018; pp. 165–170.

- Kamruzzaman, M.; Hossain, M.; Imran, M.R.I.; Bakchy, S.C. A Comparative Analysis of Sentiment Classification Based on Deep and Traditional Ensemble Machine Learning Models. In Proceedings of the 2021 IEEE International Conference on Science & Contemporary Technologies (ICSCT), Dhaka, Bangladesh, 5–7 August 2021; pp. 1–5.

- Al Wazrah, A.; Alhumoud, S. Sentiment Analysis Using Stacked Gated Recurrent Unit for Arabic Tweets. IEEE Access 2021, 9, 137176–137187.

- Tan, K.L.; Lee, C.P.; Lim, K.M.; Anbananthen, K.S.M. Sentiment Analysis with Ensemble Hybrid Deep Learning Model. IEEE Access 2022, 10, 103694–103704.

- Maas, A.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning word vectors for sentiment analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 142–150.

- Go, A.; Bhayani, R.; Huang, L. Twitter sentiment classification using distant supervision. CS224N Proj. Rep. Stanf. 2009, 1, 2009.

- Rosenthal, S.; Farra, N.; Nakov, P. SemEval-2017 task 4: Sentiment analysis in Twitter. arXiv 2019, arXiv:1912.00741.


| Literature | Features | Classifier | Dataset | Accuracy (%) |
|---|---|---|---|---|
| Jung et al. (2016) | - | MNB | Sentiment140 | 85 |
| Athindran et al. (2018) | - | NB | Self-collected dataset (from Tweets) | 77 |
| Vanaja et al. (2018) | A priori algorithm | NB, SVM | Self-collected dataset (from Amazon) | 83.42 |
| Iqbal et al. (2018) | Unigram, Bigram | NB, SVM, ME | IMDb | 88 |
| | | | Sentiment140 | 90 |
| Rathi et al. (2018) | TF-IDF | DT | Sentiment140, Polarity Dataset, and University of Michigan dataset | 84 |
| | | AdaBoost | | 67 |
| | | SVM | | 82 |
| Hemakala and Santhoshkumar (2018) | - | AdaBoost | Indian Airlines | 84.5 |
| Tariyal et al. (2018) | - | Regression Tree | Own dataset | 88.99 |
| Rahat et al. (2019) | - | SVC | Airline review | 82.48 |
| | | MNB | | 76.56 |
| Makhmudah et al. (2019) | TF-IDF | SVM | Tweets related to homosexuals | 99.5 |
| Wongkar and Angdresey (2019) | - | NB | Twitter (2019 presidential candidates of the Republic of Indonesia) | 75.58 |
| Madhuri (2019) | - | SVM | Twitter (Indian Railways) | 91.5 |
| Gupta et al. (2019) | TF-IDF | Neural Network | Sentiment140 | 80 |
| Prabhakar et al. (2019) | - | AdaBoost (Bagging and Boosting) | Skytrax and Twitter (Airlines) | 68 (F-score) |
| Hourrane et al. (2019) | TF-IDF | Ridge Classifier | IMDb | 90.54 |
| | | | Sentiment140 | 76.84 |
| Alsalman (2020) | TF-IDF | MNB | Arabic Tweets | 87.5 |
| Saad et al. (2020) | Bag of Words | SVM | Twitter US Airline Sentiment | 83.31 |
| Alzyout et al. (2021) | TF-IDF | SVM | Self-collected dataset | 78.25 |
| Jemai et al. (2021) | - | NB | NLTK corpus | 99.73 |
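TF-IDF is the most frequent entry in the Features column of the table above. As a rough illustration of what that feature is, the sketch below computes one common textbook variant: term frequency normalized by document length, multiplied by the unsmoothed log inverse document frequency. The surveyed papers may use different weightings (library implementations often add smoothing and vector normalization), and the `tfidf` helper and the three toy documents are made up for this example.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document.

    docs: list of token lists.
    weight = (count of term in doc / doc length) * log(n_docs / doc frequency)
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in tf.items()
        })
    return weights


# Hypothetical toy corpus, tokenized by whitespace.
docs = [
    "the flight was great".split(),
    "the flight was late".split(),
    "the crew was great".split(),
]

vectors = tfidf(docs)
print(vectors[1])
```

Terms that occur in every document ("the", "was") get weight zero under this variant, while a term unique to one document ("late") gets the largest weight, which is why TF-IDF vectors tend to emphasize the words that discriminate between texts.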

| Literature | Embedding | Classifier | Dataset | Accuracy (%) |
|---|---|---|---|---|
| Ramadhani et al. (2017) | - | MLP | Korean and English Tweets | 75.03 |
| Demirci et al. (2019) | word2vec | MLP | Turkish Tweets | 81.86 |
| Raza et al. (2021) | Count Vectorizer and TF-IDF Vectorizer | MLP | COVID-19 reviews | 93.73 |
| Dholpuria et al. (2018) | - | CNN | IMDb (3000 reviews) | 99.33 |
| Harjule et al. (2020) | - | LSTM | Twitter US Airline Sentiment | 82 |
| | | | Sentiment140 | 66 |
| Uddin et al. (2019) | - | LSTM | Bangla Tweets | 86.3 |
| Alahmary and Al-Dossari (2018) | word2vec | BiLSTM | Saudi dialect Tweets | 94 |
| Yang (2018) | GloVe | Recurrent neural filter-based CNN and LSTM | Stanford Sentiment Treebank | 53.4 |
| Goularas and Kamis (2019) | word2vec and GloVe | CNN and LSTM | Tweets from semantic evaluation | 59 |
| Hossain and Bhuiyan (2019) | word2vec | CNN and LSTM | Foodpanda and Shohoz Food | 75.01 |
| Tyagi et al. (2020) | GloVe | CNN and BiLSTM | Sentiment140 | 81.20 |
| Rhanoui et al. (2019) | doc2vec | CNN and BiLSTM | French articles and international news | 90.66 |
| Jang et al. (2020) | word2vec | Hybrid CNN and BiLSTM | IMDb | 90.26 |
| Chundi et al. (2020) | - | Convolutional BiLSTM | English, Kannada, and a mixture of both languages | 77.6 |
| Thinh et al. (2019) | - | 1D-CNN with GRU | IMDb | 90.02 |
| Janardhana et al. (2020) | GloVe | Convolutional RNN | Movie reviews | 84 |
| Chowdhury et al. (2020) | word2vec, GloVe, and sentiment-specific word embedding | BiLSTM | Twitter US Airline Sentiment | 81.20 |
| Vimali and Murugan (2021) | - | BiLSTM | Self-collected | 90.26 |
| Anbukkarasi and Varadhaganapathy (2020) | - | DBLSTM | Self-collected (Tamil Tweets) | 86.2 |
| Kumar and Chinnalagu (2020) | - | SAB-LSTM | Self-collected | 29 (POS), 50 (NEG), 21 (NEU) |
| Hossen et al. (2021) | - | LSTM | Self-collected | 86 |
| | | GRU | | 84 |
| Younas et al. (2020) | - | mBERT | Pakistan elections in 2018 (Tweets) | 69 |
| | | XLM-R | | 71 |
| Dhola and Saradva (2021) | - | BERT | Sentiment140 | 85.4 |
| Tan et al. (2022) | - | RoBERTa-LSTM | IMDb | 92.96 |
| | | | Twitter US Airline Sentiment | 91.37 |
| | | | Sentiment140 | 89.70 |
| Kokab et al. (2022) | BERT | CBRNN | US airline reviews | 97 |
| | | | Self-driving car reviews | 90 |
| | | | US presidential election reviews | 96 |
| | | | IMDb | 93 |
| AlBadani et al. (2022) | ST-GCN | ST-GCN | SST-B | 95.43 |
| | | | IMDb | 94.94 |
| | | | Yelp 2014 | 72.7 |
| Tiwari and Nagpal (2022) | BERT | KEAHT | COVID-19 vaccine | 91 |
| | | | Indian Farmer Protests | 81.49 |
| Tesfagergish et al. (2022) | Zero-shot transformer | Ensemble learning | SemEval 2017 | 87.3 |
| Maghsoudi et al. (2022) | Transformer | DST | Self-collected | 84 |
| Jing and Yang (2022) | Light-Transformer | Light-Transformer | NLPCC2014 Task2 | 76.40 |

| Literature | Feature Extractor | Classifier | Dataset | Accuracy (%) |
|---|---|---|---|---|
| Alrehili et al. (2019) | - | NB + SVM + RF + Bagging + Boosting | Self-collected | 89.4 |
| Bian et al. (2019) | TF-IDF | LR + SVM + KNN | COVID-19 reviews | 98.99 |
| Gifari and Lhaksmana (2021) | TF-IDF | MNB + KNN + LR | IMDb | 89.40 |
| Parveen et al. (2020) | - | MNB + BNB + LR + LSVM + NSVM | Movie reviews | 91 |
| Aziz and Dimililer (2020) | TF-IDF | NB + LR + SGD + RF + DT + SVM | SemEval-2017 4A | 72.95 |
| | | | SemEval-2017 4B | 90.8 |
| | | | SemEval-2017 4C | 68.89 |
| Varshney et al. (2020) | TF-IDF | LR + NB + SGD | Sentiment140 | 80 |
| Athar et al. (2021) | TF-IDF | LR + NB + XGBoost + RF + MLP | IMDb | 89.9 |
| Nguyen and Nguyen (2018) | TF-IDF, word2vec | LR + SVM + CNN + LSTM (Mean) | Vietnamese Sentiment | 69.71 |
| | | LR + SVM + CNN + LSTM (Vote) | Vietnamese Sentiment Food Reviews | 89.19 |
| | | LR + SVM + CNN + LSTM (Vote) | Vietnamese Sentiment | 92.80 |
| Kamruzzaman et al. (2021) | GloVe | 7-Layer CNN + GRU + GloVe | Grammar and Online Product Reviews | 94.19 |
| | Attention embedding | 7-Layer CNN + LSTM + Attention Layer | Restaurant Reviews | 96.37 |
| Al Wazrah and Alhumoud (2021) | AraVec | SGRU + SBi-GRU + AraBERT | Arabic Sentiment Analysis | 90.21 |
| Tan et al. (2022) | - | RoBERTa-LSTM + RoBERTa-BiLSTM + RoBERTa-GRU | IMDb | 94.9 |
| | | | Twitter US Airline Sentiment | 91.77 |
| | | | Sentiment140 | 89.81 |

| Dataset | Classes | Strongly Positive | Positive | Neutral | Negative | Strongly Negative | Total |

|---|---|---|---|---|---|---|---|

| IMDb | 2 | - | 25,000 | - | 25,000 | - | 50,000 |

| Twitter US Airline Sentiment | 3 | - | 2363 | 3099 | 9178 | - | 14,640 |

| Sentiment140 | 2 | - | 800,000 | - | 800,000 | - | 1,600,000 |

| SemEval-2017 4A | 3 | - | 22,277 | 28,528 | 11,812 | - | 62,617 |

| SemEval-2017 4B | 2 | - | 17,414 | - | 7735 | - | 25,149 |

| SemEval-2017 4C | 5 | 1151 | 15,254 | 19,187 | 6943 | 476 | 43,011 |

| SemEval-2017 4D | 2 | - | 17,414 | - | 7735 | - | 25,149 |

| SemEval-2017 4E | 5 | 1151 | 15,254 | 19,187 | 6943 | 476 | 43,011 |

Tan, K.L.; Lee, C.P.; Lim, K.M. A Survey of Sentiment Analysis: Approaches, Datasets, and Future Research. Appl. Sci. 2023 , 13 , 4550. https://doi.org/10.3390/app13074550

- Open access

- Published: 17 April 2023

Twitter sentiment analysis using hybrid gated attention recurrent network

- Nikhat Parveen 1 , 2 ,

- Prasun Chakrabarti 3 ,

- Bui Thanh Hung 4 &

- Amjan Shaik 2 , 5

Journal of Big Data volume 10, Article number: 50 (2023)

Sentiment analysis is one of the most active research topics in data mining. Among the many social media platforms now available, Twitter is a significant tool for sharing and acquiring people’s opinions, emotions, views, and attitudes towards particular entities, which has made sentiment analysis an important task in the natural language processing (NLP) domain. Although different techniques have been developed for sentiment analysis, there is still room for improvement in accuracy and system efficacy. To address this, the proposed architecture combines optimization-based feature selection with deep learning-based sentiment analysis. In this work, the Sentiment140 dataset is used to analyse the performance of the proposed gated attention recurrent network (GARN) architecture. First, the dataset is pre-processed to clean and filter the data. Then, a term weight-based feature extraction model termed Log Term Frequency-based Modified Inverse Class Frequency (LTF-MICF) is used to extract sentiment-based features from the pre-processed data. In the third phase, a hybrid mutation-based white shark optimizer (HMWSO) is introduced for feature selection. Using the selected features, the sentiment classes (positive, negative, and neutral) are classified using the GARN architecture, which combines recurrent neural networks (RNN) and attention mechanisms. Finally, the proposed classifier is compared with existing classifiers. With the proposed GARN, the evaluated performance metrics are accuracy 97.86%, precision 96.65%, recall 96.76% and F-measure 96.70%.

Introduction

Sentiment Analysis (SA) uses text analysis, NLP (Natural Language Processing), and statistics to evaluate the user’s sentiments. SA is also called emotion AI or opinion mining [ 1 ]. The term ‘sentiment’ refers to feelings, thoughts, or attitudes expressed about a person, situation, or thing. SA is one of the NLP techniques used to identify whether the obtained data or information is positive, neutral or negative. Business experts frequently use it to monitor sentiments in order to gauge brand reputation, analyse social data and understand customer needs [ 2 , 3 ]. Over recent years, the amount of information uploaded or generated online has rapidly increased due to the enormous number of Internet users [ 4 , 5 ].

Globally, with the emergence of technology, social media sites [ 6 , 7 ] such as Twitter, Instagram, Facebook, LinkedIn and YouTube have been used by people to express their views or opinions about products, events or targets. Nowadays, Twitter is the global micro-blogging platform greatly preferred by users to share their opinions in the form of short messages called tweets [ 8 ]. Twitter holds 152 M (million) daily active users and 330 M monthly active users, with 500 M tweets sent daily [ 9 ]. Tweets therefore provide a vast quantity of sentiment data for analysis. Twitter is an effective OSN (online social network) for disseminating information and user interactions. Twitter sentiments significantly influence diverse aspects of our lives [ 10 ]. SA and text classification aim at extracting textual information and further categorizing its polarity as positive (P), negative (N) or neutral (Ne).

NLP techniques are often used to retrieve information from text or tweet content. NLP-based sentiment classification is the procedure in which the machine (computer) extracts the meaning of each sentence generated by a human. Manual TSA (Twitter Sentiment Analysis) is time-consuming and requires many experts for tweet labelling. Hence, automated models are developed to overcome these challenges. ML (machine learning) algorithms [ 11 , 12 ], such as SVM (Support Vector Machine), MNB (Multinomial Naïve Bayes), LR (Logistic Regression) and NB (Naïve Bayes), have been used in the analysis of online sentiments. Although these methods have shown good performance, they are slow and need considerable time for training.

DL models are introduced to classify Twitter sentiments effectively. DL is a subset of ML that utilizes multiple algorithms to solve complicated problems. DL uses a chain of progressive events and permits the machine to deal with vast data with little human interaction. DL-based sentiment analysis offers accurate results and can be applied to various applications such as movie recommendations, product predictions and emotion recognition [ 13 , 14 , 15 ]. Such innovations have motivated several researchers to introduce DL in Twitter sentiment analysis.

SA (Sentiment Analysis) is concerned with recognizing and classifying the polarity or opinions of text data. Nowadays, people widely share their opinions and sentiments on social sites. Thus, a massive amount of data is generated online, and effectively mining the online data is essential for retrieving quality information. Analyzing online sentiments can create a combined opinion on certain products. However, TSA (Twitter Sentiment Analysis) is challenging for multiple reasons. The shortness of tweets, owing to the maximum character limit, is a major issue. The presence of misspellings, slang and emoticons in tweets requires an additional pre-processing step for filtering the raw data. Also, selecting a feature extraction model is challenging, which further impacts sentiment classification. Therefore, this work aims to develop a new feature extraction and selection approach integrated with a hybrid DL classification model for accurate tweet sentiment classification. The existing research works [ 16 , 17 , 18 , 19 , 20 , 21 ] focus on DL-based TSA but have not attained significant results because of smaller dataset usage and slower manual text labelling. Datasets with unwanted details and spaces also reduce the classification algorithm’s efficiency. Further, the dimension occupied by the extracted features degrades the efficiency of a DL approach. Hence, to overcome such issues, this work aims to develop an effective DL algorithm for performing Twitter SA. Pre-processing is a major contributor to this architecture, as it can enhance DL efficiency by removing unwanted details from the dataset. Pre-processing also reduces the processing time of the feature extraction algorithm. Following that, an optimization-based feature selection process is introduced, which reduces the effort of analyzing irrelevant features. Unlike existing algorithms, the proposed GARN can efficiently analyse the text-based features. Further, combining the attention mechanism with DL enhances the overall efficiency of the proposed DL algorithm, because the attention mechanism has a greater ability to learn the selected features while reducing the complexity of the model. This merit motivates integrating the attention mechanism with the RNN to achieve effective performance.

The major objectives of the proposed research are:

To introduce a new deep model Hybrid Mutation-based White Shark Optimizer with a Gated Attention Recurrent Network (HMWSO-GARN) for Twitter sentiment analysis.

To extract the feature set with a new term weighting-based feature extraction (TW-FE) approach named Log Term Frequency-based Modified Inverse Class Frequency (LTF-MICF) and compare it with traditional feature extraction models.

To identify the polarity of tweets with the bio-inspired feature selection and deep classification model.

To evaluate the performance using different metrics and compare it with traditional DL procedures on TSA.

Related works

Some of the works related to DL-based Twitter sentiment analysis are as follows.

Alharbi et al. [ 16 ] presented the analysis of Twitter sentiments using a DNN (deep neural network) based approach called CNN (Convolutional Neural Network). The classification of tweets was processed based on dual aspects, such as using social activities and personality traits. The sentiment (P, N or Ne) analysis was demonstrated with the CNN model, where the input layer involves the feature lists and the pre-trained word embedding (Word2Vec). The dual datasets used for processing were SemEval-2016_1 and SemEval-2016_2. The accuracy obtained by CNN was 88.46%, whereas the existing methods achieved less accuracy than CNN. The accuracy of existing methods is LSTM (86.48%), SVM (86.75%), KNN (k-nearest neighbour) (82.83%), and J48 (85.44%), respectively.

Tam et al. [ 17 ] developed a Convolutional Bi-LSTM model for sentiment classification on Twitter data. Here, the integration of CNN and Bi-LSTM was characterized by extracting local high-level features. The input layer gets the text input and slices it into tokens. Each token was transformed into NV (numeric values). Next, the pre-trained WE (word embeddings), such as GloVe and W2V (word2vector), were used to create the word vector matrix. The important words were extracted using the CNN model, and the feature set was further minimized using the max-pooling layer. The Bi-LSTM (backward, forward) layers were utilized to learn the textual context. The dense layer (DeL) was included after the Bi-LSTM layer to interconnect the input data with the output using weights. The performance was evaluated using the datasets TLSA (Twitter Label SA) and SST-2 (Stanford Sentiment Treebank). The accuracy was 94.13% with the TLSA dataset and 91.13% with the SST-2 dataset.

Chugh et al. [ 18 ] developed an improved DL model for information retrieval and classification of sentiments. The hybridized optimization algorithm SMCA was the integration of SMO (Spider Monkey Optimization) and CSA (Crow Search Algorithm). The presented DRNN (DeepRNN) was trained using the SMCA algorithm. Here, the sentiment categorization was processed with DeepRNN-SMCA and the information retrieval was done with FuzzyKNN. The datasets used were the Amazon mobile reviews dataset and the telecom tweets dataset. For sentiment classification, the accuracy obtained on the first dataset was 0.967, and that on the latter was 0.943. For IR (information retrieval), the accuracy was 0.831 on dataset 1 and 0.883 on dataset 2.

Alamoudi et al. [ 19 ] performed aspect-based SA and sentiment classification using WE (word embeddings) and DL. The sentiment categorization involves both ternary and binary classes. Initially, the YELP review dataset was prepared and pre-processed for classification. The feature extraction was modelled with TF-IDF, BoW and GloVe WE. The NB and LR models were used for the first feature set (TF-IDF and BoW features); then, the GloVe features were modelled using diverse models such as ALBERT, CNN, and BERT for the ternary classification. Next, aspect and sentence-based binary SA was executed. The WE vectors for sentences and aspects were obtained with the GloVe approach. The similarity between aspect and sentence vectors was measured using cosine similarity, and binary aspects were classified. The highest accuracy (98.308%) was obtained with the ALBERT model on a YELP 2-class dataset, whereas the BERT model gained 89.626% accuracy on a YELP 3-class dataset.

Tan et al. [ 20 ] introduced a hybrid robustly optimized BERT approach (RoBERTa) with LSTM for analyzing the sentiment data with a transformer and an RNN. The textual data was processed with word embedding, and tokenization of the subwords was performed with the RoBERTa model. The long-distance Tm (temporal) dependencies were encoded using the LSTM model. DA (data augmentation) based on pre-trained word embedding was developed to synthesize multiple lexical samples and oversample the minority class. DA solves the problem of an imbalanced dataset by providing more lexical training samples. The Adam optimization algorithm was used to perform hyperparameter tuning, leading to better SA results. The implementation datasets were the Sentiment140, Twitter US Airline, and IMDb datasets. The overall accuracy gained with these datasets was 89.70%, 91.37% and 92.96%, respectively.

Hasib et al. [ 21 ] proposed a novel DL-based sentiment analysis of Twitter data for the US airline service. The tweets were collected from the Kaggle dataset CrowdFlower Twitter US Airline Sentiment. Two models were used for feature extraction: DNN and convolutional neural network (CNN). Before applying the four layers, the tweets were converted to metadata and TF-IDF. The four layers of the DNN are the input, covering, and output layers. CNN-based feature extraction follows these phases: data pre-processing, embedded features, CNN and integration features. The overall precision is 85.66%, recall is 87.33%, and F1-score is 87.66%.

Sentiment analysis is used to identify the attitude expressed in text samples. To identify such attitudes, a novel term weighting scheme was developed by Carvalho and Guedes in [ 24 ], which was an unsupervised weighting scheme (UWS). It can process the input without considering the weighting factor. SWS (Supervised Weighting Schemes) were also introduced, which utilize the class information related to the calculated term weights. They showed a more promising outcome than existing weighting schemes.

Learning from online courses has become mainstream in the learning domain, and analysing users’ comments is considered key to enhancing the efficiency and quality of online courses. Identifying sentiments in these comments was therefore considered an efficient way to improve the learning process of online courses. With this as the major goal, an ensemble learning architecture was introduced by Pu et al. in [ 34 ], which utilizes GloVe and Word2Vec to obtain vector representations. Then, deep features were extracted using a CNN (convolutional neural network) and a bidirectional long short-term memory network (Bi-LSTM). The suggested models were integrated using ensemble multi-objective gray wolf optimization (MOGWO). It achieved a 91% F1-score.

In binary sentiment analysis, sentiment dictionaries and representations such as BERT, word2vec and TF-IDF were used to convert movie and product reviews into vectors. A three-way decision in binary sentiment analysis separates the data samples into an uncertain (UNC) region, a positive (POS) region and a negative (NEG) region. The UNC region benefits from this three-way decision model, which enhances the effect of the binary sentiment analysis process. For optimal feature selection, Chen et al. [ 35 ] developed a three-way decision model that obtains the optimal feature representation of the positive and negative domains for sentiment analysis. Simulations were carried out on both the Amazon and IMDB databases to show the effectiveness of the proposed methodology.

Advancements in big data analytics (BDA) are driven by the large amount of data that people generate in their day-to-day lives. Linguistic-based tweets, feature extraction and the sentimental texts placed within tweets are analysed by the sentiment analysis (SA) process. Jain et al. [ 36 ] developed a model comprising pre-processing, feature extraction, feature selection and classification. The Hadoop MapReduce tool is used to manage the big data, and then pre-processing is applied to remove unwanted words from the text. For feature extraction, a TF-IDF vector is utilized, and Binary Brain Storm Optimization (BBSO) is used to select the relevant features from the group of vectors. Finally, the incidence of both positive and negative sentiments is classified using Fuzzy Cognitive Maps (FCMs). Table 1 shows the comparative analysis of Twitter sentiment analysis using DL techniques.

Problem statement

There are many problems related to Twitter sentiment analysis using DL techniques. The authors of [ 16 ] used a DL model to perform sentiment classification on Twitter data. To classify such data, this method analysed each user’s behavioural information. However, this method struggled to interpret exact tweet words from the massive tweet corpus, which reduced the efficiency of the classification algorithm. ConvBiLSTM was introduced in [ 17 ], which used GloVe and word2vec-based features for sentiment classification. However, the extracted features are not sufficient to achieve satisfactory accuracy. Processing time reduction was considered a major objective in [ 18 ], which utilizes DeepRNN for sentiment classification. But it fails to reduce the dimension occupied by the extracted features, which makes several valuable features fall within the local optimum. DL and word embedding processes were combined in [ 19 ], which utilizes Yelp reviews for processing. It has shown efficient performance for two classes but fails to provide better accuracy for three-class classification. Recently, a hybrid LSTM architecture was developed in [ 20 ], which has shown flexible processing for sentiment classification but takes a huge amount of time to process large datasets. DNN-based feature extraction and CNN-based sentiment classification were performed in [ 21 ], which did not show more efficient performance than other algorithms. Further, it concentrated on only two classes.

Several existing works fail to achieve efficient processing time, complexity and accuracy on large datasets. Further, the extraction of low-level and unwanted features reduces the efficiency of the classifier, and using all extracted features occupies a large dimension. These demerits make the existing algorithms unsuitable for efficient processing and open a research space for an efficient combined algorithm for Twitter data analysis. To overcome these issues, the proposed architecture combines an RNN and an attention mechanism. The features required for classification are extracted using LTF-MICF. Then, the dimension occupied by the large set of extracted features is reduced using the HMWSO algorithm. This algorithm processes the features with lower time complexity and provides a better optimal feature selection process. The selected features enhance the performance of the proposed classifier on the large dataset and achieve high accuracy with a low misclassification error rate.

Proposed methodology

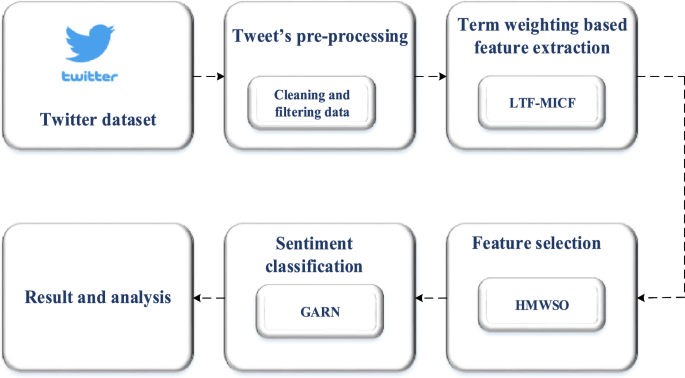

For sentiment classification of tweets, a DL technique called the gated attention recurrent network (GARN) is proposed. The publicly accessible Twitter dataset (Sentiment140 dataset) with sentiment tweets is initially collected and given as input. After collecting the data, the next stage is pre-processing the tweets. In the pre-processing stage, tokenization, stopwords removal, stemming, slang and acronym correction, removal of numbers, punctuation and symbol removal, conversion of uppercase to lowercase characters, and URL, hashtag and user mention removal are done. The pre-processed dataset then acts as input for the next process. Based on term frequency, a term weight is allocated to each term in the dataset using the Log Term Frequency-based Modified Inverse Class Frequency (LTF-MICF) extraction technique. Next, the Hybrid Mutation-based White Shark Optimizer (HMWSO) is used to select the optimal term weights. Finally, the output of HMWSO is fed into the gated attention recurrent network (GARN) for sentiment classification with three different classes. Figure 1 shows a diagrammatic representation of the proposed methodology.

Architecture diagram

Tweets pre-processing

Pre-processing converts the raw data into short, clean text so that other processes such as classification, detecting unwanted news and sentiment analysis can be performed, since Twitter users use different styles to post their tweets. Some may post tweets with abbreviations, symbols, URLs, hashtags, and punctuation. Tweets may also contain emojis, emoticons, or stickers to express the user’s sentiments and feelings. Sometimes tweets take a hybrid form, combining abbreviations, symbols and URLs. Such symbols, abbreviations, and punctuation should be removed from the tweet before the dataset is classified further. The pre-processing steps applied to the tweet dataset are tokenization, stopwords removal, stemming, slang and acronym correction, removal of numbers, punctuation and symbol removal, noise removal, URL and hashtag removal, replacing long characters, conversion of upper case to lower case, and lemmatization.

Tokenization

Tokenization [ 28 ] is the splitting of a text into small words, symbols, phrases and other meaningful units known as tokens. These tokens are used as input for further processing. Another important use of tokenization is that it can identify meaningful words. The difficulty of tokenization depends on the type of language used. For example, in languages such as English and French, words are separated by white spaces. In other languages, such as Chinese and Thai, words are not separated. The tokenization process is carried out with the NLTK Python library. In this phase, the data is processed in three steps: first, the text document is converted into word counts; secondly, data cleansing and filtering occur; and finally, the document is split into tokens or words.

The example provided below illustrates the original tweet before and after performing tokenization:

Before tokenization

Deep learning is a technology which trains the machine to behave naturally like a human being.

After tokenization

Deep, learning, is, a, technology, which, trains, the, machine, to, behave, naturally, like, a, human, being.

Numerous tools are available to tokenize a text document. Some of them are as follows:

NLTK word tokenize

Nlpdotnet tokenizer

TextBlob word tokenize

Mila tokenizer

Pattern word tokenize

MBSP word tokenize
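As a minimal sketch of the tokenization step, the snippet below splits a sentence into word tokens with a regular expression from Python's standard library. The pattern and the `tokenize` helper are illustrative assumptions rather than the authors' implementation; the text states only that NLTK was used, and NLTK's `word_tokenize` function plays the same role.

```python
import re

# Illustrative pattern (an assumption): a token is a run of letters,
# digits or apostrophes; everything else acts as a separator.
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9']+")

def tokenize(text):
    """Split text into word tokens, dropping whitespace and punctuation."""
    return TOKEN_PATTERN.findall(text)

sentence = ("Deep learning is a technology which trains the machine "
            "to behave naturally like a human being.")
tokens = tokenize(sentence)
print(tokens)
```

A whitespace-and-punctuation rule like this only suits languages that separate words with spaces; languages such as Chinese or Thai would need a dedicated segmenter.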

Stopwords removal

Stopword removal [ 28 ] is the process of removing frequently used words that carry little meaning in a text document. Stopwords such as ‘are’, ‘this’, ‘that’, ‘and’ and ‘so’ occur frequently in sentences. These words typically include pronouns, articles and prepositions. Such words are not used for further processing, so removing them is required. If such words are not removed, the sentence seems heavy and becomes less important for the analyst. Also, they are not considered keywords in Twitter analysis applications. Several methods exist to remove stopwords from a document:

Classic method

Mutual information (MI) method

Term based random sampling (TBRS) method

The classic method removes stopwords by looking them up in a pre-compiled list. Z-methods are based on Zipf’s law. In Z-methods, three removal processes occur: removing the most frequently used words, removing the words which occur once in a sentence, and removing words with a low inverse document frequency. In the MI method, the words with low mutual information are removed. In the TBRS method, words are randomly chosen from the document and each term is ranked using the Kullback–Leibler divergence formula, which is represented as;

where \(Q_{l} (t)\) is the normalized term frequency (NTF) of the term \(t\) within a mass \(l\) , and \(Q(t)\) is the NTF of term \(t\) in the entire document. Finally, using this equation, the lowest-ranked terms are taken as the stopword list, from which duplicates are removed.
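The ranking step can be sketched as follows, assuming the weight of a term takes the usual Kullback–Leibler form \(Q_{l}(t) \cdot \log_{2} \left( Q_{l}(t) / Q(t) \right)\). Both this exact form (including the base-2 logarithm) and the helper names `normalized_tf` and `kl_term_weights` are assumptions made for illustration; only the symbols \(Q_{l}(t)\) and \(Q(t)\) come from the definitions above.

```python
import math
from collections import Counter

def normalized_tf(tokens):
    """Normalized term frequency: term count divided by the total token count."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {term: count / total for term, count in counts.items()}

def kl_term_weights(chunk_tokens, corpus_tokens):
    """Weight each chunk term by Q_l(t) * log2(Q_l(t) / Q(t)) (assumed form)."""
    q_chunk = normalized_tf(chunk_tokens)    # Q_l(t): NTF within the sampled chunk
    q_corpus = normalized_tf(corpus_tokens)  # Q(t): NTF within the whole collection
    return {t: q * math.log2(q / q_corpus[t]) for t, q in q_chunk.items()}

corpus = "the cat sat on the mat the dog sat on the rug".split()
chunk = corpus[:6]  # stands in for a randomly sampled chunk of the corpus
weights = kl_term_weights(chunk, corpus)

# The lowest-weighted terms are the stopword candidates.
candidates = sorted(weights, key=weights.get)[:2]
print(candidates)
```

The chunk must be drawn from the corpus itself so that every chunk term has a non-zero \(Q(t)\). In practice the sampling would be repeated many times and the candidate lists merged, with duplicates removed.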

Stemming

Removing prefixes and suffixes from a word is performed using the stemming method. It can also be defined as detecting the root or stem of a word by removing its affixes. For example, the words ‘processed’ and ‘processing’ can both be stemmed to the single word ‘process’ [ 28 ]. Two points must be considered while performing stemming: words with different meanings must be kept separate, and morphological forms of a word that share the same meaning must be mapped to the same stem. Stemming algorithms are divided into three groups: truncating, statistical, and mixed methods. A truncating method removes the suffix from a word, for example to convert a plural word into its singular form, and a set of rules must be applied to do so.

Different stemmer algorithms are used under the truncating method, including the Lovins stemmer, Porter’s stemmer, the Paice and Husk stemmer, and the Dawson stemmer. The Lovins stemmer removes the longest suffix from a word. Its drawback is that it consumes more time to process. Porter’s stemmer removes suffixes from a word by applying many rules. If an applied rule is satisfied, the suffix is automatically removed. The algorithm consists of 60 rules and is faster than the Lovins algorithm. Paice and Husk is an iterative algorithm that consists of 120 rules to remove the last characters of the suffixed word. This algorithm performs two operations, namely, deletion and replacement. The Dawson algorithm keeps the suffixes in reverse order, indexed by their length and last character. The statistical methods include the N-gram stemmer, the HMM stemmer, and the YASS stemmer. In the mixed methods, inflectional and derivational approaches are used.
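The idea behind a truncating stemmer can be illustrated with a handful of suffix rules. The sketch below is a toy example, not Porter's algorithm or any of the stemmers named above: the rule table and the `stem` helper are hypothetical, and a production pipeline would more likely rely on an existing implementation such as NLTK's `PorterStemmer`.

```python
# Hypothetical suffix rules, tried in order; each entry is (suffix, replacement).
SUFFIX_RULES = [
    ("sses", "ss"),  # "classes" -> "class"
    ("ies", "y"),    # "stories" -> "story"
    ("ing", ""),     # "processing" -> "process"
    ("ed", ""),      # "processed" -> "process"
    ("ss", "ss"),    # keep a final double s, so "process" stays "process"
    ("s", ""),       # "tweets" -> "tweet"
]

def stem(word, min_stem_len=3):
    """Apply the first matching rule that leaves at least min_stem_len characters."""
    for suffix, replacement in SUFFIX_RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem_len:
            return word[: len(word) - len(suffix)] + replacement
    return word

stems = [stem(w) for w in ["processed", "processing", "process"]]
print(stems)
```

The minimum stem length guards against over-stemming short words such as "is", which is one way of keeping words with different meanings separate.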

Slang and acronym correction

Users typically use acronyms and slang to limit the number of characters in a tweet posted on social media [ 29 ]. The use of acronyms and slang is an important issue because users do not all expand an acronym to the same full form, and everyone writes tweets in a different style or slang. Sometimes, a posted acronym may have other meanings or be associated with other problems. So, these acronyms should be interpreted and replaced with meaningful words so that the machine can easily understand the acronym’s meaning.

An example illustrates the original tweet with acronyms and slang before and after removal.

Before removal : ROM permanently stores information in the system, whereas RAM temporarily stores information in the system.

After removal : Read Only Memory permanently stores information in the system, whereas Random Access Memory temporarily stores information in the system.
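Acronym correction of this kind amounts to a dictionary lookup. The following sketch assumes a small hand-written lexicon; the `ACRONYMS` table and the `expand_acronyms` helper are hypothetical, and a real system would need a much larger, curated list and a way to resolve acronyms with several meanings.

```python
import re

# Hypothetical lookup table used only for illustration.
ACRONYMS = {
    "ROM": "Read Only Memory",
    "RAM": "Random Access Memory",
}

def expand_acronyms(text, lexicon=ACRONYMS):
    """Replace each alphabetic word found in the lexicon by its expansion."""
    return re.sub(
        r"\b[A-Za-z]+\b",
        lambda match: lexicon.get(match.group(0), match.group(0)),
        text,
    )

tweet = ("ROM permanently stores information in the system, "
         "whereas RAM temporarily stores information in the system.")
expanded = expand_acronyms(tweet)
print(expanded)
```

Matching on word boundaries keeps adjacent punctuation intact, so an acronym followed by a comma or full stop is still expanded.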

Removal of numbers

Removal of numbers in the Twitter dataset is a process of deleting the occurrence of numbers between any words in a sentence [ 29 ].

An example illustrates the original tweet before and after removing numbers.

Before removal : My ink “My Way…No Regrets” Always Make Happiness Your #1 Priority.

After removal : My ink “My Way … No Regrets” Always Make Happiness Your # Priority.

Once removed, the tweet will no longer contain any numbers.
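A minimal sketch of this step, assuming that every run of digits is to be deleted regardless of its position (the `remove_numbers` helper is illustrative):

```python
import re

def remove_numbers(text):
    """Delete every run of digits, leaving all other characters untouched."""
    return re.sub(r"\d+", "", text)

cleaned = remove_numbers("Always Make Happiness Your #1 Priority.")
print(cleaned)
```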

Punctuation and symbol removal

The punctuation and symbols are removed in this stage. Punctuations such as ‘.’, ‘,’, ‘?’, ‘!’, and ‘:’ are removed from the tweet [ 29 , 30 ].

An example illustrates the original tweet before and after removing punctuation marks.

After removal : My ink My Way No Regrets Always Make Happiness Your Priority.

After removal, the tweet will not contain any punctuation. Symbol removal is the process of removing all the symbols from the tweet.

An example illustrates the original tweet before and after removing symbols.

After removal : wednesday addams as a disney princess keeping it.

After removal, there would not be any symbols in the tweet.
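Both removals can be sketched with a single regular expression, under the assumption that everything other than letters, digits and whitespace counts as punctuation or a symbol. The `remove_punctuation_and_symbols` helper is illustrative and is not taken from the paper.

```python
import re

def remove_punctuation_and_symbols(text):
    """Keep only word characters and whitespace, then collapse repeated spaces."""
    # \w keeps letters, digits and underscores; everything else becomes a space.
    stripped = re.sub(r"[^\w\s]", " ", text)
    return re.sub(r"\s+", " ", stripped).strip()

tweet = "My ink “My Way … No Regrets” Always Make Happiness Your # Priority."
cleaned = remove_punctuation_and_symbols(tweet)
print(cleaned)
```

Replacing each symbol with a space, rather than deleting it outright, avoids gluing neighbouring words together when a symbol sits between them.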

Conversion of uppercase into lowercase characters

In this process, all the uppercase characters are replaced with lowercase characters [ 30 ].

An example illustrates the original tweet before and after converting uppercase characters into lowercase characters.

After removal : my ink my way no regrets always make happiness your priority.

After removal, the tweet will no longer contain capital letters.

URL, hashtag & user mention removal

For clear reference, Twitter users post tweets with various URLs and hashtags [ 29 , 30 ]. This information is helpful for people but is mostly noise, which cannot be used for further processing. The example provided below illustrates the original tweet with a URL, hashtag and user mention before and after removal:

Before removal : This gift is given by #ahearttouchingperson for securing @firstrank. Click on the below link to know more https://tinyurl.com/giftvoucher .

After removal : This is a gift given by a heart touching person for securing first rank. Click on the below link to know more.
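A simple way to approximate this step is with regular expressions. The patterns and the `strip_twitter_markup` helper below are assumptions made for illustration. Note that the sketch drops hashtags and mentions entirely, whereas the example above keeps their words; recovering "a heart touching person" from a hashtag would need an additional word-segmentation step that is not shown here.

```python
import re

# Illustrative patterns (assumptions, not the authors' rules).
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")
MENTION_PATTERN = re.compile(r"@\w+")
HASHTAG_PATTERN = re.compile(r"#\w+")

def strip_twitter_markup(text):
    """Remove URLs, user mentions and hashtags, then tidy the leftover spacing."""
    for pattern in (URL_PATTERN, MENTION_PATTERN, HASHTAG_PATTERN):
        text = pattern.sub(" ", text)
    text = re.sub(r"\s+", " ", text).strip()
    # Drop the space left in front of closing punctuation.
    return re.sub(r"\s+([.,!?])", r"\1", text)

tweet = ("This gift is given by #ahearttouchingperson for securing @firstrank. "
         "Click on the below link to know more https://tinyurl.com/giftvoucher .")
cleaned = strip_twitter_markup(tweet)
print(cleaned)
```

If this step is combined with punctuation removal, it should run first: once the punctuation has been stripped, a URL is no longer recognizable as one.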

Term weighting-based feature extraction

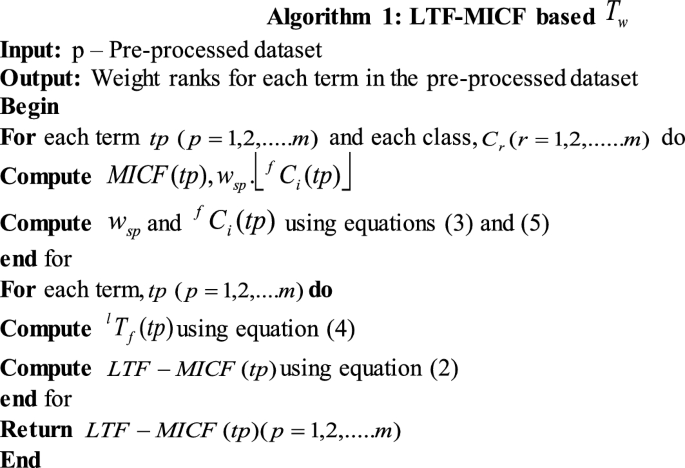

After pre-processing, features are extracted from the pre-processed text documents based on the term weighting \(T_{w}\) [ 22 ]. A new term weighting scheme, Log term frequency-based modified inverse class frequency (LTF-MICF), is employed in this research paper for feature extraction based on term weight. The technique integrates two different term weighting schemes: log term frequency (LTF) and modified inverse class frequency (MICF). Term frequency \(^{f} T\) measures how frequently a term occurs in the document. However, \(^{f} T\) alone is insufficient because frequently occurring terms would carry excessive weight in the document. The proposed hybrid feature extraction technique overcomes this issue by integrating \(^{f} T\) with MICF, an effective \(T_{w}\) approach. Inverse class frequency \(^{f} C_{i}\) is the inverse ratio of the number of classes in which the term occurs in the training tweets to the total number of classes. The algorithm for the TW-FE technique is shown in Algorithm 1 [ 22 ].

Two steps are involved in calculating LTF \(^{l} T_{f}\) . The first step is to calculate the \(^{f} T\) of each term in the pre-processed dataset. The second step is, applying log normalization to the output of the computed \(^{f} T\) data. The modified version of \(^{f} C_{i}\) , the MICF is calculated for each term in the document. MICF is said to be executed then;each term in the document should have different class-particular ranks, which should possess differing contributions to the total term rank. It is necessary to assign dissimilar weights for dissimilar class-specific ranks. Consequently, the sum of the weights of all class-specific ranks is employed as the total term rank. The proposed formula for \(T_{w}\) using LTF-based MICF is represented as follows [ 22 ];

where a specific weighting factor is denoted \(w_{sp}\) for each \(tp\) for class \(C_{r}\) , which can be clearly represented as;

The method used to assign a weight for a given dataset is known as the weighting factor (WF). Where the number of tweets \(s_{i}\) in class \(C_{r}\) which contains pre-processed terms \(tp\) is denoted as \(s_{i} \mathop{t}\limits^{\rightharpoonup}\) . The number of \(s_{i}\) in other classes, which contains \(tp\) is denoted as \(s_{i} \mathop{t}\limits^{\leftarrow}\) . The number of \(s_{i}\) in-class \(C_{r}\) , which do not possess, \(tp\) is denoted as \(s_{i} \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{t}\) . The number of \(s_{i}\) in other classes, which do not possess, \(tp\) is denoted as \(s_{i} \tilde{t}\) . To eliminate negative weights, the constant ‘1’ is used. In extreme cases, to avoid a zero-denominator issue, the minimal denominator is set to ‘1’ if \(s_{i} \mathop{t}\limits^{\leftarrow}\) = 0 or \(s_{i} \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{t}\) = 0. The formula for \(^{l} T_{f} (tp)\) and \(^{f} C_{i} (tp)\) can be presented as follows [ 22 ];

where raw count of \(tp\) on \(s_{i}\) is denoted as \(^{f} T(tp,s_{i} )\) , i.e., the total times of \(tp\) occurs on \(s_{i}\) .

where \(r\) refers to the total number of classes in \(s_{i}\), and \(C(tp)\) is the number of classes in which \(tp\) occurs. After \(T_{w}\), the dataset features are represented as \(f_{j} = \{ f_{1}, f_{2}, f_{3}, \ldots, f_{m} \}\), where \(f_{1}, f_{2}, f_{3}, \ldots, f_{m}\) denote the weighted terms in the pre-processed dataset. The computed rank values of each term in the tweet documents are used in the subsequent steps.
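To make the weighting scheme concrete, the following Python sketch computes an LTF-MICF-style weight for every term of every tweet. The exact formulas are assumptions chosen to agree with the description above, not a transcription of the paper's equations: LTF is taken as \(\log(1 + {}^{f}T)\), inverse class frequency as \(\log(1 + r / C(tp))\), and the class-specific weighting factor as \(\log(1 + (a / \max(1, b)) \cdot (a / \max(1, c)))\), where `a`, `b` and `c` are the counts of tweets in the class containing the term, in other classes containing the term, and in the class not containing the term. The function name `ltf_micf` is hypothetical.

```python
import math
from collections import Counter


def ltf_micf(docs, labels):
    """docs: list of token lists; labels: one class label per document.
    Returns one {term: weight} dict per document (assumed LTF-MICF form)."""
    classes = sorted(set(labels))
    r = len(classes)
    size = Counter(labels)                      # tweets per class
    df = {c: Counter() for c in classes}        # per-class document frequency
    for tokens, c in zip(docs, labels):
        df[c].update(set(tokens))

    vocab = set().union(*(df[c].keys() for c in classes))
    micf = {}
    for t in vocab:
        classes_with_t = sum(1 for c in classes if df[c][t] > 0)
        icf = math.log(1 + r / classes_with_t)  # inverse class frequency
        total = 0.0
        for c in classes:
            a = df[c][t]                                     # in class, with term
            b = sum(df[o][t] for o in classes if o != c)     # other classes, with term
            not_c = size[c] - a                              # in class, without term
            # The constant 1 avoids negative weights; max(1, .) avoids zero denominators.
            w_sp = math.log(1 + (a / max(1, b)) * (a / max(1, not_c)))
            total += w_sp * icf
        micf[t] = total

    weighted = []
    for tokens in docs:
        tf = Counter(tokens)
        weighted.append({t: math.log(1 + n) * micf[t] for t, n in tf.items()})
    return weighted


docs = [["good", "good", "day"], ["bad", "day"]]
weights = ltf_micf(docs, ["pos", "neg"])
print(weights[0])
```

In this toy input, "good" appears in only one class and therefore receives a higher weight than "day", which appears in both.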

Feature selection

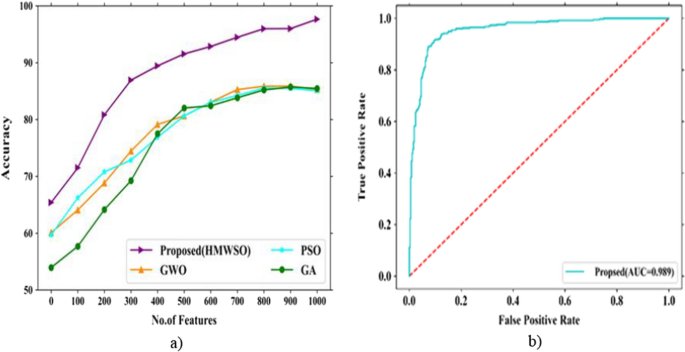

The existence of irrelevant features in the data can reduce classification accuracy and cause the model to learn those irrelevant features. This is treated as an optimization problem, which can be avoided only by selecting optimal solutions from the processed dataset. Therefore, a feature selection algorithm, the White Shark Optimizer with a hybrid mutation strategy, is used to perform feature selection.

White Shark Optimizer (WSO)

WSO is based on the foraging behaviour of the white shark [ 23 ]. The great white shark locates prey deep in the ocean by sensing the motion of the waves and other cues. The white shark catches prey based on three behaviours: (1) the velocity of the shark in catching the prey, (2) the search for the best optimal food source, and (3) the movement of other sharks toward the shark that is nearest to the optimal food source. The initial white shark population is represented as;

where \(W_{q}^{p}\) is the initial parameter of the \(p_{th}\) white shark in the \(q_{th}\) dimension. The upper and lower bounds in the \(q_{th}\) dimension are denoted as \(up_{q}\) and \(lb_{q}\), respectively, and \(r\) denotes a random number in the range [0, 1].
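A minimal sketch of this initialization step is given below, assuming the usual uniform form \(W_{q}^{p} = lb_{q} + r \times (up_{q} - lb_{q})\), which is consistent with the symbols defined above. The function name `init_population` and the `seed` parameter are illustrative assumptions.

```python
import random


def init_population(m, lb, ub, seed=None):
    """Create m sharks; each coordinate q is drawn uniformly from [lb[q], ub[q]]."""
    rng = random.Random(seed)
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
        for _ in range(m)
    ]


population = init_population(m=5, lb=[0.0, -1.0], ub=[1.0, 1.0], seed=0)
print(len(population), len(population[0]))
```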

The velocity with which the white shark locates prey, based on the motion of the sea waves, is represented as [ 23 ];

where \(s = 1,2,....m\) is the index of a white shark with a population size of \(m\). The new velocity of the \(p_{th}\) shark in the \((s + 1)_{th}\) step is denoted as \(vl_{s + 1}^{p}\). The initial speed of the \(p_{th}\) shark in the \(s_{th}\) step is denoted as \(vl_{s}^{p}\). The global best position achieved by any shark in the \(s_{th}\) step is denoted as \(W_{{gbest_{s} }}\). The initial position of the \(p_{th}\) shark in the \(s_{th}\) step is denoted as \(W_{s}^{p}\). The best position of the \(p_{th}\) shark and the index vector on attaining the best position are denoted as \(W_{best}^{{vl_{s}^{p} }}\) and \(vc^{i}\). \(C_{1}\) and \(C_{2}\) are uniform random numbers in the interval [0, 1]. \(F_{1}\) and \(F_{2}\) are the forces of the shark that control the effect of \(W_{{gbest_{s} }}\) and \(W_{best}^{{vl_{s}^{p} }}\) on \(W_{s}^{p}\). \(\mu\) represents the convergence factor of the shark. The index vector of the white shark is represented as;

where \(rand(1,t)\) is a vector of random numbers drawn from a uniform distribution in the interval [0, 1]. The forces of the shark that control this effect are represented as follows;

The initial and maximum iteration numbers are denoted as \(u\) and \(U\), whereas the white shark’s current and subordinate velocities are denoted as \(F_{\min }\) and \(F_{\max }\). The convergence factor is represented as;

where \(\tau\) is defined as the acceleration coefficient. The strategy for updating the position of the white shark is represented as follows;

Here, the left-hand side is the new position of the \(p_{th}\) shark in iteration \((s + 1)\), \(\neg\) represents the negation operator, and \(c\) and \(d\) represent binary vectors. The lower and upper bounds of the search space are denoted as \(lo\) and \(ub\). \(W_{0}\) and \(fr\) denote the logical vector and the frequency at which the shark moves. The binary and logic vectors are expressed as follows;

The frequency at which the white shark moves is represented as;

\(fr_{\max }\) and \(fr_{\min }\) represent the maximum and minimum frequency rates. The increase in force at each iteration is represented as;

where \(MV\) represents the weight of the terms in the document.

The best optimal solution is represented as;

where the updated position of the \(p_{th}\) white shark as it follows the food source is denoted as \(W_{s + 1}^{\prime p}\). The term \({\text{sgn}} (r_{2} - 0.5)\) produces 1 or −1 to modify the search direction. The distance between the food source and the shark, \(\vec{D}is_{w}\), and the strength with which the white shark follows other sharks close to the food source, \(Str_{sns}\), are formulated as follows;

The initial best optimal solutions are kept constant, and the position of other sharks is updated according to these two constant optimal solutions. The fish school behaviour of the sharks is formulated as follows;

The weight factor \(^{j} we\) is represented as;

where \(^{q} fit\) is defined as the fitness of each term in the text document. The expansion of the equation is represented as;

A hybrid mutation \(HM\) is applied to the WSO for faster convergence. The hybrid mutation applied with the optimizer is represented as;

where \(G_{a} (\mu ,\sigma )\) and \(C_{a} (\mu ,\sigma )\) represent random numbers drawn from the Gaussian and Cauchy distributions, respectively. \((\mu ,\sigma )\) and \((\mu^{\prime},\sigma^{\prime})\) represent the mean and variance of the Gaussian and Cauchy distributions. \(D_{1}\) and \(D_{2}\) represent the coefficients of the Gaussian mutation \(^{t + 1} GM\) and the Cauchy mutation \(^{t + 1} CM\). On applying these two mutation operators, a new solution is produced that is represented as;

where \(^{p}_{we}\) represents the weight vector and \(PS\) represents the size of the population. The features selected from the extracted features are represented as \(Sel(p = 1,2,...m)\). The WSO output is denoted as \((sel) = \{ sel^{1}, sel^{2}, \ldots, sel^{m} \}\), which is a new subset of terms in the dataset; here, \(m\) denotes the number of features in this subset. Finally, the feature selection stage provides a dataset document with optimal features.
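The hybrid mutation step can be illustrated with the sketch below. It assumes a simple additive form in which a candidate position is perturbed by a Gaussian draw scaled by \(D_{1}\) and a Cauchy draw scaled by \(D_{2}\). The paper's exact update, including how the weight vector enters, is not reproduced here. The names `hybrid_mutation` and `cauchy_sample`, the default coefficients, and the clipping to the search bounds are all assumptions. Note that `random.gauss` takes a standard deviation, not a variance.

```python
import math
import random


def cauchy_sample(rng, loc=0.0, scale=1.0):
    """Draw one Cauchy variate by inverse-CDF sampling."""
    return loc + scale * math.tan(math.pi * (rng.random() - 0.5))


def hybrid_mutation(position, lb, ub, d1=0.5, d2=0.5,
                    gauss=(0.0, 1.0), cauchy=(0.0, 1.0), rng=None):
    """Perturb each coordinate with d1 * Gaussian + d2 * Cauchy noise (assumed form)."""
    rng = rng or random.Random()
    mutated = []
    for x, lo, hi in zip(position, lb, ub):
        g = rng.gauss(gauss[0], gauss[1])            # small, local steps
        c = cauchy_sample(rng, cauchy[0], cauchy[1])  # occasional long jumps
        y = x + d1 * g + d2 * c
        mutated.append(min(max(y, lo), hi))          # clip to bounds (assumption)
    return mutated


print(hybrid_mutation([0.2, 0.8], lb=[0.0, 0.0], ub=[1.0, 1.0], rng=random.Random(1)))
```

Combining the two distributions is a common way to balance exploitation (Gaussian, light tails) with exploration (Cauchy, heavy tails).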

Gated attention recurrent network (GARN) classifier

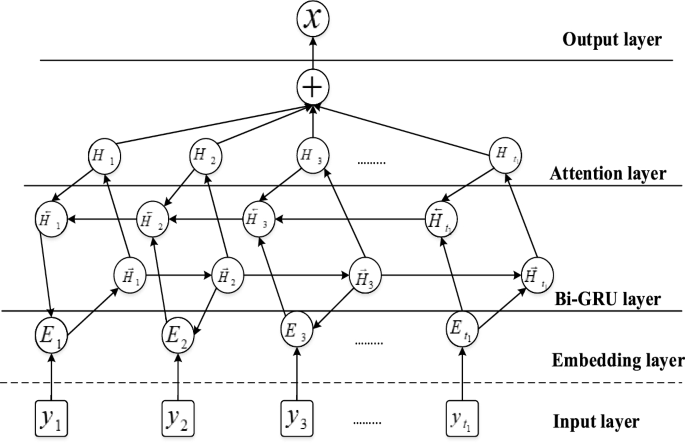

GARN is a hybrid network that combines Bi-GRU with an attention mechanism. A conventional recurrent neural network (RNN) is limited because it uses old information rather than current information for classification. To overcome this problem, a bidirectional recurrent neural network (BRNN) model is proposed, which can use both old and current information. Two RNNs are employed to perform the forward and reverse passes, and their outputs are connected to the same output layer to record the feature sequence. Based on the BRNN model, a bidirectional gated recurrent unit (Bi-GRU) model is introduced, which replaces the hidden layer of the BRNN with a single GRU memory unit. Here, the hybridization of Bi-GRU with attention is referred to as a gated attention recurrent network (GARN) [ 25 ], and its structure is given in Fig. 2 .

Structure of GARN

Consider an m-dimensional input \((y_{1} ,y_{2} ,....,y_{m} )\). The output \(H_{{t_{1} }}\) produced by the hidden layer of the BGRU at time interval \(t_{1}\) is represented as;

where \(w_{e}\) denotes the weight factor for the two connecting layers, \(c\) is the bias vector, \(\sigma\) represents the activation function, \(\vec{H}_{{t_{1} }}\) and \(\overleftarrow {H} _{{t_{1} }}\) denote the positive and negative outputs of the GRU, and \(\oplus\) is a bitwise operator.

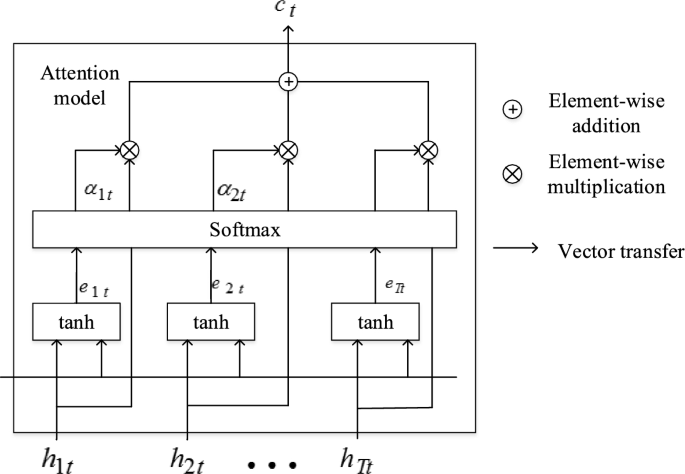

Attention mechanism

In sentiment analysis, the attention module is important for capturing the correlation between the terms in a sentence and the output [ 26 ]. For simplicity, a feed-forward attention model is used in this proposal. This simplification produces a single vector \(\nu\) from the total sequence, represented as;

where \(\beta\) is a learning function that is identified using \(H_{{t_{1} }}\). From Eq. 34 , the attention mechanism produces a fixed-length embedding in the BGRU model for every single vector \(\nu\) by computing the weighted average of the data sequence \(H\). The structure of the attention mechanism is shown in Fig. 3 . Therefore, the final sub-set for the classification is obtained from:

Structure of attention mechanism
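The feed-forward attention idea described above can be sketched as follows. This is an illustration under stated assumptions, not the paper's equations: each hidden state is scored with a learnable function (here a hypothetical `tanh(w · h + b)`), the scores are normalized with softmax, and the vector \(\nu\) is the resulting weighted average of the hidden states.

```python
import math


def feed_forward_attention(hidden_states, w, b=0.0):
    """Return (context_vector, attention_weights) for a sequence of hidden states."""
    # Score each time step with an assumed learnable function tanh(w . h + b).
    scores = [math.tanh(sum(wi * hi for wi, hi in zip(w, h)) + b)
              for h in hidden_states]
    # Numerically stable softmax over time steps.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # Weighted average of the hidden states gives a fixed-length vector.
    dim = len(hidden_states[0])
    context = [sum(a * h[k] for a, h in zip(alphas, hidden_states))
               for k in range(dim)]
    return context, alphas


context, alphas = feed_forward_attention([[1.0, 0.0], [0.0, 1.0]], w=[0.0, 0.0])
print(context, alphas)
```

Whatever the sequence length, the output has the dimensionality of a single hidden state, which is what allows it to feed a fixed-size classifier.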

Sentiment classification

Twitter sentiment analysis is formally a classification problem. The proposed approach classifies the sentiment data into three classes: positive, negative and neutral. A softmax classifier is applied to the output of the hidden layer \(H^{\# }\), represented as;

where \(w_{e}\) is the weight factor, \(c\) is a bias vector and \(H^{\# }\) is the output of the last hidden layer. The cross-entropy is used as the loss function, represented as;

The total number of samples is denoted as \(n\). The real category of the sentence is denoted as \(sen_{j}\), the predicted category of the sentence is denoted as \(x_{j}\), and the \(L2\) regularization term is denoted as \(\lambda ||\theta ||^{2}\).
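As a small worked example, the sketch below computes softmax probabilities and a cross-entropy loss with an \(L2\) penalty \(\lambda ||\theta ||^{2}\). It assumes the standard form of these functions (mean negative log-likelihood of the true class plus the penalty). The function names and the flat parameter list `theta` are illustrative assumptions.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_l2(prob_batch, true_labels, theta, lam):
    """Mean negative log-likelihood of the true class plus lam * ||theta||^2."""
    n = len(true_labels)
    nll = -sum(math.log(p[y]) for p, y in zip(prob_batch, true_labels)) / n
    return nll + lam * sum(t * t for t in theta)


# Three classes: 0 = negative, 1 = neutral, 2 = positive (assumed encoding).
probs = [softmax([0.0, 0.0, 0.0])]
print(cross_entropy_l2(probs, [0], theta=[1.0, 2.0], lam=0.1))
```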

Results and discussion

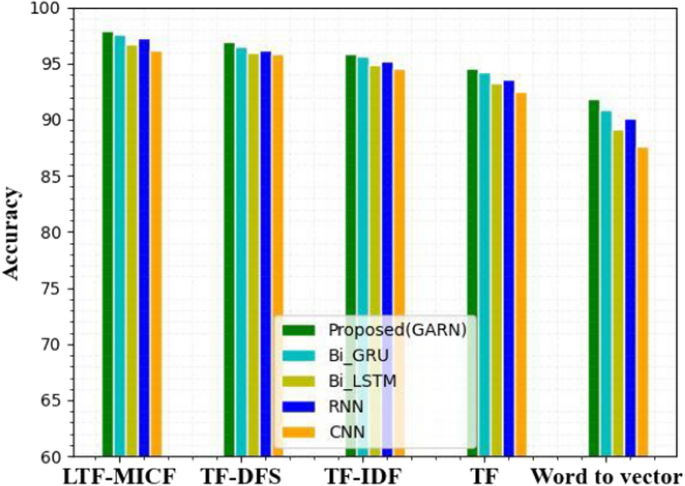

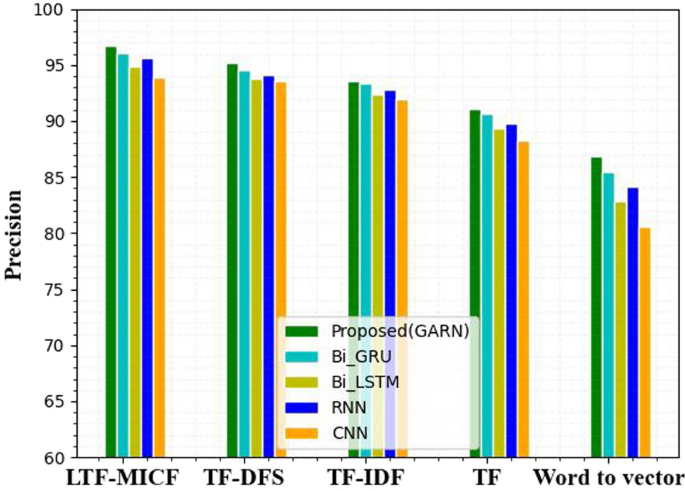

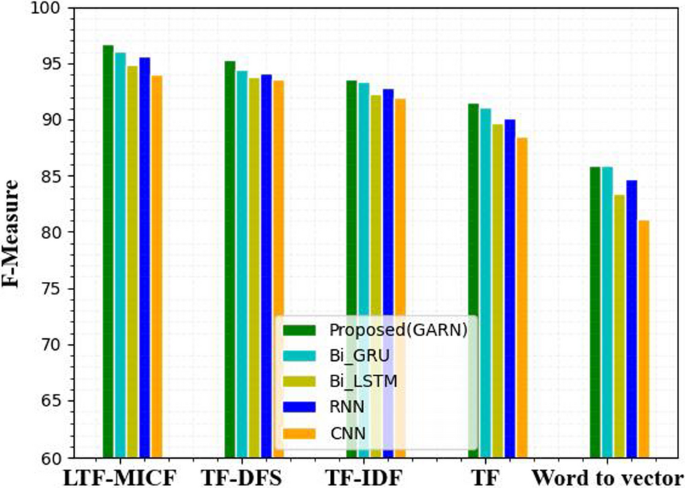

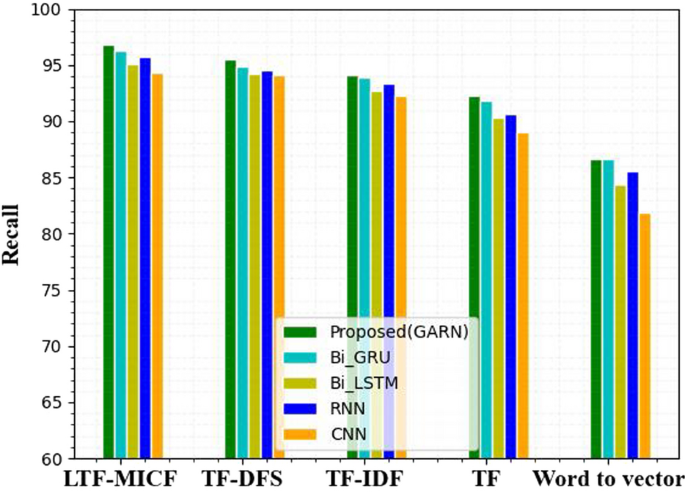

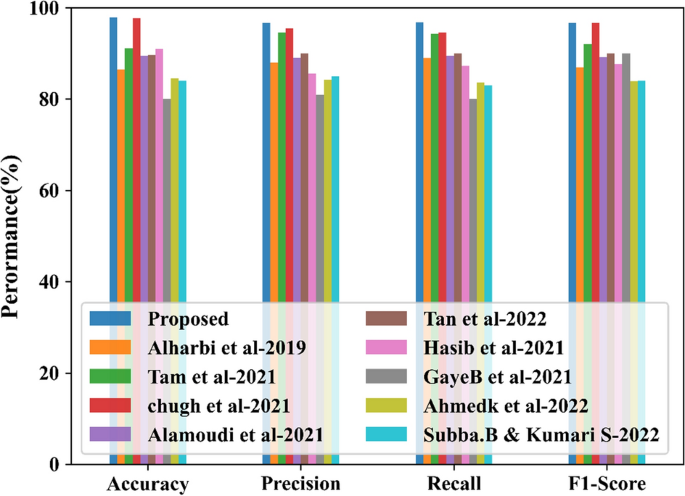

This section briefly describes the performance metrics: accuracy, precision, recall and F-measure. The overall Twitter sentiment classification pipeline, comprising pre-processing, feature extraction, feature selection and classification, is also analyzed and discussed. Results comparing the existing and trending classifiers with the term weighting schemes are presented in bar graphs and tables. Finally, a short discussion of the overall workflow concludes the study by drawing on the analyzed performance metrics. Sentiment is an individual's expression of an opinion on a subject. Tweet-based sentiment analysis mainly focuses on detecting positive and negative sentiments. It is therefore necessary to extend the set of classes by adding a neutral class to the datasets.

The dataset used in the proposed work is Sentiment 140, gathered from [ 27 ], which contains 1,600,000 tweets extracted from the Twitter API. Each tweet carries a score value: the rank value is 4 for positive tweets, 0 for negative tweets, and 2 for neutral tweets. The total number of positive tweets in the dataset is 20832, neutral tweets are 18318, negative tweets are 22542, and irrelevant tweets are 12990. Of the entire dataset, 70% is used for training, 15% for testing and 15% for validation. Table 2 shows the system configuration of the designed classifier.
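The 70/15/15 partition can be reproduced with a short helper such as the one below. It is a sketch that assumes a simple random shuffle. The function name `split_dataset`, the fixed seed, and the absence of class stratification are assumptions, since the paper does not state how the split was drawn.

```python
import random


def split_dataset(items, train=0.70, test=0.15, seed=42):
    """Shuffle and split into train / test / validation (the remainder)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_test = round(len(items) * test)
    return (items[:n_train],
            items[n_train:n_train + n_test],
            items[n_train + n_test:])


train_set, test_set, val_set = split_dataset(range(100))
print(len(train_set), len(test_set), len(val_set))
```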

Performance metrics

In the proposed method, four different weighting schemes are compared across the existing and proposed classifiers, using precision, F1-score, recall and accuracy as the performance metrics. Four quantities, namely true positives \((t_{p} )\), true negatives \((t_{n} )\), false positives \((f_{p} )\) and false negatives \((f_{n} )\), are used to compute these metrics.

Accuracy \((A_{c} )\)

Accuracy is the proportion of samples in the dataset that the proposed classifier classifies correctly. The accuracy value for the proposed method is obtained using Eq. 39 .

Precision \((P_{r} )\)

Precision is defined as the ratio of the samples correctly identified as positive to the total number of samples identified as positive. The precision value for the proposed method is obtained using Eq. 40 .
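For reference, the two metrics defined so far can be computed from the four counts as sketched below, using their standard definitions: accuracy is \((t_{p} + t_{n}) / (t_{p} + t_{n} + f_{p} + f_{n})\) and precision is \(t_{p} / (t_{p} + f_{p})\). The function names and the choice to return 0.0 when a denominator is zero are assumptions made for this illustration.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all samples that were classified correctly."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


def precision(tp, fp):
    """Share of predicted positives that are actually positive."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0


# Illustrative counts only, not results from the paper.
print(accuracy(tp=40, tn=30, fp=20, fn=10), precision(tp=40, fp=20))
```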

Recall \((R_{e} )\)