- Introduction & Top Questions

- Analog computers

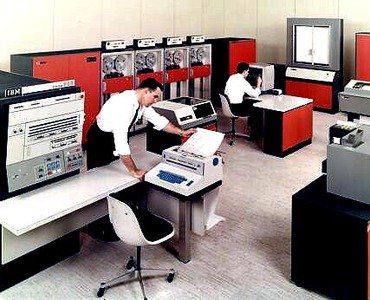

- Mainframe computer

- Supercomputer

- Minicomputer

- Microcomputer

- Laptop computer

- Embedded processors

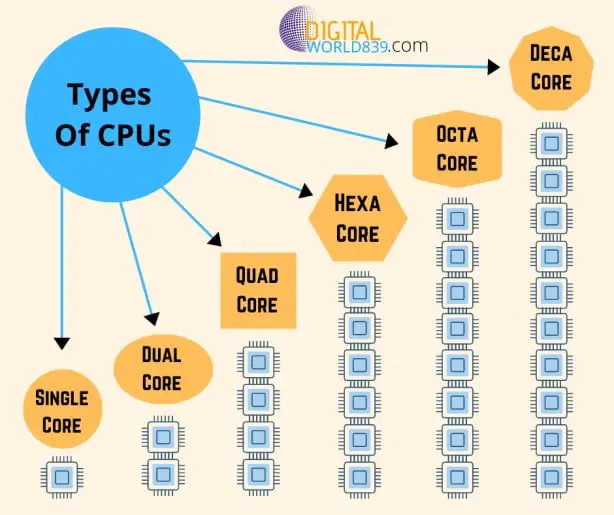

- Central processing unit

- Main memory

- Secondary memory

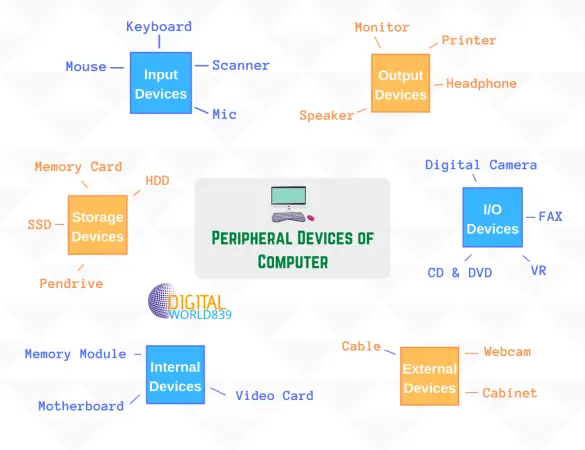

- Input devices

- Output devices

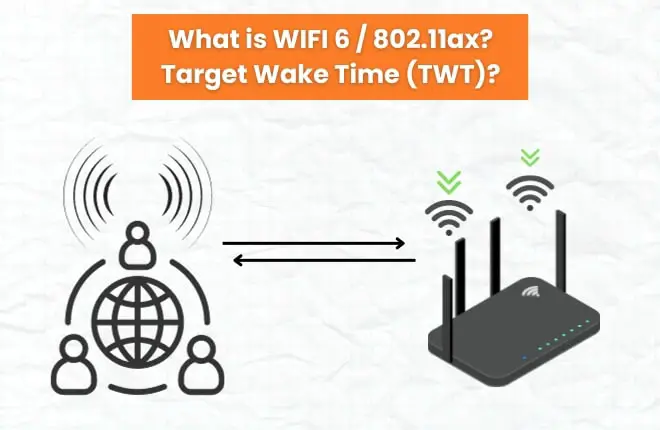

- Communication devices

- Peripheral interfaces

- Fabrication

- Transistor size

- Power consumption

- Quantum computing

- Molecular computing

- Role of operating systems

- Multiuser systems

- Thin systems

- Reactive systems

- Operating system design approaches

- Local area networks

- Wide area networks

- Business and personal software

- Scientific and engineering software

- Internet and collaborative software

- Games and entertainment

Analog calculators: from Napier’s logarithms to the slide rule

Digital calculators: from the calculating clock to the arithmometer, the jacquard loom.

- The Difference Engine

- The Analytical Engine

- Ada Lovelace, the first programmer

- Herman Hollerith’s census tabulator

- Other early business machine companies

- Vannevar Bush’s Differential Analyzer

- Howard Aiken’s digital calculators

- The Turing machine

- The Atanasoff-Berry Computer

- The first computer network

- Konrad Zuse

- Bigger brains

- Von Neumann’s “Preliminary Discussion”

- The first stored-program machines

- Machine language

- Zuse’s Plankalkül

- Interpreters

- Grace Murray Hopper

- IBM develops FORTRAN

- Control programs

- The IBM 360

- Time-sharing from Project MAC to UNIX

- Minicomputers

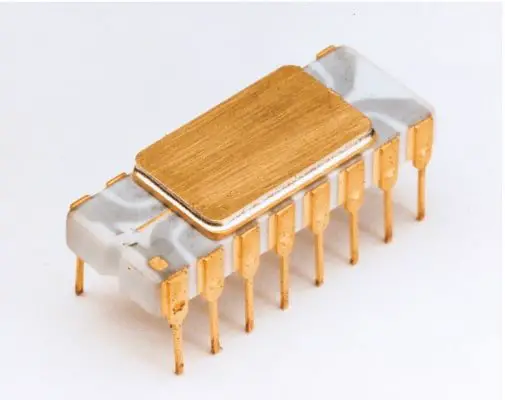

- Integrated circuits

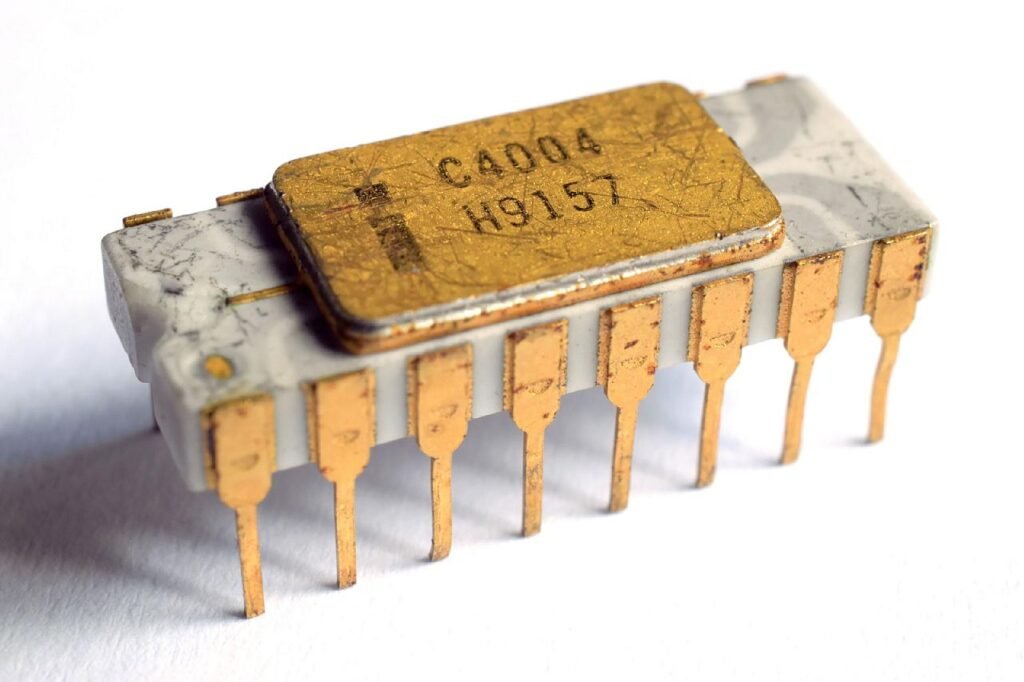

- The Intel 4004

- Early computer enthusiasts

- The hobby market expands

- From Star Trek to Microsoft

- Application software

- Commodore and Tandy enter the field

- The graphical user interface

- The IBM Personal Computer

- Microsoft’s Windows operating system

- Workstation computers

- Embedded systems

- Handheld digital devices

- The Internet

- Social networking

- Ubiquitous computing

- What is a computer?

- Who invented the computer?

- What can computers do?

- Are computers conscious?

- What is the impact of computer artificial intelligence (AI) on society?

History of computing

A computer might be described with deceptive simplicity as “an apparatus that performs routine calculations automatically.” Such a definition would owe its deceptiveness to a naive and narrow view of calculation as a strictly mathematical process. In fact, calculation underlies many activities that are not normally thought of as mathematical. Walking across a room, for instance, requires many complex, albeit subconscious, calculations. Computers, too, have proved capable of solving a vast array of problems, from balancing a checkbook to even—in the form of guidance systems for robots—walking across a room.

Before the true power of computing could be realized, therefore, the naive view of calculation had to be overcome. The inventors who labored to bring the computer into the world had to learn that the thing they were inventing was not just a number cruncher, not merely a calculator. For example, they had to learn that it was not necessary to invent a new computer for every new calculation and that a computer could be designed to solve numerous problems, even problems not yet imagined when the computer was built. They also had to learn how to tell such a general problem-solving computer what problem to solve. In other words, they had to invent programming.

They had to solve all the heady problems of developing such a device, of implementing the design, of actually building the thing. The history of the solving of these problems is the history of the computer. That history is covered in this section, and links are provided to entries on many of the individuals and companies mentioned. In addition, see the articles computer science and supercomputer.

Early history

Computer precursors.

The earliest known calculating device is probably the abacus. It dates back at least to 1100 BCE and is still in use today, particularly in Asia. Now, as then, it typically consists of a rectangular frame with thin parallel rods strung with beads. Long before any systematic positional notation was adopted for the writing of numbers, the abacus assigned different units, or weights, to each rod. This scheme allowed a wide range of numbers to be represented by just a few beads and, together with the invention of zero in India, may have inspired the invention of the Hindu-Arabic number system. In any case, abacus beads can be readily manipulated to perform the common arithmetical operations—addition, subtraction, multiplication, and division—that are useful for commercial transactions and in bookkeeping.

The abacus is a digital device; that is, it represents values discretely. A bead is either in one predefined position or another, representing unambiguously, say, one or zero.

Calculating devices took a different turn when John Napier, a Scottish mathematician, published his discovery of logarithms in 1614. As any person can attest, adding two 10-digit numbers is much simpler than multiplying them together, and the transformation of a multiplication problem into an addition problem is exactly what logarithms enable. This simplification is possible because of the following logarithmic property: the logarithm of the product of two numbers is equal to the sum of the logarithms of the numbers. By 1624, tables with 14 significant digits were available for the logarithms of numbers from 1 to 20,000, and scientists quickly adopted the new labor-saving tool for tedious astronomical calculations.
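The logarithmic property described above can be checked numerically. The following is a minimal sketch in Python, assuming base-10 logarithms and positive inputs; the function name `multiply_via_logs` is hypothetical, and the library calls stand in for the printed tables a 17th-century calculator would have consulted.

```python
import math


def multiply_via_logs(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their base-10 logarithms."""
    # Step 1: look up the logarithm of each factor (a table lookup in 1624).
    log_sum = math.log10(a) + math.log10(b)
    # Step 2: convert the sum back with an antilogarithm lookup.
    return 10 ** log_sum


if __name__ == "__main__":
    product = multiply_via_logs(1234.0, 5678.0)
    # The result agrees with direct multiplication up to rounding error.
    print(product, 1234 * 5678)
```

The only arithmetic performed on the looked-up values is a single addition, which is why the method saved so much labor; the accuracy of the answer is limited by the precision of the logarithms used.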

Most significant for the development of computing, the transformation of multiplication into addition greatly simplified the possibility of mechanization. Analog calculating devices based on Napier’s logarithms—representing digital values with analogous physical lengths—soon appeared. In 1620 Edmund Gunter, the English mathematician who coined the terms cosine and cotangent, built a device for performing navigational calculations: the Gunter scale, or, as navigators simply called it, the gunter. About 1632 an English clergyman and mathematician named William Oughtred built the first slide rule, drawing on Napier’s ideas. That first slide rule was circular, but Oughtred also built the first rectangular one in 1633. The analog devices of Gunter and Oughtred had various advantages and disadvantages compared with digital devices such as the abacus. What is important is that the consequences of these design decisions were being tested in the real world.

In 1623 the German astronomer and mathematician Wilhelm Schickard built the first calculator. He described it in a letter to his friend the astronomer Johannes Kepler, and in 1624 he wrote again to explain that a machine he had commissioned to be built for Kepler was, apparently along with the prototype, destroyed in a fire. He called it a Calculating Clock, which modern engineers have been able to reproduce from details in his letters. Even general knowledge of the clock had been temporarily lost when Schickard and his entire family perished during the Thirty Years’ War.

But Schickard may not have been the true inventor of the calculator. A century earlier, Leonardo da Vinci sketched plans for a calculator that were sufficiently complete and correct for modern engineers to build a calculator on their basis.

The first calculator or adding machine to be produced in any quantity and actually used was the Pascaline, or Arithmetic Machine, designed and built by the French mathematician-philosopher Blaise Pascal between 1642 and 1644. It could only do addition and subtraction, with numbers being entered by manipulating its dials. Pascal invented the machine for his father, a tax collector, so it was the first business machine too (if one does not count the abacus). He built 50 of them over the next 10 years.

In 1671 the German mathematician-philosopher Gottfried Wilhelm von Leibniz designed a calculating machine called the Step Reckoner. (It was first built in 1673.) The Step Reckoner expanded on Pascal’s ideas and did multiplication by repeated addition and shifting.
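Multiplication by repeated addition and shifting can be sketched in a few lines of Python. This is an illustration of the arithmetic idea only, under the assumption of non-negative decimal integers; it is not a model of the Step Reckoner's mechanism, and the function name is hypothetical.

```python
def multiply_by_shift_and_add(multiplicand: int, multiplier: int) -> int:
    """Multiply non-negative integers using only addition and decimal shifts."""
    if multiplicand < 0 or multiplier < 0:
        raise ValueError("this sketch handles non-negative integers only")
    total = 0
    shifted = multiplicand            # multiplicand aligned with the current digit
    while multiplier > 0:
        digit = multiplier % 10       # lowest remaining digit of the multiplier
        for _ in range(digit):        # add once per unit of that digit
            total += shifted
        shifted *= 10                 # stands in for shifting one decimal place
        multiplier //= 10             # move on to the next digit
    return total


if __name__ == "__main__":
    print(multiply_by_shift_and_add(123, 45))
```

For 123 × 45 the loop performs five additions of 123 and then four additions of 1,230, nine additions in total rather than forty-five, which is the saving that shifting provides.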

Leibniz was a strong advocate of the binary number system. Binary numbers are ideal for machines because they require only two digits, which can easily be represented by the on and off states of a switch. When computers became electronic, the binary system was particularly appropriate because an electrical circuit is either on or off. This meant that on could represent true, off could represent false, and the flow of current would directly represent the flow of logic.
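The correspondence between binary digits and switch states can be made concrete with a short sketch. It assumes a fixed row of switches with the most significant digit first, and it uses Python's `True` and `False` for on and off; the function names are hypothetical.

```python
def to_switches(n: int, width: int = 8) -> list[bool]:
    """Return the on/off states (most significant first) that encode n in binary."""
    if n < 0 or n >= 2 ** width:
        raise ValueError("n does not fit in the given number of switches")
    return [bool((n >> i) & 1) for i in range(width - 1, -1, -1)]


def from_switches(switches: list[bool]) -> int:
    """Recover the number encoded by a row of on/off switches."""
    value = 0
    for on in switches:
        value = value * 2 + (1 if on else 0)   # each position doubles the weight
    return value


if __name__ == "__main__":
    row = to_switches(5, width=4)
    print(row)                  # the four switch states that encode 5
    print(from_switches(row))   # the number recovered from those states
```

Because each switch state is also a truth value, the same row of switches can be read either as a number or as a set of true/false conditions.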

Leibniz was prescient in seeing the appropriateness of the binary system in calculating machines, but his machine did not use it. Instead, the Step Reckoner represented numbers in decimal form, as positions on 10-position dials. Even decimal representation was not a given: in 1668 Samuel Morland invented an adding machine specialized for British money—a decidedly nondecimal system.

Pascal’s, Leibniz’s, and Morland’s devices were curiosities, but with the Industrial Revolution of the 18th century came a widespread need to perform repetitive operations efficiently. With other activities being mechanized, why not calculation? In 1820 Charles Xavier Thomas de Colmar of France effectively met this challenge when he built his Arithmometer, the first commercial mass-produced calculating device. It could perform addition, subtraction, multiplication, and, with some more elaborate user involvement, division. Based on Leibniz’s technology, it was extremely popular and sold for 90 years. In contrast to the modern calculator’s credit-card size, the Arithmometer was large enough to cover a desktop.

Calculators such as the Arithmometer remained a fascination after 1820, and their potential for commercial use was well understood. Many other mechanical devices built during the 19th century also performed repetitive functions more or less automatically, but few had any application to computing. There was one major exception: the Jacquard loom, invented in 1804–05 by a French weaver, Joseph-Marie Jacquard.

The Jacquard loom was a marvel of the Industrial Revolution. A textile-weaving loom, it could also be called the first practical information-processing device. The loom worked by tugging various-colored threads into patterns by means of an array of rods. By inserting a card punched with holes, an operator could control the motion of the rods and thereby alter the pattern of the weave. Moreover, the loom was equipped with a card-reading device that slipped a new card from a pre-punched deck into place every time the shuttle was thrown, so that complex weaving patterns could be automated.

What was extraordinary about the device was that it transferred the design process from a labor-intensive weaving stage to a card-punching stage. Once the cards had been punched and assembled, the design was complete, and the loom implemented the design automatically. The Jacquard loom, therefore, could be said to be programmed for different patterns by these decks of punched cards.

For those intent on mechanizing calculations, the Jacquard loom provided important lessons: the sequence of operations that a machine performs could be controlled to make the machine do something quite different; a punched card could be used as a medium for directing the machine; and, most important, a device could be directed to perform different tasks by feeding it instructions in a sort of language—i.e., making the machine programmable.

It is not too great a stretch to say that, in the Jacquard loom, programming was invented before the computer. The close relationship between the device and the program became apparent some 20 years later, with Charles Babbage’s invention of the first computer.

Chapter 2 - Background

2.15 The Emergence of First Generation Computers 1946-1959

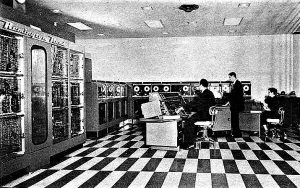

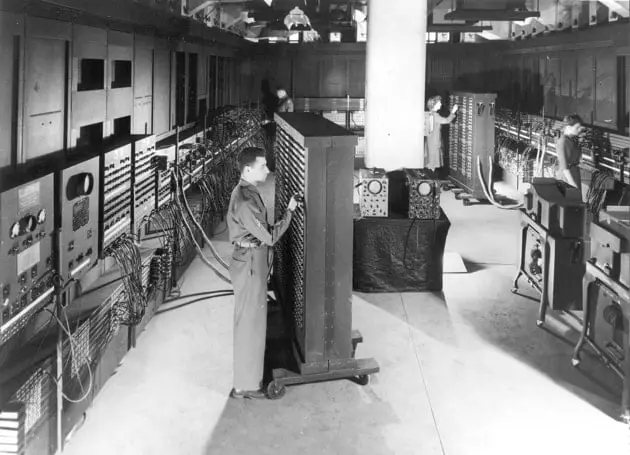

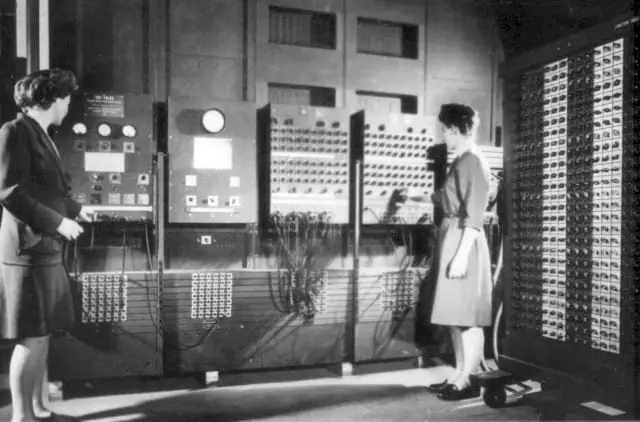

In late 1945 researchers at the Moore School of the University of Pennsylvania powered up a machine that was 100 feet long, 10 feet high, and 3 feet deep. It contained 17,000 vacuum tubes, about 70,000 resistors, 10,000 capacitors, and 6,000 switches. The Electronic Numerical Integrator and Computer (ENIAC) was the first fully electronic computer. It was designed and developed by J. Presper Eckert and John W. Mauchly under contractor supervision of Lieutenant Herman Goldstine,[25] with funding from the Army’s Ballistic Research Laboratory at Aberdeen. ENIAC had a serious defect. Although it could compute several hundred times faster than an electro-mechanical or relay-type machine,[26] the computer required rewiring for each new problem, a task that took from 30 minutes to a full day.[27] Nevertheless, in instantiating the first electronic computer, Eckert, Mauchly and Goldstine advanced the trajectory of computers beyond being just an idea.

Upon completing the design of ENIAC, the team faced a new challenge: to design the next computer, one that would be significantly better, preferably without the impossible constraint of rewiring. In the summer of 1944, Lieutenant Goldstine, by chance, encountered John von Neumann while waiting for a train. Goldstine began to tell von Neumann, the foremost applied mathematician of his time, about ENIAC. Von Neumann was himself involved in a number of secret projects needing more computation than was then available, yet he did not know of ENIAC.

On August 7, 1944, von Neumann visited the Moore School and began contributing ideas immediately. The collaboration between von Neumann, Eckert, Mauchly and Goldstine proved fruitful when they hit upon the concept of storing the logic instructions in memory: the “stored-program”[28] computer. Instead of manually resetting the switches or, worse yet, rewiring the machine to set up the calculations for a new problem, the programmer could modify the program arithmetically.[29] With these new architectural ideas, they designed ENIAC’s successor, the EDVAC (Electronic Discrete Variable Automatic Computer).
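The stored-program idea, in which instructions sit in the same memory as data and can therefore be changed by ordinary arithmetic, can be illustrated with a toy machine. Everything in the sketch below is an assumption made for illustration: the instruction encoding (opcode × 100 + address), the four opcodes, and the function name `run` are hypothetical and do not describe the EDVAC's actual design.

```python
def run(memory: list[int]) -> list[int]:
    """Execute a toy program stored in the same memory as its data.

    Hypothetical encoding: instruction = opcode * 100 + address.
    Opcodes: 1 = LOAD, 2 = ADD, 3 = STORE, 0 = HALT.
    """
    acc = 0   # accumulator
    pc = 0    # program counter
    while True:
        opcode, address = divmod(memory[pc], 100)
        pc += 1
        if opcode == 0:        # HALT: return the final memory contents
            return memory
        elif opcode == 1:      # LOAD: copy a memory cell into the accumulator
            acc = memory[address]
        elif opcode == 2:      # ADD: add a memory cell to the accumulator
            acc += memory[address]
        elif opcode == 3:      # STORE: write the accumulator back to memory
            memory[address] = acc
        else:
            raise ValueError(f"unknown opcode {opcode}")


program = [
    104,  # 0: LOAD 4   fetch the instruction stored in cell 4 (208 = ADD 8)
    207,  # 1: ADD 7    add the constant 1, giving 209 (= ADD 9)
    304,  # 2: STORE 4  overwrite cell 4 with the modified instruction
    110,  # 3: LOAD 10  clear the accumulator using the zero in cell 10
    208,  # 4: ADD 8    by the time this runs, it has become ADD 9
    310,  # 5: STORE 10 save the result
    0,    # 6: HALT
    1,    # 7: constant 1
    50,   # 8: data
    70,   # 9: data
    0,    # 10: result
]

if __name__ == "__main__":
    final = run(list(program))
    print(final[4], final[10])
```

The program never touches a switch or a wire: it changes which data cell it reads by adding 1 to one of its own instructions, so the result cell ends up holding the value from cell 9 rather than cell 8.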

Elsewhere, Thomas J. Watson Jr. had rejoined IBM after serving in the Air Force. In early 1946, Eckert and Mauchly gave Watson Jr. a tour of the Moore School and ENIAC. Although Watson Jr. sensed that Eckert and Mauchly thought they had a product with which to best IBM, he was unimpressed. “The truth was that I reacted to ENIAC the way some people probably reacted to the Wright brothers’ airplane: it didn’t move me at all. I can’t imagine why I didn’t think, ‘Good God, that’s the future of the IBM company.’”[30]

Funding the development of computer systems, such as the ENIAC and EDVAC, represented only one way the government advanced understanding of computer technologies. Government agencies also sponsored meetings and courses, with the objectives of theory development, diffusion of knowledge, and training of personnel needed to explore the computer trajectory. Diffusing the growing knowledge and practice of computers ensured the government a future base of personnel to design and build the computers the military would need.

During the summer of 1946, the Moore School held a six-week course entitled “Theory and Techniques for the Design of Electronic Digital Computers,” sponsored by the Office of Naval Research and the Army Ordnance Department.[31] Six months later, the Navy sponsored a four-day conference at Harvard that 350 people attended.[32] Representatives of government agencies, universities and a wide range of companies attended both of these significant events. Although many companies were learning about computers, none of them would be the first to act; that honor belongs to a start-up formed by Eckert and Mauchly in March 1946.

In 1946, the University of Pennsylvania dismissed Eckert and Mauchly because of their interests in commercializing the ENIAC and EDVAC. The two perceived an economic opportunity in selling computers and together formed the Electronic Control Company. The Census Bureau awarded them their first contract in June, beating out Raytheon. In 1947, the company changed its name to the Eckert-Mauchly Computer Corporation.

Two additional contracts to build computers were signed, with A.C. Nielsen and Prudential Life Insurance Company, bringing badly needed cash advances to fund continuing product investment. Henry Straus, a Delaware racetrack owner and vice president of American Totalizer, committed to invest half a million dollars for 40 percent of Eckert-Mauchly common stock. But Straus died in an airplane crash, and local financial institutions refused to honor the notes Straus had given the company. By 1949, the company verged on bankruptcy, despite having its three contracts.[33]

Eckert and Mauchly contacted everyone they knew who might be interested in either funding or acquiring them: NCR, Remington Rand, IBM, Philco, Burroughs, Hughes Aircraft, and others. They signed their acquisition by Remington Rand in February 1950. Remington Rand then attempted to cancel the three contracts. Nielsen and Prudential agreed reluctantly. But the Census Bureau refused to cancel. They were going to make Remington Rand deliver the agreed-upon computer. This act, obviously, influenced the economic history of computers by forcing product instantiation.

In the spring of 1951, the Eckert-Mauchly division of Remington Rand shipped the first UNIVAC I to the Census Bureau. The next five UNIVAC I’s shipped to the government as well, to the Atomic Energy Commission (2), Air Force, Army, and Navy.[34]

Another start-up, however, captured the honors of shipping the first commercial computer. The founders of Engineering Research Associates (ERA), after resigning from the Naval Communications Supplementary Activity, signed a contract with the Navy to develop the Atlas I computer. They had the right to resell the same technology commercially. The Atlas I computer, sold commercially as the ERA 1101, shipped in December 1950.

In 1952, Remington Rand, repeating a pattern of organizational development used in office machinery, acquired its second computer firm, ERA. This time Remington Rand owned the two leading firms, not firms wanting to be acquired because they had trouble competing. Remington Rand controlled the emerging computer market-structure, yet remained a distant second to IBM in office machinery, especially tabulating equipment. In 1954, Remington Rand sold its first UNIVAC to a commercial customer: General Electric.

[25] Goldstine held a Ph.D. and participated actively in the project.

[26] Richard R. Nelson, “The Computer Industry,” Government and Technical Progress: A Cross-Industry Analysis, Pergamon Press, 1982, p. 165.

[27] George Schussel, “IBM and REMRAND,” Datamation, May 1965, p. 55.

[28] Stored programming laid the beginnings of software, both as component and architecture.

[29] Nelson, p. 167.

[30] Thomas J. Watson Jr., Father, Son & Co., Bantam Books, 1990, p. 143.

[31] Nelson, p. 167.

[32] Ibid., p. 168.

[33] Nelson, pp. 169-170.

[34] Nelson, p. 170.

- Introduction

- Entrepreneurial Capitalism

- From Ideas to Entrepreneurs to Adaptive Corporations

- Firms constructing social networks as Populations

- Three Revolutions in Computer Technologies and Corporate Usage 1968-1988

- Institutional Change in Communications: Deregulation and Break-up of AT&T

- A Brief Overview of Computer Communications 1968-1988

- Personal Comments

- Terminology

- Preconditions

- The Institutions of Competitive Capitalism

- The Telegraph and the Information Revolution

- The Institutions of Corporate Capitalism

- Alexander Graham Bell and Bell Telephone Co. -- 1873-1878

- Vail Joins the Bell Telephone Company -- 1878-1887

- Monopoly Asserted -- 1918-1934

- The FCC and AT&T Regulation -- 1934-1946

- The U.S. vs. Western Union Lawsuit -- 1949-1956

- Computer Inquiry I and the Carterfone -- 1965-1973

- The FCC, Jurisdictional Disputes and Direct Connection of CPE -- 1973-1978

- Antitrust, Computer Inquiry II and the Break-up of AT&T - 1973-1984

- The Emergence of First Generation Computers 1946-1959

- The Entrance of IBM - 1952

- Real-Time Computing -- The SAGE Project -- 1952 - 1958

- The Transistor - 1947

- Second Generation Computing -- 1959-1963

- The Integrated Circuit -- 1959

- Management Information Systems -- 1959-1972

- The IBM System/360 and the Third Generation of Computing --1964

- Timesharing -- Project MAC -- 1962-1968

- The Minicomputer -- 1959-1979

- The Microprocessor -- 1971

- Personal Distributed Computing -- Xerox PARC -- 1980

- Personal Computers -- 1973-1988

- Beginnings of Modem Competition: Codex and Milgo 1956-1967

- Carterfone, ATT and the FCC 1948-1967

- The Remarkable Growth in the Use of Computers

- The FCC and Computer Inquiry I 1966-1967

- Codex and Milgo: Needing Money 1967-1968

- Multiplexer Innovation: American Data Systems 1966-1968

- Euphoric Markets and Venture Capital 1967-1968

- Codex and Milgo Become Public Companies 1968

- American Data Systems Off and Running 1968

- Carterfone, Computer Inquiry I and Deregulation 1967-1968

- In Perspective

- The Intergalactic Network: 1962-1964

- The Seminal Experiment: 1965

- Circuit Switching

- Paul Baran - 1959-1965

- Donald Davies - 1965-1966

- Packet Switching

- Planning the ARPANET: 1967-1968

- The RFQ and Bidding: 1968

- Bolt Beranek and Newman: The Winning Bid -1968

- Entrepreneurism Flourishes 1968-1972

- The Economic Roller Coaster 1969-1975

- AT&T and Computer Inquiry I 1969

- Codex Encounters Unexpected Problems: 1969

- ADS Has a Blockbuster 1969

- Codex Turns the Corner: 1970

- ADS Hits a Wall: 1970

- AT&T and Computer Inquiry I 1970-1971

- Firms and Collective Behavior: The Creation of the IDCMA 197

- Codex and the 9600: 1971

- ADS Falls on Hard Times: 1971-1972

- Codex Passes a Milestone: 1972

- Data Communications 1972

- The Communications Subnet: BBN 1969

- Host-to-Host Software: The Network Working Group 1968-1969

- Delivery of the First IMP to UCLA - September 1969

- IPTO Management Changes - 1969

- Host-to-Host Software - 1970

- Network Topology - 1969-1970

- Network Measurement Center - 1969-1970

- Early Surprises - 1969-1970

- Host-to-Host Software: The Network Control Program - 1970-1971

- ALOHANET and Norm Abramson: 1966 - 1972

- NPL Network and Donald Davies 1966 - 1971

- ICCC Demonstration 1971-1972

- Minicomputers, Distributed Data Processing and Microprocessors

- The Justice Department: IBM and AT&T

- Codex: LSI modems and Front-End Processors 1973

- Wesley Chu and the Statistical Multiplexer 1966-1975

- Codex: The LSI Modem and Competition 1974-1975

- ADS: Rebirth as Micom 1973-1976

- CPE Certification and Computer Inquiry II

- Codex: The Statistical Multiplexer and Competition 1975-1976

- Modems, Multiplexers and Networks 1976-1978

- Micom: The Statistical Multiplexer 1976-1978

- Codex and Motorola 1977-1978

- Micom: Meteoric Success and Competition 1978-1979

- Commercializing Arpanet 1972 - 1975

- Packet Radio and Robert Kahn: 1972-1974

- CYCLADES Network and Louis Pouzin 1971 - 1972

- Transmission Control Protocol (TCP) 1973-1976

- A Proliferation of Communication Projects

- Token Ring and David Farber, UC Irvine and the NSF 1969-1974

- Ethernet and Robert Metcalfe and Xerox PARC 1971-1975

- Massachusetts Institute of Technology 1974 - 1977

- Metcalfe Joins the Systems Development Division of Xerox 1975-1978

- Xerox Network System (XNS) 1977-1978

- TCP to TCP/IP 1976-1979

- Open System Interconnection (OSI) 1975 - 1979

- National Bureau of Standards and MITRE 1971 - 1979

- The NBS and MITRE Workshop of January 1979

- Prime Computers

- The Workshop

- Robert Metcalfe and the MIT Laboratory of Computer Science

- Robert Metcalfe and Digital Equipment Corporation

- The Symposium

- The Return of Venture Capital

- Robert Metcalfe and the Founding of 3Com

- Michael Pliner and the Founding of Sytek

- Ralph Ungermann and Charlie Bass and the Founding of Ungermann-Bass

- Micom: The DataPBX and IPO 1978-1981

- Codex: The DataPBX 1978-1981

- Sytek: A Broadband Network and Needing Cash

- Ungermann-Bass: Xerox, Broadband and Needing a Chip

- 3Com: Product Strategy and Waiting for a PC

- Emerging LAN Competition 1981

- Bridge Communications

- Concord Data Systems

- The Office of the Future, the PBX to CBX, and AT&T

- The IBM PC and IBM’s Token Ring LAN 1981-1982

- 3Com, Ungermann-Bass and Sytek – 1981-'82

- Ungermann-Bass

- The Data Communication Competitors 1981-1982

- Other Data Communication Competitors

- The Early LAN Competitors – 1982

- A Second Wave of LAN Competition - 1982

- Digital Equipment Corporation (DEC)

- Communications Machinery Corporation (CMC)

- General Electric

- The AT&T Settlement: January 1982

- AT&T Introduces CBXs and LANs

- Does IBM Need Both LANs and PBXs?

- 3Com - 1982

- Ungermann-Bass - 1982

- Sytek - 1982

- Ethernet Chips, Boundless Hope and Market Confusion

- Standards Making and the OSI Reference Model

- IEEE Committee 802: 1979 - 1980

- DIX (Digital Equipment Corporation, Intel, and Xerox): 1979 - 1980

- IEEE Committee 802 and DIX: 1980 - 1981

- ISO/OSI (Open Systems Interconnection): 1979 - 1980

- TCP/IP and XNS: 1979-1980

- ISO/OSI (Open Systems Interconnection): 1981 - 1982

- TCP/IP and XNS 1981 - 1983

- IEEE Committee 802: 1981 - 1982

- ISO/OSI (Open Systems Interconnection): 1982 - 1983

- The Emergence of Technological Order: 1983 - 1984

- Alex Brown & Sons Conference: March 1983

- 3Com, Ungermann-Bass, and Sytek: 1983 – 1984

- The Early LAN Competitors: 1983 – 1984

- The Second Wave of LAN Competitors: 1983 – 1984

- Excelan 1983-1984

- The Data Communication Competitors: 1983 – 1984

- New DataPBX Competitors

- State of Competition: 1985

- 3Com, Ungermann-Bass and Sytek: 1985 –1986

- The Early LAN Competitors: 1985 - 1986

- The Second Wave of LAN Competitors: 1985 - 1986

- Communications Machinery Corporation

- The Data Communication Competitors: 1985-1986

- Micom - Interlan

- The Revolution of Digital Transmission

- AT&T and the T-1 Tariffs 1982-1984

- The T-1 Multiplexer

- The Beginnings of “Be Your Own Bell”

- Data Communications: First Signs of Digital Networks 1982-1985

- Data Communications - Industry Overview

- General DataComm

- Digital Communication Associates

- Other Data Communication Firms

- Tymnet and the Caravan Project 1982

- Entrepreneurs: The T-1 start-ups 1982-1985

- Network Equipment Technologies

- Cohesive Networks

- Network Switching Systems

- Spectrum Digital

- Market Analysis 1984-1987

- Samples of Experts' Opinions

- The Yankee Group

- Datapro Research

- Alex. Brown & Sons

- Salomon Brothers Inc.

- T-1 Multiplexer OEM Relationships - 1985

- Data Communication: Wide Area Networks 1985-1988

- which firms will adapt successfully?

- The Emergence of Internetworking

- Interconnecting Local Area Networks (LANs)

- Repeaters - Physical Layer: Solutions to Extend a Network

- Bridges - Data Link Layer: Adding a Few Networks Together

- Gateways/Routers - Network Layer: Integrating Countless Networks

- Open System Interconnection (OSI) Gaining Momentum

- The Department of Defense - OSI and TCP/IP

- The Role of the National Bureau of Standards (NBS)

- Autofact Trade Show - November 1985

- The NBS in Action: OSINET, COS, and GOSIP

- LANs and WANs: The Public Demonstrations - 1988

- ENE and Interop

- Enterprise Network Event (OSI) - June

- Interop (TCP/IP) Trade Show - September

- Data Communications: Firms Adapting or Dying? 1987-1988

- DCA, Racal Electronics, Timeplex, Paradyne, and Stratacom

- Networking: Firms Responding to Market Consolidation: 1987-1988

- Concord Communications, Inc.

- DEC, Excelan, Sytek, and CMC

- Internetworking: Entrepreneurs and Start-Ups: 1985-1988

- cisco Systems

- Product Revenues 1970-1988

- Computer and Terminal Forecasts 1968-1988

- Computer Communications Market

- Computer Communications Revenues Reconcilliation

- Product Categories and Firms

- Computer Communications Start-Ups

- Timing of Start-Up Financing

- Computer Communications Market-Structure Consolodation

- Income Statement Analysis

- Balance Sheet Analysis

- Financial Histories Aligned by IPO Year

- Cash Uses of Pre-IPO Capital

- Market Windows and Organization Ecology

- Entrepreneurial Profit

- Market Research Forecasting Uncertainties

- Dominant Design Examples

- Synoptics and 3Com Analysis

- Data Communications Firm Interrelationships

- Data Communications Sector Income Statements

- Networking Sector Income Statements

- Networking Market-Structure Analysis

- Selection Pressures in Networking

- Investment in Innovation by Data Communications and Networking Firms

- Internetworking Sector Income Statements

- Bolt Beranek & Newman (BBN) documents

- Manley Irwin Papers

- Market Analysis

- Abramson, Norm

- Bachman, Charles

- Baran, Paul

- Bass, Charlie

- Bell, Gordon

- Bingham, John

- Botwinick, Edward

- Carrico, Bill

- Chu, Wesley

- Clark, Dave

- Clark, Wesley

- Crocker, Steve

- Dalal, Yogen

- Dambrackas, Bill

- Davidson, John

- Davies, Donald

- Donnan, Robert

- Estrin, Judy

- Evans, Roger

- Farber, David

- Fernandez, Manny

- Forkish, Robbie

- Forney, David

- Frank, Howard

- Frankel, Steve

- Graube, Maris

- Grumbles, George

- Heafner, John

- Heart, Frank

- Holsinger, Jerry

- Huffaker, Craig

- Hunt, Bruce

- Johnson, Johnny

- Jordan, Jim

- Kahn, Robert

- Kaufman, Phil

- Kinney, Matt

- Kleinrock, Leonard

- Krause, Bill

- Krechmer, Ken

- LaBarre, Lee & Brusil, Paul

- Licklider, J.C.R.

- Liddle, David

- Loughry, Don

- MacLean, Audrey

- Maxwell, Kim

- McDowell, Jerry

- Metcalfe, Robert

- Miller, Ken

- Mulvenna, Jerry

- Nordin, Bert

- Norred, Bill

- Nyborg, Phil

- Pliner, Michael

- Pogran, Ken

- Postel, Jon

- Pouzin, Louis

- Rekhi, Kanwal

- Roberts, Larry

- Rosenthal, Robert

- Saltzer, Jerry

- Salwen, Howard

- Severino, Paul

- Slomin, Mike

- Smith, Bruce

- Smith, Mark

- Smith, Robert & Thompson, Thomas

- Strassburg, Bernard

- Taylor, Robert

- Ungermann, Ralph

- Warmenhoven, Dan

- Wecker, Stuart

- White, James

- Wiggins, Robert

- Wilkes, Art

- Zimmerman, Hubert

A brief history of computers

by Chris Woodford . Last updated: January 19, 2023.

Computers truly came into their own as great inventions in the last two decades of the 20th century. But their history stretches back more than 2500 years to the abacus: a simple calculator made from beads and wires, which is still used in some parts of the world today. The difference between an ancient abacus and a modern computer seems vast, but the principle (making repeated calculations more quickly than the human brain) is exactly the same.

Read on to learn more about the history of computers, or take a look at our article on how computers work.

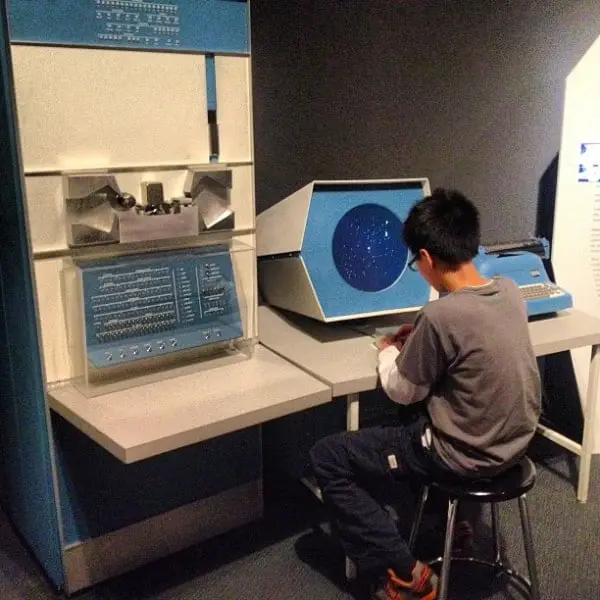

Photo: A model of one of the world's first computers (the Difference Engine invented by Charles Babbage) at the Computer History Museum in Mountain View, California, USA. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

Cogs and Calculators

It is a measure of the brilliance of the abacus, invented in the Middle East circa 500 BC, that it remained the fastest form of calculator until the middle of the 17th century. Then, in 1642, aged only 18, French scientist and philosopher Blaise Pascal (1623–1662) invented the first practical mechanical calculator, the Pascaline, to help his tax-collector father do his sums. The machine had a series of interlocking cogs (gear wheels with teeth around their outer edges) that could add and subtract decimal numbers. Several decades later, in 1671, German mathematician and philosopher Gottfried Wilhelm Leibniz (1646–1716) came up with a similar but more advanced machine. Instead of using cogs, it had a "stepped drum" (a cylinder with teeth of increasing length around its edge), an innovation that survived in mechanical calculators for 300 years. The Leibniz machine could do much more than Pascal's: as well as adding and subtracting, it could multiply, divide, and work out square roots. Another pioneering feature was the first memory store or "register."

Apart from developing one of the world's earliest mechanical calculators, Leibniz is remembered for another important contribution to computing: he was the man who invented binary code, a way of representing any decimal number using only the two digits zero and one. Although Leibniz made no use of binary in his own calculator, it set others thinking. In 1854, well over a century after Leibniz had died, Englishman George Boole (1815–1864) used the idea to invent a new branch of mathematics called Boolean algebra. [1] In modern computers, binary code and Boolean algebra allow computers to make simple decisions by comparing long strings of zeros and ones. But, in the 19th century, these ideas were still far ahead of their time. It would take another 50–100 years for mathematicians and computer scientists to figure out how to use them (find out more in our articles about calculators and logic gates).
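To make the idea of binary code and Boolean comparison concrete, here is a minimal Python sketch. It is an illustration only, not anything Leibniz or Boole wrote: the function names `to_binary` and `bits_equal` are invented for this example. The first function converts a decimal number to binary by repeated division by two; the second compares two strings of zeros and ones using nothing but the Boolean operations AND, OR, and NOT.

```python
def to_binary(n: int) -> str:
    """Return the binary digits of a non-negative whole number."""
    if n < 0:
        raise ValueError("only non-negative numbers are handled in this sketch")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next binary digit
        n //= 2                    # move on to the next power of two
    return "".join(reversed(digits))


def bits_equal(a: str, b: str) -> bool:
    """Decide whether two bit strings match, using only AND, OR, and NOT."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)  # pad the shorter one with zeros
    all_same = True
    for x, y in zip(a, b):
        p, q = (x == "1"), (y == "1")
        # Two bits agree when both are one or both are zero.
        this_pair_same = (p and q) or ((not p) and (not q))
        all_same = all_same and this_pair_same
    return all_same


print(to_binary(13))                   # the decimal number 13 in binary
print(bits_equal(to_binary(13), "1101"))
```

A "simple decision" in this sense is just the final true-or-false answer produced by combining many one-bit comparisons.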

Artwork: Pascaline: Two details of Blaise Pascal's 17th-century calculator. Left: The "user interface": the part where you dial in numbers you want to calculate. Right: The internal gear mechanism. Picture courtesy of US Library of Congress.

Engines of Calculation

Neither the abacus nor the mechanical calculators constructed by Pascal and Leibniz really qualified as computers. A calculator is a device that makes it quicker and easier for people to do sums, but it needs a human operator. A computer, on the other hand, is a machine that can operate automatically, without any human help, by following a series of stored instructions called a program (a kind of mathematical recipe). Calculators evolved into computers when people devised ways of making entirely automatic, programmable calculators.

Photo: Punched cards: Herman Hollerith perfected the way of using punched cards and paper tape to store information and feed it into a machine. Here's a drawing from his 1889 patent Art of Compiling Statistics (US Patent #395,782), showing how a strip of paper (yellow) is punched with different patterns of holes (orange) that correspond to statistics gathered about people in the US census. Picture courtesy of US Patent and Trademark Office.

The first person to attempt this was a rather obsessive, notoriously grumpy English mathematician named Charles Babbage (1791–1871). Many regard Babbage as the "father of the computer" because his machines had an input (a way of feeding in numbers), a memory (something to store these numbers while complex calculations were taking place), a processor (the number-cruncher that carried out the calculations), and an output (a printing mechanism): the same basic components shared by all modern computers. During his lifetime, Babbage never completed a single one of the hugely ambitious machines that he tried to build. That was no surprise. Each of his programmable "engines" was designed to use tens of thousands of precision-made gears. It was like a pocket watch scaled up to the size of a steam engine, a Pascal or Leibniz machine magnified a thousand-fold in dimensions, ambition, and complexity. For a time, the British government financed Babbage to the tune of £17,000, then an enormous sum. But when Babbage pressed the government for more money to build an even more advanced machine, they lost patience and pulled out. Babbage was more fortunate in receiving help from Augusta Ada Byron (1815–1852), Countess of Lovelace, daughter of the poet Lord Byron. An enthusiastic mathematician, she helped to refine Babbage's ideas for making his machine programmable, and this is why she is still, sometimes, referred to as the world's first computer programmer. [2] Little of Babbage's work survived after his death. But when, by chance, his notebooks were rediscovered in the 1930s, computer scientists finally appreciated the brilliance of his ideas. Unfortunately, by then, most of these ideas had already been reinvented by others.

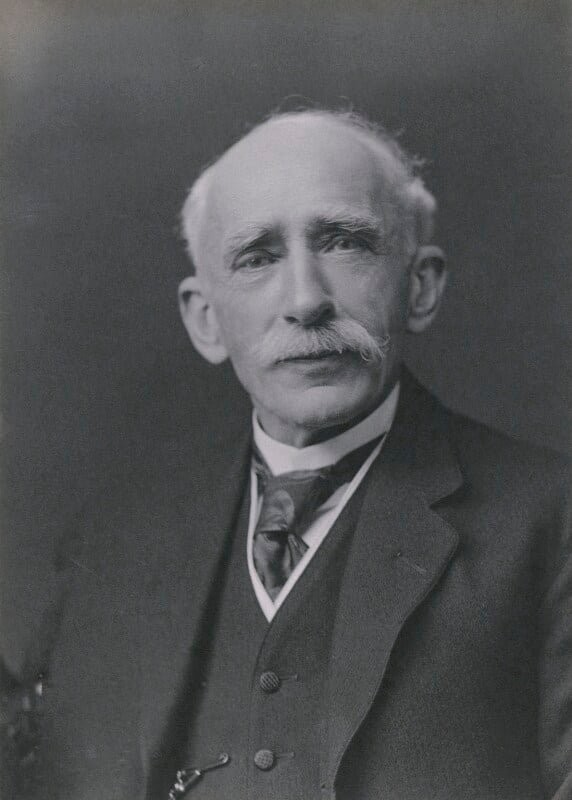

Artwork: Charles Babbage (1791–1871). Picture from The Illustrated London News, 1871, courtesy of US Library of Congress.

Babbage had intended that his machine would take the drudgery out of repetitive calculations. Originally, he imagined it would be used by the army to compile the tables that helped their gunners to fire cannons more accurately. Toward the end of the 19th century, other inventors were more successful in their effort to construct "engines" of calculation. American statistician Herman Hollerith (1860–1929) built one of the world's first practical calculating machines, which he called a tabulator, to help compile census data. Then, as now, a census was taken each decade but, by the 1880s, the population of the United States had grown so much through immigration that a full-scale analysis of the data by hand was taking seven and a half years. The statisticians soon figured out that, if trends continued, they would run out of time to compile one census before the next one fell due. Fortunately, Hollerith's tabulator was an amazing success: it tallied the entire census in only six weeks and completed the full analysis in just two and a half years. Soon afterward, Hollerith realized his machine had other applications, so he set up the Tabulating Machine Company in 1896 to manufacture it commercially. A few years later, it changed its name to the Computing-Tabulating-Recording (C-T-R) company and then, in 1924, acquired its present name: International Business Machines (IBM).

Photo: Keeping count: Herman Hollerith's late-19th-century census machine (blue, left) could process 12 separate bits of statistical data each minute. Its compact 1940 replacement (red, right), invented by Eugene M. La Boiteaux of the Census Bureau, could work almost five times faster. Photo by Harris & Ewing courtesy of US Library of Congress.

Bush and the bomb

Photo: Dr Vannevar Bush (1890–1974). Picture by Harris & Ewing, courtesy of US Library of Congress.

The history of computing remembers colorful characters like Babbage, but others who played important (if supporting) roles are less well known. At the time when C-T-R was becoming IBM, the world's most powerful calculators were being developed by US government scientist Vannevar Bush (1890–1974). In 1925, Bush made the first of a series of unwieldy contraptions with equally cumbersome names: the New Recording Product Integraph Multiplier. Later, he built a machine called the Differential Analyzer, which used gears, belts, levers, and shafts to represent numbers and carry out calculations in a very physical way, like a gigantic mechanical slide rule. Bush's ultimate calculator was an improved machine named the Rockefeller Differential Analyzer, assembled in 1935 from 320 km (200 miles) of wire and 150 electric motors. Machines like these were known as analog calculators: analog because they stored numbers in a physical form (as so many turns on a wheel or twists of a belt) rather than as digits. Although they could carry out incredibly complex calculations, it took several days of wheel cranking and belt turning before the results finally emerged.

Impressive machines like the Differential Analyzer were only one of several outstanding contributions Bush made to 20th-century technology. Another came as the teacher of Claude Shannon (1916–2001), a brilliant mathematician who figured out how electrical circuits could be linked together to process binary code with Boolean algebra (a way of comparing binary numbers using logic) and thus make simple decisions. During World War II, President Franklin D. Roosevelt appointed Bush first as chairman of the US National Defense Research Committee and then as director of the Office of Scientific Research and Development (OSRD). In this capacity, he was in charge of the Manhattan Project, the secret $2-billion initiative that led to the creation of the atomic bomb. One of Bush's final wartime contributions was to sketch out, in 1945, an idea for a memory-storing and sharing device called Memex that would later inspire Tim Berners-Lee to invent the World Wide Web. [3] Few outside the world of computing remember Vannevar Bush today, but what a legacy! As a father of the digital computer, an overseer of the atom bomb, and an inspiration for the Web, Bush played a pivotal role in three of the 20th century's most far-reaching technologies.

Photo: "A gigantic mechanical slide rule": A differential analyzer pictured in 1938. Picture courtesy of and © University of Cambridge Computer Laboratory, published with permission via Wikimedia Commons under a Creative Commons (CC BY 2.0) licence.

Turing—tested

The first modern computers.

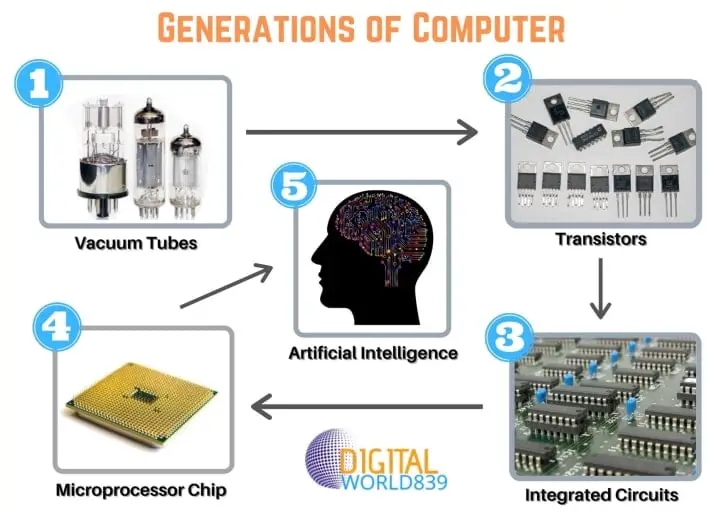

The World War II years were a crucial period in the history of computing, when powerful gargantuan computers began to appear. Just before the outbreak of the war, in 1938, German engineer Konrad Zuse (1910–1995) constructed his Z1, the world's first programmable binary computer, in his parents' living room. [4] The following year, American physicist John Atanasoff (1903–1995) and his assistant, electrical engineer Clifford Berry (1918–1963), built a more elaborate binary machine that they named the Atanasoff-Berry Computer (ABC). It was a great advance: 1000 times more accurate than Bush's Differential Analyzer. These were the first machines that used electrical switches to store numbers: when a switch was "off", it stored the number zero; flipped over to its other, "on", position, it stored the number one. Hundreds or thousands of switches could thus store a great many binary digits (although binary is much less efficient in this respect than decimal, since it takes up to ten binary digits to store a three-digit decimal number). These machines were digital computers: unlike analog machines, which stored numbers using the positions of wheels and rods, they stored numbers as digits.
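The trade-off between binary and decimal storage is easy to check for yourself. The short Python sketch below is purely illustrative (the function names `switches_to_number` and `switches_needed` are made up for this example and have nothing to do with how the Z1 or the ABC actually worked). It treats a row of on/off switches as a binary number and counts how many switches are needed to hold the largest number with a given count of decimal digits.

```python
from typing import Sequence


def switches_to_number(switches: Sequence[bool]) -> int:
    """Read a row of switches (True = on = 1, False = off = 0) as a binary number."""
    value = 0
    for is_on in switches:
        # Shift what we have one binary place left, then add the new digit.
        value = value * 2 + (1 if is_on else 0)
    return value


def switches_needed(decimal_digits: int) -> int:
    """Count the switches needed for the largest number with this many decimal digits."""
    largest = 10 ** decimal_digits - 1   # e.g. 999 for three decimal digits
    return largest.bit_length()          # built-in count of binary digits


print(switches_to_number([True, False, True]))  # on, off, on
for d in (1, 2, 3):
    print(d, "decimal digits need", switches_needed(d), "switches")
```

Each extra decimal digit costs a little over three extra switches, which is why early binary machines needed so many of them.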

The first large-scale digital computer of this kind appeared in 1944 at Harvard University, built by mathematician Howard Aiken (1900–1973). Sponsored by IBM, it was variously known as the Harvard Mark I or the IBM Automatic Sequence Controlled Calculator (ASCC). A giant of a machine, stretching 15 m (50 ft) in length, it was like a huge mechanical calculator built into a wall. It must have sounded impressive, because it stored and processed numbers using "clickety-clack" electromagnetic relays (electrically operated magnets that automatically switched lines in telephone exchanges), no fewer than 3304 of them. Impressive they may have been, but relays suffered from several problems: they were large (that's why the Harvard Mark I had to be so big); they needed quite hefty pulses of power to make them switch; and they were slow (it took time for a relay to flip from "off" to "on" or from 0 to 1).

Photo: An analog computer being used in military research in 1949. Picture courtesy of NASA on the Commons (where you can download a larger version).

Most of the machines developed around this time were intended for military purposes. Like Babbage's never-built mechanical engines, they were designed to calculate artillery firing tables and chew through the other complex chores that were then the lot of military mathematicians. During World War II, the military co-opted thousands of the best scientific minds: recognizing that science would win the war, Vannevar Bush's Office of Scientific Research and Development employed 10,000 scientists from the United States alone. Things were very different in Germany. When Konrad Zuse offered to build his Z2 computer to help the army, they couldn't see the need—and turned him down.

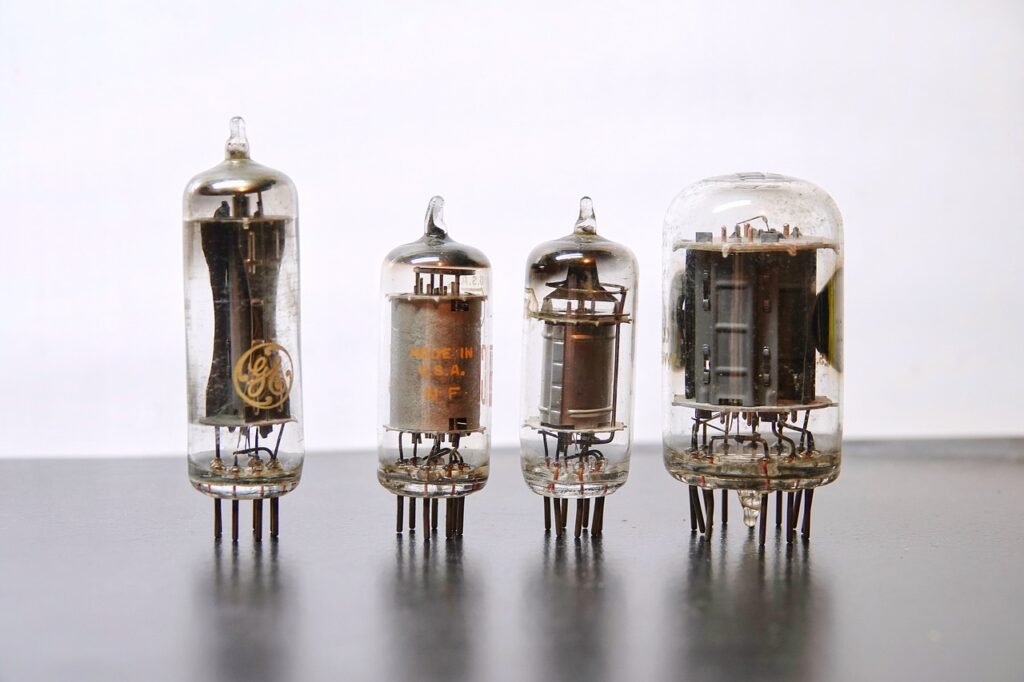

On the Allied side, great minds began to make great breakthroughs. In 1943, a team of mathematicians based at Bletchley Park near London, England (including Alan Turing) built a computer called Colossus to help them crack secret German codes. Colossus was the first fully electronic computer. Instead of relays, it used a better form of switch known as a vacuum tube (also known, especially in Britain, as a valve). The vacuum tube, each one about as big as a person's thumb (earlier ones were very much bigger) and glowing red hot like a tiny electric light bulb, had been invented in 1906 by Lee de Forest (1873–1961), who named it the Audion. This breakthrough earned de Forest his nickname as "the father of radio" because the first major use of vacuum tubes was in radio receivers, where they amplified weak incoming signals so people could hear them more clearly. [5] In computers such as the ABC and Colossus, vacuum tubes found an alternative use as faster and more compact switches.

Just like the codes it was trying to crack, Colossus was top-secret and its existence wasn't confirmed until after the war ended. As far as most people were concerned, vacuum tubes were pioneered by a more visible computer that appeared in 1946: the Electronic Numerical Integrator And Computer (ENIAC). The ENIAC's inventors, two scientists from the University of Pennsylvania, John Mauchly (1907–1980) and J. Presper Eckert (1919–1995), were originally inspired by Bush's Differential Analyzer; years later Eckert recalled that ENIAC was the "descendant of Dr Bush's machine." But the machine they constructed was far more ambitious. It contained nearly 18,000 vacuum tubes (nine times as many as Colossus), was around 24 m (80 ft) long, and weighed almost 30 tons. ENIAC is generally recognized as the world's first fully electronic, general-purpose, digital computer. Colossus might have qualified for this title too, but it was designed purely for one job (code-breaking); since it couldn't store a program, it couldn't easily be reprogrammed to do other things.

Photo: Sir Maurice Wilkes (left), his collaborator William Renwick, and the early EDSAC-1 electronic computer they built in Cambridge, pictured around 1947/8. Picture courtesy of and © University of Cambridge Computer Laboratory, published with permission via Wikimedia Commons under a Creative Commons (CC BY 2.0) licence.

ENIAC was just the beginning. Its two inventors formed the Eckert-Mauchly Computer Corporation in the late 1940s. Working with a brilliant Hungarian mathematician, John von Neumann (1903–1957), who was based at Princeton University, they then designed a better machine called EDVAC (Electronic Discrete Variable Automatic Computer). In a key piece of work, von Neumann helped to define how the machine stored and processed its programs, laying the foundations for how all modern computers operate. [6] After EDVAC, Eckert and Mauchly developed UNIVAC 1 (UNIVersal Automatic Computer) in 1951. They were helped in this task by a young, largely unknown American mathematician and Naval reservist named Grace Murray Hopper (1906–1992), who had originally been employed by Howard Aiken on the Harvard Mark I. Like Herman Hollerith's tabulator over 50 years before, UNIVAC 1 was used for processing data from the US census. It was then manufactured for other users and became the world's first large-scale commercial computer.

Machines like Colossus, the ENIAC, and the Harvard Mark I compete for significance and recognition in the minds of computer historians. Which one was truly the first great modern computer? All of them and none: these—and several other important machines—evolved our idea of the modern electronic computer during the key period between the late 1930s and the early 1950s. Among those other machines were pioneering computers put together by English academics, notably the Manchester/Ferranti Mark I, built at Manchester University by Frederic Williams (1911–1977) and Thomas Kilburn (1921–2001), and the EDSAC (Electronic Delay Storage Automatic Calculator), built by Maurice Wilkes (1913–2010) at Cambridge University. [7]

Photo: Control panel of the UNIVAC 1, the world's first large-scale commercial computer. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

The microelectronic revolution

Vacuum tubes were a considerable advance on relay switches, but machines like the ENIAC were notoriously unreliable. The modern term for a problem that holds up a computer program is a "bug." Popular legend has it that this word entered the vocabulary of computer programmers sometime in the 1950s when moths, attracted by the glowing lights of vacuum tubes, flew inside machines like the ENIAC, caused a short circuit, and brought work to a juddering halt. But there were other problems with vacuum tubes too. They consumed enormous amounts of power: the ENIAC used about 2000 times as much electricity as a modern laptop. And they took up huge amounts of space. Military needs were driving the development of machines like the ENIAC, but the sheer size of vacuum tubes had now become a real problem. ABC had used 300 vacuum tubes, Colossus had 2000, and the ENIAC had 18,000. The ENIAC's designers had boasted that its calculating speed was "at least 500 times as great as that of any other existing computing machine." But developing computers that were an order of magnitude more powerful still would have needed hundreds of thousands or even millions of vacuum tubes—which would have been far too costly, unwieldy, and unreliable. So a new technology was urgently required.

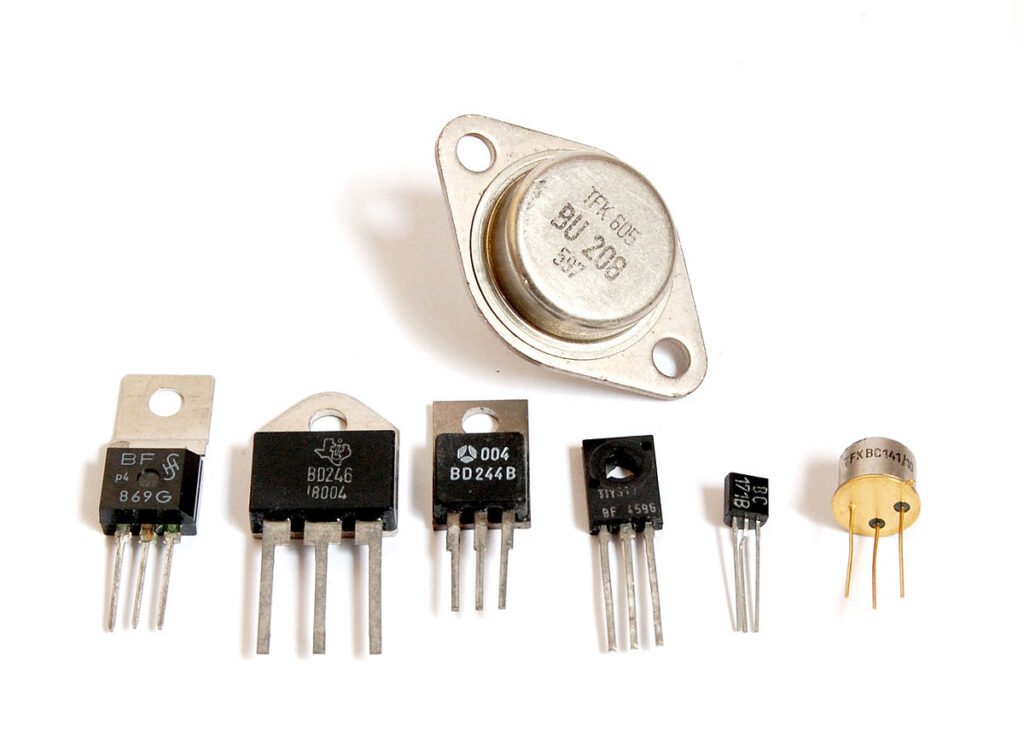

The solution appeared in 1947 thanks to three physicists working at Bell Telephone Laboratories (Bell Labs). John Bardeen (1908–1991), Walter Brattain (1902–1987), and William Shockley (1910–1989) were then helping Bell to develop new technology for the American public telephone system, so the electrical signals that carried phone calls could be amplified more easily and carried further. Shockley, who was leading the team, believed he could use semiconductors (materials such as germanium and silicon that allow electricity to flow through them only when they've been treated in special ways) to make a better form of amplifier than the vacuum tube. When his early experiments failed, he set Bardeen and Brattain to work on the task for him. Eventually, in December 1947, they created a new form of amplifier that became known as the point-contact transistor. Bell Labs credited Bardeen and Brattain with the transistor and awarded them a patent. This enraged Shockley and prompted him to invent an even better design, the junction transistor, which has formed the basis of most transistors ever since.

Like vacuum tubes, transistors could be used as amplifiers or as switches. But they had several major advantages. They were a fraction the size of vacuum tubes (typically about as big as a pea), used no power at all unless they were in operation, and were virtually 100 percent reliable. The transistor was one of the most important breakthroughs in the history of computing and it earned its inventors the world's greatest science prize, the 1956 Nobel Prize in Physics. By that time, however, the three men had already gone their separate ways. John Bardeen had begun pioneering research into superconductivity, which would earn him a second Nobel Prize in 1972. Walter Brattain moved to another part of Bell Labs.

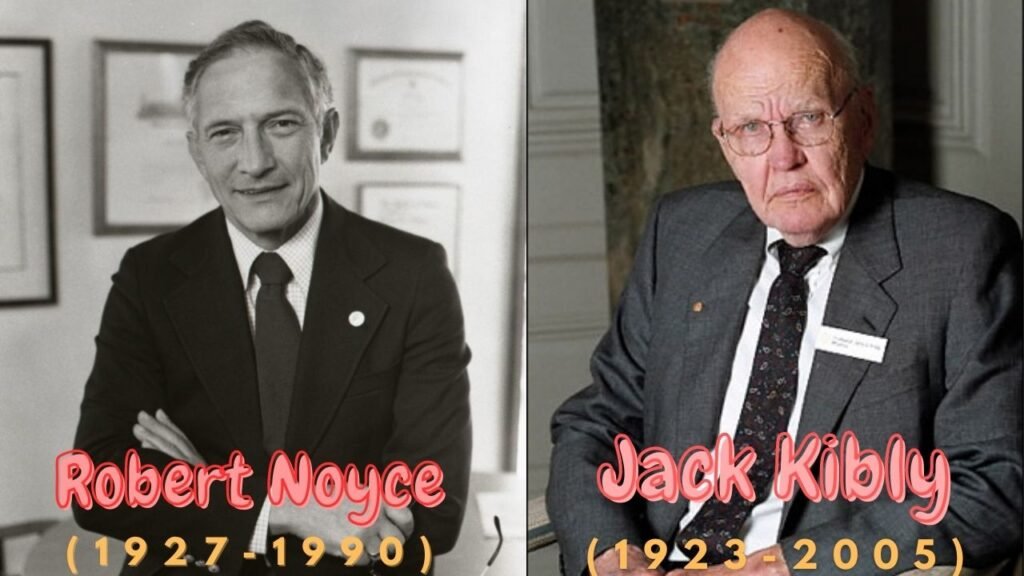

William Shockley decided to stick with the transistor, eventually forming his own corporation to develop it further. His decision would have extraordinary consequences for the computer industry. With a small amount of capital, Shockley set about hiring the best brains he could find in American universities, including young electrical engineer Robert Noyce (1927–1990) and research chemist Gordon Moore (1929–2023). It wasn't long before Shockley's idiosyncratic and bullying management style upset his workers. In 1957, eight of them (including Noyce and Moore) left Shockley Transistor to found a company of their own, Fairchild Semiconductor, just down the road. Thus began the growth of "Silicon Valley," the part of California centered on Palo Alto, where many of the world's leading computer and electronics companies have been based ever since. [8]

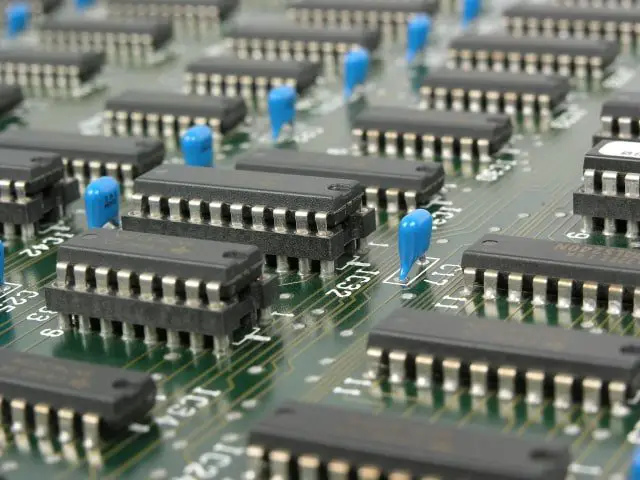

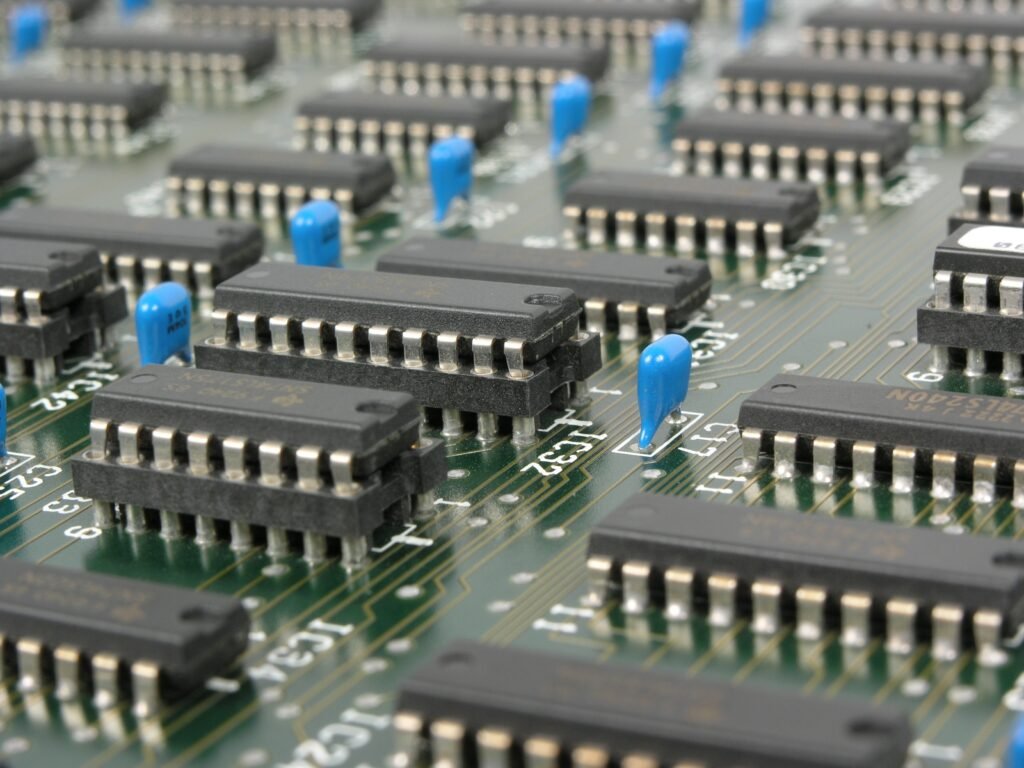

It was in Fairchild's California building that the next breakthrough occurred, although, somewhat curiously, it also happened at exactly the same time in the Dallas laboratories of Texas Instruments. In Dallas, a young engineer from Kansas named Jack Kilby (1923–2005) was considering how to improve the transistor. Although transistors were a great advance on vacuum tubes, one key problem remained. Machines that used thousands of transistors still had to be hand wired to connect all these components together. That process was laborious, costly, and error prone. Wouldn't it be better, Kilby reflected, if many transistors could be made in a single package? This prompted him to invent the "monolithic" integrated circuit (IC), a collection of transistors and other components that could be manufactured all at once, in a block, on the surface of a semiconductor. Kilby's invention was another step forward, but it also had a drawback: the components in his integrated circuit still had to be connected by hand. While Kilby was making his breakthrough in Dallas, unknown to him, Robert Noyce was perfecting almost exactly the same idea at Fairchild in California. Noyce went one better, however: he found a way to include the connections between components in an integrated circuit, thus automating the entire process.

Photo: An integrated circuit from the 1980s. This is an EPROM chip (effectively a forerunner of flash memory, which you could only erase with a blast of ultraviolet light).

Mainframes, minis, and micros

Photo: An IBM 704 mainframe pictured at NASA in 1958. Designed by Gene Amdahl, this scientific number cruncher was the successor to the 701 and helped pave the way to arguably the most important IBM computer of all time, the System/360, which Amdahl also designed. Photo courtesy of NASA.

Photo: The control panel of DEC's classic 1965 PDP-8 minicomputer. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

Integrated circuits, as much as transistors, helped to shrink computers during the 1960s. In 1943, IBM boss Thomas Watson had reputedly quipped: "I think there is a world market for about five computers." Just two decades later, the company and its competitors had installed around 25,000 large computer systems across the United States. As the 1960s wore on, integrated circuits became increasingly sophisticated and compact. Soon, engineers were speaking of large-scale integration (LSI), in which hundreds of components could be crammed onto a single chip, and then very-large-scale integration (VLSI), in which the same chip could contain thousands of components.

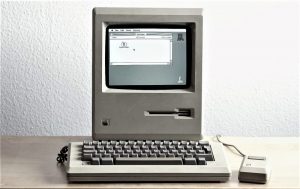

The logical conclusion of all this miniaturization was that, someday, someone would be able to squeeze an entire computer onto a chip. In 1968, Robert Noyce and Gordon Moore had left Fairchild to establish a new company of their own. With integration very much in their minds, they called it Integrated Electronics or Intel for short. Originally they had planned to make memory chips, but when the company landed an order to make chips for a range of pocket calculators, history headed in a different direction. A couple of their engineers, Federico Faggin (1941–) and Marcian Edward (Ted) Hoff (1937–), realized that instead of making a range of specialist chips for a range of calculators, they could make a universal chip that could be programmed to work in them all. Thus was born the general-purpose, single chip computer or microprocessor—and that brought about the next phase of the computer revolution.

Personal computers

By 1974, Intel had launched a popular microprocessor known as the 8080 and computer hobbyists were soon building home computers around it. The first was the MITS Altair 8800, built by Ed Roberts. With its front panel covered in red LED lights and toggle switches, it was a far cry from modern PCs and laptops. Even so, it sold by the thousand and earned Roberts a fortune. The Altair inspired a Californian electronics wizard named Steve Wozniak (1950–) to develop a computer of his own. "Woz" is often described as the hacker's "hacker": a technically brilliant and highly creative engineer who pushed the boundaries of computing largely for his own amusement. In the mid-1970s, he was working at the Hewlett-Packard computer company in California, and spending his free time tinkering away as a member of the Homebrew Computer Club in the Bay Area.

After seeing the Altair, Woz used a 6502 microprocessor (made by an Intel rival, MOS Technology) to build a better home computer of his own: the Apple I. When he showed off his machine to his colleagues at the club, they all wanted one too. One of his friends, Steve Jobs (1955–2011), persuaded Woz that they should go into business making the machine. Woz agreed so, famously, they set up Apple Computer Corporation in a garage belonging to Jobs' parents. After selling 175 of the Apple I for the devilish price of $666.66, Woz built a much better machine called the Apple ][ (pronounced "Apple Two"). While the Altair 8800 looked like something out of a science lab, and the Apple I was little more than a bare circuit board, the Apple ][ took its inspiration from such things as Sony televisions and stereos: it had a neat and friendly looking cream plastic case. Launched in April 1977, it was the world's first easy-to-use home "microcomputer." Soon home users, schools, and small businesses were buying the machine in their tens of thousands, at $1298 a time. Two things turned the Apple ][ into a really credible machine for small firms: a disk drive unit, launched in 1978, which made it easy to store data; and a spreadsheet program called VisiCalc, which gave Apple users the ability to analyze that data. In just two and a half years, Apple sold around 50,000 of the machine, quickly accelerating out of Jobs' garage to become one of the world's biggest companies. Dozens of other microcomputers were launched around this time, including the TRS-80 from Radio Shack (Tandy in the UK) and the Commodore PET. [9]

Apple's success selling to businesses came as a great shock to IBM and the other big companies that dominated the computer industry. It didn't take a VisiCalc spreadsheet to figure out that, if the trend continued, upstarts like Apple would undermine IBM's immensely lucrative business market selling "Big Blue" computers. In 1980, IBM finally realized it had to do something and launched a highly streamlined project to save its business. One year later, it released the IBM Personal Computer (PC), based on an Intel 8088 microprocessor, which rapidly reversed the company's fortunes and stole the market back from Apple.

The PC was successful essentially for one reason. All the dozens of microcomputers that had been launched in the 1970s—including the Apple ][—were incompatible. All used different hardware and worked in different ways. Most were programmed using a simple, English-like language called BASIC, but each one used its own flavor of BASIC, which was tied closely to the machine's hardware design. As a result, programs written for one machine would generally not run on another one without a great deal of conversion. Companies who wrote software professionally typically wrote it just for one machine and, consequently, there was no software industry to speak of.

In 1976, Gary Kildall (1942–1994), a teacher and computer scientist, and one of the founders of the Homebrew Computer Club, had figured out a solution to this problem. Kildall wrote an operating system (a computer's fundamental control software) called CP/M that acted as an intermediary between the user's programs and the machine's hardware. With a stroke of genius, Kildall realized that all he had to do was rewrite CP/M so it worked on each different machine. Then all those machines could run identical user programs—without any modification at all—inside CP/M. That would make all the different microcomputers compatible at a stroke. By the early 1980s, Kildall had become a multimillionaire through the success of his invention: the first personal computer operating system. Naturally, when IBM was developing its personal computer, it approached him hoping to put CP/M on its own machine. Legend has it that Kildall was out flying his personal plane when IBM called, so missed out on one of the world's greatest deals. But the truth seems to have been that IBM wanted to buy CP/M outright for just $200,000, while Kildall recognized his product was worth millions more and refused to sell. Instead, IBM turned to a young programmer named Bill Gates (1955–). His then tiny company, Microsoft, rapidly put together an operating system called DOS, based on a product called QDOS (Quick and Dirty Operating System), which they acquired from Seattle Computer Products. Some believe Microsoft and IBM cheated Kildall out of his place in computer history; Kildall himself accused them of copying his ideas. Others think Gates was simply the shrewder businessman. Either way, the IBM PC, powered by Microsoft's operating system, was a runaway success.
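Kildall's idea of an operating system as an intermediary between programs and hardware can be sketched in a few lines of Python. Everything here is hypothetical and invented for illustration: the two "machines," their hardware routines, and the `print_text` call bear no relation to the real CP/M interface. The point is only that the user program is written once, while the thin layer beneath it is rewritten for each machine.

```python
class MachineA:
    """Hypothetical machine whose hardware accepts one character at a time."""
    def __init__(self):
        self.screen = []

    def write_char(self, ch):
        self.screen.append(ch)


class MachineB:
    """Hypothetical machine whose hardware accepts only numeric character codes."""
    def __init__(self):
        self.codes = []

    def emit_code(self, code):
        self.codes.append(code)


class SystemForA:
    """The operating-system layer, rewritten to suit machine A."""
    def __init__(self, machine):
        self.machine = machine

    def print_text(self, text):
        for ch in text:
            self.machine.write_char(ch)


class SystemForB:
    """The same layer, rewritten to suit machine B's different hardware."""
    def __init__(self, machine):
        self.machine = machine

    def print_text(self, text):
        for ch in text:
            self.machine.emit_code(ord(ch))  # translate to what B understands


def user_program(system):
    """An identical user program: it talks only to the system layer."""
    system.print_text("HELLO")


machine_a, machine_b = MachineA(), MachineB()
user_program(SystemForA(machine_a))
user_program(SystemForB(machine_b))
print(machine_a.screen)
print(machine_b.codes)
```

Because `user_program` never touches the hardware directly, supporting a new machine means writing one new system layer rather than converting every program.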

Yet IBM's victory was short-lived. Cannily, Bill Gates had sold IBM the rights to one flavor of DOS (PC-DOS) and retained the rights to a very similar version (MS-DOS) for his own use. When other computer manufacturers, notably Compaq and Dell, started making IBM-compatible (or "cloned") hardware, they too came to Gates for the software. IBM charged a premium for machines that carried its badge, but consumers soon realized that PCs were commodities: they contained almost identical components (an Intel microprocessor, for example) no matter whose name they had on the case. As IBM lost market share, the ultimate victors were Microsoft and Intel, who were soon supplying the software and hardware for almost every PC on the planet. Apple, IBM, and Kildall made a great deal of money, but all failed to capitalize decisively on their early success. [10]

Photo: Personal computers threatened companies making large "mainframes" like this one. Picture courtesy of NASA on the Commons.

The user revolution

Fortunately for Apple, it had another great idea. One of the Apple II's strongest suits was its sheer "user-friendliness." For Steve Jobs, developing truly easy-to-use computers became a personal mission in the early 1980s. What truly inspired him was a visit to PARC (Palo Alto Research Center), a cutting-edge computer laboratory then run as a division of the Xerox Corporation. Xerox had started developing computers in the early 1970s, believing they would make paper (and the highly lucrative photocopiers Xerox made) obsolete. One of PARC's research projects was an advanced $40,000 computer called the Xerox Alto. Unlike most microcomputers launched in the 1970s, which were programmed by typing in text commands, the Alto had a desktop-like screen with little picture icons that could be moved around with a mouse: it was the very first graphical user interface (GUI, pronounced "gooey"), an idea conceived by Alan Kay (1940–) and now used in virtually every modern computer. The Alto borrowed some of its ideas, including the mouse, from 1960s computer pioneer Douglas Engelbart (1925–2013).

Photo: During the 1980s, computers started to converge on the same basic "look and feel," largely inspired by the work of pioneers like Alan Kay and Douglas Engelbart. Photographs in the Carol M. Highsmith Archive, courtesy of US Library of Congress, Prints and Photographs Division.

Back at Apple, Jobs launched his own version of the Alto project to develop an easy-to-use computer called PITS (Person In The Street). This machine became the Apple Lisa, launched in January 1983: the first widely available computer with a GUI desktop. With a retail price of $10,000, over three times the cost of an IBM PC, the Lisa was a commercial flop. But it paved the way for a better, cheaper machine called the Macintosh that Jobs unveiled a year later, in January 1984. With its memorable launch ad for the Macintosh, inspired by George Orwell's novel 1984 and directed by Ridley Scott (director of the dystopic movie Blade Runner), Apple took a swipe at IBM's monopoly, criticizing what it portrayed as the firm's domineering, even totalitarian, approach: Big Blue was really Big Brother. Apple's ad promised a very different vision: "On January 24, Apple Computer will introduce Macintosh. And you'll see why 1984 won't be like '1984'." The Macintosh was a critical success and helped to invent the new field of desktop publishing in the mid-1980s, yet it never came close to challenging IBM's position.

Ironically, Jobs' easy-to-use machine also helped Microsoft to dislodge IBM as the world's leading force in computing. When Bill Gates saw how the Macintosh worked, with its easy-to-use picture-icon desktop, he launched Windows, an upgraded version of his MS-DOS software. Apple saw this as blatant plagiarism and filed a $5.5 billion copyright lawsuit in 1988. Four years later, the case collapsed with Microsoft effectively securing the right to use the Macintosh "look and feel" in all present and future versions of Windows. Microsoft's Windows 95 system, launched three years later, had an easy-to-use, Macintosh-like desktop and MS-DOS running behind the scenes.

Photo: The IBM Blue Gene/P supercomputer at Argonne National Laboratory: one of the world's most powerful computers. Picture courtesy of Argonne National Laboratory published on Wikimedia Commons in 2009 under a Creative Commons Licence.

From nets to the Internet

Standardized PCs running standardized software brought a big benefit for businesses: computers could be linked together into networks to share information. At Xerox PARC in 1973, electrical engineer Bob Metcalfe (1946–) developed a new way of linking computers "through the ether" (empty space) that he called Ethernet. A few years later, Metcalfe left Xerox to form his own company, 3Com, to help companies realize "Metcalfe's Law": computers become more useful the more closely connected they are to other people's computers. As more and more companies explored the power of local area networks (LANs) during the 1980s, it became clear that there were great benefits to be gained by connecting computers over even greater distances, into so-called wide area networks (WANs).

Photo: Computers aren't what they used to be: they're much less noticeable because they're much more seamlessly integrated into everyday life. Some are "embedded" into household gadgets like coffee makers or televisions. Others travel round in our pockets in our smartphones, which are essentially pocket computers that we can program simply by downloading "apps" (applications).

Today, the best-known WAN is the Internet, a global network of individual computers and LANs that links up hundreds of millions of people. The history of the Internet is another story, but it began in the 1960s when four American universities launched a project to connect their computer systems together to make the first WAN. Later, with funding from the Department of Defense, that network became a bigger project called ARPANET (Advanced Research Projects Agency Network). In the mid-1980s, the US National Science Foundation (NSF) launched its own WAN called NSFNET. The convergence of all these networks produced what we now call the Internet later in the 1980s. Shortly afterward, the power of networking gave British computer programmer Tim Berners-Lee (1955–) his big idea: to combine the power of computer networks with the information-sharing idea Vannevar Bush had proposed in 1945. Thus was born the World Wide Web, an easy way of sharing information over a computer network, which made possible the modern age of cloud computing (where anyone can access vast computing power over the Internet without having to worry about where or how their data is processed). It's Tim Berners-Lee's invention that brings you this potted history of computing today!

And now where?

Find out more

On this site

- Supercomputers: How do the world's most powerful computers work?

Other websites

There are lots of websites covering computer history. Here are just a few favorites worth exploring!

- The Computer History Museum: The website of the world's biggest computer museum in California.

- The Computing Age: A BBC special report into computing past, present, and future.

- Charles Babbage at the London Science Museum: Lots of information about Babbage and his extraordinary engines. [Archived via the Wayback Machine]

- IBM History: Many fascinating online exhibits, as well as inside information about the part IBM inventors have played in wider computer history.

- Wikipedia History of Computing Hardware: Covers similar ground to this page.

- Computer history images: A small but interesting selection of photos.

- Transistorized!: The history of the invention of the transistor from PBS.

- Intel Museum: The story of Intel's contributions to computing from the 1970s onward.

There are some superb computer history videos on YouTube and elsewhere; here are three good ones to start you off:

- The Difference Engine: A great introduction to Babbage's Difference Engine from Doron Swade, one of the world's leading Babbage experts.

- The ENIAC: A short Movietone news clip about the completion of the world's first programmable electronic computer.

- A tour of the Computer History Museum: Dag Spicer gives us a tour of the world's most famous computer museum, in California.

Text copyright © Chris Woodford 2006, 2023. All rights reserved. Full copyright notice and terms of use.

First generation of computers

The modern computer era owes much to the great technological advances that took place during World War II. Electronic circuits, vacuum tubes, and capacitors replaced mechanical components, while numerical (digital) calculation replaced analog calculation. The computers and products of this era constitute the so-called first generation of computers.

- Date: 1951 to 1958

- Featured computers: Atanasoff-Berry Computer, Mark I, UNIVAC, ENIAC

What is the first generation of computers?

The first generation of computers appeared in the middle of the 20th century, specifically between 1946 and 1958, a period of great technological advances driven by the search for tools to aid scientific and military work. These computers were notable for their enormous size and for how few organizations could afford to acquire one.

Characteristics of the first generation of computers

The first generation of computers, built in the mid-twentieth century, was the direct forerunner of modern computers. Its main characteristics were large size, high purchase cost, and frequent failures and errors, since the machines were still experimental.

These computers used vacuum tubes to process information, punched cards for data input, output, and programs, and magnetic drums to store data and internal instructions.

The first ones on the market cost approximately $10,000. Because of their size, they consumed a great deal of electricity and tended to overheat, so they required special auxiliary air-conditioning systems. The ENIAC, for example, weighed around 30 tons.

First-generation computers used magnetic drums for data storage; in the second generation these were replaced by ferrite-core memories.

History of the first generation of computers

The first generation of computers has no exact starting point, since it grew out of earlier discoveries and experiments by many different people, but its development properly began in the twentieth century.

Charles Babbage's design for the Analytical Engine introduced a primitive way of giving the machine instructions so that it could perform calculations automatically, along with the idea of data storage. These ideas were incorporated into the design of the ENIAC, the first general-purpose electronic computer to be built. The ENIAC in turn served as the basis for the UNIVAC I, which was the first computer to be manufactured commercially, as well as the first to use a compiler to translate program language into machine language.

The UNIVAC I's main advances were its magnetic tape system, which could be read forward and backward, and its ability to check for errors. Later, the introduction of integrated circuits made possible the first desktop computer in 1974, whose immediate success led to the appearance of the IBM PC in 1981.

Inventors of the first generation of computers

- Howard Aiken (1900–1973) developed the Automatic Sequence Controlled Calculator (ASCC), drawing on Babbage's work on the Analytical Engine. The result was the Mark I (1944), the first electromechanical computer, which took a few tenths of a second to add or subtract, two seconds to multiply two 11-digit numbers, and roughly four seconds to divide.

- Eckert and Mauchly contributed to the development of first-generation computers by forming a private company and building the UNIVAC I, which the Census Bureau used to process the 1950 census. At the time, IBM had a monopoly on punched-card data processing equipment.