Research Paper Classification Using Machine and Deep Learning Techniques




- Open access

- Published: 26 August 2019

Research paper classification systems based on TF-IDF and LDA schemes

- Sang-Woon Kim

- Joon-Min Gil (ORCID: orcid.org/0000-0001-6774-8476)

Human-centric Computing and Information Sciences, volume 9, Article number: 30 (2019)


Abstract

With the rapid advance of computer and information technologies, numerous research papers are published online as well as offline, and as new research fields continue to emerge, users have considerable difficulty finding and categorizing the research papers that interest them. To overcome this limitation, this paper proposes a research paper classification system that clusters research papers into meaningful classes in which papers are very likely to share similar subjects. The proposed system extracts representative keywords from the abstract of each paper and extracts topics by the Latent Dirichlet allocation (LDA) scheme. Then, the K-means clustering algorithm is applied to group the papers by subject, based on the Term frequency-inverse document frequency (TF-IDF) values of each paper.

Introduction

With the rapid advance of computer and information technologies, numerous research papers are published online as well as offline, which makes it difficult for users to search for and categorize papers on a specific subject [ 1 ]. It is therefore desirable that this huge number of research papers be systematically classified by subject so that users can find the papers that interest them easily and conveniently. Finding research papers on a specific topic is typically a time-consuming activity. For example, researchers often spend a long time on the Internet looking for relevant papers, and the search is inefficient because the papers are not grouped by topic or subject for easy and fast access.

The analysis commonly used to classify a huge number of research papers is run on large-scale computing machines without any consideration of big data properties. As the number of research papers continues to grow, it becomes difficult to manage and process them efficiently. Because the relations among the papers to be analyzed and classified are very complex, it is also difficult to identify the subject of each paper quickly and, moreover, hard to group papers with similar subjects accurately in terms of content. There is therefore a need for an automated processing method that classifies such a huge number of research papers quickly and accurately.

The abstract is one of the most important parts of a research paper, as it describes the gist of the paper. It is typically the part that users read next after the title: users tend to read the abstract first to grasp the research direction and summary before reading the body of the paper. The core words of a research paper should therefore be written in the abstract concisely and interestingly. For this reason, in this paper we use the abstracts of research papers as the clue for classifying similar papers quickly and correctly.

To classify a huge number of papers by subject, we propose a paper classification system based on the term frequency-inverse document frequency (TF-IDF) [ 2 , 3 , 4 ] and Latent Dirichlet allocation (LDA) [ 5 ] schemes. The proposed system first constructs a representative keyword dictionary from the keywords that users input and from the topics extracted by LDA. Second, it uses the TF-IDF scheme to extract subject words from the abstracts of papers based on the keyword dictionary. Then, the K-means clustering algorithm [ 6 , 7 , 8 ] is applied to group papers with similar subjects, based on the TF-IDF values of each paper.

To extract subject words from a massive set of papers efficiently, we use the Hadoop Distributed File System (HDFS) [ 9 , 10 ], which can process big data rapidly and stably with high scalability. We also use the map-reduce programming model [ 11 , 12 ] to calculate the TF-IDF values from the abstract of each paper. Moreover, to demonstrate the validity and applicability of the proposed system, we evaluate its performance on actual paper data. As experimental data, we use the titles and abstracts of the papers published in the Future Generation Computer Systems (FGCS) journal [ 13 ] from 1984 to 2017. The experimental results indicate that the proposed system can classify the papers into groups with similar subjects according to the relationship of the keywords extracted from the abstracts.

The remainder of the paper is organized as follows. The “ Related work ” section reviews related work on research paper classification. The “ System flow diagram ” section presents a system flow diagram for our research paper classification system. The “ Paper classification system ” section explains the paper classification system based on TF-IDF and LDA schemes in detail. In the “ Experiments ” section, we carry out experiments to evaluate the performance of the proposed paper classification system. In particular, the Elbow scheme is applied to determine the optimal number of clusters for the K-means clustering algorithm, and the Silhouette scheme is introduced to validate the clustering results. Finally, the “ Conclusion ” section concludes the paper.

Related work

This section briefly reviews the literature on paper classification methods related to the research subject of this paper.

Document classification is directly related to the paper classification addressed in this paper. It is the problem of assigning a document to one or more predefined classes according to a specific criterion or its contents. Representative application areas of document classification are as follows:

News article classification: News articles are generally massive in volume because they are issued daily or hourly. There has been much work on automatic news article classification [ 14 ].

Opinion mining: It is very important to analyze the information on opinions, sentiment, and subjectivity in documents on a specific topic [ 15 ]. Analysis results can be applied to various areas such as website evaluation, reviews of online news articles, and opinions in blogs or social networking services (SNS) [ 16 ].

Email classification and spam filtering: This area can be treated as a document classification problem not only for spam filtering but also for classifying messages and sorting them into specific folders [ 17 ].

A wide variety of classification techniques have been applied to document classification [ 18 ]. Automatic document classification can be divided into two methods: supervised and unsupervised [ 19 , 20 , 21 ]. In supervised classification, documents are classified on the basis of supervised learning methods. These methods generally analyze training data (i.e., predefined input–output pairs) and produce an inferred function that can be used to map other examples. In contrast, unsupervised classification groups documents based on the similarity among them, without any predefined criterion. Various algorithms have been developed for automatic document classification, such as the Naïve Bayes classifier, TF-IDF, Support Vector Machine (SVM), K-Nearest Neighbors (KNN), and Decision Tree [ 22 , 23 ].

Meanwhile, among works related to paper classification, Bravo-Alcobendas et al. [ 24 ] proposed a document clustering algorithm that extracts the characteristics of documents by non-negative matrix factorization (NMF) and groups documents with the K-means clustering algorithm. This work focuses mainly on reducing the high-dimensional vector formed by word counts in documents, not on a sophisticated classification in terms of a variety of subject words.

In [ 25 ], Taheriyan et al. proposed a paper classification method based on a relation graph that uses interrelationships among papers, such as citations, authors, and common references. The method performs better as the links among papers increase. However, it focuses mainly on interrelationships among papers without considering their contents or subjects, so papers can be misclassified with respect to subject.

In [ 26 ], Hanyurwimfura et al. proposed a paper classification method based on the title and common terms of research papers. In [ 27 ], Nanbo et al. proposed a paper classification method that extracts keywords from the research objectives and background and groups papers on the basis of the extracted keywords. The results these works achieved using important information such as a paper’s subject, objectives, and background were promising. However, they do not consider frequently occurring keywords in paper classification. The paper title, research objectives, and research background provide only limited information, which can lead to inaccurate decisions [ 28 ].

In [ 29 ], Nguyen et al. proposed a paper classification method based on the Bag-of-Words scheme and the KNN algorithm. This method extracts topics from the entire contents of a paper without any consideration of reducing computational complexity. Thus, it suffers from long computation times when the data volume increases sharply.

Unlike the above-mentioned methods, our method uses three kinds of keywords: keywords that users input, keywords extracted from abstracts, and topics extracted by the LDA scheme. These keywords are used to calculate the TF-IDF of each paper, with the aim of reflecting the importance of the keywords in each paper. Then, the K-means clustering algorithm is applied to group papers with similar subjects, based on the TF-IDF values of each paper. Our classification method is designed and implemented on the Hadoop Distributed File System (HDFS) to efficiently process massive collections of research papers that have the characteristics of big data. Moreover, the map-reduce programming model is used to process these papers in parallel. To the best of our knowledge, our work is the first to use the analysis of paper abstracts based on TF-IDF and LDA schemes for paper classification.

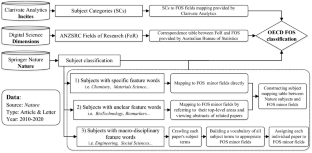

System flow diagram

The paper classification system proposed in this paper consists of four main processes (Fig. 1 ): (1) Crawling, (2) Data Management and Topic Modeling, (3) TF-IDF, and (4) Classification. This section describes a system flow diagram for our paper classification system.

Detailed flows for the system flow diagram shown in Fig. 1 are as follows:

Step 1. It automatically collects the keywords and abstracts of the papers published during a given period. It also preprocesses these data, for example by removing stop words and extracting only nouns.

Step 2. It constructs a keyword dictionary based on the crawled keywords. Because the total number of keywords across all papers is huge, this paper uses only the top-N most frequent keywords.

Step 3. It extracts topics from the crawled abstracts by LDA topic modeling.

Step 4. It calculates the paper length as the number of word occurrences in the abstract of each paper.

Step 5. It calculates a TF value for both the keywords obtained in Step 2 and the topics obtained in Step 3.

Step 6. It calculates an IDF value for both the keywords obtained in Step 2 and the topics obtained in Step 3.

Step 7. It calculates a TF-IDF value for each keyword using the values obtained in Steps 4, 5, and 6.

Step 8. It groups the papers by subject, based on the K-means clustering algorithm.

In the next section, we describe the above steps in detail.

Paper classification system

Crawling of abstract data

The abstract is one of the most important parts of a paper, as it describes the gist of the paper [ 30 ]. After the title, the abstract is typically the part of a paper that users are most likely to read next. That is, users tend to read the abstract first to grasp the research direction and summary before reading the full paper. Accordingly, the core words of a paper should be written concisely and interestingly in the abstract. For this reason, this paper uses abstract data to classify similar papers quickly and correctly.

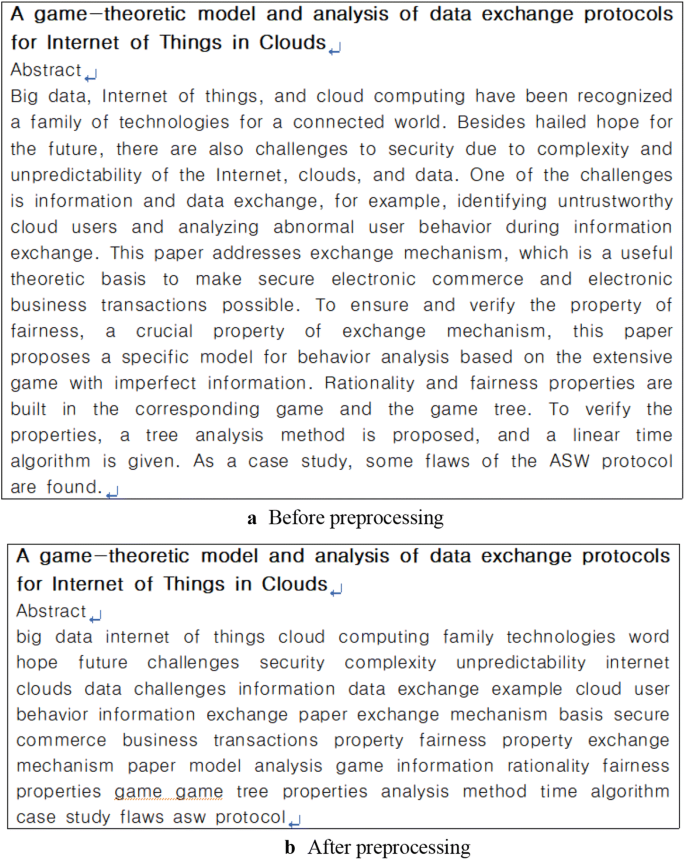

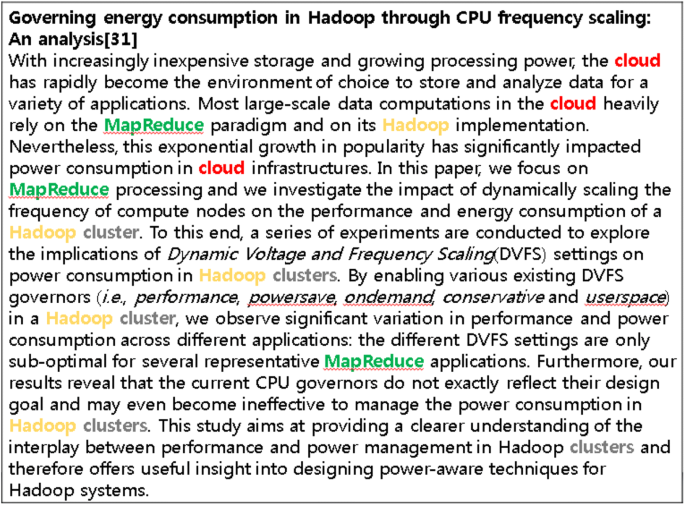

As shown in the crawling step of Fig. 1 , the data crawler collects the abstract and keywords of each paper according to the items checked in the crawling list. It also removes stop words from the crawled abstract data and then extracts only nouns. Because the abstract data are large in volume and produced quickly, they have the typical characteristics of big data. Therefore, this paper manages the abstract data on HDFS and calculates the TF-IDF value of each paper using the map-reduce programming model. Figure 2 shows an illustrative example of abstract data before and after stop-word removal and noun extraction.

Fig. 2 Abstract data before and after preprocessing

After the preprocessing (i.e., the removal of stop words and the extraction of only nouns), the amount of abstract data is greatly reduced. This enhances the processing efficiency of the proposed paper classification system.
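The stop-word removal part of this preprocessing can be sketched in a few lines of Python. This is a minimal illustration rather than the crawler used in the paper: the stop-word list and the function name `remove_stop_words` are hypothetical, and noun extraction is left out because it would additionally require a part-of-speech tagger.

```python
import re
from typing import List

# Hypothetical, deliberately tiny stop-word list; a real system would use a fuller one.
STOP_WORDS = {
    "a", "an", "the", "of", "in", "on", "and", "for",
    "to", "is", "are", "we", "this", "that", "with",
}


def remove_stop_words(abstract: str) -> List[str]:
    """Lowercase the text, split it into word tokens, and drop stop words."""
    # Tokens start with a letter and may contain hyphens (e.g. "map-reduce").
    tokens = re.findall(r"[a-z][a-z\-]*", abstract.lower())
    return [token for token in tokens if token not in STOP_WORDS]


tokens = remove_stop_words("We propose a scheduling scheme for the cloud.")
print(tokens)  # ['propose', 'scheduling', 'scheme', 'cloud']
```

Even on this one-sentence example the token count drops from eight to four, which is the kind of reduction the preprocessing step relies on.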

Managing paper data

The save step in Fig. 1 constructs the keyword dictionary from the abstract and keyword data collected in the crawling step and saves it to HDFS.

To process a large number of keywords simply and efficiently, this paper merges keywords with similar meanings into one representative keyword. We construct 1394 representative keywords from the keywords of all abstracts and build a keyword dictionary from them. However, even these representative keywords require considerable computation time if all of them are used for paper classification. To reduce this cost, we use keyword sets consisting of the 10, 20, and 30 most frequent representative keywords, as shown in Table 1 .
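As a rough sketch of this dictionary construction, the snippet below maps raw keywords to representative keywords and keeps the N most frequent ones. The synonym table `REPRESENTATIVE`, the function name `top_n_keywords`, and the sample data are all hypothetical; the paper does not state how similar keywords were merged.

```python
from collections import Counter

# Hypothetical synonym table: each raw keyword maps to one representative keyword.
REPRESENTATIVE = {
    "cloud": "cloud computing",
    "clouds": "cloud computing",
    "cloud computing": "cloud computing",
    "iot": "internet of things",
    "internet of things": "internet of things",
}


def top_n_keywords(keyword_lists, n):
    """Count representative keywords across papers and return the n most frequent."""
    counts = Counter()
    for keywords in keyword_lists:
        for keyword in keywords:
            key = keyword.strip().lower()
            # Keywords without a synonym entry represent themselves.
            counts[REPRESENTATIVE.get(key, key)] += 1
    return [keyword for keyword, _ in counts.most_common(n)]


papers = [
    ["Cloud", "IoT"],
    ["clouds", "scheduling"],
    ["cloud computing", "internet of things"],
]
print(top_n_keywords(papers, 2))  # ['cloud computing', 'internet of things']
```

Calling the function with n set to 10, 20, or 30 would yield keyword sets of the sizes used in the experiments.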

Topic modeling

Latent Dirichlet allocation (LDA) is a probabilistic model that can extract latent topics from a collection of documents. The basic idea is that documents are represented as random mixtures over latent topics, where each topic is characterized by a distribution over words [ 31 , 32 ].

LDA estimates the topic-word distribution \(P(t|z)\) and the document-topic distribution \(P(z|d)\) from an unlabeled corpus, using Dirichlet priors for the distributions and a fixed number of topics [ 31 , 32 ]. As a result, we obtain \(P(z|d)\) for each document and build the feature vector as

\[ d' = \left( P(z_{1}|d), P(z_{2}|d), \ldots, P(z_{T}|d) \right) \tag{1} \]

where \(T\) is the number of topics.

In this paper, we use the LDA scheme to extract topic sets from the abstract data collected in the crawling step. Three topic sets are extracted, consisting of 10, 20, and 30 topics, respectively. Table 2 shows the topic set with 10 topics and the keywords of each topic. The remaining topic sets with 20 and 30 topics are omitted due to space limitations.

TF-IDF

TF-IDF has been widely used in information retrieval and text mining to evaluate the importance of each word in a collection of documents. In particular, it is used for extracting core words (i.e., keywords) from documents, calculating the degree of similarity among documents, deciding search rankings, and so on.

The TF in TF-IDF measures how often a specific word occurs in a document. Words with a high TF value are important within that document. The DF, on the other hand, indicates in how many documents of the collection a specific word appears; it counts the occurrence of the word across multiple documents, not within a single one. Words with a high DF value carry little importance because they appear in most documents. Accordingly, the IDF, the inverse of the DF, is used to measure the importance of a word across all documents. A high IDF value means that a word is rare across the collection, which increases its importance.

Paper length

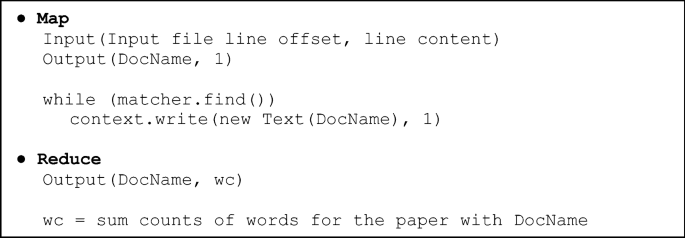

The paper length step of Fig. 1 calculates the total number of word occurrences in a given abstract after splitting it into words using white space as the delimiter. The objective of this step is to prevent TF values from being skewed by differences in abstract length. Figure 3 shows a map-reduce algorithm for the calculation of paper length. In this figure, DocName and wc represent a paper title and a paper length, respectively.

Fig. 3 Map-reduce algorithm for the calculation of paper length
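The map and reduce phases of the paper length calculation can be imitated in plain Python as follows. This is only a single-process sketch of the idea, not the Hadoop job from the paper; the function names `map_paper_length` and `reduce_paper_length` and the sample documents are assumptions made for illustration.

```python
from collections import defaultdict


def map_paper_length(doc_name, abstract):
    """Map phase: emit (DocName, 1) for every whitespace-separated word."""
    for _word in abstract.split():
        yield doc_name, 1


def reduce_paper_length(pairs):
    """Reduce phase: sum the emitted counts per DocName to obtain wc."""
    totals = defaultdict(int)
    for doc_name, count in pairs:
        totals[doc_name] += count
    return dict(totals)


docs = {
    "paper-A": "cloud computing scheduling cloud",
    "paper-B": "big data",
}
pairs = [
    pair
    for name, text in docs.items()
    for pair in map_paper_length(name, text)
]
lengths = reduce_paper_length(pairs)
print(lengths)  # {'paper-A': 4, 'paper-B': 2}
```

In a real map-reduce deployment the framework would shuffle the emitted pairs by key between the two phases; here a dictionary plays that role.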

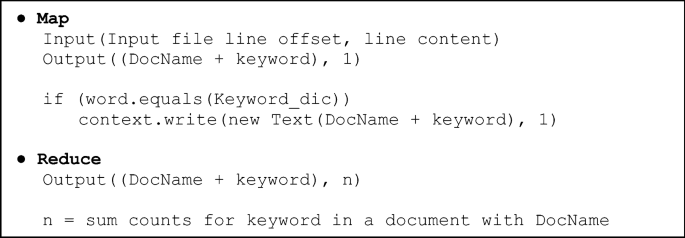

Word frequency

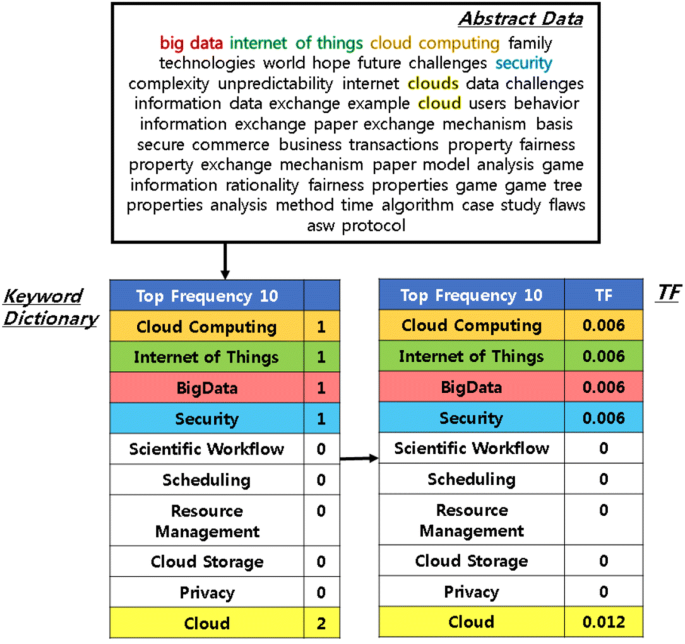

The TF calculation step in Fig. 1 counts how many times the keywords defined in the keyword dictionary and the topics extracted by LDA appear in the abstract data. The TF used in this paper is defined as

\[ tf_{i,j} = \frac{n_{i,j}}{\sum\limits_{k} n_{k,j}}, \quad i = 1, \ldots, K, \; j = 1, \ldots, D \tag{2} \]

where \(n_{i,j}\) represents the number of occurrences of word \(t_{i}\) in document \(d_{j}\) and \(\sum\limits_{k} {n_{k,j} }\) represents the total number of occurrences of words in document \(d_{j}\) . K and D are the number of keywords and documents (i.e., papers), respectively.

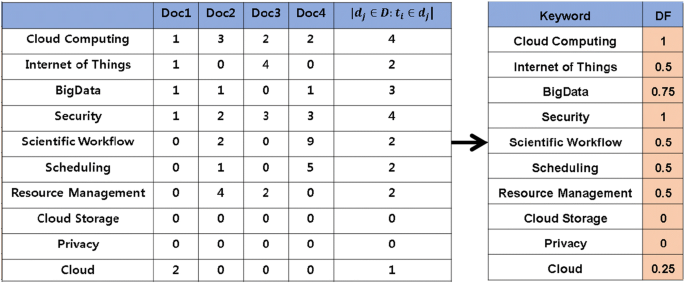

Figure 4 illustrates the TF calculation for the 10 most frequent keywords. The abstract in this figure has a paper length of 64. As the figure shows, the keywords ‘cloud computing’, ‘Internet of Things’, and ‘Big Data’ have a TF value of 0.015 because each occurs once among the 64 words. The keyword ‘cloud computing’ has a TF value of 0.03 because of two occurrences. Figure 5 shows the map-reduce algorithm used to calculate word frequency (i.e., TF). In this figure, n represents the number of occurrences of a keyword in a document with a paper title of DocName .

Fig. 4 An illustrative example of TF calculation

Fig. 5 Map-reduce algorithm for the calculation of word frequency
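The TF definition can be checked with a short Python sketch that divides the number of keyword occurrences by the paper length. The helper names `count_keyword` and `term_frequencies` and the sample abstract are hypothetical; multi-word keywords are matched as consecutive tokens, which is an assumption about how such keywords are counted.

```python
def count_keyword(tokens, keyword):
    """Count occurrences of a (possibly multi-word) keyword in a token list."""
    parts = keyword.lower().split()
    width = len(parts)
    return sum(
        1
        for start in range(len(tokens) - width + 1)
        if tokens[start:start + width] == parts
    )


def term_frequencies(abstract, keywords):
    """Return keyword -> occurrences / paper length for one abstract."""
    tokens = abstract.lower().split()
    length = len(tokens)  # paper length: number of whitespace-separated words
    if length == 0:
        return {keyword: 0.0 for keyword in keywords}
    return {keyword: count_keyword(tokens, keyword) / length for keyword in keywords}


abstract = "cloud computing meets big data in cloud computing systems"
tf = term_frequencies(abstract, ["cloud computing", "big data", "iot"])
print(tf)

# With a paper length of 64, one and two occurrences give the values in the text.
print(1 / 64, 2 / 64)  # 0.015625 0.03125
```

The sample abstract has nine words, so ‘cloud computing’ (two occurrences) receives 2/9 and ‘big data’ (one occurrence) receives 1/9.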

Document frequency

While the TF measures the number of occurrences of each keyword in a single document, the DF measures in how many documents of the collection each keyword appears. In the DF calculation step in Fig. 1 , the DF is calculated by dividing the number of documents that contain a specific keyword by the total number of documents. It is defined as

\[ df_{i} = \frac{\left| \{ d_{j} \in D : t_{i} \in d_{j} \} \right|}{\left| D \right|} \tag{3} \]

where \(\left| D \right|\) represents the total number of documents and \(\left| \{ d_{j} \in D : t_{i} \in d_{j} \} \right|\) represents the number of documents in which keyword \(t_{i}\) occurs. Figure 6 shows an illustrative example in which four documents are used to calculate the DF value.

Fig. 6 An illustrative example of DF calculation

Figure 7 shows the map-reduce algorithm used to calculate the DF of each keyword.

Fig. 7 Map-reduce algorithm for the calculation of document frequency

Keywords with a high DF value carry little importance because they appear in most documents. Accordingly, the IDF, the inverse of the DF, is used to measure the importance of keywords in the collection of documents. The IDF is defined as

\[ idf_{i} = \log \frac{\left| D \right|}{\left| \{ d_{j} \in D : t_{i} \in d_{j} \} \right|} \tag{4} \]

Using Eqs. ( 2 ) and ( 4 ), the TF-IDF is defined as

\[ tfidf_{i,j} = tf_{i,j} \times idf_{i} \tag{5} \]

The TF-IDF value increases when a specific keyword has a high frequency in a document and the number of documents in the collection that contain the keyword is low. This principle can be used to find the keywords that characterize a document, namely those that occur frequently in it but rarely elsewhere. Consequently, using the TF-IDF calculated by Eq. ( 5 ), we can find out which keywords are important in each paper.

Figure 8 shows the map-reduce algorithm for the TF-IDF calculation of each paper.

Fig. 8 Map-reduce algorithm for TF-IDF calculation
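To make the interplay of TF, DF, and IDF concrete, the following sketch computes TF-IDF over four toy documents. It assumes the common logarithmic form of the IDF, log(|D| / number of documents containing the keyword); the function names and the documents are hypothetical, and each document is represented simply as a list of keyword tokens.

```python
import math


def document_frequency(documents, keyword):
    """Number of documents that contain the keyword at least once."""
    return sum(1 for doc in documents if keyword in doc)


def inverse_document_frequency(documents, keyword):
    """Logarithmic IDF (assumed form): log(|D| / document frequency)."""
    df = document_frequency(documents, keyword)
    if df == 0:
        return 0.0  # assumption: a keyword that never occurs carries no weight
    return math.log(len(documents) / df)


def tf_idf(documents, doc_index, keyword):
    """TF of the keyword in one document multiplied by its IDF over all documents."""
    doc = documents[doc_index]
    tf = doc.count(keyword) / len(doc)
    return tf * inverse_document_frequency(documents, keyword)


documents = [
    ["cloud", "scheduling", "cloud"],
    ["cloud", "iot"],
    ["cloud", "security"],
    ["cloud", "iot", "privacy"],
]

# 'cloud' appears in every document, so its IDF and TF-IDF are zero.
print(tf_idf(documents, 0, "cloud"))
# 'scheduling' appears in only one document, so it receives a positive weight.
print(tf_idf(documents, 0, "scheduling"))
```

Although ‘cloud’ is the most frequent word in the first document, it does not help to distinguish that document from the others, whereas ‘scheduling’ does; this is the behaviour described in the paragraph above.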

K-means clustering

Clustering is typically used to partition a set of data into classes of similar items. It has been applied in many fields, such as marketing, biology, pattern recognition, web mining, and social network analysis [ 33 ]. Among the various clustering techniques, we choose the k-means clustering algorithm, an unsupervised learning algorithm, because of its effectiveness and simplicity. Specifically, the algorithm partitions a data set of N items into k disjoint subsets based on their features, by minimizing the distance between each data item and the centroid of its cluster.

Mathematically, the k-means clustering algorithm can be described as follows:

\[ E = \sum\limits_{i = 1}^{k} \sum\limits_{x_{j} \in C_{i}} \left\| x_{j} - c_{i} \right\|^{2} \tag{6} \]

where k is the number of clusters, \(x_{j}\) is the j th data point in the i th cluster \(C_{i}\) , and \(c_{i}\) is the centroid of \(C_{i}\) . The notation \(\left\| {x_{j} - c_{i} } \right\|^{2}\) stands for the squared distance between \(x_{j}\) and \(c_{i}\) , and Euclidean distance is commonly used as the distance measure. To achieve a representative clustering, the sum of squared error function, E , should be as small as possible.
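A compact way to see how the sum of squared errors E is minimized is Lloyd's algorithm, sketched below in plain Python. This is an illustrative implementation, not the Scikit-learn routine used in the experiments; the function names are hypothetical, and the initial centroids are passed in explicitly (an assumption made so that the example is deterministic).

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(points, initial_centroids, max_iter=100):
    """Lloyd's algorithm: alternate assignment and centroid update until stable."""
    centroids = [list(c) for c in initial_centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster with the nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda i: squared_distance(p, centroids[i]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = []
        for i, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(old)  # keep the centroid of an empty cluster
        if new_centroids == centroids:
            break
        centroids = new_centroids
    # E: sum of squared distances between each point and its cluster centroid.
    sse = sum(squared_distance(p, centroids[label]) for p, label in zip(points, labels))
    return labels, centroids, sse


points = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
labels, centroids, sse = k_means(points, [[0.0, 0.0], [5.0, 5.0]])
print(labels, centroids, sse)  # [0, 0, 1, 1] [[0.0, 0.5], [5.0, 5.5]] 1.0
```

In the paper's setting each point would be the vector of TF-IDF values of one paper, so papers with similar keyword weights end up near the same centroid.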

According to [ 34 ], the advantages of the K-means clustering algorithm are (1) dealing with different types of attributes; (2) discovering clusters with arbitrary shape; (3) minimal requirements for domain knowledge to determine input parameters; (4) dealing with noise and outliers; and (5) minimizing the dissimilarity between data.

The TF-IDF value represents the importance of the keywords that determine the characteristics of each paper. Thus, classifying papers by TF-IDF value leads to groups of papers with similar subjects according to the importance of their keywords. For this reason, this paper uses the K-means clustering algorithm, one of the most widely used clustering algorithms, to group papers with similar subjects. The K-means clustering algorithm used in this paper calculates the center of each cluster, which represents a group of papers with a specific subject, and allocates each paper to the cluster with the highest similarity, based on the Euclidean distance between the TF-IDF values of the paper and the center of each cluster.

The K-means clustering algorithm is computationally fast compared with many other clustering algorithms. However, it produces different clustering results for different numbers of clusters, so the number of clusters (i.e., the K value) must be determined before clustering. To address this limitation, we use the Elbow scheme [ 35 ], which can find a proper number of clusters. We also use the Silhouette scheme [ 36 , 37 ] to validate the clustering results produced by the K-means algorithm. The two schemes are described in detail in the next section together with the performance evaluation.

Experiments

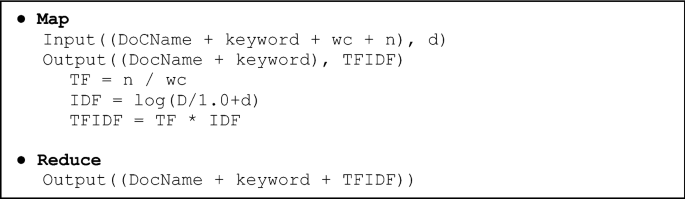

Experimental environment

The paper classification system proposed in this paper is based on HDFS to manage and process massive paper data. Specifically, we build a Hadoop cluster composed of one master node, one sub node, and four data nodes. The TF-IDF calculation module is implemented in Java on Hadoop 2.6.5. We also implemented the LDA calculation module using Spark MLlib in Python. The K-means clustering algorithm is implemented using the Scikit-learn library [ 38 ].

As experimental data, we use the actual papers published in the Future Generation Computer Systems (FGCS) journal [ 13 ] from 1984 to 2017. The titles, abstracts, and keywords of a total of 3264 papers are used as core data for paper classification. Figure 9 shows the overall system architecture of our paper classification system.

Fig. 9 Overall system architecture for our paper classification system

The keyword dictionaries used for performance evaluation in this paper are constructed with the three methods shown in Table 3 . Each constructed keyword dictionary is evaluated with the Elbow and Silhouette schemes to compare and analyze the performance of the proposed system.

Experimental results

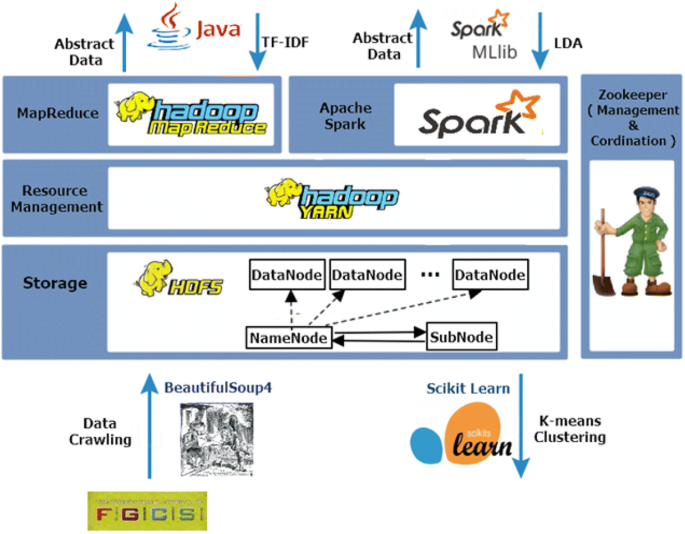

Applying Elbow scheme

When using the K-means clustering algorithm, users must determine the number of clusters before the clustering of a dataset is executed. One method for determining the number of clusters is the Elbow scheme [ 35 ]. We apply the Elbow scheme to find an optimal number of clusters, varying the number of clusters from 2 to 100.

Table 4 shows the number of clusters obtained by Elbow scheme for the three methods shown in Table 3 .

As the results in Table 4 show, the number of clusters grows as the number of keywords increases. This is natural, because a larger number of keywords results in a more elaborate clustering. However, comparing the three methods, we can see that Method 3 has a lower number of clusters than the other two methods. This is because Method 3 combines the complementary advantages of the other two methods when it groups papers with similar subjects. Method 1 depends on the keywords input by users, and it cannot be guaranteed that these keywords are always suitable for grouping papers with similar subjects, because users can register incorrect keywords for their own papers. Method 2 makes up for this disadvantage of Method 1 by using the topics automatically extracted by the LDA scheme. Figure 10 shows the elbow graph when Method 3 is used. In this figure, the arrow marks the optimal number of clusters calculated by the Elbow scheme.

Fig. 10 Elbow graph for Method 3
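The elbow of such a curve can also be located programmatically. The sketch below uses one common heuristic, which is an assumption here and not necessarily the procedure of [ 35 ]: it picks the point of the (k, SSE) curve that lies farthest from the straight line joining the first and last points. The function name `elbow_k` and the SSE values are hypothetical.

```python
def elbow_k(ks, sse):
    """Return the k whose (k, SSE) point is farthest from the chord of the curve."""
    x1, y1 = ks[0], sse[0]
    x2, y2 = ks[-1], sse[-1]

    def distance_to_chord(x, y):
        # Perpendicular distance to the line through the endpoints, up to a
        # constant factor that does not affect which point is the maximum.
        return abs((y2 - y1) * x - (x2 - x1) * y + x2 * y1 - y2 * x1)

    best = max(range(len(ks)), key=lambda i: distance_to_chord(ks[i], sse[i]))
    return ks[best]


# Hypothetical SSE values: large gains up to k = 3, small gains afterwards.
ks = [1, 2, 3, 4, 5, 6]
sse = [100.0, 60.0, 30.0, 25.0, 22.0, 20.0]
print(elbow_k(ks, sse))  # 3
```

Because the two axes have different scales, the result of this heuristic can shift if the values are normalized first, so it is best read alongside the plotted curve rather than in place of it.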

Applying Silhouette scheme

The silhouette scheme is one of several methods for evaluating the performance of clustering [ 36 , 37 ]. The silhouette value becomes higher as data points within the same cluster lie closer together and as data points in different clusters lie farther apart. A silhouette value ranges from − 1 to 1, where a high value indicates that data are well matched to their own cluster and poorly matched to neighboring clusters. Generally, a silhouette value greater than 0.5 means that the clustering results are valid [ 36 , 37 ].
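For reference, the silhouette value of a single point is commonly computed as s = (b - a) / max(a, b), where a is the mean distance to the other points of its own cluster and b is the smallest mean distance to the points of any other cluster. The plain-Python sketch below follows that standard definition; the function names and sample data are hypothetical, and at least two clusters are assumed.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def silhouette_values(points, labels):
    """Per-point silhouette s = (b - a) / max(a, b); requires two or more clusters."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)

    values = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            values.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the point's own cluster
        # (the distance of the point to itself contributes zero to the sum).
        a = sum(euclidean(point, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(euclidean(point, q) for q in other) / len(other)
            for other_label, other in clusters.items()
            if other_label != label
        )
        denominator = max(a, b)
        values.append((b - a) / denominator if denominator else 0.0)
    return values


points = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
labels = [0, 0, 1, 1]
values = silhouette_values(points, labels)
average = sum(values) / len(values)
print(values, average)
```

For these two well-separated clusters every value exceeds 0.5, so the clustering would count as valid under the threshold mentioned above.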

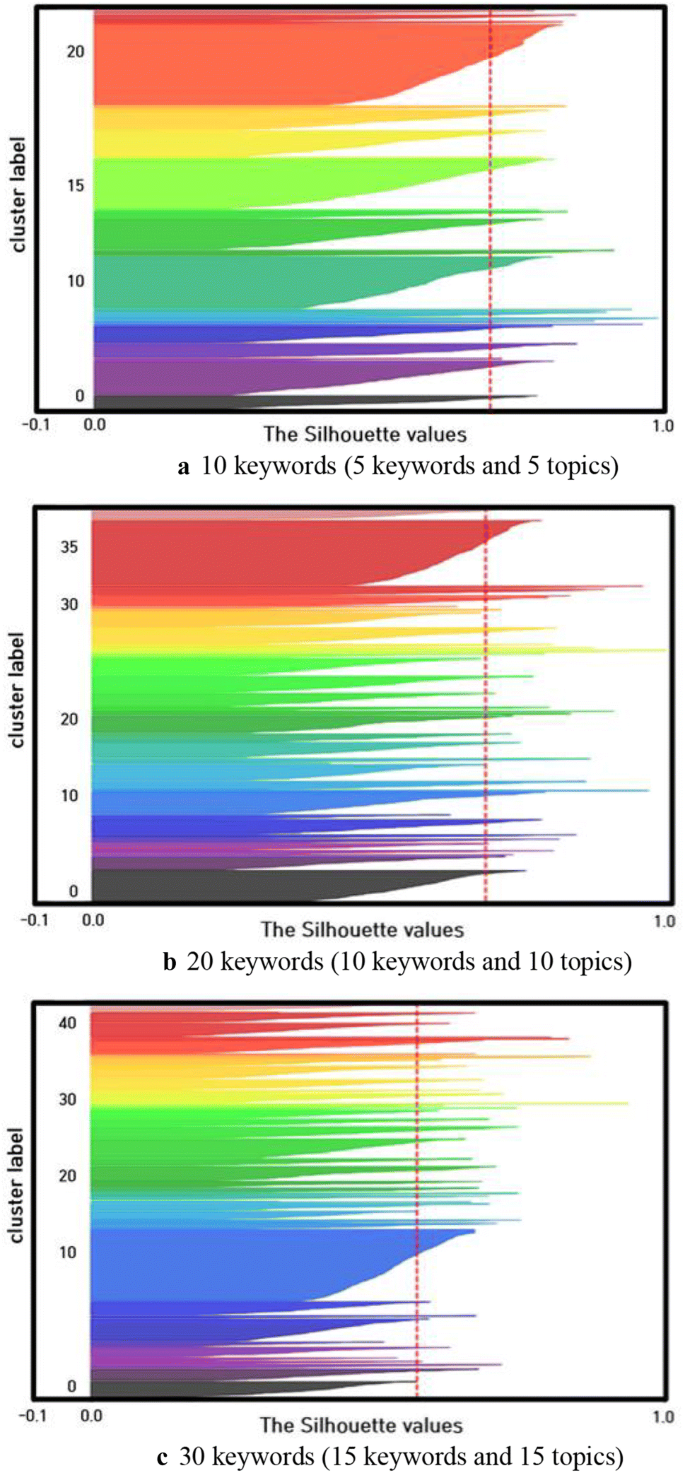

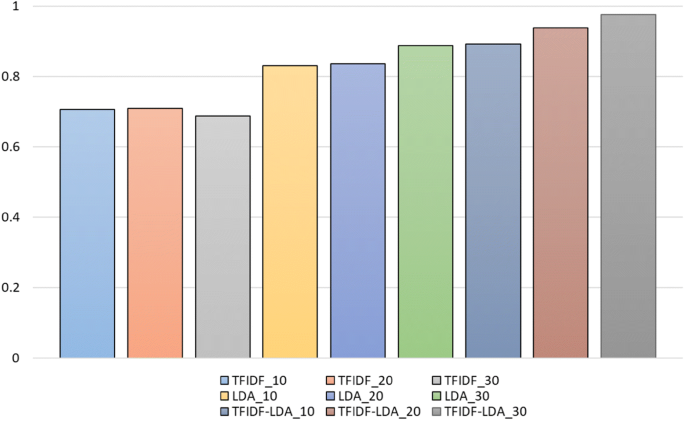

Table 5 shows the average silhouette value for each of the three methods shown in Table 3 . The results show that the K-means clustering algorithm used in this paper produces good clustering when 10 and 30 keywords are used. It should be noted that silhouette values greater than 0.5 represent valid clustering. Figure 11 shows the silhouette graphs for 10, 20, and 30 keywords when Method 3 is used. In this figure, the dashed line represents the average silhouette value. We omit the remaining silhouette graphs due to space limitations.

Fig. 11 Silhouette graph for Method 3

Analysis of classification results

Table 6 shows an illustrative example of the classification results. In this table, the papers in cluster 1 are grouped by the two primary keywords ‘cloud’ and ‘bigdata’. For cluster 2, the two keywords ‘IoT’ and ‘privacy’ play an important role in grouping the papers. For cluster 3, the three keywords ‘IoT’, ‘security’, and ‘privacy’ play an important role. In particular, the papers in cluster 2 and cluster 3 are separated according to whether or not the keyword ‘security’ is used.

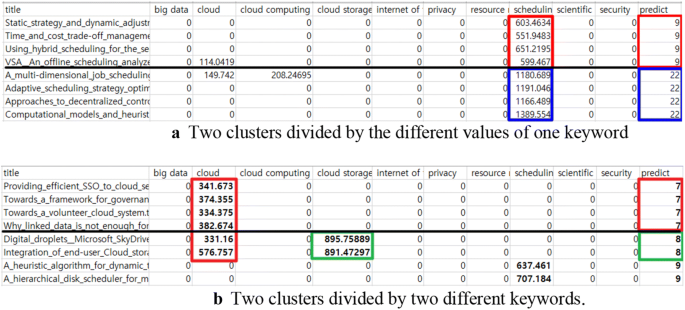

Figure 12 shows the TF-IDF values and clustering results for some papers. In this figure, ‘predict’ is the number of the cluster that contains the paper whose title is given in the first column. In Fig. 12 a, all papers have the same keyword ‘scheduling’, but they are divided into two clusters according to the TF-IDF value of that keyword. Figure 12 b shows that all papers have the same keyword ‘cloud’, but they are grouped into different clusters (cluster 7 and cluster 8) according to whether or not they have a TF-IDF value for the keyword ‘cloud storage’.

Fig. 12 Illustrative examples of clustering results

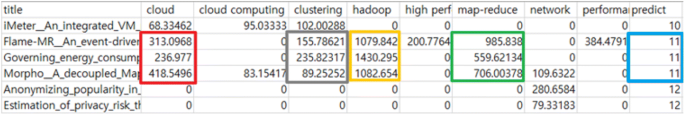

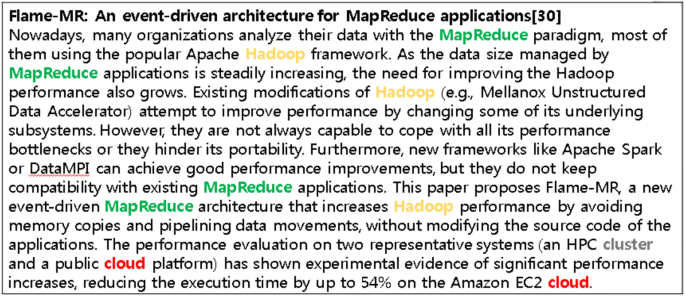

Figure 13 shows an analysis of papers belonging to the same cluster. In this figure, three papers in cluster 11 share four primary keywords: ‘cloud’, ‘clustering’, ‘hadoop’, and ‘map-reduce’. The papers are therefore characterized by these four common keywords.

Fig. 13 Clustering results by common keywords

Figures 14 and 15 show the abstracts of the first and second papers among those shown in Fig. 13 , respectively. From these figures, we can see that the four keywords (‘cloud’, ‘clustering’, ‘hadoop’, and ‘map-reduce’) indeed appear in the abstracts of the two papers.

Fig. 14 An abstract example for [ 39 ]

Fig. 15 An abstract example for [ 40 ]

Evaluation on the accuracy of the proposed classification system

The accuracy of the proposed classification system was evaluated using the well-known F-Score [ 41 ], which measures how good the paper classification is when compared with a reference classification. The F-Score combines the precision and recall values used in information extraction. The precision, recall, and F-Score are defined as follows.

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

In the above equations, TP, TN, FP, and FN represent true positive, true negative, false positive, and false negative, respectively. We carried out our experiments on 500 research papers randomly selected from the total of 3264 used for our experiments. The experiment was run five times, and the average of the F-Score values was recorded.
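The evaluation procedure can be sketched as follows, using the standard definitions of precision, recall, and F-Score in terms of TP, FP, and FN. The function name and the per-run counts are hypothetical and are not the paper's data; they only illustrate averaging the F-Score over five runs.

```python
def precision_recall_f(tp, fp, fn):
    """Return (precision, recall, F-Score) from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


# Hypothetical (TP, FP, FN) counts for five runs; not taken from the paper.
runs = [(80, 20, 20), (78, 22, 19), (82, 18, 21), (79, 21, 20), (81, 19, 18)]
f_scores = [precision_recall_f(tp, fp, fn)[2] for tp, fp, fn in runs]
mean_f = sum(f_scores) / len(f_scores)
print(round(mean_f, 3))
```

Note that TN does not enter any of the three measures, which is why the sketch takes only TP, FP, and FN.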

Figure 16 shows the F-Score values of the three methods to construct keyword dictionaries shown in Table 3 .

F-score values of three methods (TF-IDF, LDA, TF-IDF + LDA)

As Fig. 16 shows, the F-Score of Method 3 (the combination of TF-IDF and LDA) is higher than that of the other two methods. The main reason is that Method 3 combines the complementary strengths of the other two. On its own, TF-IDF extracts only the keywords that occur frequently in research papers, and LDA extracts only the topics that are latent in them. Combining TF-IDF and LDA leads to a more detailed classification of research papers because frequently occurring keywords and the correlations between latent topics are used simultaneously to classify the papers.

We presented a paper classification system that supports efficient paper classification, which is essential for providing users with fast and efficient search for the papers they want. The proposed system incorporates the TF-IDF and LDA schemes to calculate the importance of each paper and groups papers with similar subjects using the K-means clustering algorithm. It can thereby produce correct classification results for the papers that users are interested in. To demonstrate the performance of the proposed system, we used actual data based on papers published in the FGCS journal. The experimental results showed that the proposed system can classify papers with similar subjects according to the keywords extracted from their abstracts. In particular, when the keyword dictionary contained both the keywords extracted from the abstracts and the topics extracted by the LDA scheme, our classification system achieved better clustering performance and higher F-Score values. Our classification system can therefore classify research papers in advance by both keywords and topics with the support of high-performance computing techniques, and the classified papers can then be used to search quickly and efficiently for papers within users’ research areas of interest.

This work has been mainly focused on developing and analyzing research paper classification. To be a generic approach, the work needs to be expanded into various types of datasets, e.g. documents, tweets, and so on. Therefore, future work involves working upon various types of datasets in the field of text mining, as well as developing even more efficient classifiers for research paper datasets.

Availability of data and materials

Not applicable.

Bafna P, Pramod D, Vaidya A (2016) Document clustering: TF-IDF approach. In: IEEE int. conf. on electrical, electronics, and optimization techniques (ICEEOT). pp 61–66

Ramos J (2003) Using TF-IDF to determine word relevance in document queries. In: Proc. of the first int. conf. on machine learning

Havrlant L, Kreinovich V (2017) A simple probabilistic explanation of term frequency-inverse document frequency (TF-IDF) heuristic (and variations motivated by this explanation). Int J Gen Syst 46(1):27–36

Trstenjak B, Mikac S, Donko D (2014) KNN with TF-IDF based framework for text categorization. Procedia Eng 69:1356–1364

Yau C-K et al (2014) Clustering scientific documents with topic modeling. Scientometrics 100(3):767–786

Balabantaray RC, Sarma C, Jha M (2013) Document clustering using K-means and K-medoids. Int J Knowl Based Comput Syst 1(1):7–13.

Gupta H, Srivastava R (2014) K-means based document clustering with automatic “K” selection and cluster refinement. Int J Comput Sci Mob Appl 2(5):7–13

Gurusamy R, Subramaniam V (2017) A machine learning approach for MRI brain tumor classification. Comput Mater Continua 53(2):91–108

Nagwani NK (2015) Summarizing large text collection using topic modeling and clustering based on MapReduce framework. J Big Data 2(1):1–18

Kim J-J (2017) Hadoop based wavelet histogram for big data in cloud. J Inf Process Syst 13(4):668–676

Dean J, Ghemawat S (2008) MapReduce: simplified data processing on large clusters. Commun ACM 51(1):107–113

Cho W, Choi E (2017) DTG big data analysis for fuel consumption estimation. J Inf Process Syst 13(2):285–304

FGCS Journal. https://www.journals.elsevier.com/future-generation-computer-systems . Accessed 15 Aug 2018.

Gui Y, Gao G, Li R, Yang X (2012) Hierarchical text classification for news articles based-on named entities. In: Proc. of int. conf. on advanced data mining and applications. pp 318–329

Singh J, Singh G, Singh R (2017) Optimization of sentiment analysis using machine learning classifiers. Hum-cent Comput Inf Sci 7:32

Mahendran A et al (2013) “Opinion Mining for text classification,” Int. J Sci Eng Technol 2(6):589–594

Alsmadi I, Alhami I (2015) Clustering and classification of email contents. J King Saud Univ Comput Inf Sci. 27(1):46–57

Rossi RG, Lopes AA, Rezende SO (2016) Optimization and label propagation in bipartite heterogeneous networks to improve transductive classification of texts. Inf Process Manag 52(2):217–257

Barigou F (2018) Impact of instance selection on kNN-based text categorization. J Inf Process Syst 14(2):418–434

Baker K, Bhandari A, Thotakura R (2009) An interactive automatic document classification prototype. In: Proc. of the third workshop on human-computer interaction and information retrieval. pp 30–33

Xuan J et al. (2017) Automatic bug triage using semi-supervised text classification. arXiv preprint arXiv:1704.04769

Aggarwal CC, Zhai CX (2012) A survey of text classification algorithms. In: Mining text data, Springer, Berlin, pp 163–222

Duda RO, Hart PE, Stork DG (2012) Pattern classification. Wiley, Hoboken

Bravo-Alcobendas D, Sorzano COS (2009) Clustering of biomedical scientific papers. In: 2009 IEEE Int. symp. on intelligent signal processing. pp 205–209

Taheriyan M (2011) Subject classification of research papers based on interrelationships analysis. In: ACM proc. of the 2011 workshop on knowledge discovery, modeling and simulation. pp 39–44

Hanyurwimfura D, Bo L, Njagi D, Dukuzumuremyi JP (2014) A centroid and Relationship based clustering for organizing research papers. Int J Multimed Ubiquitous Eng 9(3):219–234

Nanba H, Kando N, Okumura M (2011) Classification of research papers using citation links and citation types: towards automatic review article generation. Adv Classif Res Online 11(1):117–134

Mohsen T (2011) Subject classification of research papers based on interrelationships analysis. In: Proceeding of the 2011 workshop on knowledge discovery, modeling and simulation. pp 39–44

Nguyen TH, Shirai K (2013) Text classification of technical papers based on text segmentation. In: Int. conf. on application of natural language to information systems. pp 278–284

Gurung P, Wagh R (2017) A study on topic identification using K means clustering algorithm: big vs. small documents. Adv Comput Sci Technol 10(2):221–233

Blei DM, Ng AY, Jordan MI (2003) Latent Dirichlet allocation. J Mach Learn Res 3:993–1022

Jiang Y, Jia A, Feng Y, Zhao D (2012) Recommending academic papers via users’ reading purposes. In: Proc. of the sixth ACM conf. on recommender systems. pp 241–244

Xu R, Wunsch D (2008) Clustering. Wiley, Hoboken

Gan G, Ma C, Wu J (2007) Data clustering: theory, algorithms, and applications. SIAM, Alexandria

Kodinariya TM, Makwana PR (2013) Review on determining number of cluster in K-means clustering. Int J Adv Res Comput Sci Manag Stud 1(6):90–95

Oliveira GV et al (2017) Improving K-means through distributed scalable metaheuristics. Neurocomputing 246:45–57

Rousseeuw PJ (1987) Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. J Comput Appl Math 20:53–65

Scikit-Learn. http://scikit-learn.org/stable/modules/classes.html . Accessed 15 Aug 2018.

Veiga J, Exposito RR, Taboada GL, Tounno J (2016) Flame-MR: an event-driven architecture for MapReduce applications. Future Gener Comput Syst 65:46–56

Ibrahim S, Phan T-D, Carpen-Amarie A, Chihoub H-E, Moise D, Antoniu G (2016) Governing energy consumption in Hadoop through CPU frequency scaling: an analysis. Future Gener Comput Syst 54:219–232

Visentini I, Snidaro L, Foresti GL (2016) Diversity-aware classifier ensemble selection via F-score. Inf Fus 28:24–43

Acknowledgements

This work was supported by research grants from Daegu Catholic University in 2017.

Author information

Authors and affiliations.

Department of Police Administration, Daegu Catholic University, 13-13 Hayang-ro, Hayang-eup, Gyeongsan, Gyeongbuk, 38430, South Korea

Sang-Woon Kim

School of Information Technology Eng., Daegu Catholic University, 13-13 Hayang-ro, Hayang-eup, Gyeongsan, Gyeongbuk, 38430, South Korea

Joon-Min Gil

Contributions

SWK proposed a main idea for keyword analysis and edited the manuscript. JMG was a major contributor in writing the manuscript and carried out the performance experiments. Both authors read and approved the final manuscript.

Corresponding author

Correspondence to Joon-Min Gil .

Ethics declarations

Competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article.

Kim, SW., Gil, JM. Research paper classification systems based on TF-IDF and LDA schemes. Hum. Cent. Comput. Inf. Sci. 9 , 30 (2019). https://doi.org/10.1186/s13673-019-0192-7

Received : 03 December 2018

Accepted : 12 August 2019

Published : 26 August 2019

DOI : https://doi.org/10.1186/s13673-019-0192-7

- Paper classification

Article-level classification of scientific publications: A comparison of deep learning, direct citation and bibliographic coupling

Maxime Rivest

1 Science-Metrix Inc., Montréal, Québec, Canada

2 Elsevier B.V., Amsterdam, Netherlands

Etienne Vignola-Gagné

Éric Archambault

3 1science, Montréal, Québec, Canada

Associated Data

The data underlying the results presented in the study are available, for scholarly research, from Elsevier BV on the ICSR Lab ( https://www.elsevier.com/icsr/icsrlab ). ICSR Lab is intended for scholarly research only and is a cloud-based computational platform which enables researchers to analyze large structured datasets, including those that power Elsevier solutions such as Scopus and PlumX. All other relevant data are within the manuscript and its Supporting information files.

Classification schemes for scientific activity and publications underpin a large swath of research evaluation practices at the organizational, governmental, and national levels. Several research classifications are currently in use, and they require continuous work as new classification techniques become available and as new research topics emerge. Convolutional neural networks, a subset of “deep learning” approaches, have recently offered novel and highly performant methods for classifying voluminous corpora of text. This article benchmarks a deep learning classification technique on more than 40 million scientific articles and on tens of thousands of scholarly journals. The comparison is performed against bibliographic coupling-, direct citation-, and manual-based classifications, the established and most widely used approaches in the field of bibliometrics and, by extension, in many science and innovation policy activities such as grant competition management. The results reveal that the performance of this first iteration of a deep learning approach is equivalent to the graph-based bibliometric approaches. All methods presented are also on par with manual classification. Somewhat surprisingly, no machine learning approaches were found to clearly outperform the simple label propagation approach that is direct citation. In conclusion, deep learning is promising because it performed just as well as the other approaches but has more flexibility to be further improved. For example, a deep neural network incorporating information from the citation network is likely to hold the key to an even better classification algorithm.

Introduction

Bibliographic and bibliometric classifications of research activities and publications have a subtle but pervasive influence on research policy and on performance assessments of all kinds [ 1 , 2 ]. In bibliometric assessments, assigning a research group’s or institution’s output to one field of research rather than another may drastically alter the results of its evaluation. The classification scheme and the design choices extend to the selection of reference groups and benchmark levels used for normalizations and comparisons. Briefly put, nearly all investigators and scholars whose research performance is evaluated with bibliometric indicators, or who have even a passing interest in the citation impact of their written works, are affected by design choices in some of the core classificatory systems of science that are commonly used in research evaluation. This explains why the development of relevant and precise classification systems carries so much weight.

Classifying documents is as old as libraries themselves. For instance, in the great Library of Alexandria, Callimachus classified work in tables called “Pinakes”, which contained the following subjects: rhetoric, law, epic, tragedy, comedy, lyric poetry, history, medicine, mathematics, natural science, and miscellanea [ 3 ]. Classifications are rarely consensual and are typically criticized shortly after inception, and this may have started as early as Aristophanes’s “pugnacious” criticism of Callimachus’s Pinakes [ 4 ].

A classification aims at grouping similar documents under a common class. Documents can share commonalities on various dimensions such as language, field, and so forth. The multiple dimensions of knowledge are pervasive in all characterization of research. As a consequence, classifications are required at various scales (such as at the journal level and the article level), and there are also various types of classes that can be used simultaneously to characterize research activities and publications (e.g., to reflect the organizational structure of universities or that of industry). For example, disciplines and faculties found in academia categorize themselves to reflect their topic of interest, as does the Scopus All Science Journal Classification. In contrast, the US National Library of Medicine’s Medical Subject Headings thesaurus aims instead to reflect a heterogeneous mix of subjects and experimental methods.

All classifications of intellectual work present boundary challenges to various degrees (i.e., establishing clearly what is counted in and what is not). Classifying research documents such as journals and articles does not evade these challenges. In research, new knowledge is continuously created, and newer knowledge does not always fit snugly into pre-existing classes [ 5 ]. These characteristic challenges of classifying activities are true at all scales, even though some authors argue they are more particularly problematic at the journal level [ 6 , 7 ]. The “fractal” nature of classification challenges means that scientific journals could frequently be classified in more than one class, but this is equally true of scientific articles. This gives rise to controversies over using mutually exclusive classification schemes, which make reporting more convenient and clearer, and multiple-class assignments, which are ontologically more robust.

One reason to use classifications in science studies and in research assessment is to capture, interpret, and discuss changes in research practices. Some level of abstraction and aggregation is useful to retrieve the higher-level trends and patterns that are most often the object of analyses conducted by institutions, government agencies, and all manner of commentators. For bibliometric evaluation, one of the main reasons to classify scientific work and literature is to normalize bibliometric indices. This is necessary because of discipline-specific authorship and citation practices, as well as variations in citation patterns over time [ 8 ].

A defining aspect of classification systems, particularly those used in research evaluation, is the level of aggregation of the classification, such as at the journal or the article level, or at the conference or the presentation level. The classification of scientific work at the journal and article levels has been extensively studied [ 9 – 12 ]. Journal-level classifications often recapitulate historical disciplinary conventions and nomenclatures, making their use more intuitive for certain audiences, including research administrators, policymakers, and funders. They are certainly useful for journal publishers who need to categorize their journals and to present them on their websites in a compact manner, and they can also be used to specify the field of activity of authors [ 13 ].

Though there are many cases where journal-level classifications are useful, there are many cases where classifying articles is preferable and more precise. For instance, it is often useful in bibliometrics to individually classify each article in multidisciplinary/general journals such as PLOS ONE , Nature , or Science . Moreover, there are articles published in, for example, an oncology journal whose core topic could be more relevant to surgery. As a result, journal-level classifications are not tremendously precise compared to those at the article level [ 6 , 8 , 10 , 11 ]. Without negating the need for journal-level classifications for many use cases, there are therefore several reasons to prefer an article-based classification to a journal-based one in a host of research evaluation contexts.

Knowledge is evolving extremely rapidly, and this creates notable classification problems at both the journal and the article levels. For instance, the 1findr [ 14 ] database indexes the content of close to 100,000 active and inactive refereed scholarly journals, whereas the Scopus database [ 15 ] presents a more selective view of a similar corpus by selecting journals that are highly regarded by peers and/or that are the most highly cited in their fields ( Fig 1 ). In both databases, the doubling period is approximately 17 years—meaning these indexes contain as many articles in the last 17 years as during all years prior. This rapid growth of scientific publications creates a huge strain on classification needs and not only because of the large number of articles that need to be classified every day. Furthermore, because of the evolving nature of scientific knowledge, new classes need to be added to classification schemes, which sometimes require overhauling the whole classification scheme and reclassifying thousands of journals and millions of articles. Performing this classification work manually is prohibitively expensive and time-consuming. This means that precise computational methods of classification are sorely needed.

Although articles have been manually classified by librarians for decades if not centuries, computational classification is a comparatively recent development given the requirement for processing capacity and large-scale capture and characterization of scientific publications [ 6 ]. Ongoing investigations carried out since the 1970s have resulted in the creation of a toolbox of classificatory approaches, each supported by varying bases of evidence, and from which investigators must pick and choose a technique based on their object’s features. Article-level computational classification techniques are mostly based on clustering algorithms and use a bottom-up approach, requiring relatively involved follow-up work to link the clusters obtained to categories that are intelligible to potential users. Determination of optimal levels of cluster aggregation is an ongoing development [ 11 , 12 , 16 , 17 ]. Computational classification techniques have also benefited from advances in network analysis and text mining [ 18 – 21 ].

There is a plethora of methods developed and routinely used in the computer science fields of natural language processing and, more recently, machine learning and artificial intelligence (AI). These methods could advantageously be used in bibliometrics. More specifically, deep learning has recently been found to be extremely performant in finding patterns in noisy data, providing the network can be trained on a large enough volume of data. Yet, despite all the work that has been conducted to date on computational classification methods, bibliometricians have yet to make sustained use of AI, and in particular deep learning methods, in their classificatory and clustering practices.

The BioASQ challenge is a notable example of the recent application of deep learning to a bibliometrics-related task [ 22 ], but BioASQ researchers and bibliometricians have so far explored the topic in parallel. Since 2012, the BioASQ challenge has aimed to promote methodologies and systems for large-scale biomedical semantic indexing by hosting a competition in which teams try to automatically assign PubMed MeSH terms to scholarly publications [ 23 ]. Perhaps surprisingly, none of the 9182 articles published in journals with (scientomet* OR informetri* OR bibliometri*) in their name mentions BioASQ in its title, abstract, or keywords, and only one of those 9182 publications cites a BioASQ-related publication (according to this search on Scopus: “SRCTITLE (scientomet* OR informetri* OR bibliometri*) AND REF (bioasq)”). This latter publication is not focused on the subject of text classification.

As just mentioned, most solutions to the BioASQ challenge now use techniques related to deep neural networks. Several were based on BERT and BioBERT [ 22 ]. In this paper, we chose to explore another type of deep neural network (namely, a character-based neural network) because we felt that our task was different from the BioASQ task and less suited to those architectures. For example, the granularity of the task differs by orders of magnitude: we are classifying into 174 subfields instead of approximately 27,000 MeSH terms. Furthermore, our training set is extremely noisy (56% accuracy). This noise may seem like a flaw in the experimental design, but it is an integral part of the task facing bibliometricians. Indeed, any reclassification system or strategy useful for creating both an article-level and a journal-level classification will need to be robust to a noisy training set, as it is prohibitively expensive to gather an article-level training set. The last difference is that we used a much bigger set of input data, some of which are less amenable to tokenisation. Indeed, defining tokens for affiliations and source citations or other very rare and specific jargon words seemed suboptimal. These hypotheses helped us choose a model architecture, but because they are untested, other architectures should be explored in future work.

Generally speaking, classifications are only as good as the measurement and validation systems used to put them to the test [ 6 , 7 ]. To examine how precise a deep learning classification technique could be, the results of this approach were compared to two other methods frequently used to map science: bibliographic coupling and direct citation links. This examination was based on scores of citation concentration [ 6 ] and on agreement with a benchmark dataset classified manually.

In addition to the novelty of this experiment with the use of a deep learning technique, the computational experiment was conducted at a very large scale. Often due to computational limits, bibliographic coupling has previously tended to be computed on record samples rather than at the corpus level of bibliographic databases such as Scopus and the Web of Science. The present paper examines the use of deep learning with bibliographic coupling, essentially at the corpus level of the Scopus database. One advantage of the approach proposed in the present paper is that it can be used to classify articles, as well as journals through aggregation of the resulting information. Obtaining a single classification scheme at both scales enables direct comparisons of article-level and journal-level classifications, which has seldom been realized in prior studies [ 10 ]. Lastly, and more importantly, whereas a large sample of the research on classifications addresses the creation of new classifications, this paper examines whether computational classification techniques, including the use of AI, can be used advantageously to maintain existing classification schemes compared to the use of a manual method.

Empirical setting and input data

This paper uses Science-Metrix’s bibliometric-optimized version of the Scopus database to retrieve scientific article metadata records and their associated citation data. This implementation used the 8 May 2019 build of the Scopus database. Publication records from the following types of sources were used in the experiment: book series, conference proceedings, and journals. For book series and journals, only the document types articles, conference papers, reviews, and short surveys were kept. For conference proceedings, only those classified as articles, reviews, and conference papers were used. Additional filters were applied such as removing sources not having valid ISSNs. Overall, 41 million Scopus publication records were used in the experiment.

Training set

Science-Metrix’s journal classification was used to seed the journal classes to which articles or journals would be assigned to test the three computational classification techniques. This classification scheme is available in more than 25 languages. It contains a core set of 15,000 journals and is freely available (see science-metrix.com/classification and Supporting information ). An additional set of some 15,000 journals that had fewer than 30 articles each when the original classification was designed are also used internally. Journals are classified in a mutually exclusive manner into 176 scientific subfields, and these subfields are mapped to 22 fields, which themselves are mapped to six domains.

A first version of that classification tree was designed from the top down by examining and drawing best practices from the classifications used by the US National Science Foundation, the Web of Science (now Clarivate, Thomson Reuters at the time), the Australian Research Council, and the European Science Foundation and the OECD. Journals were assigned to categories seeded from these classifications and then run through several iterations of automated suggestions, drawing insight from direct citation, Latent Dirichlet Allocation [ 24 ], and mapping of departmental names. Each iteration was followed by manual corrections to the suggestions obtained with these signals [ 9 ].

Except for the manual addition of new journals in the production version of the classification, the original version of the classification has not been updated in the last 10 years. As a consequence, some journals may have drifted in terms of subfields. In addition, many of the journals would no doubt be classified more precisely if the coupling were performed again due to improved signals (10 more years of publications means substantially more references and citations).

A newer version of this classification is currently under development and provides greater granularity, having 330 subfields, 33 fields, and seven domains. Although it is not yet used in bibliometric production, it can be seen in the 1findr abstracting and indexing database (see 1findr.com). The techniques experimented with here have been used to develop this expanded version, which currently provides a class for more than 56,000 journals, with an equal amount yet to be classified and more than 130 million scholarly articles waiting to be classified.

Article- and journal-level classification

In contrast to a large share of the papers on classification, which use clustering techniques to determine fields and topics using a bottom-up approach, this experiment maps signals obtained from three coupling techniques to the existing Science-Metrix classification. The coupling/linking is performed at the article level, whereas journal-level classes are determined by the most frequent subfield of the sum of articles in each journal.
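The aggregation from article-level to journal-level classes described above can be sketched as a majority vote over each journal's articles. The function name, the input dictionaries, and the tie-breaking behaviour (first subfield encountered wins) are assumptions for illustration; the source does not say how ties are resolved.

```python
from collections import Counter, defaultdict


def journal_classes(article_subfield, article_journal):
    """Assign each journal the most frequent subfield among its articles."""
    votes = defaultdict(Counter)
    for article_id, subfield in article_subfield.items():
        votes[article_journal[article_id]][subfield] += 1
    # most_common(1) returns the subfield with the highest count;
    # on ties, the subfield counted first is kept (an assumption).
    return {journal: counts.most_common(1)[0][0]
            for journal, counts in votes.items()}


# Hypothetical article-level assignments.
article_subfield = {
    "a1": "Oncology", "a2": "Surgery", "a3": "Oncology",
    "b1": "Optics", "b2": "Optics",
}
article_journal = {
    "a1": "J1", "a2": "J1", "a3": "J1",
    "b1": "J2", "b2": "J2",
}
result = journal_classes(article_subfield, article_journal)
print(result)
```

Here journal J1 is assigned ‘Oncology’ even though one of its articles was classified under ‘Surgery’, which mirrors the oncology and surgery example given in the introduction.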

Benchmarking deep learning against two bibliometric classification techniques

In order to benchmark the result obtained by the more experimental deep learning technique (DL), articles and journals were also mapped to the Science-Metrix classification using two commonly used techniques in bibliometrics: bibliographic coupling (BC) and direct citation (DC).

DL was compared to the other classification techniques using concentration scores of the references in review articles (at both the article and the journal levels) and was also compared to assignations by bibliometrics experts who manually classified articles.

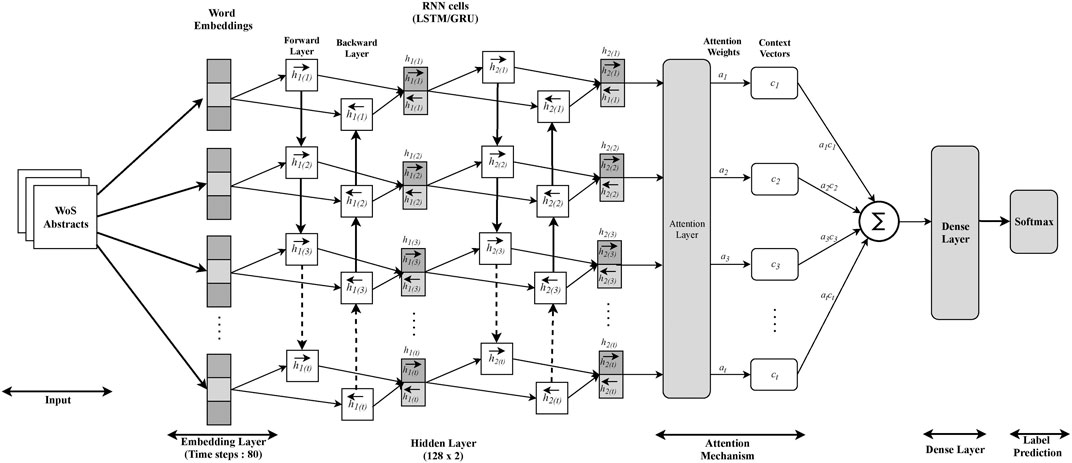

Deep learning: A modified character-based convolutional deep neural network

The classifier deployed a character-based (i.e., the alphabetical letters composing the articles’ text) convolutional deep neural network. From single letters, the model learned words and discovered features. This character-based approach has recently been developed by adapting computer vision strategies to classification and has been used on large datasets of texts such as those from Wikipedia-based ontologies or Yahoo! Answers comment logs [ 25 ]. It has been found to be extremely performant in finding patterns in noisy data.

The model performed best when it was given the following features: authors’ affiliations, names of journals referenced in the bibliography, titles of references, publication abstract, publication keywords, publication title, and classification of publication references. All features, except the classification of publication references, were truncated, but not padded, to a specific maximum length ( Table 1 ) and concatenated into one long string of text, which was itself truncated to a maximum length of 3,000 characters, the 98th percentile of the lengths of the concatenated strings.

| Branch | Feature | Length |
|---|---|---|
| Text branch | Title | 175 |
| Text branch | Keywords | 150 |
| Text branch | Authors’ affiliations | 450 |
| Text branch | Abstract | 1750 |
| Text branch | Journal title of references | 1000 |
| Text branch | Article title of references | 500 |
| Subfield branch | Vector of classifications of a publication’s references | 176 |

The order of this table reflects the items’ order in the model. The lengths represent the maximum allowable length for each feature. Each character was embedded into a one-hot encoded vector of length 68. A one-hot encoded vector is filled with zeroes except for a single one at the position assigned to the character. Table 2 presents an example of character embedding for the word “cab”.

| Position | c | a | b |
|---|---|---|---|
| a | 0 | 1 | 0 |
| b | 0 | 0 | 1 |
| c | 1 | 0 | 0 |

When encoding, the 26 letters of the English alphabet, the 10 Arabic numerals, several punctuation signs (e.g., "-,;.!?:’_/\|&#$%^&*~+=<>()[]{}\), and the space character each occupied one position in the vector. Any character that was not in this list was encoded as a hash sign (i.e., #). The subfield was the only feature not fed to the model as raw text. Instead, the subfield information was encoded into a vector of the proportion of each subfield mentioned in the reference list.
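The input preparation described above (per-feature truncation, concatenation, one-hot encoding with a hash-sign fallback, and the subfield proportion vector) can be sketched as follows. This is a hedged illustration rather than the authors' code. The field names, the single-space separator, the lowercasing step, and the exact contents of `ALPHABET` are assumptions: the text reports 68 positions but gives only examples of the punctuation signs, so the alphabet below is illustrative and does not claim to match it.

```python
import string

# Illustrative alphabet (assumption): letters, digits, some punctuation, space.
ALPHABET = string.ascii_lowercase + string.digits + "-,;.!?:'_/\\|&#$%^*~+=<>()[]{}\" "

# Maximum lengths from Table 1, in model order; the field names are hypothetical.
MAX_LENGTHS = [
    ("title", 175),
    ("keywords", 150),
    ("affiliations", 450),
    ("abstract", 1750),
    ("reference_journal_titles", 1000),
    ("reference_article_titles", 500),
]
MAX_TOTAL = 3000  # cap on the concatenated string


def build_text(record):
    """Truncate each text feature (no padding), concatenate, then truncate again."""
    parts = [record.get(name, "")[:limit] for name, limit in MAX_LENGTHS]
    # Assumption: features are joined with a single space.
    return " ".join(parts)[:MAX_TOTAL]


def one_hot(text):
    """Encode text as a list of one-hot rows; unknown characters map to '#'."""
    index = {ch: i for i, ch in enumerate(ALPHABET)}
    fallback = index["#"]
    rows = []
    for ch in text.lower():  # assumption: text is lowercased before encoding
        row = [0] * len(ALPHABET)
        row[index.get(ch, fallback)] = 1
        rows.append(row)
    return rows


def subfield_proportions(reference_subfields, n_subfields=176):
    """Proportion of each subfield (given as integer ids) in a reference list."""
    vector = [0.0] * n_subfields
    for subfield_id in reference_subfields:
        vector[subfield_id] += 1.0
    total = len(reference_subfields)
    return [v / total for v in vector] if total else vector


encoded = one_hot("cab")
print([row.index(1) for row in encoded])
```

In this sketch, each row of `encoded` corresponds to one character of the input, which is the transpose of the layout shown in Table 2.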

The deep neural network architecture is shown in Fig 2. A rectified linear unit was used as the activation function between each layer, except after the last one, where a softmax was used instead. The kernels had a width of seven for the first two convolutions and three for the others. The model was trained with stochastic gradient descent as the optimizer and categorical cross-entropy as the loss function. The optimizer used a learning rate of 0.01, a Nesterov momentum of 0.9, and a decay of 0.000001. The model was trained on batches of 64 publications at a time, and an epoch was considered passed after ~11,000 articles. The model was trained on a random sample of 24,000,000 articles.
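To make the optimizer settings above concrete, the sketch below applies one common formulation of stochastic gradient descent with Nesterov momentum to a toy one-dimensional problem. It is an illustration under stated assumptions, not the training code: the text does not name the framework or the exact decay schedule, so the inverse-time decay used here, the meaning of `step` (batches or epochs), and the function name are all assumptions.

```python
def sgd_nesterov_step(w, v, grad, step, lr0=0.01, momentum=0.9, decay=1e-6):
    """One parameter update with Nesterov momentum.

    w, v:  current parameter value and velocity
    grad:  gradient of the loss at w
    step:  number of decay steps elapsed so far (assumption: inverse-time decay)
    """
    lr = lr0 / (1.0 + decay * step)
    v_new = momentum * v - lr * grad
    # Nesterov look-ahead: apply the updated velocity plus the plain gradient step.
    w_new = w + momentum * v_new - lr * grad
    return w_new, v_new


# Toy problem: minimize f(w) = w ** 2, whose gradient is 2 * w.
w, v = 1.0, 0.0
for step in range(500):
    grad = 2.0 * w
    w, v = sgd_nesterov_step(w, v, grad, step)
print(w)
```

With a decay of 0.000001, the learning rate changes very slowly, so over a few hundred steps the behaviour is dominated by the base learning rate and the momentum term.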

Other machine learning algorithms

In addition to DL, three other machine learning approaches were considered for inclusion in the comparison to established bibliometric algorithm-based classifications. These are presented below, but all were ultimately outperformed by the DL approach (the modified character-based convolutional deep neural network presented above). To keep the paper focused on the comparison between DL and bibliometric approaches, the rest of the paper does not present results for these three other methods after this section. However, the interested reader can find the results of our experiments in the supplementary material (S1 and S2 Figs, S1 and S3 Tables).

A character-based neural network, without the above-mentioned modifications, was one of the methods tested. The model architecture followed the small ConvNets developed in Zhang et al. (preprint) (20); a short description follows. The input layer was a character-encoded 2D matrix with 70 characters. The input layer was fed into two consecutive convolutional layers of 256 kernels of width 7. Each of those two layers was followed by a max-pooling layer of width 3 and activated with a rectified linear unit. They were followed by four layers of 256 features each, this time of width 3 and without max pooling. The output was then flattened and passed to two fully connected layers of 1,024 nodes each, followed by a 0.5 dropout layer. Finally, after the last dropout, we used a dense layer with as many nodes as there were subfields to predict. This last layer was softmax activated. Stochastic gradient descent with a learning rate of 0.01, a Nesterov momentum of 0.9, and a decay of 0.000001 was used as the optimizer. Mini-batches were of size 64, and an epoch was considered passed (for the learning rate decay) after 11,000 articles were processed. The model was trained on a random sample of 24,000,000 articles.

A support vector machine (SVM) and a logistic regression were also tested as alternative shallow machine learning strategies. Both were based on term frequency–inverse document frequency (TF–IDF) features computed on unigrams and bigrams. The 150,000 tokens with the highest TF–IDF scores were used. Both models were trained on 1 million articles; training on more articles was unnecessary, since preliminary tests showed no improvement beyond 0.75 million articles.
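The TF–IDF features described above can be illustrated with a small standard-library sketch. It is not the authors' pipeline and rests on several assumptions: whitespace tokenization, one common smoothed IDF variant, and ranking tokens by their summed TF–IDF score across documents (the text does not say how scores were aggregated to select the 150,000 tokens). All function names are hypothetical.

```python
import math
from collections import Counter


def ngrams(text):
    """Return the unigrams and bigrams of a whitespace-tokenized, lowercased text."""
    tokens = text.lower().split()
    bigrams = [" ".join(pair) for pair in zip(tokens, tokens[1:])]
    return tokens + bigrams


def tfidf(docs):
    """Compute one {term: score} dictionary per document."""
    term_counts = [Counter(ngrams(doc)) for doc in docs]
    doc_freq = Counter(term for counts in term_counts for term in counts)
    n_docs = len(docs)
    # Assumption: smoothed IDF, ln((1 + N) / (1 + df)) + 1.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    return [{t: tf * idf[t] for t, tf in counts.items()} for counts in term_counts]


def top_terms(scores, k):
    """Keep the k terms with the highest summed score (aggregation is an assumption)."""
    totals = Counter()
    for doc_scores in scores:
        totals.update(doc_scores)
    # Sort by descending score, then alphabetically, so that ties are deterministic.
    return [t for t, _ in sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))[:k]]


docs = ["deep learning text", "deep learning citation"]
scores = tfidf(docs)
vocabulary = top_terms(scores, 2)
print(vocabulary)
```

The selected vocabulary would then define the columns of the feature matrix passed to the SVM or the logistic regression.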

Bibliographic coupling

A BC-based similarity measure between each publication P and each subfield S was calculated and normalized as follows:

where X is the set of all BC values between publication P and all other publications of subfield S, and T is the total number of citations given by all papers of subfield S.
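As an illustration of the raw input to this measure, the sketch below computes the set X of BC values for one publication, using the standard definition of bibliographic coupling strength as the number of references two publications share. The function name and the toy reference identifiers are hypothetical, and the normalization by T is deliberately left out of this sketch.

```python
def bc_values(refs_p, subfield_refs):
    """Return the BC value between publication P and each publication of subfield S.

    refs_p:        set of reference ids cited by publication P
    subfield_refs: list of reference-id sets, one per publication in subfield S
    """
    # The BC value of a pair is the size of the overlap of their reference lists.
    return [len(refs_p & refs) for refs in subfield_refs]


# Hypothetical toy data: P cites r1, r2, r3; subfield S holds three publications.
x = bc_values({"r1", "r2", "r3"}, [{"r1", "r2"}, {"r4"}, {"r3", "r5"}])
print(x)
```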

Direct citation

A DC-based similarity measure between one publication P and one subfield S was calculated and normalized as follows:

where inCits is the number of citations received by paper P from all papers in subfield S , outCits is the number of citations given by paper P to subfield S , subfieldNR is the total number of citations given by subfield S , and paperNR is the total number of citations given by paper P . In the end, paper P is classified in the subfield S with the highest DCsimilarity . This is calculated for all papers crossed with all subfields.

Bibliometricians and machine learning researchers take different approaches to evaluating classification tasks. In bibliometrics, the task is often to search for the “true” classification, and no clear gold standard datasets exist for article-level classification of scientific publications. To mitigate the lack of a gold standard and to evaluate classification schemes quantitatively, the concentration of reviews’ references (Herfindahl index) currently serves as the evaluation metric for such a task (more on that below) [6]. In machine learning research, it has become standard to evaluate classification methods by splitting the dataset into three sections: training, validation, and test. Unfortunately, this was not strictly possible here because the bibliometrics-based classification strategies (DC, BC) do not lend themselves well to such a dataset split. More importantly, the training set used here is only a rough approximation of what we want to achieve and not the ground truth per se. Indeed, the training set comprises articles roughly labeled by extending a journal’s label (from disciplinary journals) to all its articles, whereas our task is to classify each article independently of its journal’s classification. By doing so, we created a training set for which 56% of the articles corresponded to the mode classification of 5 experts. That said, we still present those standard evaluation metrics in the supplementary material, but we focus on two other evaluation strategies: a manually assembled gold standard produced by human experts (which acts as our test set) and the Herfindahl index.

Performance measurements were produced for both article- and journal-level classification algorithms. We note already, however, that the bibliometric community is decisively moving toward article-level classification, where possible, for the reasons presented above, and that measurements for journal-based classification algorithms benefit from prior use of citation-based algorithms in the construction of the Science-Metrix ontology.

Comparison to training set

Precision, recall, specificity, sensitivity, and F1 were calculated. To measure the scores, the complete dataset was used (as opposed to a validation/test split); this was done to avoid biasing the results toward BC and DC, as they are not amenable to the train/validation/test split. Moreover, in all machine learning cases, the training datasets were smaller than the validation dataset, which limits the confounding effect that overfitting could have. Furthermore, the training set is not ground truth: only 56% of the articles corresponded to the mode classification of 5 experts. In other words, 44% of the labels in the training set were suboptimally assigned, which greatly limits the relevance of such metrics. The results are therefore presented in the supplementary materials. The comparison to the training set is done mostly to follow the academic standard of the machine learning discipline, and the reader should interpret its results with great caution. No statistical tests were used, as we measured precision, recall, specificity, sensitivity, and F1 on the whole dataset relevant for this task (i.e., the population) as opposed to using a few random samples [26].
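For reference, the metrics named above follow their standard one-vs-rest definitions, and a macro average is the unweighted mean of the per-class values. The sketch below is a minimal standard-library illustration with hypothetical function names and toy labels; it is not the evaluation code used in the study. Note that recall and sensitivity are the same quantity.

```python
def class_metrics(y_true, y_pred, label):
    """One-vs-rest precision, recall (sensitivity), specificity, and F1 for a label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    tn = sum(t != label and p != label for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}


def macro_average(y_true, y_pred):
    """Unweighted mean of each metric over all labels present in y_true."""
    labels = sorted(set(y_true))
    per_class = [class_metrics(y_true, y_pred, label) for label in labels]
    return {name: sum(m[name] for m in per_class) / len(per_class)
            for name in per_class[0]}


# Hypothetical toy labels for two subfields, A and B.
y_true = ["A", "A", "B", "B"]
y_pred = ["A", "B", "B", "B"]
macro = macro_average(y_true, y_pred)
print(macro)
```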

Benchmark 1 (B1): Citation concentration (Herfindahl index)

As proposed by Klavans and Boyack [6], a citation concentration score was calculated on all articles having 100 or more references that could successfully be classified (i.e., the cited journals were in the set of classified journals). This technique assumes that large review articles tend to present an exhaustive analysis of a phenomenon by summarizing content from a large volume of prior research in a single or a few research subfields and specialties. In other words, all else being equal, review papers would inherently capture clusters of topically related publications and would therefore act as appropriate reference points for benchmarking degrees of disciplinary concentration. A more accurate classification algorithm should therefore lead to a larger Herfindahl index. This use of the Herfindahl index as an accuracy measure applied to bibliometric classification appears to have been a novel development by Boyack and Klavans [27, 28]. Citation distribution profiles have also been measured with the Herfindahl index in a small number of unrelated studies aiming to assess the concentration and direction of “citedness” (i.e., uptake) among large populations of peer-reviewed articles [29].

The Herfindahl index itself originates in the economic literature but has been applied over the last decade to measure the concentration of citations within a corpus [29]. It has also been applied as a measure of disciplinary diversity among citations. Klavans and Boyack’s application of the index to measure clustering performance, however, appears to us to be a novel development.

Herfindahl index scores were measured for 379,413 papers. Citation concentration by subfield was calculated with the Herfindahl index for DL, BC, and DC, at the article and journal levels.
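The Herfindahl index is the sum of the squared shares of each category. Applied here, the categories are the subfields assigned to the references of one review article. The sketch below is a minimal illustration with a hypothetical function name and toy data, not the code used in the study.

```python
from collections import Counter


def herfindahl(reference_subfields):
    """Sum of squared subfield shares among the classified references of one paper."""
    counts = Counter(reference_subfields)
    total = sum(counts.values())
    return sum((n / total) ** 2 for n in counts.values())


# Hypothetical toy reference lists.
concentrated = herfindahl(["Economics"] * 100)                   # one subfield only
mixed = herfindahl(["Economics"] * 3 + ["Economic theory"] * 1)  # shares 0.75, 0.25
print(concentrated, mixed)
```

The index equals 1 when all references fall in a single subfield and approaches 1/n when they are spread evenly over n subfields, which is why a higher score is read as a more concentrated, and presumably more accurate, classification.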

Benchmark 2 (B2): Manual article classification

To create a test set, five bibliometric analysts were asked to manually classify the same set of 100 randomly sampled scientific publications from disciplinary journals, and six other analysts were asked to do the same for another set of 100 articles from multidisciplinary journals. The analysts were asked to classify the publications as best they could, using whichever information was at their disposal. Most analysts used search engines to acquire additional information about authors and their affiliation. Analysts could assign more than one subfield to a publication when they were uncertain, in which case they were asked to rank subfields by relevance.

For each of the three classification techniques, the percentage of agreement between the computational and the manual classification was computed (the subfield most often assigned as the first choice by manual classification was used as the benchmark; in case of a tie, all top subfields were kept and computed classes were given a point for matching one or the other).
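The agreement rule described above can be sketched as follows: the benchmark for each article is the subfield most often given as first choice by the analysts, all tied subfields are kept, and a computed class scores a point if it matches any of them. The function names and toy data are hypothetical; this is an illustration of the rule, not the authors' code.

```python
from collections import Counter


def benchmark_labels(first_choices):
    """Return the set of subfields most often assigned as first choice (ties kept)."""
    counts = Counter(first_choices)
    top = max(counts.values())
    return {subfield for subfield, n in counts.items() if n == top}


def percent_agreement(predictions, expert_first_choices):
    """Percentage of articles whose computed class matches a benchmark subfield."""
    hits = sum(
        predicted in benchmark_labels(choices)
        for predicted, choices in zip(predictions, expert_first_choices)
    )
    return 100.0 * hits / len(predictions)


# Hypothetical toy data: three articles, five analysts each.
expert_first_choices = [
    ["A", "A", "B", "A", "C"],  # clear mode: A
    ["A", "A", "B", "B", "C"],  # tie between A and B: both are kept
    ["C", "C", "C", "C", "C"],  # unanimous: C
]
predictions = ["A", "B", "A"]
agreement = percent_agreement(predictions, expert_first_choices)
print(round(agreement, 1))
```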

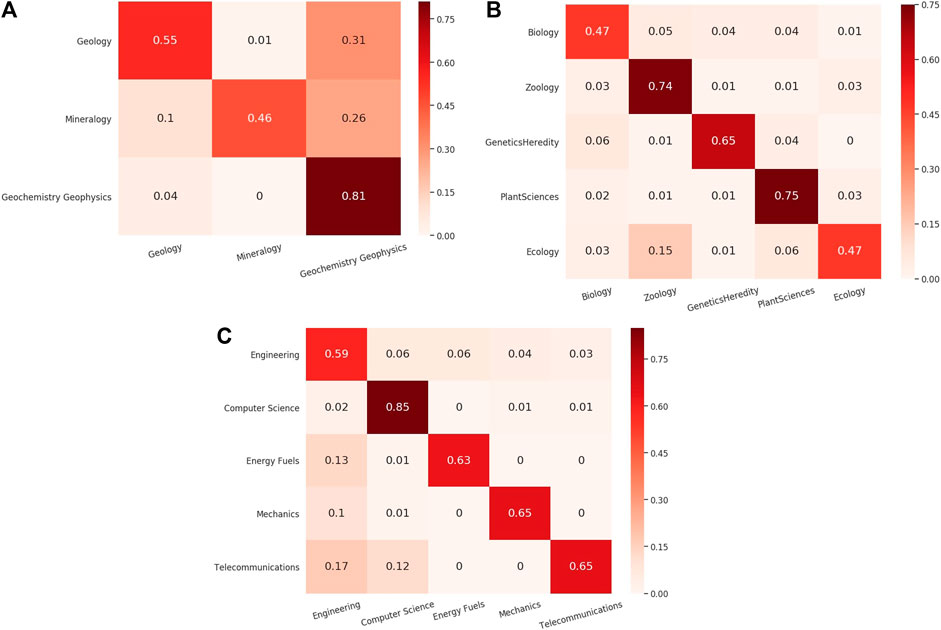

A comparison between each classification strategy and the Science-Metrix classification (which served as the training dataset) provided a first proxy to evaluate the quality of a classification strategy. DL tended to replicate the Science-Metrix classification more closely than BC and DC did, given its higher macro-averaged F1 score and much higher macro-averaged precision (S3 Table; see S1 Table for precision per subfield). That said, all journal-level classification strategies were closer to the Science-Metrix journal-level classification than any article-level classification strategy (S3 Table), which is easily explained by the fact that the Science-Metrix classification is itself at the journal level. A measurement of the pairs of subfields most frequently substituted by article-level DL and DC revealed that these pairs were generally formed of topically similar subfields. For example, the subfield Networking & telecommunications was assigned to an article belonging to Automobile design & engineering (according to the journal-level Science-Metrix classification) 65% of the time by DL (DC = 59%), Economic theory was substituted with Economics 51% of the time (DC = 34%), Mining & metallurgy was confused with Materials 47% of the time for DL (DC = 23%), and Horticulture with Plant biology & botany 42% of the time for DL (DC = 39%) (see S2 Table for full results). Altogether, these results show that very different strategies can similarly approximate a journal classification. This first set of results should be interpreted with caution since the Science-Metrix classification was an imperfect (53% accurate) journal-level classification used as a training set in the absence of a gold standard to act as a ground truth.

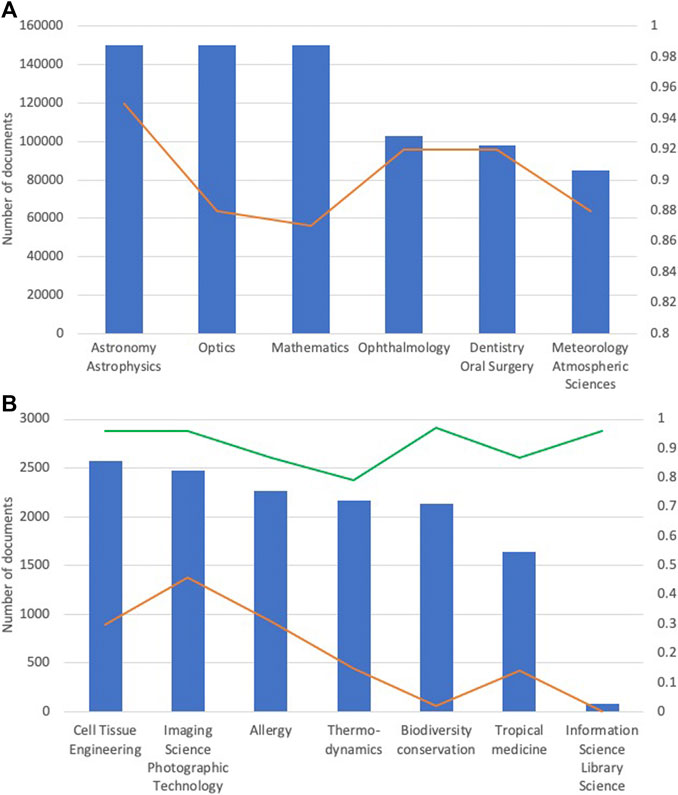

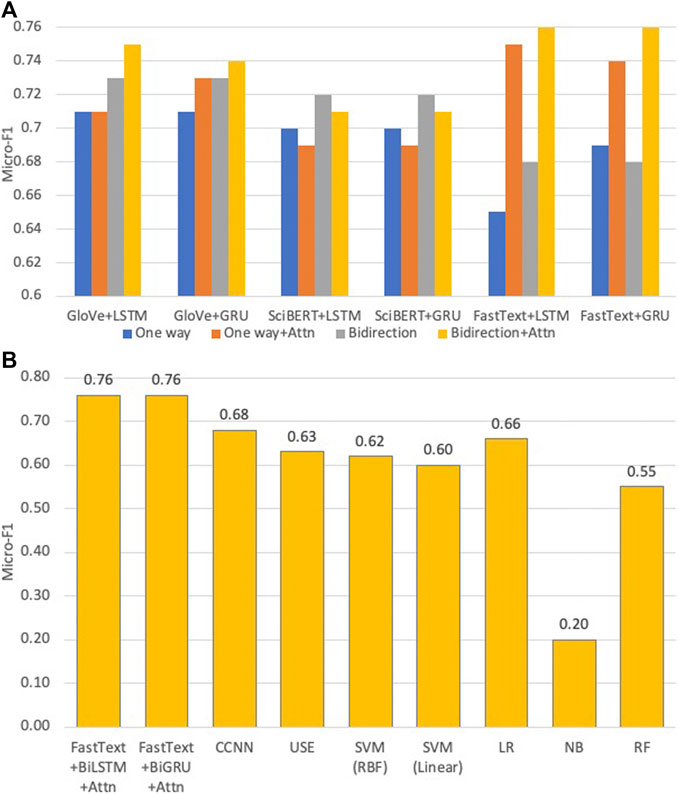

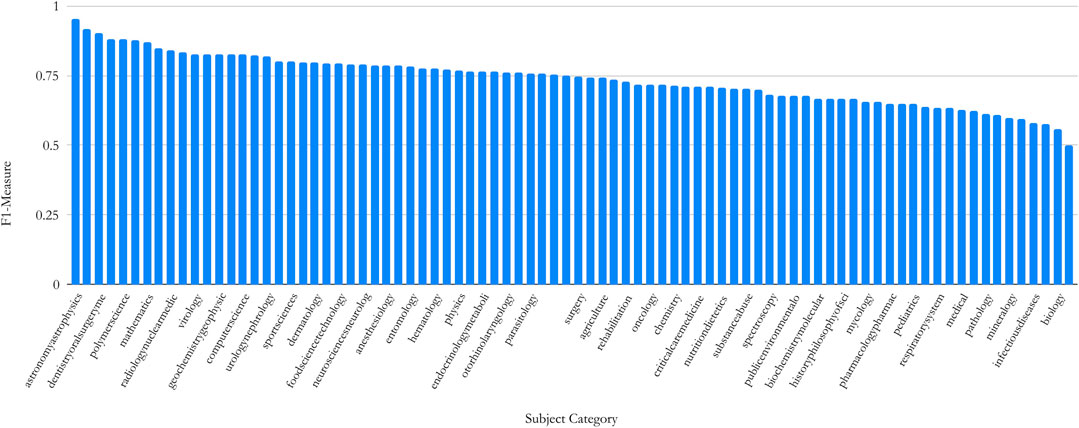

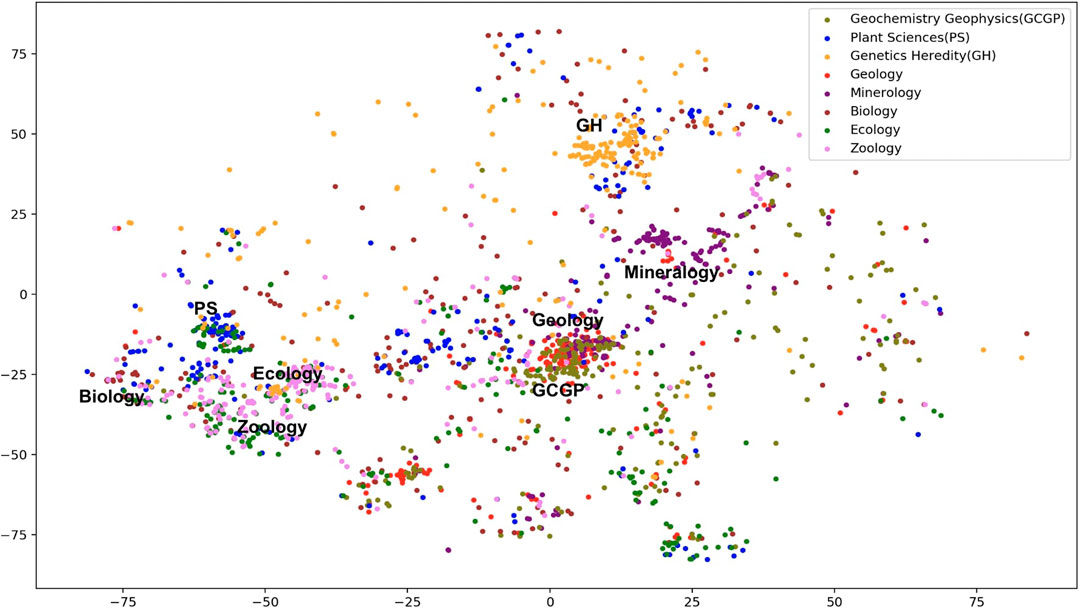

Median Herfindahl index scores obtained for the three classification techniques (B1) reveal that, though the scores are not markedly different, DL is the least effective technique for classifying articles (DL = 0.35 vs. 0.39 for BC and 0.43 for DC) and is fairly similar to DC when classifying journals (tie at 0.29 for DL and DC, with BC slightly lower at 0.27) (Fig 3). DL’s article-level median was the lowest of the three techniques because it had fewer high scores (as opposed to more low scores). This is shown in the width of the violins and the spread of the top boxes of the boxplots in Fig 3. More precisely, the bottom parts of the violins are of equivalent width (as is the spread of the lower boxes), whereas the top part of the violin is narrowest for DL.

The curves show a distribution of all the 379,413 Herfindahl scores that were computed for each classification technique, while the pale grey thicker vertical lines are outliers.