What Is Hypothesis Testing? An In-Depth Guide with Python Examples

Hypothesis testing allows us to make data-driven decisions by testing assertions about populations. It is the backbone behind scientific research, business analytics, financial modeling, and more.

This comprehensive guide aims to solidify your understanding with:

- Explanations of key terminology and the overall hypothesis testing process

- Python code examples for t-tests, z-tests, chi-squared, and other methods

- Real-world examples spanning science, business, politics, and technology

- A frank discussion around limitations and misapplications

- Next steps to mastering practical statistics with Python

So let‘s get comfortable with making statements, gathering evidence, and letting the data speak!

Fundamentals of Hypothesis Testing

Hypothesis testing is structured around making a claim in the form of competing hypotheses, gathering data, performing statistical tests, and making decisions about which hypothesis the evidence supports.

Here are some key terms about hypotheses and the testing process:

Null Hypothesis ($H_0$): The default statement about a population parameter. Generally asserts that there is no statistical significance between two data sets or that a sample parameter equals some claimed population parameter value. The statement being tested that is either rejected or supported.

Alternative Hypothesis ($H_1$): The statement that sample observations indicate statistically significant effect or difference from what the null hypothesis states. $H_1$ and $H_0$ are mutually exclusive, meaning if statistical tests support rejecting $H_0$, then you conclude $H_1$ has strong evidence.

Significance Level ($\alpha$): The probability of incorrectly rejecting a true null hypothesis, known as making a Type I error. Common significance levels are 90%, 95%, and 99%. The lower significance level, the more strict the criteria is for rejecting $H_0$.

Test Statistic: Summary calculations of sample data including mean, proportion, correlation coefficient, etc. Used to determine statistical significance and improbability under $H_0$.

P-value: Probability of obtaining sample results at least as extreme as the test statistic, assuming $H_0$ is true. Small p-values indicate strong statistical evidence against the null hypothesis.

Type I Error: Incorrectly rejecting a true null hypothesis

Type II Error : Failing to reject a false null hypothesis

These terms set the stage for the overall process:

1. Make Hypotheses

Define the null ($H_0$) and alternative hypothesis ($H_1$).

2. Set Significance Level

Typical significance levels are 90%, 95%, and 99%. Higher significance means more strict burden of proof for rejecting $H_0$.

3. Collect Data

Gather sample and population data related to the hypotheses under examination.

4. Determine Test Statistic

Calculate relevant test statistics like p-value, z-score, t-statistic, etc along with degrees of freedom.

5. Compare to Significance Level

If the test statistic falls in the critical region based on the significance, reject $H_0$, otherwise fail to reject $H_0$.

6. Draw Conclusions

Make determinations about hypotheses given the statistical evidence and context of the situation.

Now that you know the process and objectives, let’s apply this to some concrete examples.

Python Examples of Hypothesis Tests

We‘ll demonstrate hypothesis testing using Numpy, Scipy, Pandas and simulated data sets. Specifically, we‘ll conduct and interpret:

- Two sample t-tests

- Paired t-tests

- Chi-squared tests

These represent some of the most widely used methods for determining statistical significance between groups.

We‘ll plot the data distributions to check normality assumptions where applicable. And determine if evidence exists to reject the null hypotheses across several scenarios.

Two Sample T-Test with NumPy

Two sample t-tests determine whether the mean of a numerical variable differs significantly across two independent groups. It assumes observations follow approximate normal distributions within each group, but not that variances are equal.

Let‘s test for differences in reported salaries at hypothetical Company X vs Company Y:

$H_0$ : Average reported salaries are equal at Company X and Company Y

$H_1$ : Average reported salaries differ between Company X and Company Y

First we‘ll simulate salary samples for each company based on random normal distributions, set a 95% confidence level, run the t-test using NumPy, then interpret.

The t-statistic of 9.35 shows the difference between group means is nearly 9.5 standard errors. The very small p-value rejects the idea the salaries are equal across a randomly sampled population of employees.

Since the test returned a p-value lower than the significance level, we reject $H_0$, meaning evidence supports $H_1$ that average reported salaries differ between these hypothetical companies.

Paired T-Test with Pandas

While an independent groups t-test analyzes mean differences between distinct groups, a paired t-test looks for significant effects pre vs post some treatment within the same set of subjects. This helps isolate causal impacts by removing effects from confounding individual differences.

Let‘s analyze Amazon purchase data to determine if spending increases during the holiday months of November and December.

$H_0$ : Average monthly spending is equal pre-holiday and during the holiday season

$H_1$ : Average monthly spending increases during the holiday season

We‘ll import transaction data using Pandas, add seasonal categories, then run and interpret the paired t-test.

Since the p-value is below the 0.05 significance level, we reject $H_0$. The output shows statistically significant evidence at 95% confidence that average spending increases during November-December relative to January-October.

Visualizing the monthly trend helps confirm the spike during the holiday months.

Single Sample Z-Test with NumPy

A single sample z-test allows testing whether a sample mean differs significantly from a population mean. It requires knowing the population standard deviation.

Let‘s test if recently surveyed shoppers differ significantly in their reported ages from the overall customer base:

$H_0$ : Sample mean age equals population mean age of 39

$H_1$ : Sample mean age does not equal population mean of 39

Here the absolute z-score over 2 and p-value under 0.05 indicates statistically significant evidence that recently surveyed shopper ages differ from the overall population parameter.

Chi-Squared Test with SciPy

Chi-squared tests help determine independence between categorical variables. The test statistic measures deviations between observed and expected outcome frequencies across groups to determine magnitude of relationship.

Let‘s test if credit card application approvals are independent across income groups using simulated data:

$H_0$ : Credit card approvals are independent of income level

$H_1$ : Credit approvals and income level are related

Since the p-value is greater than the 0.05 significance level, we fail to reject $H_0$. There is not sufficient statistical evidence to conclude that credit card approval rates differ by income categories.

ANOVA with StatsModels

Analysis of variance (ANOVA) hypothesis tests determine if mean differences exist across more than two groups. ANOVA expands upon t-tests for multiple group comparisons.

Let‘s test if average debt obligations vary depending on highest education level attained.

$H_0$ : Average debt obligations are equal across education levels

$H_1$ : Average debt obligations differ based on education level

We‘ll simulate ordered education and debt data for visualization via box plots and then run ANOVA.

The ANOVA output shows an F-statistic of 91.59 that along with a tiny p-value leads to rejecting $H_0$. We conclude there are statistically significant differences in average debt obligations based on highest degree attained.

The box plots visualize these distributions and means vary across four education attainment groups.

Real World Hypothesis Testing

Hypothesis testing forms the backbone of data-driven decision making across science, research, business, public policy and more by allowing practitioners to draw statistically-validated conclusions.

Here is a sample of hypotheses commonly tested:

- Ecommerce sites test if interface updates increase user conversions

- Ridesharing platforms analyze if surge pricing reduces wait times

- Subscription services assess if free trial length impacts customer retention

- Manufacturers test if new production processes improve output yields

Pharmaceuticals

- Drug companies test efficacy of developed compounds against placebo groups

- Clinical researchers evaluate impacts of interventions on disease factors

- Epidemiologists study if particular biomarkers differ in afflicted populations

- Software engineers measure if algorithm optimizations improve runtime complexity

- Autonomous vehicles assess whether new sensors reduce accident rates

- Information security analyzes if software updates decrease vulnerability exploits

Politics & Social Sciences

- Pollsters determine if candidate messaging influences voter preference

- Sociologists analyze if income immobility changed across generations

- Climate scientists examine anthropogenic factors contributing to extreme weather

This represents just a sample of the wide ranging real-world applications. Properly formulated hypotheses, statistical testing methodology, reproducible analysis, and unbiased interpretation helps ensure valid reliable findings.

However, hypothesis testing does still come with some limitations worth addressing.

Limitations and Misapplications

While hypothesis testing empowers huge breakthroughs across disciplines, the methodology does come with some inherent restrictions:

Over-reliance on p-values

P-values help benchmark statistical significance, but should not be over-interpreted. A large p-value does not necessarily mean the null hypothesis is 100% true for the entire population. And small p-values do not directly prove causality as confounding factors always exist.

Significance also does not indicate practical real-world effect size. Statistical power calculations should inform necessary sample sizes to detect desired effects.

Errors from Multiple Tests

Running many hypothesis tests by chance produces some false positives due to randomness. Analysts should account for this by adjusting significance levels, pre-registering testing plans, replicating findings, and relying more on meta-analyses.

Poor Experimental Design

Bad data, biased samples, unspecified variables, and lack of controls can completely undermine results. Findings can only be reasonably extended to populations reflected by the test samples.

Garbage in, garbage out definitely applies to statistical analysis!

Assumption Violations

Most common statistical tests make assumptions about normality, homogeneity of variance, independent samples, underlying variable relationships. Violating these premises invalidates reliability.

Transformations, bootstrapping, or non-parametric methods can help navigate issues for sound methodology.

Lack of Reproducibility

The replication crisis impacting scientific research highlights issues around lack of reproducibility, especially involving human participants and high complexity systems. Randomized controlled experiments with strong statistical power provide much more reliable evidence.

While hypothesis testing methodology is rigorously developed, applying concepts correctly proves challenging even among academics and experts!

Next Level Hypothesis Testing Mastery

We‘ve covered core concepts, Python implementations, real-world use cases, and inherent limitations around hypothesis testing. What should you master next?

Parametric vs Non-parametric

Learn assumptions and application differences between parametric statistics like z-tests and t-tests that assume normal distributions versus non-parametric analogs like Wilcoxon signed-rank tests and Mann-Whitney U tests.

Effect Size and Power

Look beyond just p-values to determine practical effect magnitude using indexes like Cohen‘s D. And ensure appropriate sample sizes to detect effects using prospective power analysis.

Alternatives to NHST

Evaluate Bayesian inference models and likelihood ratios that move beyond binary reject/fail-to-reject null hypothesis outcomes toward more integrated evidence.

Tiered Testing Framework

Construct reusable classes encapsulating data processing, visualizations, assumption checking, and statistical tests for maintainable analysis code.

Big Data Integration

Connect statistical analysis to big data pipelines pulling from databases, data lakes and APIs at scale. Productionize analytics.

I hope this end-to-end look at hypothesis testing methodology, Python programming demonstrations, real-world grounding, inherent restrictions and next level considerations provides a launchpad for practically applying core statistics! Please subscribe using the form below for more data science tutorials.

Dr. Alex Mitchell is a dedicated coding instructor with a deep passion for teaching and a wealth of experience in computer science education. As a university professor, Dr. Mitchell has played a pivotal role in shaping the coding skills of countless students, helping them navigate the intricate world of programming languages and software development.

Beyond the classroom, Dr. Mitchell is an active contributor to the freeCodeCamp community, where he regularly shares his expertise through tutorials, code examples, and practical insights. His teaching repertoire includes a wide range of languages and frameworks, such as Python, JavaScript, Next.js, and React, which he presents in an accessible and engaging manner.

Dr. Mitchell’s approach to teaching blends academic rigor with real-world applications, ensuring that his students not only understand the theory but also how to apply it effectively. His commitment to education and his ability to simplify complex topics have made him a respected figure in both the university and online learning communities.

Similar Posts

Intro to Property-Based Testing in Python

Property-based testing is an innovative technique for testing software through specifying invariant properties rather than manual…

What is Docker? Learn How to Use Containers – Explained with Examples

Docker‘s lightweight container virtualization has revolutionized development workflows. This comprehensive guide demystifies Docker fundamentals while equipping…

How to Create a Timeline Component with React

As a full-stack developer, building reusable UI components is a key skill. In this comprehensive 3200+…

A Brief History of the Command Line

The command line interface (CLI) has been a constant companion of programmers, system administrators and power…

Why I love Vim: It’s the lesser-known features that make it so amazing

Credit: Unsplash Vim has been my go-to text editor for years. As a full-stack developer, I…

How I Started the Process of Healing a Dying Software Group

As the new manager of a struggling 20-person software engineering team, I faced serious challenges that…

Your Data Guide

How to Perform Hypothesis Testing Using Python

Step into the intriguing world of hypothesis testing, where your natural curiosity meets the power of data to reveal truths!

This article is your key to unlocking how those everyday hunches—like guessing a group’s average income or figuring out who owns their home—can be thoroughly checked and proven with data.

Thanks for reading Your Data Guide! Subscribe for free to receive new posts and support my work.

I am going to take you by the hand and show you, in simple steps, how to use Python to explore a hypothesis about the average yearly income.

By the time we’re done, you’ll not only get the hang of creating and testing hypotheses but also how to use statistical tests on actual data.

Perfect for up-and-coming data scientists, anyone with a knack for analysis, or just if you’re keen on data, get ready to gain the skills to make informed decisions and turn insights into real-world actions.

Join me as we dive deep into the data, one hypothesis at a time!

Before we get started, elevate your data skills with my expert eBooks—the culmination of my experiences and insights.

Support my work and enhance your journey. Check them out:

eBook 1: Personal INTERVIEW Ready “SQL” CheatSheet

eBook 2: Personal INTERVIEW Ready “Statistics” Cornell Notes

Best Selling eBook: Top 50+ ChatGPT Personas for Custom Instructions

Data Science Bundle ( Cheapest ): The Ultimate Data Science Bundle: Complete

ChatGPT Bundle ( Cheapest ): The Ultimate ChatGPT Bundle: Complete

💡 Checkout for more such resources: https://codewarepam.gumroad.com/

What is a hypothesis, and how do you test it?

A hypothesis is like a guess or prediction about something specific, such as the average income or the percentage of homeowners in a group of people.

It’s based on theories, past observations, or questions that spark our curiosity.

For instance, you might predict that the average yearly income of potential customers is over $50,000 or that 60% of them own their homes.

To see if your guess is right, you gather data from a smaller group within the larger population and check if the numbers ( like the average income, percentage of homeowners, etc. ) from this smaller group match your initial prediction.

You also set a rule for how sure you need to be to trust your findings, often using a 5% chance of error as a standard measure . This means you’re 95% confident in your results. — Level of Significance (0.05)

There are two main types of hypotheses : the null hypothesi s, which is your baseline saying there’s no change or difference, and the alternative hypothesis , which suggests there is a change or difference.

For example,

If you start with the idea that the average yearly income of potential customers is $50,000,

The alternative could be that it’s not $50,000—it could be less or more, depending on what you’re trying to find out.

To test your hypothesis, you calculate a test statistic —a number that shows how much your sample data deviates from what you predicted.

How you calculate this depends on what you’re studying and the kind of data you have. For example, to check an average, you might use a formula that considers your sample’s average, the predicted average, the variation in your sample data, and how big your sample is.

This test statistic follows a known distribution ( like the t-distribution or z-distribution ), which helps you figure out the p-value.

The p-value tells you the odds of seeing a test statistic as extreme as yours if your initial guess was correct.

A small p-value means your data strongly disagrees with your initial guess.

Finally, you decide on your hypothesis by comparing the p-value to your error threshold.

If the p-value is smaller or equal, you reject the null hypothesis, meaning your data shows a significant difference that’s unlikely due to chance.

If the p-value is larger, you stick with the null hypothesis , suggesting your data doesn’t show a meaningful difference and any change might just be by chance.

We’ll go through an example that tests if the average annual income of prospective customers exceeds $50,000.

This process involves stating hypotheses , specifying a significance level , collecting and analyzing data , and drawing conclusions based on statistical tests.

Example: Testing a Hypothesis About Average Annual Income

Step 1: state the hypotheses.

Null Hypothesis (H0): The average annual income of prospective customers is $50,000.

Alternative Hypothesis (H1): The average annual income of prospective customers is more than $50,000.

Step 2: Specify the Significance Level

Significance Level: 0.05, meaning we’re 95% confident in our findings and allow a 5% chance of error.

Step 3: Collect Sample Data

We’ll use the ProspectiveBuyer table, assuming it's a random sample from the population.

This table has 2,059 entries, representing prospective customers' annual incomes.

Step 4: Calculate the Sample Statistic

In Python, we can use libraries like Pandas and Numpy to calculate the sample mean and standard deviation.

SampleMean: 56,992.43

SampleSD: 32,079.16

SampleSize: 2,059

Step 5: Calculate the Test Statistic

We use the t-test formula to calculate how significantly our sample mean deviates from the hypothesized mean.

Python’s Scipy library can handle this calculation:

T-Statistic: 4.62

Step 6: Calculate the P-Value

The p-value is already calculated in the previous step using Scipy's ttest_1samp function, which returns both the test statistic and the p-value.

P-Value = 0.0000021

Step 7: State the Statistical Conclusion

We compare the p-value with our significance level to decide on our hypothesis:

Since the p-value is less than 0.05, we reject the null hypothesis in favor of the alternative.

Conclusion:

There’s strong evidence to suggest that the average annual income of prospective customers is indeed more than $50,000.

This example illustrates how Python can be a powerful tool for hypothesis testing, enabling us to derive insights from data through statistical analysis.

How to Choose the Right Test Statistics

Choosing the right test statistic is crucial and depends on what you’re trying to find out, the kind of data you have, and how that data is spread out.

Here are some common types of test statistics and when to use them:

T-test statistic:

This one’s great for checking out the average of a group when your data follows a normal distribution or when you’re comparing the averages of two such groups.

The t-test follows a special curve called the t-distribution . This curve looks a lot like the normal bell curve but with thicker ends, which means more chances for extreme values.

The t-distribution’s shape changes based on something called degrees of freedom , which is a fancy way of talking about your sample size and how many groups you’re comparing.

Z-test statistic:

Use this when you’re looking at the average of a normally distributed group or the difference between two group averages, and you already know the standard deviation for all in the population.

The z-test follows the standard normal distribution , which is your classic bell curve centered at zero and spreading out evenly on both sides.

Chi-square test statistic:

This is your go-to for checking if there’s a difference in variability within a normally distributed group or if two categories are related.

The chi-square statistic follows its own distribution, which leans to the right and gets its shape from the degrees of freedom —basically, how many categories or groups you’re comparing.

F-test statistic:

This one helps you compare the variability between two groups or see if the averages of more than two groups are all the same, assuming all groups are normally distributed.

The F-test follows the F-distribution , which is also right-skewed and has two types of degrees of freedom that depend on how many groups you have and the size of each group.

In simple terms, the test you pick hinges on what you’re curious about, whether your data fits the normal curve, and if you know certain specifics, like the population’s standard deviation.

Each test has its own special curve and rules based on your sample’s details and what you’re comparing.

Join my community of learners! Subscribe to my newsletter for more tips, tricks, and exclusive content on mastering Data Science & AI. — Your Data Guide Join my community of learners! Subscribe to my newsletter for more tips, tricks, and exclusive content on mastering data science and AI. By Richard Warepam ⭐️ Visit My Gumroad Shop: https://codewarepam.gumroad.com/

Ready for more?

Life With Data

- by bprasad26

How to Perform Hypothesis Testing in Python

Hypothesis testing is a critical aspect of data science and statistical analysis that lets you infer the relationship between variables in a dataset. It helps you make informed decisions by providing statistical proof of a claim or statement. Python, a popular language for data science, offers multiple packages that can perform hypothesis testing, such as NumPy, SciPy, and StatsModels.

In this comprehensive guide, we will delve into the concept of hypothesis testing, the steps involved in performing one, and a step-by-step approach to conducting hypothesis testing using Python, with examples.

Table of Contents

- Understanding Hypothesis Testing

- Steps to Perform Hypothesis Testing

- Setting Up the Environment

Example 1: One Sample T-test

Example 2: independent two sample t-test, example 3: paired sample t-test, example 4: chi-square test, 1. understanding hypothesis testing.

Before diving into the practical implementation, it’s essential to understand what a hypothesis is and why it is critical in statistical analysis and data science. A hypothesis is essentially an assumption that we make about the population parameters based on the observed data.

The hypothesis testing process aims to determine whether there is enough statistical evidence in favor of a certain belief or assumption regarding the population. It involves two types of hypotheses:

- Null Hypothesis (H0) : It is a statement about the population that either is believed to be true or is used to put forth an argument unless it can be shown to be incorrect beyond a reasonable doubt.

- Alternative Hypothesis (H1) : It is a claim about the population that is contradictory to the null hypothesis and what we would conclude when the null hypothesis is found to be unlikely.

The objective of hypothesis testing is to provide statistical evidence whether the null hypothesis is true or not.

2. Steps to Perform Hypothesis Testing

The general steps to perform hypothesis testing are:

- Define the Null and Alternative Hypothesis : First, you need to state the null hypothesis and the alternative hypothesis based on the problem statement or question.

- Choose a Significance Level : The significance level, often denoted by alpha (α), is a probability threshold that determines when you reject the null hypothesis. Commonly used values are 0.01, 0.05, and 0.1.

- Select the Appropriate Test : Depending on the nature of your data and the question you’re trying to answer, you’ll choose a specific statistical test (e.g., t-test, chi-square test, ANOVA, etc.)

- Compute the Test Statistic : This involves calculating the test statistic using the appropriate formula.

- Make a Decision : Based on the computed test statistic, you will reject or fail to reject the null hypothesis. If the p-value is less than the chosen significance level, you reject the null hypothesis.

3. Setting Up the Environment

To perform hypothesis testing in Python, you need to install some essential packages, such as numpy, scipy, pandas, and matplotlib. You can install them using pip:

After the installation, import the required libraries:

4. Hypothesis Testing in Python: Examples

One sample T-test is used when we want to compare the mean of a population to a specified value. For example, let’s consider a scenario where we want to test if the average height of men in a town is 180 cm.

If the p-value is less than our significance level (let’s say 0.05), we reject the null hypothesis.

The independent two sample T-test is used when we want to compare the means of two independent groups. Let’s take an example where we want to compare the average heights of men and women.

Here, again if the p-value is less than our significance level (0.05), we reject the null hypothesis.

A paired sample T-test is used when we want to compare the means of the same group at two different times. For example, let’s consider a scenario where we want to test the effect of a training program on weight loss.

Here, if the p-value is less than our significance level (0.05), we reject the null hypothesis.

A Chi-square test is used when we want to see if there is a relationship between two categorical variables. For instance, let’s test if there is a relationship between gender and preference for a certain product.

In this case, if the p-value is less than our significance level (0.05), we reject the null hypothesis that there is no relationship between gender and product preference.

5. Conclusion

Hypothesis testing is a crucial aspect of data science and statistical analysis. It provides a statistical framework that allows you to make decisions based on data. Python, with its robust statistical libraries, is a great tool for performing these tests.

Remember that while hypothesis testing can provide powerful insights, it is not infallible. The results of a hypothesis test are merely statistical inferences and are subject to a certain level of uncertainty. Always carefully consider the design of your study, your choice of hypotheses, and the assumptions of the statistical tests you use.

Share this:

Leave a reply cancel reply, discover more from life with data.

Subscribe now to keep reading and get access to the full archive.

Type your email…

Continue reading

Intro to Hypothesis Testing in Python

Chris Kucewicz

Beginner’s guide to using Python to conduct and interpret hypothesis tests

Hypothesis testing is a fundamental part of statistical analysis which allows data scientists to make inferences ( an inference is the process of drawing conclusions about a population based on sample data from the population ). In this blog post, I’ll walk through how to conduct a hypothesis test using Python in Jupyter Notebook. Whether you’re a data science beginner or looking to brush up on your skills, this guide will help you understand the process of hypothesis testing and interpreting the results.

What is Hypothesis Testing?

Hypothesis testing is a statistical method that helps us decide whether there is enough evidence to reject a null hypothesis (H_0) in favor of an alternative hypothesis (H_1). Another way to think about this is that hypothesis testing helps us determine if there is enough evidence from a sample to draw conclusions about the population from which the sample was taken. The process usually involves the following steps:

1. Define your hypotheses : Define your null and alternative hypotheses. 2. Choose a significance level: Commonly referred to as alpha (α). Alpha is typically set at 0.05, although 0.01 is also sometimes used. There are benefits and drawbacks to the alpha you pick. Read more about this: here . 3. Select the appropriate test: The test you select will depend on the available data and your hypotheses. 4. Compute the test statistic and p-value : Using the sample data. 5. Make a decision: Reject or fail to reject the null hypothesis based on the p-value and significance level.

Setting Up Your Environment

First, open Jupyter Notebook and create a new notebook for this project. Before we can start any hypothesis testing, ensure you have Python installed along with the necessary libraries. For this tutorial, we’ll be using pandas , scipy , and matplotlib . You can install these libraries using pip :

Example: One-Sample T-Test

In this post, I’ll be showing how to conduct a one-sample t-test to determine if the mean of a sample data set is significantly different from a known population mean. To learn more about one-sample t-tests, click here .

Step 1A: Import Libraries and Load Data

First, import the necessary libraries and load your data. For this example, we’ll use Numpy’s .random and .normal() methods to generate a fake data set. We use .seed() to ensure that we will consistently get the same random numbers. For more on .seed() , click here .

For this post, we create a sample data set that is normally distributed and has 150 observations with a mean of 25 and standard deviation of 5. In this example, we will assume the population mean (also known as ‘mu’) is 28.

Step 1B: Visualize the Data

While visualizing the sample data distribution is not a required step of hypothesis testing, it can lead to a better understanding of the data. Thus visualization is often recommended.

To do this, we construct a histogram of the sample data ax.hist(df['Sample Data', bins = 20, alpha = 0.7]) ( Please use caution with this line of code. The alpha parameter specified for ax.hist() controls the transparency of the histogram bins and should not be confused with our significance level (alpha) used in hypothesis testing ). Next, we plot lines to represent the population mean and sample mean using ax.axvline() .

This code outputs the following histogram:

From the histogram we can visualize the sample mean and population mean. While the histogram clearly shows that the sample mean differs from the population mean, we must be careful not to assume that this difference is significant. Visualization alone cannot help us determine if differences in the sample mean and the population mean are significant; This is why we rely on statistical tests.

Step 2: Formulate the Hypotheses

We want to test if the sample mean is significantly different from the population mean.

- Null hypothesis (H_0): The sample mean is equal to the population mean (μ = 28).

- Alternative hypothesis (H_1): The sample mean is not equal to the population mean (μ ≠ 28).

Step 3: Choose Significance Level

In this example we will set alpha = 0.05. For a experiment with reliable results, it is crucial to set your significance level prior to conducting the statistical test.

Step 4: Conduct the T-Test

Conducting a statistical test by calculating the test statistic and p-value by hand is a laborious process. Luckily for us, the stats module in scipy has a function called ttest_1samp that makes it easy for users to conduct a t-test. ttest_1samp takes two arguments — (1) the sample data and (2) the population mean — and returns a test statistic (aka ‘t-statistic’) and p-value. You can read more about ttest_1samp here .

Step 5: Interpret the Results

Now that the t-statistic and p-value have been calculated, we need to interpret the p-value to decide whether to reject or fail to reject the null hypothesis. If the p-value is less than our significance level (α = 0.05), we reject the null hypothesis. There are two options we can take to correctly interpret the results.

Option #1 : We can compare the p-value output in Step 4 to our previously set alpha level of 0.05.

- Interpretation: Based on the output, the p-value is 0.000000068998. This is much less than 0.05. Thus, we reject the null hypothesis and conclude that at a 5% significance level, there is enough evidence to support the claim that the difference between the sample mean and population mean is significant.

Option #2 : We can use a Python if statement to make the decision for us by specifying p_value < alpha :

We see that the output in Option #2 matches our interpretation from Option #1.

In this blog post, we’ve walked through conducting a one-sample t-test using Python in Jupyter Notebook. We’ve covered formulating hypotheses, performing the test, interpreting results, and visualizing the data. Hypothesis testing is a powerful tool in statistics, and Python makes it accessible and straightforward to apply in your data analysis projects.

By mastering these techniques, you can draw meaningful insights from your data and make data-driven decisions with confidence.

(A one-sample t-test is only one of the many types of statistical tests that can be performed. Click here to learn more about the different types of statistical tests and when to use them.)

- https://www.scribbr.com/statistics/statistical-tests/

- https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.ttest_1samp.html

- https://stackoverflow.com/questions/22639587/random-seed-what-does-it-do

- https://datatab.net/tutorial/one-sample-t-test

Written by Chris Kucewicz

Math nerd, baseball fan, public transit advocate. I write about my journey from math teacher to data analyst. www.linkedin.com/in/chriskucewicz/

Text to speech

Visual Design.

Upgrade to get unlimited access ($10 one off payment).

7 Tips for Beginner to Future-Proof your Machine Learning Project

LLM Prompt Engineering Techniques for Knowledge Graph Integration

Develop a Data Analytics Web App in 3 Steps

What Does ChatGPT Say About Machine Learning Trend and How Can We Prepare For It?

- Apr 14, 2022

An Interactive Guide to Hypothesis Testing in Python

Updated: Jun 12, 2022

upgrade and grab the cheatsheet from our infographics gallery

What is hypothesis testing.

Hypothesis testing is an essential part in inferential statistics where we use observed data in a sample to draw conclusions about unobserved data - often the population.

Implication of hypothesis testing:

clinical research: widely used in psychology, biology and healthcare research to examine the effectiveness of clinical trials

A/B testing: can be applied in business context to improve conversions through testing different versions of campaign incentives, website designs ...

feature selection in machine learning: filter-based feature selection methods use different statistical tests to determine the feature importance

college or university: well, if you major in statistics or data science, it is likely to appear in your exams

For a brief video walkthrough along with the blog, check out my YouTube channel.

4 Steps in Hypothesis testing

Step 1. define null and alternative hypothesis.

Null hypothesis (H0) can be stated differently depends on the statistical tests, but generalize to the claim that no difference, no relationship or no dependency exists between two or more variables.

Alternative hypothesis (H1) is contradictory to the null hypothesis and it claims that relationships exist. It is the hypothesis that we would like to prove right. However, a more conservational approach is favored in statistics where we always assume null hypothesis is true and try to find evidence to reject the null hypothesis.

Step 2. Choose the appropriate test

Common Types of Statistical Testing including t-tests, z-tests, anova test and chi-square test

T-test: compare two groups/categories of numeric variables with small sample size

Z-test: compare two groups/categories of numeric variables with large sample size

ANOVA test: compare the difference between two or more groups/categories of numeric variables

Chi-Squared test: examine the relationship between two categorical variables

Correlation test: examine the relationship between two numeric variables

Step 3. Calculate the p-value

How p value is calculated primarily depends on the statistical testing selected. Firstly, based on the mean and standard deviation of the observed sample data, we are able to derive the test statistics value (e.g. t-statistics, f-statistics). Then calculate the probability of getting this test statistics given the distribution of the null hypothesis, we will find out the p-value. We will use some examples to demonstrate this in more detail.

Step 4. Determine the statistical significance

p value is then compared against the significance level (also noted as alpha value) to determine whether there is sufficient evidence to reject the null hypothesis. The significance level is a predetermined probability threshold - commonly 0.05. If p value is larger than the threshold, it means that the value is likely to occur in the distribution when the null hypothesis is true. On the other hand, if lower than significance level, it means it is very unlikely to occur in the null hypothesis distribution - hence reject the null hypothesis.

Hypothesis Testing with Examples

Kaggle dataset “ Customer Personality Analysis” is used in this case study to demonstrate different types of statistical test. T-test, ANOVA and Chi-Square test are sensitive to large sample size, and almost certainly will generate very small p-value when sample size is large . Therefore, I took a random sample (size of 100) from the original data:

T-test is used when we want to test the relationship between a numeric variable and a categorical variable.There are three main types of t-test.

one sample t-test: test the mean of one group against a constant value

two sample t-test: test the difference of means between two groups

paired sample t-test: test the difference of means between two measurements of the same subject

For example, if I would like to test whether “Recency” (the number of days since customer’s last purchase - numeric value) contributes to the prediction of “Response” (whether the customer accepted the offer in the last campaign - categorical value), I can use a two sample t-test.

The first sample would be the “Recency” of customers who accepted the offer:

The second sample would be the “Recency” of customers who rejected the offer:

To compare the “Recency” of these two groups intuitively, we can use histogram (or distplot) to show the distributions.

It appears that positive response have lower Recency compared to negative response. To quantify the difference and make it more scientific, let’s follow the steps in hypothesis testing and carry out a t-test.

Step1. define null and alternative hypothesis

null: there is no difference in Recency between the customers who accepted the offer in the last campaign and who did not accept the offer

alternative: customers who accepted the offer has lower Recency compared to customers who did not accept the offer

Step 2. choose the appropriate test

To test the difference between two independent samples, two-sample t-test is the most appropriate statistical test which follows student t-distribution. The shape of student-t distribution is determined by the degree of freedom, calculated as the sum of two sample size minus 2.

In python, simply import the library scipy.stats and create the t-distribution as below.

Step 3. calculate the p-value

There are some handy functions in Python calculate the probability in a distribution. For any x covered in the range of the distribution, pdf(x) is the probability density function of x — which can be represented as the orange line below, and cdf(x) is the cumulative density function of x — which can be seen as the cumulative area. In this example, we are testing the alternative hypothesis that — Recency of positive response minus the Recency of negative response is less than 0. Therefore we should use a one-tail test and compare the t-statistics we get against the lowest value in this distribution — therefore p-value can be calculated as cdf(t_statistics) in this case.

ttest_ind() is a handy function for independent t-test in python that has done all of these for us automatically. Pass two samples rececency_P and recency_N as the parameters, and we get the t-statistics and p-value.

Here I use plotly to visualize the p-value in t-distribution. Hover over the line and see how point probability and p-value changes as the x shifts. The area with filled color highlights the p-value we get for this specific test.

Check out the code in our Code Snippet section, if you want to build this yourself.

An interactive visualization of t-distribution with t-statistics vs. significance level.

Step 4. determine the statistical significance

The commonly used significance level threshold is 0.05. Since p-value here (0.024) is smaller than 0.05, we can say that it is statistically significant based on the collected sample. A lower Recency of customer who accepted the offer is likely not occur by chance. This indicates the feature “Response” may be a strong predictor of the target variable “Recency”. And if we would perform feature selection for a model predicting the "Recency" value, "Response" is likely to have high importance.

Now that we know t-test is used to compare the mean of one or two sample groups. What if we want to test more than two samples? Use ANOVA test.

ANOVA examines the difference among groups by calculating the ratio of variance across different groups vs variance within a group . Larger ratio indicates that the difference across groups is a result of the group difference rather than just random chance.

As an example, I use the feature “Kidhome” for the prediction of “NumWebPurchases”. There are three values of “Kidhome” - 0, 1, 2 which naturally forms three groups.

Firstly, visualize the data. I found box plot to be the most aligned visual representation of ANOVA test.

It appears there are distinct differences among three groups. So let’s carry out ANOVA test to prove if that’s the case.

1. define hypothesis:

null hypothesis: there is no difference among three groups

alternative hypothesis: there is difference between at least two groups

2. choose the appropriate test: ANOVA test for examining the relationships of numeric values against a categorical value with more than two groups. Similar to t-test, the null hypothesis of ANOVA test also follows a distribution defined by degrees of freedom. The degrees of freedom in ANOVA is determined by number of total samples (n) and the number of groups (k).

dfn = n - 1

dfd = n - k

3. calculate the p-value: To calculate the p-value of the f-statistics, we use the right tail cumulative area of the f-distribution, which is 1 - rv.cdf(x).

To easily get the f-statistics and p-value using Python, we can use the function stats.f_oneway() which returns p-value: 0.00040.

An interactive visualization of f-distribution with f-statistics vs. significance level. (Check out the code in our Code Snippet section, if you want to build this yourself. )

4. determine the statistical significance : Compare the p-value against the significance level 0.05, we can infer that there is strong evidence against the null hypothesis and very likely that there is difference in “NumWebPurchases” between at least two groups.

Chi-Squared Test

Chi-Squared test is for testing the relationship between two categorical variables. The underlying principle is that if two categorical variables are independent, then one categorical variable should have similar composition when the other categorical variable change. Let’s look at the example of whether “Education” and “Response” are independent.

First, use stacked bar chart and contingency table to summary the count of each category.

If these two variables are completely independent to each other (null hypothesis is true), then the proportion of positive Response and negative Response should be the same across all Education groups. It seems like composition are slightly different, but is it significant enough to say there is dependency - let’s run a Chi-Squared test.

null hypothesis: “Education” and “Response” are independent to each other.

alternative hypothesis: “Education” and “Response” are dependent to each other.

2. choose the appropriate test: Chi-Squared test is chosen and you probably found a pattern here, that Chi-distribution is also determined by the degree of freedom which is (row - 1) x (column - 1).

3. calculate the p-value: p value is calculated as the right tail cumulative area: 1 - rv.cdf(x).

Python also provides a useful function to get the chi statistics and p-value given the contingency table.

An interactive visualization of chi-distribution with chi-statistics vs. significance level. (Check out the code in our Code Snippet section, if you want to build this yourself. )

4. determine the statistical significanc e: the p-value here is 0.41, suggesting that it is not statistical significant. Therefore, we cannot reject the null hypothesis that these two categorical variables are independent. This further indicates that “Education” may not be a strong predictor of “Response”.

Thanks for reaching so far, we have covered a lot of contents in this article but still have two important hypothesis tests that are worth discussing separately in upcoming posts.

z-test: test the difference between two categories of numeric variables - when sample size is LARGE

correlation: test the relationship between two numeric variables

Hope you found this article helpful. If you’d like to support my work and see more articles like this, treat me a coffee ☕️ by signing up Premium Membership with $10 one-off purchase.

Take home message.

In this article, we interactively explore and visualize the difference between three common statistical tests: t-test, ANOVA test and Chi-Squared test. We also use examples to walk through essential steps in hypothesis testing:

1. define the null and alternative hypothesis

2. choose the appropriate test

3. calculate the p-value

4. determine the statistical significance

- Data Science

Recent Posts

How to Self Learn Data Science in 2022

- Scipy lecture notes »

- 3. Packages and applications »

- Edit Improve this page: Edit it on Github.

3.1. Statistics in Python ¶

Author : Gaël Varoquaux

Requirements

- Standard scientific Python environment (numpy, scipy, matplotlib)

- Statsmodels

To install Python and these dependencies, we recommend that you download Anaconda Python or Enthought Canopy , or preferably use the package manager if you are under Ubuntu or other linux.

- Bayesian statistics in Python : This chapter does not cover tools for Bayesian statistics. Of particular interest for Bayesian modelling is PyMC , which implements a probabilistic programming language in Python.

- Read a statistics book : The Think stats book is available as free PDF or in print and is a great introduction to statistics.

Why Python for statistics?

R is a language dedicated to statistics. Python is a general-purpose language with statistics modules. R has more statistical analysis features than Python, and specialized syntaxes. However, when it comes to building complex analysis pipelines that mix statistics with e.g. image analysis, text mining, or control of a physical experiment, the richness of Python is an invaluable asset.

- Data as a table

- The pandas data-frame

- Student’s t-test: the simplest statistical test

- Paired tests: repeated measurements on the same individuals

- “formulas” to specify statistical models in Python

- Multiple Regression: including multiple factors

- Post-hoc hypothesis testing: analysis of variance (ANOVA)

- Pairplot: scatter matrices

- lmplot: plotting a univariate regression

- Testing for interactions

- Full code for the figures

- Solutions to this chapter’s exercises

In this document, the Python inputs are represented with the sign “>>>”.

Disclaimer: Gender questions

Some of the examples of this tutorial are chosen around gender questions. The reason is that on such questions controlling the truth of a claim actually matters to many people.

3.1.1. Data representation and interaction ¶

3.1.1.1. data as a table ¶.

The setting that we consider for statistical analysis is that of multiple observations or samples described by a set of different attributes or features . The data can than be seen as a 2D table, or matrix, with columns giving the different attributes of the data, and rows the observations. For instance, the data contained in examples/brain_size.csv :

3.1.1.2. The pandas data-frame ¶

We will store and manipulate this data in a pandas.DataFrame , from the pandas module. It is the Python equivalent of the spreadsheet table. It is different from a 2D numpy array as it has named columns, can contain a mixture of different data types by column, and has elaborate selection and pivotal mechanisms.

Creating dataframes: reading data files or converting arrays ¶

It is a CSV file, but the separator is “;”

Reading from a CSV file: Using the above CSV file that gives observations of brain size and weight and IQ (Willerman et al. 1991), the data are a mixture of numerical and categorical values:

Missing values

The weight of the second individual is missing in the CSV file. If we don’t specify the missing value (NA = not available) marker, we will not be able to do statistical analysis.

Creating from arrays : A pandas.DataFrame can also be seen as a dictionary of 1D ‘series’, eg arrays or lists. If we have 3 numpy arrays:

We can expose them as a pandas.DataFrame :

Other inputs : pandas can input data from SQL, excel files, or other formats. See the pandas documentation .

Manipulating data ¶

data is a pandas.DataFrame , that resembles R’s dataframe:

For a quick view on a large dataframe, use its describe method: pandas.DataFrame.describe() .

groupby : splitting a dataframe on values of categorical variables:

groupby_gender is a powerful object that exposes many operations on the resulting group of dataframes:

Use tab-completion on groupby_gender to find more. Other common grouping functions are median, count (useful for checking to see the amount of missing values in different subsets) or sum. Groupby evaluation is lazy, no work is done until an aggregation function is applied.

What is the mean value for VIQ for the full population?

How many males/females were included in this study?

Hint use ‘tab completion’ to find out the methods that can be called, instead of ‘mean’ in the above example.

What is the average value of MRI counts expressed in log units, for males and females?

groupby_gender.boxplot is used for the plots above (see this example ).

Plotting data ¶

Pandas comes with some plotting tools ( pandas.tools.plotting , using matplotlib behind the scene) to display statistics of the data in dataframes:

Scatter matrices :

Two populations

The IQ metrics are bimodal, as if there are 2 sub-populations.

Plot the scatter matrix for males only, and for females only. Do you think that the 2 sub-populations correspond to gender?

3.1.2. Hypothesis testing: comparing two groups ¶

For simple statistical tests , we will use the scipy.stats sub-module of scipy :

Scipy is a vast library. For a quick summary to the whole library, see the scipy chapter.

3.1.2.1. Student’s t-test: the simplest statistical test ¶

1-sample t-test: testing the value of a population mean ¶.

scipy.stats.ttest_1samp() tests if the population mean of data is likely to be equal to a given value (technically if observations are drawn from a Gaussian distributions of given population mean). It returns the T statistic , and the p-value (see the function’s help):

With a p-value of 10^-28 we can claim that the population mean for the IQ (VIQ measure) is not 0.

2-sample t-test: testing for difference across populations ¶

We have seen above that the mean VIQ in the male and female populations were different. To test if this is significant, we do a 2-sample t-test with scipy.stats.ttest_ind() :

3.1.2.2. Paired tests: repeated measurements on the same individuals ¶

PIQ, VIQ, and FSIQ give 3 measures of IQ. Let us test if FISQ and PIQ are significantly different. We can use a 2 sample test:

The problem with this approach is that it forgets that there are links between observations: FSIQ and PIQ are measured on the same individuals. Thus the variance due to inter-subject variability is confounding, and can be removed, using a “paired test”, or “repeated measures test” :

This is equivalent to a 1-sample test on the difference:

T-tests assume Gaussian errors. We can use a Wilcoxon signed-rank test , that relaxes this assumption:

The corresponding test in the non paired case is the Mann–Whitney U test , scipy.stats.mannwhitneyu() .

- Test the difference between weights in males and females.

- Use non parametric statistics to test the difference between VIQ in males and females.

Conclusion : we find that the data does not support the hypothesis that males and females have different VIQ.

3.1.3. Linear models, multiple factors, and analysis of variance ¶

3.1.3.1. “formulas” to specify statistical models in python ¶, a simple linear regression ¶.

Given two set of observations, x and y , we want to test the hypothesis that y is a linear function of x . In other terms:

where e is observation noise. We will use the statsmodels module to:

- Fit a linear model. We will use the simplest strategy, ordinary least squares (OLS).

- Test that coef is non zero.

First, we generate simulated data according to the model:

“formulas” for statistics in Python

See the statsmodels documentation

Then we specify an OLS model and fit it:

We can inspect the various statistics derived from the fit:

Terminology:

Statsmodels uses a statistical terminology: the y variable in statsmodels is called ‘endogenous’ while the x variable is called exogenous. This is discussed in more detail here .

To simplify, y (endogenous) is the value you are trying to predict, while x (exogenous) represents the features you are using to make the prediction.

Retrieve the estimated parameters from the model above. Hint : use tab-completion to find the relevent attribute.

Categorical variables: comparing groups or multiple categories ¶

Let us go back the data on brain size:

We can write a comparison between IQ of male and female using a linear model:

Tips on specifying model

Forcing categorical : the ‘Gender’ is automatically detected as a categorical variable, and thus each of its different values are treated as different entities.

An integer column can be forced to be treated as categorical using:

Intercept : We can remove the intercept using - 1 in the formula, or force the use of an intercept using + 1 .

By default, statsmodels treats a categorical variable with K possible values as K-1 ‘dummy’ boolean variables (the last level being absorbed into the intercept term). This is almost always a good default choice - however, it is possible to specify different encodings for categorical variables ( http://statsmodels.sourceforge.net/devel/contrasts.html ).

Link to t-tests between different FSIQ and PIQ

To compare different types of IQ, we need to create a “long-form” table, listing IQs, where the type of IQ is indicated by a categorical variable:

We can see that we retrieve the same values for t-test and corresponding p-values for the effect of the type of iq than the previous t-test:

3.1.3.2. Multiple Regression: including multiple factors ¶

Consider a linear model explaining a variable z (the dependent variable) with 2 variables x and y :

Such a model can be seen in 3D as fitting a plane to a cloud of ( x , y , z ) points.

Example: the iris data ( examples/iris.csv )

Sepal and petal size tend to be related: bigger flowers are bigger! But is there in addition a systematic effect of species?

3.1.3.3. Post-hoc hypothesis testing: analysis of variance (ANOVA) ¶

In the above iris example, we wish to test if the petal length is different between versicolor and virginica, after removing the effect of sepal width. This can be formulated as testing the difference between the coefficient associated to versicolor and virginica in the linear model estimated above (it is an Analysis of Variance, ANOVA ). For this, we write a vector of ‘contrast’ on the parameters estimated: we want to test "name[T.versicolor] - name[T.virginica]" , with an F-test :

Is this difference significant?

Going back to the brain size + IQ data, test if the VIQ of male and female are different after removing the effect of brain size, height and weight.

3.1.4. More visualization: seaborn for statistical exploration ¶

Seaborn combines simple statistical fits with plotting on pandas dataframes.

Let us consider a data giving wages and many other personal information on 500 individuals ( Berndt, ER. The Practice of Econometrics. 1991. NY: Addison-Wesley ).

The full code loading and plotting of the wages data is found in corresponding example .

3.1.4.1. Pairplot: scatter matrices ¶

We can easily have an intuition on the interactions between continuous variables using seaborn.pairplot() to display a scatter matrix:

Categorical variables can be plotted as the hue:

Look and feel and matplotlib settings

Seaborn changes the default of matplotlib figures to achieve a more “modern”, “excel-like” look. It does that upon import. You can reset the default using:

To switch back to seaborn settings, or understand better styling in seaborn, see the relevent section of the seaborn documentation .

3.1.4.2. lmplot: plotting a univariate regression ¶

A regression capturing the relation between one variable and another, eg wage and eduction, can be plotted using seaborn.lmplot() :

Robust regression

Given that, in the above plot, there seems to be a couple of data points that are outside of the main cloud to the right, they might be outliers, not representative of the population, but driving the regression.

To compute a regression that is less sentive to outliers, one must use a robust model . This is done in seaborn using robust=True in the plotting functions, or in statsmodels by replacing the use of the OLS by a “Robust Linear Model”, statsmodels.formula.api.rlm() .

3.1.5. Testing for interactions ¶

Do wages increase more with education for males than females?

The plot above is made of two different fits. We need to formulate a single model that tests for a variance of slope across the two populations. This is done via an “interaction” .

Can we conclude that education benefits males more than females?

Take home messages

- Hypothesis testing and p-values give you the significance of an effect / difference.

- Formulas (with categorical variables) enable you to express rich links in your data.

- Visualizing your data and fitting simple models give insight into the data.

- Conditionning (adding factors that can explain all or part of the variation) is an important modeling aspect that changes the interpretation.

3.1.6. Full code for the figures ¶

Code examples for the statistics chapter.

Boxplots and paired differences

Plotting simple quantities of a pandas dataframe

Analysis of Iris petal and sepal sizes

Simple Regression

Multiple Regression

Test for an education/gender interaction in wages

Visualizing factors influencing wages

Air fares before and after 9/11

3.1.7. Solutions to this chapter’s exercises ¶

Relating Gender and IQ

Gallery generated by Sphinx-Gallery

Table Of Contents

- 3.1.1.1. Data as a table

- Creating dataframes: reading data files or converting arrays

- Manipulating data

- Plotting data

- 1-sample t-test: testing the value of a population mean

- 2-sample t-test: testing for difference across populations

- 3.1.2.2. Paired tests: repeated measurements on the same individuals

- A simple linear regression

- Categorical variables: comparing groups or multiple categories

- 3.1.3.2. Multiple Regression: including multiple factors

- 3.1.3.3. Post-hoc hypothesis testing: analysis of variance (ANOVA)

- 3.1.4.1. Pairplot: scatter matrices

- 3.1.4.2. lmplot: plotting a univariate regression

- 3.1.5. Testing for interactions

- 3.1.6. Full code for the figures

- 3.1.7. Solutions to this chapter’s exercises

Previous topic

3. Packages and applications

3.1.6.1. Boxplots and paired differences

- Show Source

Quick search

Hypothesis Testing using Python

- March 25, 2024

- Machine Learning

Hypothesis Testing is a statistical method used to make inferences or decisions about a population based on sample data. It starts with a null hypothesis (H0), which represents a default stance or no effect, and an alternative hypothesis (H1 or Ha), which represents what we aim to prove or expect to find. The process involves using sample data to determine whether to reject the null hypothesis in favor of the alternative hypothesis, based on the likelihood of observing the sample data under the null hypothesis. So, if you want to learn how to perform Hypothesis Testing, this article is for you. In this article, I’ll take you through the task of Hypothesis Testing using Python.

Hypothesis Testing: Process We Can Follow

So, Hypothesis Testing is a fundamental process in data science for making data-driven decisions and inferences about populations based on sample data. Below is the process we can follow for the task of Hypothesis Testing:

- Gather the necessary data required for the hypothesis test.

- Define Null (H0) and Alternative Hypothesis (H1 or Ha).

- Choose the Significance Level (α) , which is the probability of rejecting the null hypothesis when it is true.

- Select the appropriate statistical tests. Examples include t-tests for comparing means, chi-square tests for categorical data, and ANOVA for comparing means across more than two groups.

- Perform the chosen statistical test on your data.

- Determine the p-value and interpret the results of your statistical tests.

To get started with Hypothesis Testing, we need appropriate data. I found an ideal dataset for this task. You can download the dataset from here .

Now, let’s get started with the task of Hypothesis Testing by importing the necessary Python libraries and the dataset :

So, the dataset is based on the performance of two themes on a website. Our task is to find which theme performs better using Hypothesis Testing. Let’s go through the summary of the dataset, including the number of records, the presence of missing values, and basic statistics for the numerical columns:

The dataset contains 1,000 records across 10 columns, with no missing values. Here’s a quick summary of the numerical columns:

- Click Through Rate : Ranges from about 0.01 to 0.50 with a mean of approximately 0.26.

- Conversion Rate : Also ranges from about 0.01 to 0.50 with a mean close to the Click Through Rate, approximately 0.25.

- Bounce Rate : Varies between 0.20 and 0.80, with a mean around 0.51.

- Scroll Depth : Shows a spread from 20.01 to nearly 80, with a mean of 50.32.

- Age : The age of users ranges from 18 to 65 years, with a mean age of about 41.5 years.

- Session Duration : This varies widely from 38 seconds to nearly 1800 seconds (30 minutes), with a mean session duration of approximately 925 seconds (about 15 minutes).

Now, let’s move on to comparing the performance of both themes based on the provided metrics. We’ll look into the average Click Through Rate, Conversion Rate, Bounce Rate, and other relevant metrics for each theme. Afterwards, we can perform hypothesis testing to identify if there’s a statistically significant difference between the themes:

The comparison between the Light Theme and Dark Theme on average performance metrics reveals the following insights:

- Click Through Rate (CTR) : The Dark Theme has a slightly higher average CTR (0.2645) compared to the Light Theme (0.2471).

- Conversion Rate : The Light Theme leads with a marginally higher average Conversion Rate (0.2555) compared to the Dark Theme (0.2513).

- Bounce Rate : The Bounce Rate is slightly higher for the Dark Theme (0.5121) than for the Light Theme (0.4990).

- Scroll Depth : Users on the Light Theme scroll slightly further on average (50.74%) compared to those on the Dark Theme (49.93%).

- Age : The average age of users is similar across themes, with the Light Theme at approximately 41.73 years and the Dark Theme at 41.33 years.

- Session Duration : The average session duration is slightly longer for users on the Light Theme (930.83 seconds) than for those on the Dark Theme (919.48 seconds).

From these insights, it appears that the Light Theme slightly outperforms the Dark Theme in terms of Conversion Rate, Bounce Rate, Scroll Depth, and Session Duration, while the Dark Theme leads in Click Through Rate. However, the differences are relatively minor across all metrics.

Getting Started with Hypothesis Testing

We’ll use a significance level (alpha) of 0.05 for our hypothesis testing. It means we’ll consider a result statistically significant if the p-value from our test is less than 0.05.

Let’s start with hypothesis testing based on the Conversion Rate between the Light Theme and Dark Theme. Our hypotheses are as follows:

- Null Hypothesis (H0): There is no difference in Conversion Rates between the Light Theme and Dark Theme.

- Alternative Hypothesis (Ha): There is a difference in Conversion Rates between the Light Theme and Dark Theme.

We’ll use a two-sample t-test to compare the means of the two independent samples. Let’s proceed with the test:

The result of the two-sample t-test gives a p-value of approximately 0.635. Since this p-value is much greater than our significance level of 0.05, we do not have enough evidence to reject the null hypothesis. Therefore, we conclude that there is no statistically significant difference in Conversion Rates between the Light Theme and Dark Theme based on the data provided.

Now, let’s conduct hypothesis testing based on the Click Through Rate (CTR) to see if there’s a statistically significant difference between the Light Theme and Dark Theme regarding how often users click through. Our hypotheses remain structured similarly:

- Null Hypothesis (H0): There is no difference in Click Through Rates between the Light Theme and Dark Theme.

- Alternative Hypothesis (Ha): There is a difference in Click Rates between the Light Theme and Dark Theme.

We’ll perform a two-sample t-test on the CTR for both themes. Let’s proceed with the calculation:

The two-sample t-test for the Click Through Rate (CTR) between the Light Theme and Dark Theme yields a p-value of approximately 0.048. This p-value is slightly below our significance level of 0.05, indicating that there is a statistically significant difference in Click Through Rates between the Light Theme and Dark Theme, with the Dark Theme likely having a higher CTR given the direction of the test statistic.

Now, let’s perform Hypothesis Testing based on two other metrics: bounce rate and scroll depth, which are important metrics for analyzing the performance of a theme or a design on a website. I’ll first perform these statistical tests and then create a table to show the report of all the tests we have done:

So, here’s a table comparing the performance of the Light Theme and Dark Theme across various metrics based on hypothesis testing:

- Click Through Rate : The test reveals a statistically significant difference, with the Dark Theme likely performing better (P-Value = 0.048).

- Conversion Rate : No statistically significant difference was found (P-Value = 0.635).

- Bounce Rate : There’s no statistically significant difference in Bounce Rates between the themes (P-Value = 0.230).

- Scroll Depth : Similarly, no statistically significant difference is observed in Scroll Depths (P-Value = 0.450).

In summary, while the two themes perform similarly across most metrics, the Dark Theme has a slight edge in terms of engaging users to click through. For other key performance indicators like Conversion Rate, Bounce Rate, and Scroll Depth, the choice between a Light Theme and a Dark Theme does not significantly affect user behaviour according to the data provided.

So, Hypothesis Testing is a statistical method used to make inferences or decisions about a population based on sample data. It starts with a null hypothesis (H0), which represents a default stance or no effect, and an alternative hypothesis (H1 or Ha), which represents what we aim to prove or expect to find. The process involves using sample data to determine whether to reject the null hypothesis in favor of the alternative hypothesis, based on the likelihood of observing the sample data under the null hypothesis.

I hope you liked this article on Hypothesis Testing using Python. Feel free to ask valuable questions in the comments section below. You can follow me on Instagram for many more resources.

Aman Kharwal

Data Strategist at Statso. My aim is to decode data science for the real world in the most simple words.

Recommended For You

Business Concepts Every Data Scientist Should Know

- September 6, 2024

AI & ML Roadmap

- September 4, 2024

SQL Subqueries Guide

- September 3, 2024

Web Scraping from Amazon with Python

- September 2, 2024

One comment

Thank you for the explanation, it’s very useful!

May I ask why we use equal_var = False in the t-tests? If I understand well, it means, we use the Welch’s t-test instead of normal t-test — why is it needed?

On the other hand, I thought we need to do a Levene’s test first – why we don’t need it now?

Thank you for your kind reply. Anita

Leave a Reply Cancel reply

Discover more from thecleverprogrammer.

Subscribe now to keep reading and get access to the full archive.

Type your email…

Continue reading

- Subscription

Tutorial: Text Analysis in Python to Test a Hypothesis

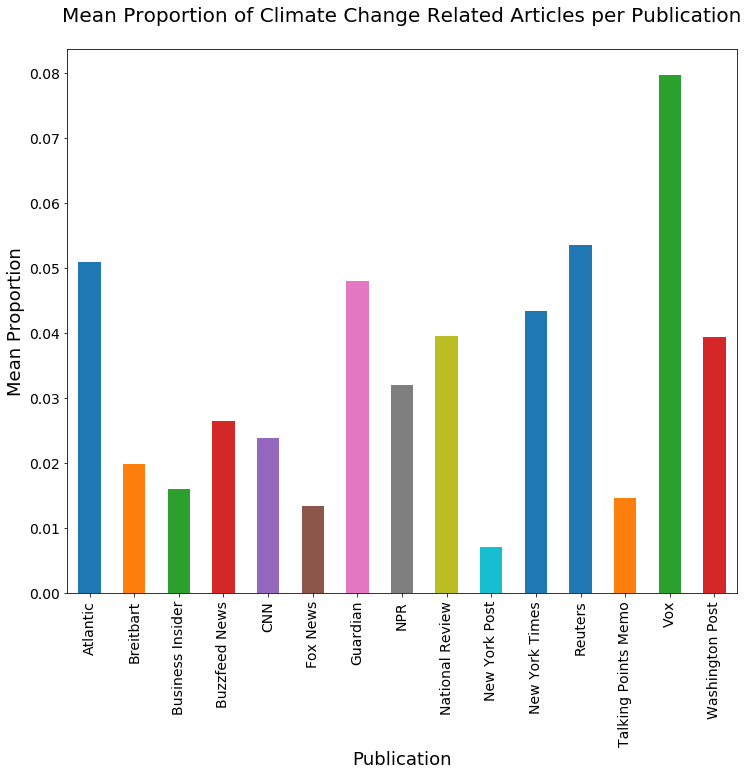

People often complain about important subjects being covered too little in the news. One such subject is climate change. The scientific consensus is that this is an important problem, and it stands to reason that the more people are aware of it, the better our chances may be of solving it. But how can we assess how widely covered climate change is by various media outlets? We can use Python to do some text analysis!

Specifically, in this post, we'll try to answer some questions about which news outlets are giving climate change the most coverage. At the same time, we'll learn some of the programming skills required to analyze text data in Python and test a hypothesis related to that data.

This tutorial assumes that you’re fairly familiar with Python and the popular data science package pandas. If you'd like to brush up on pandas, check out this post, and if you need to build a more thorough foundation, Dataquest's data science courses cover all of the Python and pandas fundamentals in more depth.

Finding & Exploring our Data Set

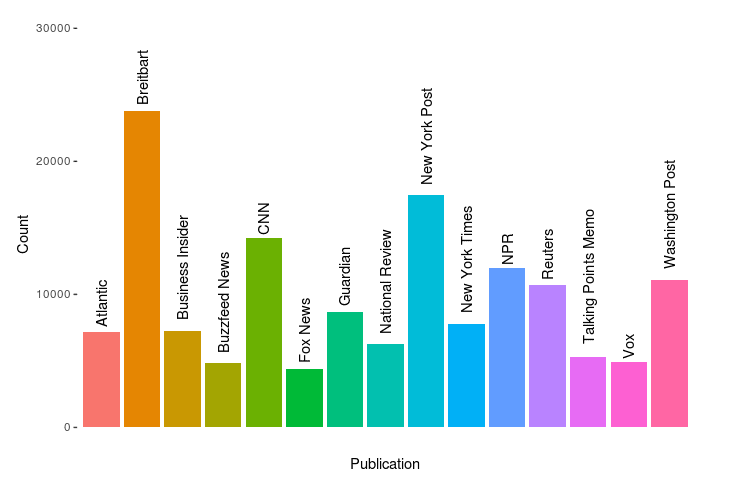

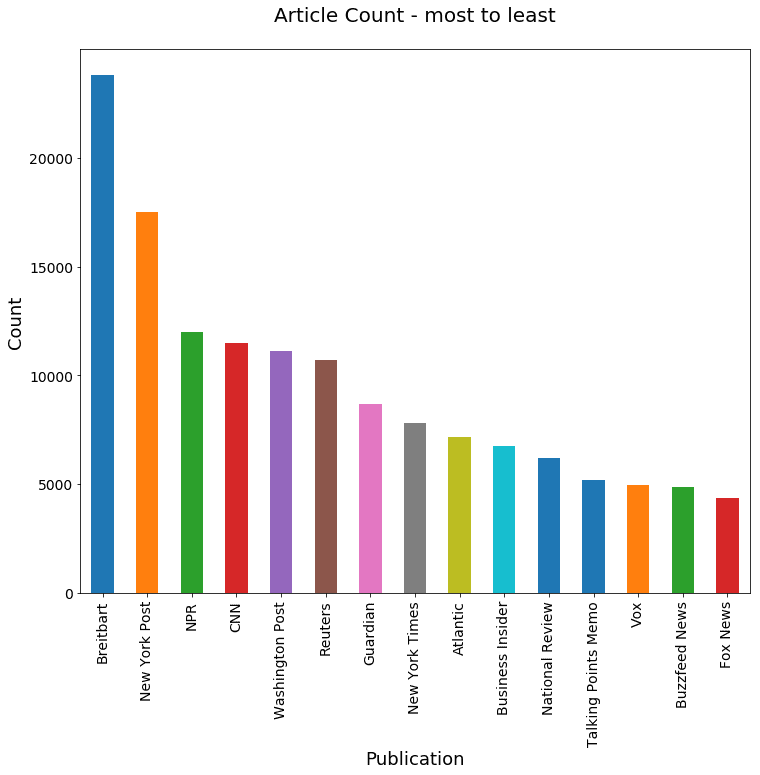

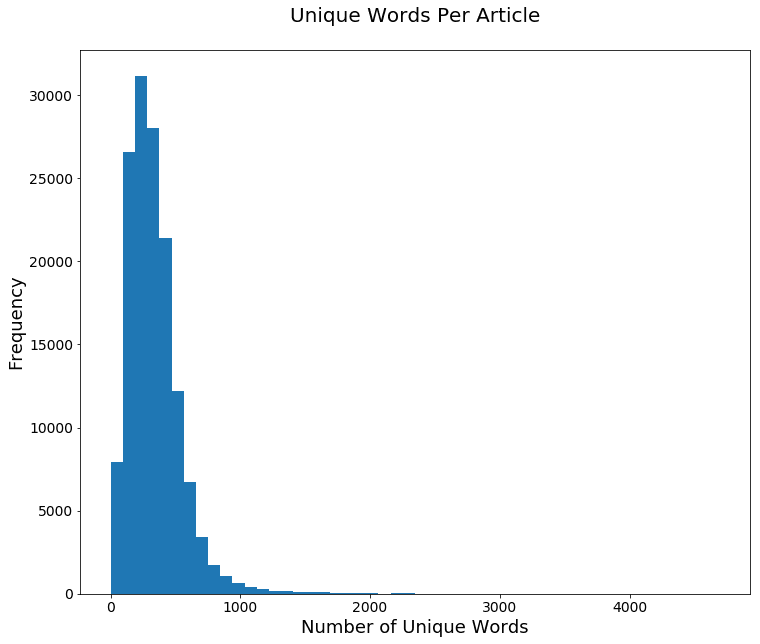

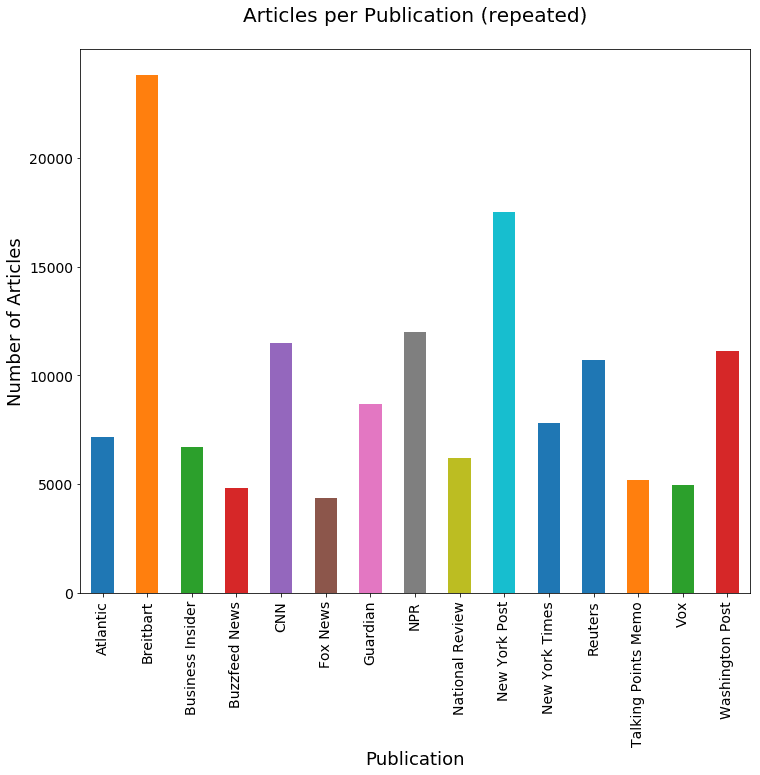

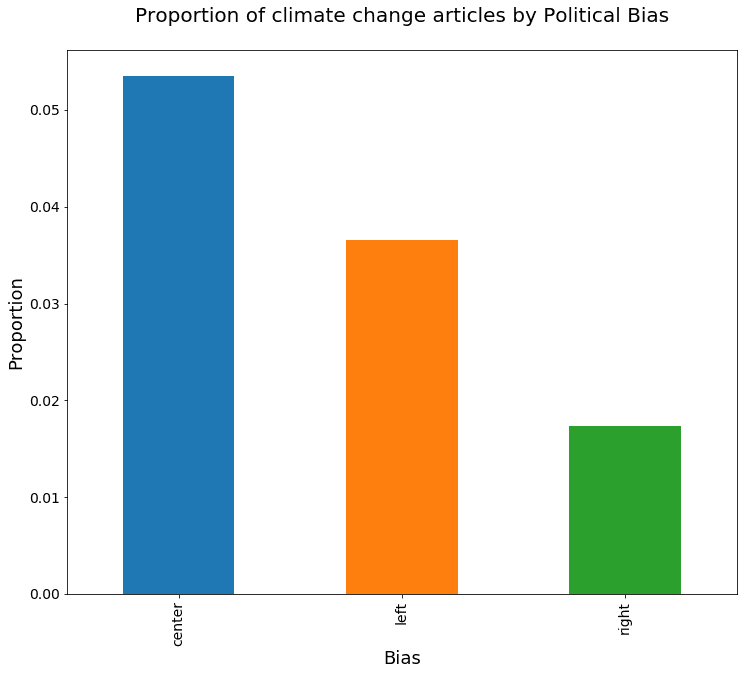

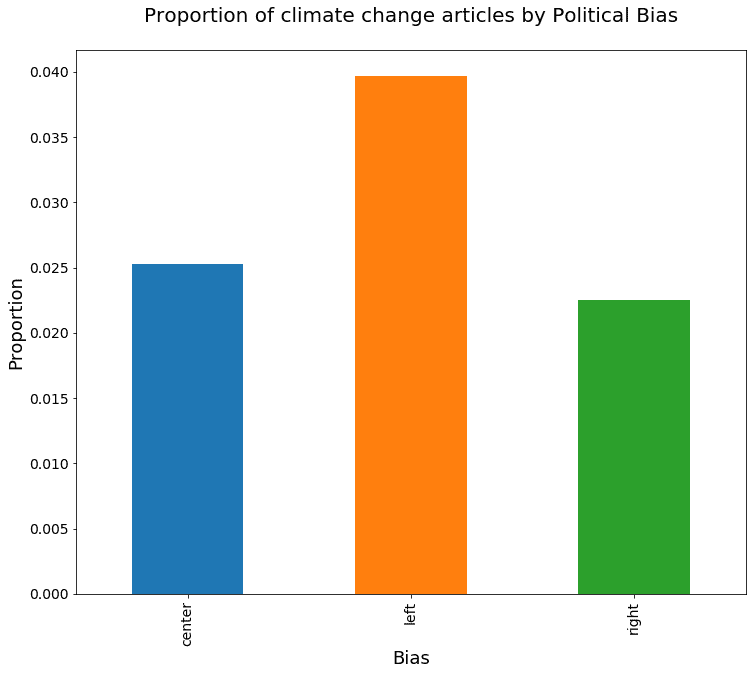

For this post we’ll use a news data set from Kaggle provided by Andrew Thompson (no relation). This data set contains over 142,000 articles from 15 sources mostly from 2016 and 2017, and is split into three different csv files. Here is the article count as displayed on the Kaggle overview page by Andrew:

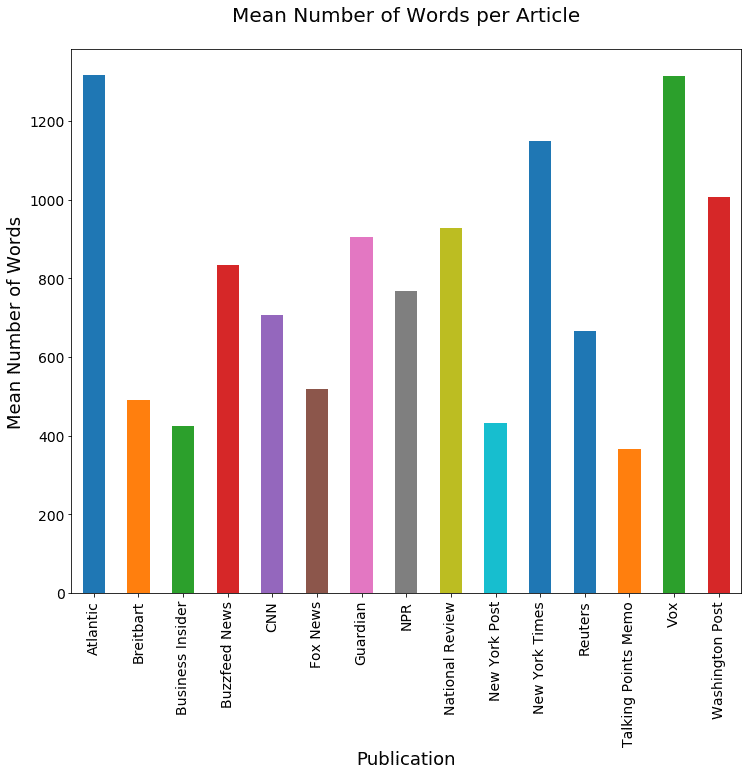

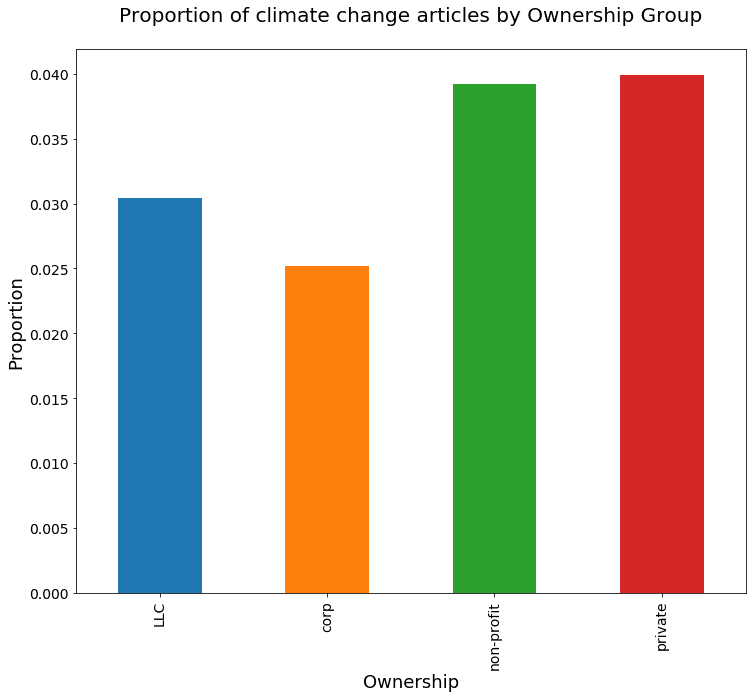

We’ll work on reproducing our own version of this later. But one of the things that might be interesting to look at is the correlation, if any, between the characteristics of these news outlets and the proportion of climate-change-related articles they publish.

Some interesting characteristics we could look at include ownership (independent, non-profit, or corporate) and political leanings, if any. Below, I've done some preliminary research, collecting information from Wikipedia and the providers' own web pages.

I also found two websites that rate publications for their liberal vs conservative bias, allsides.com and mediabiasfactcheck.com, so I've collected some information about political leanings from there.

- Owner: Atlantic Media; majority stake recently sold to Emerson collective, a non-profit founded by Powell Jobs, widow of Steve Jobs

- Owner: Breitbart News Network, LLC

- Founded by a conservative commentator

- Owner: Alex Springer SE (publishing house in Europe)

- Center / left-center

- Private, Jonah Peretti CEO & Kenneth Lerer, executive chair (latter also co-founder of Huffington Post)

- Turner Broadcasting System, mass media

- TBS itself is owned by Time Warner

- Fox entertainment group, mass media

- Lean right / right

- Guardian Media Group (UK), mass media

- Owned by Scott Trust Limited

- National Review Institute, a non-profit

- Founded by William F Buckley Jr

- News corp, mass media

- Right / right center

- NY Times Company

- Thomson Reuters Corporation (Canadian multinational mass media)

- Josh Marshall, independent

- Nash Holdings LLC, controlled by J. Bezos

- Vox Media, multinational

- Lean left / left

Looking this over, we might hypothesize that right-leaning Breitbart, for example, would have a lower proportion of climate related articles than, say, NPR.

We can turn this into a formal hypothesis statement and will do that later in the post. But first, let’s dive deeper into the data. A terminology note: in the computational linguistics and NLP communities, a text collection such as this is called a corpus , so we'll use that terminology here when talking about our text data set.

Exploratory Data Analysis, or EDA, is an important part of any Data Science project. It usually involves analyzing and visualizing the data in various ways to look for patterns before proceeding with more in-depth analysis. In this case, though, we're working with text data rather than numerical data, which makes things a bit different.