Data Science Interview Practice: Machine Learning Case Study

A common interview type for data scientists and machine learning engineers is the machine learning case study. In it, the interviewer will ask a question about how the candidate would build a certain model. These questions can be challenging for new data scientists because the interview is open-ended and new data scientists often lack practical experience building and shipping product-quality models.

I have a lot of practice with these types of interviews as a result of my time at Insight, my many experiences interviewing for jobs, and my role in designing and implementing Intuit’s data science interview. Similar to my last article where I put together an example data manipulation interview practice problem, this time I will walk through a practice case study and how I would work through it.

My Approach

Case study interviews are just conversations. This can make them tougher than they need to be for junior data scientists because they lack the obvious structure of a coding interview or data manipulation interview. I find it’s helpful to impose my own structure on the conversation by approaching it in this order:

- Problem: Dive in with the interviewer and explore what the problem is. Look for edge cases or simple and high-impact parts of the problem that you might be able to close out quickly.

- Metrics: Once you have determined the scope and parameters of the problem you’re trying to solve, figure out how you will measure success. Focus on what is important to the business and not just what is easy to measure.

- Data: Figure out what data is available to solve the problem. The interviewer might give you a couple of examples, but ask about additional information sources. If you know of some public data that might be useful, bring it up here too.

- Labels and Features: Using the data sources you discussed, what features would you build? If you are attacking a supervised classification problem, how would you generate labels? How would you see if they were useful?

- Model: Now that you have a metric, data, features, and labels, what model is a good fit? Why? How would you train it? What do you need to watch out for?

- Validation: How would you make sure your model works offline? What data would you hold out to test that your model works as expected? What metrics would you measure?

- Deployment and Monitoring: Having developed a model you are comfortable with, how would you deploy it? Does it need to be real-time or is it sufficient to batch inputs and periodically run the model? How would you check performance in production? How would you monitor for model drift, where its performance changes over time?

Here is the prompt:

At Twitter, bad actors occasionally use automated accounts, known as “bots”, to abuse our platform. How would you build a system to help detect bot accounts?

At the start of the interview I try to fully explore the bounds of the problem, which is often open ended. My goal with this part of the interview is to:

- Understand the problem and all the edge cases.

- Come to an agreement with the interviewer on the scope—narrower is better!—of the problem to solve.

- Demonstrate any knowledge I have on the subject, especially from researching the company previously.

Our Twitter bot prompt has a lot of angles from which we could attack. I know Twitter has dozens of types of bots, ranging from my harmless Raspberry Pi bots, to “Russian Bots” trying to influence elections, to bots spreading spam. I would pick one problem to focus on using my best guess as to business impact. In this case spam bots are likely a problem that causes measurable harm (drives users away, drives advertisers away). Russian bots are probably a bigger issue in terms of public perception, but that’s much harder to measure.

After deciding on the scope, I would ask more about the systems they currently have to deal with spam bots. Twitter likely has an ops team to help identify spam and block accounts, and they may even have a rules-based system. Those systems will be a good source of data about the bad actors, and they likely also have metrics they track for this problem.

Having agreed on what part of the problem to focus on, we now turn to how we are going to measure our impact. There is no point shipping a model if you can’t measure how it’s affecting the business.

Metrics and model use go hand-in-hand, so first we have to agree on what the model will be used for. For spam we could use the model to just mark suspected accounts for human review and tracking, or we could outright block accounts based on the model result. If we pick the human review option, it’s probably more important to get all the bots even if some good customers are affected. If we go with immediate action, it is likely more important to only ban truly bad accounts. I covered thinking about metrics like this in detail in another post, What Machine Learning Metric to Use. Take a look!

I would argue the automatic blocking model will have higher impact because it frees our ops people to focus on other bad behavior. We want two sets of metrics: offline for when we are training and online for when the model is deployed.

Our offline metric will be precision because, based on the argument above, we want to be really sure we’re only banning bad accounts.

Our online metrics are more business focused:

- Ops time saved : Ops is currently spending some amount of time reviewing spam; how much can we cut that down?

- Spam fraction : What percent of Tweets are spam? Can we reduce this?

It is often useful to normalize metrics, like the spam fraction metric, so they don’t go up or down just because we have more customers!
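As a rough sketch of the two kinds of metric discussed above, the snippet below computes precision from counts and shows why a normalized spam fraction is stable under growth. The function names and the numbers are made up for illustration; they are not Twitter figures.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of accounts flagged as bots that really are bots."""
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


def spam_fraction(spam_tweets: int, total_tweets: int) -> float:
    """Share of all Tweets that are spam, so raw growth alone does not move it."""
    return spam_tweets / total_tweets if total_tweets else 0.0


# Hypothetical numbers: spam volume doubles, but so does total volume.
last_month = spam_fraction(spam_tweets=2_000, total_tweets=100_000)
this_month = spam_fraction(spam_tweets=4_000, total_tweets=200_000)
```

A raw spam count would have doubled here, while the normalized metric stays flat, which is the behavior we want when the user base grows.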

Now that we know what we’re doing and how to measure its success, it’s time to figure out what data we can use. Just based on how a company operates, you can make a really good guess as to the data they have. For Twitter we know they have to track Tweets, accounts, and logins, so they must have databases with that information. Here is what I think they contain:

- Tweets database: Sending account, mentioned accounts, parent Tweet, Tweet text.

- Interactions database: Account, Tweet, action (retweet, favorite, etc.).

- Accounts database: Account name, handle, creation date, creation device, creation IP address.

- Following database: Account, followed account.

- Login database: Account, date, login device, login IP address, success or fail reason.

- Ops database: Account, restriction, human reasoning.

And a lot more. From these we can find out a lot about an account and the Tweets they send, who they send to, who those people react to, and possibly how login events tie different accounts together.

Labels and Features

Having figured out what data is available, it’s time to process it. Because I’m treating this as a classification problem, I’ll need labels to tell me the ground truth for accounts, and I’ll need features which describe the behavior of the accounts.

Since there is an ops team handling spam, I have historical examples of bad behavior which I can use as positive labels.[1] If there aren’t enough, I can use tricks to try to expand my labels, for example looking at IP addresses or devices that are associated with spammers and labeling other accounts with the same login characteristics.
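Here is a minimal sketch of the label-expansion trick, assuming login records are available as `(account_id, ip_address)` pairs. That schema and the function name are my own assumptions for illustration. Shared IP addresses (offices, mobile carriers) would make these labels noisy, so I would treat the output as candidates to review rather than ground truth.

```python
def expand_positive_labels(known_bots, logins):
    """Return accounts that share a login IP address with a known bot.

    known_bots: set of account ids already labeled as spam bots.
    logins: list of (account_id, ip_address) pairs (hypothetical schema).
    """
    # IP addresses that at least one known bot has logged in from.
    bot_ips = {ip for account, ip in logins if account in known_bots}
    # Every account seen on one of those IP addresses.
    candidates = {account for account, ip in logins if ip in bot_ips}
    # Only return accounts that were not already labeled.
    return candidates - known_bots
```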

Negative labels are harder to come by. I know Twitter has verified users who are unlikely to be spam bots, so I can use them. But verified users are certainly very different from “normal” good users because they have far more followers.

It is a safe bet that there are far more good users than spam bots, so randomly selecting accounts can be used to build a negative label set.

To build features, it helps to think about what sort of behavior a spam bot might exhibit, and then try to codify that behavior into features. For example:

- Bots can’t write truly unique messages; they must use a template or language generator. This should lead to similar messages, so looking at how repetitive an account’s Tweets are is a good feature.

- Bots are used because they scale. They can run all the time and send messages to hundreds or thousands (or millions) of users. Number of unique Tweet recipients and number of minutes per day with a Tweet sent are likely good features.

- Bots have a controller. Someone is benefiting from the spam, and they have to control their bots. Features around logins might help here like number of accounts seen from this IP address or device, similarity of login time, etc.
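To make the first two ideas concrete, here is a small sketch that turns one day of an account’s Tweets into features. The input format (a list of `(timestamp, text, recipients)` tuples) and the feature names are assumptions for illustration, and exact-match repetition is only a crude stand-in for a real text-similarity measure.

```python
from collections import Counter
from datetime import datetime


def account_features(tweets):
    """Build simple behavioral features from one day of an account's Tweets.

    tweets: list of (timestamp: datetime, text: str, recipients: list of str).
    """
    if not tweets:
        return {"repeat_fraction": 0.0, "unique_recipients": 0, "active_minutes": 0}
    # Repetitiveness: share of Tweets whose normalized text appears more than once.
    text_counts = Counter(text.strip().lower() for _, text, _ in tweets)
    repeated = sum(n for n in text_counts.values() if n > 1)
    # Scale: how many distinct accounts were messaged.
    recipients = {r for _, _, rs in tweets for r in rs}
    # Always-on behavior: distinct clock minutes with at least one Tweet.
    minutes = {ts.replace(second=0, microsecond=0) for ts, _, _ in tweets}
    return {
        "repeat_fraction": repeated / len(tweets),
        "unique_recipients": len(recipients),
        "active_minutes": len(minutes),
    }
```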

Model Selection

I try to start with the simplest model that will work when starting a new project. Since this is a supervised classification problem and I have written some simple features, logistic regression or a forest are good candidates. I would likely go with a forest because they tend to “just work” and are a little less sensitive to feature processing.[2]

Deep learning is not something I would use here. It’s great for image, video, audio, or NLP, but for a problem where you have a set of labels and a set of features that you believe to be predictive it is generally overkill.

One thing to consider when training is that the dataset is probably going to be wildly imbalanced. I would start by down-sampling (since we likely have millions of events), but would be ready to discuss other methods and trade-offs.
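A minimal sketch of down-sampling the majority class, using only the standard library. The `label_of` callback and the `ratio` parameter are my own illustrative choices. One trade-off worth mentioning in the interview: down-sampling changes the class balance the model sees, so predicted probabilities will not match the real-world bot rate without recalibration.

```python
import random


def downsample_majority(rows, label_of, ratio=1.0, seed=0):
    """Keep every positive row and a random sample of the negative rows.

    label_of: function returning 1 (spam bot) or 0 (not a bot) for a row.
    ratio: number of negatives to keep per positive.
    """
    positives = [r for r in rows if label_of(r) == 1]
    negatives = [r for r in rows if label_of(r) == 0]
    keep = min(len(negatives), int(len(positives) * ratio))
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    return positives + rng.sample(negatives, keep)
```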

Validation is not too difficult at this point. We focus on the offline metric we decided on above: precision. We don’t have to worry much about leaking data between our holdout sets if we split at the account level, although if bots from the same botnet end up in different sets there will be a little data leakage. I would start with a simple training/validation/test split with fixed fractions of the dataset.
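One way to split at the account level is to hash the account id, so every row for an account lands in the same set and the assignment is stable across runs. This is a sketch under my own assumptions (string account ids, an 80/10/10 split); it does not address the botnet leakage mentioned above, which would need splitting on a botnet or cluster id instead.

```python
import hashlib


def assign_split(account_id: str, train=0.8, validation=0.1) -> str:
    """Deterministically map an account to 'train', 'validation', or 'test'."""
    digest = hashlib.sha256(account_id.encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a roughly uniform number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket < train:
        return "train"
    if bucket < train + validation:
        return "validation"
    return "test"
```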

Since we want to classify an entire account and not a specific Tweet, we don’t need to run the model in real-time when Tweets are posted. Instead we can run batches and can decide on the time between runs by looking at something like the characteristic time a spam bot takes to send out Tweets. We can add rate limiting to Tweet sending as well to slow the spam bots and give us more time to decide without impacting normal users.

For deployment, I would start in shadow mode, which I discussed in detail in another post. This would allow us to see how the model performs on real data without the risk of blocking good accounts. I would track its performance using our online metrics: spam fraction and ops time saved. I would compute these metrics twice, once using the assumption that the model blocks flagged accounts, and once assuming that it does not block flagged accounts, and then compare the two outcomes. If the comparison is favorable, the model should be promoted to action mode.
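The “compute it twice” comparison could look roughly like the sketch below for the spam fraction metric. It assumes Tweets have been labeled as spam or not after the fact (for example by ops), and it makes the simplifying assumption that a blocked account’s Tweets would simply not have existed. The function name and data format are hypothetical.

```python
def spam_fraction_with_and_without_model(tweets, flagged_accounts):
    """Compare spam fraction in shadow mode.

    tweets: list of (account_id, is_spam) pairs labeled after the fact.
    flagged_accounts: set of account ids the shadow model would have blocked.
    Returns (fraction with nothing blocked, fraction if flagged accounts were blocked).
    """
    def fraction(rows):
        return sum(is_spam for _, is_spam in rows) / len(rows) if rows else 0.0

    # Counterfactual: drop every Tweet from an account the model flagged.
    surviving = [(a, s) for a, s in tweets if a not in flagged_accounts]
    return fraction(tweets), fraction(surviving)
```

If the second number is clearly lower and few good accounts appear in `flagged_accounts`, that is evidence in favor of promoting the model.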

Let Me Know!

I hope this exercise has been helpful! Please reach out and let me know at @alex_gude if you have any comments or improvements!

[1] In this case a positive label means the account is a spam bot, and a negative label means it is not.

[2] If you use regularization with logistic regression (and you should), you need to scale your features. Random forests do not require this.

Practice Interview Questions

70 Machine Learning Interview Questions & Answers

By Nick Singh

(Ex-Facebook & Best-Selling Data Science Author)

Currently, he’s the best-selling author of Ace the Data Science Interview, and Founder & CEO of DataLemur.

February 12, 2024

Are you gearing up for a machine learning interview and feeling a bit overwhelmed?

Fear not! In this comprehensive guide, we've compiled 70 machine-learning interview questions and their detailed answers to help you ace your next interview with confidence. Let's dive in and unravel the secrets to mastering machine learning interviews.

Fundamental Concepts

Questions in this section may cover basic concepts such as supervised learning, unsupervised learning, reinforcement learning, model evaluation metrics, bias-variance tradeoff, overfitting, underfitting, cross-validation, and regularization techniques like L1 and L2 regularization.

Question 1: What is the bias-variance tradeoff in machine learning?

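Answer: The bias-variance tradeoff refers to the balance between two sources of prediction error. Bias is error from overly simple assumptions and can cause underfitting, while variance is error from sensitivity to the particular training sample and can lead to overfitting. Reducing one typically increases the other, so the goal is to choose a model complexity that minimizes total error on unseen data.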
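For squared-error loss the tradeoff can be written out explicitly. The decomposition below is the standard one, under the assumption that the target is $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and that the expectation is taken over training sets (and noise); the symbols are introduced here for illustration.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```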

Question 2: Explain cross-validation and its importance in model evaluation.

Answer: Cross-validation is a technique used to assess how well a predictive model generalizes to unseen data by splitting the dataset into multiple subsets for training and testing. It helps in detecting overfitting and provides a more accurate estimate of a model's performance.
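As a rough illustration, k-fold cross-validation boils down to generating k train/test index splits in which every sample is tested exactly once. The helper below is a plain-Python sketch (the function name is made up); in practice you would usually shuffle the data first or use a library implementation.

```python
def k_fold_indices(n_samples: int, k: int):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size
```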

Question 3: What is regularization, and how does it prevent overfitting?

Answer: Regularization is a technique used to prevent overfitting by adding a penalty term to the loss function, discouraging the model from fitting the training data too closely. Common regularization techniques include L1 (Lasso) and L2 (Ridge) regularization.
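Written out, the two penalties look like this, where $L(w)$ is the unregularized loss, $w_j$ are the model weights, and $\lambda \ge 0$ is the regularization strength (notation chosen here for illustration):

```latex
L_{\text{L2 (Ridge)}}(w) = L(w) + \lambda \sum_{j} w_j^2
\qquad
L_{\text{L1 (Lasso)}}(w) = L(w) + \lambda \sum_{j} |w_j|
```

The L1 penalty tends to drive some weights exactly to zero, while the L2 penalty shrinks all weights toward zero without eliminating them.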

Question 4: What are evaluation metrics commonly used for classification tasks?

Answer: Evaluation metrics for classification tasks include accuracy, precision, recall, F1-score, ROC curve, and AUC-ROC score. Each metric provides insights into different aspects of the model's performance.
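A small sketch of how the threshold-based metrics relate to the confusion-matrix counts, assuming binary labels encoded as 0 and 1 (the function name is illustrative):

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```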

Question 5: What is the difference between supervised and unsupervised learning?

Answer: Supervised learning involves training a model on labeled data, where the algorithm learns the mapping between input and output variables. In contrast, unsupervised learning deals with unlabeled data and aims to find hidden patterns or structures in the data.

Question 6: How do you handle imbalanced datasets in machine learning?

Answer: Techniques for handling imbalanced datasets include resampling methods such as undersampling majority class instances or oversampling minority class instances (for example with SMOTE, the Synthetic Minority Over-sampling Technique, which generates synthetic minority examples), using evaluation metrics that are informative under imbalance such as precision-recall curves, and using class weights or cost-sensitive learning so that errors on the minority class are penalized more heavily.

Question 7: What is the purpose of feature scaling in machine learning?

Answer: Feature scaling ensures that all features contribute equally to the model training process by scaling them to a similar range. Common scaling techniques include min-max scaling and standardization (Z-score normalization).
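Both techniques are short enough to sketch with the standard library. The function names are illustrative, and in a real pipeline the minimum, maximum, mean, and standard deviation should be computed on the training set only and then reused for validation and test data.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Z-score normalization: subtract the mean, divide by the standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]
```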

Question 8: Explain the concept of overfitting and underfitting in machine learning.

Answer: Overfitting occurs when a model learns the training data too well, capturing noise and irrelevant patterns, leading to poor generalization on unseen data. Underfitting, on the other hand, happens when a model is too simple to capture the underlying structure of the data, resulting in low performance on both training and testing data.

Question 9: What is the difference between a parametric and non-parametric model?

Answer: Parametric models make assumptions about the functional form of the relationship between input and output variables and have a fixed number of parameters. Non-parametric models do not make such assumptions and can adapt to the complexity of the data, often having an indefinite number of parameters.

Question 10: How do you assess the importance of features in a machine-learning model?

Answer: Feature importance can be assessed using techniques like examining coefficients in linear models, feature importance scores in tree-based models, or permutation importance. These methods help identify which features have the most significant impact on the model's predictions.

Data Preprocessing and Feature Engineering

This section may include questions about data cleaning techniques, handling missing values, scaling features, encoding categorical variables, feature selection methods, dimensionality reduction techniques like PCA (Principal Component Analysis), and dealing with imbalanced datasets.

Question 1: What are some common techniques for handling missing data?

Answer: Common techniques for handling missing data include imputation (replacing missing values with estimated values such as mean, median, or mode), deletion of rows or columns with missing values, or using advanced methods like predictive modeling to fill missing values.
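As a minimal illustration of imputation, the sketch below fills missing entries (represented here as `None`, an assumption about the data format) with the median of the observed values. The median is a common choice when the feature has outliers.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]
```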

Question 2: How do you deal with categorical variables in a machine-learning model?

Answer: Categorical variables can be encoded using techniques like one-hot encoding, label encoding, or target encoding, depending on the nature of the data and the algorithm being used. One-hot encoding creates binary columns for each category, while label encoding assigns a unique integer to each category.
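To show the difference between the two encodings, here is a plain-Python sketch (function names are illustrative). Note that label encoding imposes an arbitrary order on the categories, which some models will treat as meaningful.

```python
def label_encode(values):
    """Map each category to an integer, using sorted order for determinism."""
    categories = sorted(set(values))
    index = {c: i for i, c in enumerate(categories)}
    return [index[v] for v in values], categories


def one_hot_encode(values):
    """Map each value to a binary row with a single 1 in its category's column."""
    codes, categories = label_encode(values)
    rows = [[1 if code == j else 0 for j in range(len(categories))] for code in codes]
    return rows, categories
```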

Question 3: What is feature scaling, and when is it necessary?

Answer: Feature scaling is the process of standardizing or normalizing the range of features in the dataset. It is necessary when features have different scales, as algorithms like gradient descent converge faster and more reliably when features are scaled to a similar range.

Question 4: How do you handle outliers in a dataset?

Answer: Outliers can be handled by removing them if they are due to errors or extreme values, transforming the data using techniques like logarithmic or square root transformations, or using robust statistical methods that are less sensitive to outliers.

Question 5: What is feature selection, and why is it important?

Answer: Feature selection is the process of choosing the most relevant features for building predictive models while discarding irrelevant or redundant ones. It is important because it reduces the dimensionality of the dataset, improves model interpretability, and can reduce overfitting.

Question 6: Explain the concept of dimensionality reduction.

Answer: Dimensionality reduction techniques reduce the number of features in a dataset while preserving its essential characteristics. PCA (Principal Component Analysis) is commonly used for data compression and for speeding up the training of machine learning models, while t-SNE (t-distributed Stochastic Neighbor Embedding) is used mainly for visualization.

Question 7: What are some methods for detecting and handling multicollinearity among features?

Answer: Multicollinearity occurs when two or more features in a dataset are highly correlated, which can cause issues in model interpretation and stability. Methods for detecting and handling multicollinearity include correlation matrices, variance inflation factor (VIF) analysis, and feature selection techniques.

Question 8: How do you handle skewed distributions in features?

Answer: Skewed distributions can be transformed using techniques like logarithmic transformation, square root transformation, or Box-Cox transformation to make the distribution more symmetrical and improve model performance, especially for algorithms that assume normality.

Question 9: What is the curse of dimensionality, and how does it affect machine learning algorithms?

Answer: The curse of dimensionality refers to the increased computational and statistical challenges associated with high-dimensional data. As the number of features increases, the amount of data required to generalize accurately grows exponentially, leading to overfitting and decreased model performance.

Question 10: When should you use feature engineering techniques like polynomial features?

Answer: Polynomial features are useful when the relationship between the independent and dependent variables is non-linear. By creating polynomial combinations of features, models can capture more complex relationships, improving their ability to fit the data.
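For a concrete picture, the sketch below expands one row of inputs into all monomials up to a given degree, including the constant term. The function name is made up for illustration.

```python
from itertools import combinations_with_replacement


def polynomial_features(row, degree=2):
    """Return all monomials of the inputs up to `degree`, starting with the bias term 1."""
    features = [1.0]
    for d in range(1, degree + 1):
        # Each combination of column indices (with repeats) is one monomial.
        for combo in combinations_with_replacement(range(len(row)), d):
            term = 1.0
            for i in combo:
                term *= row[i]
            features.append(term)
    return features
```

For inputs `[a, b]` and degree 2 this yields `[1, a, b, a², ab, b²]`, so a linear model on the expanded features can fit a quadratic relationship.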

Supervised Learning Algorithms

Questions here may focus on various supervised learning algorithms such as linear regression, logistic regression, decision trees, random forests, support vector machines (SVM), k-nearest neighbors (KNN), naive Bayes, gradient boosting methods like XGBoost, and neural networks.

Question 1: Explain the difference between regression and classification algorithms.

Answer: Regression algorithms are used to predict continuous numeric values, while classification algorithms are used to predict categorical labels or classes. Examples of regression algorithms include linear regression and polynomial regression, while examples of classification algorithms include logistic regression, decision trees, and support vector machines.

Question 2: How does a decision tree work, and what are its advantages and disadvantages?

Answer: A decision tree is a tree-like structure where each internal node represents a decision based on a feature, and each leaf node represents the outcome or prediction. Its advantages include interpretability, ease of visualization, and handling both numerical and categorical data. However, it is prone to overfitting, especially with complex trees.

Question 3: What is the difference between bagging and boosting?

Answer: Bagging (Bootstrap Aggregating) and boosting are ensemble learning techniques used to improve model performance by combining multiple base learners. Bagging trains each base learner independently on different subsets of the training data, while boosting focuses on training base learners sequentially, giving more weight to misclassified instances.

Question 4: Explain the working principle of support vector machines (SVM).

Answer: Support Vector Machines (SVM) is a supervised learning algorithm used for classification and regression tasks. It works by finding the hyperplane that best separates the data points into different classes while maximizing the margin, which is the distance between the hyperplane and the nearest data points from each class.

Question 5: What is logistic regression, and when is it used?

Answer: Logistic regression is a classification algorithm used to predict the probability of a binary outcome based on one or more predictor variables. It is commonly used when the dependent variable is categorical (e.g., yes/no, true/false) and the log-odds of the outcome are approximately linear in the independent variables.
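The prediction step can be sketched in a few lines: a linear combination of the features gives the log-odds, and the sigmoid function turns that into a probability. The weights and bias here are placeholders, not fitted values.

```python
import math


def predict_probability(features, weights, bias):
    """Logistic regression prediction: sigmoid of a linear combination."""
    log_odds = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-log_odds))
```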

Question 6: Explain the concept of ensemble learning and its advantages.

Answer: Ensemble learning combines predictions from multiple models to improve overall performance. It can reduce overfitting, increase predictive accuracy, and handle complex relationships in the data better than individual models. Examples include random forests, gradient boosting machines (GBM), and stacking.

Question 7: How does linear regression handle multicollinearity among features?

Answer: Multicollinearity among features in linear regression can lead to unstable coefficient estimates and inflated standard errors. Techniques for handling multicollinearity include removing correlated features, using regularization techniques like ridge regression, or employing dimensionality reduction methods like PCA.

Question 8: What is the difference between gradient descent and stochastic gradient descent?

Answer: Gradient descent is an optimization algorithm used to minimize the loss function by iteratively adjusting model parameters in the direction of the negative gradient (the direction of steepest descent), with the gradient computed over the entire training set at each step. Stochastic gradient descent (SGD) is a variant that updates the parameters using a single randomly chosen data point, or a small batch of data points, at each iteration. This makes each update much cheaper and more suitable for large datasets, at the cost of noisier steps.
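The difference is easiest to see side by side. This toy sketch fits the one-parameter model y = w * x with mean squared error; the data, learning rate, and step counts are arbitrary choices for illustration.

```python
import random


def gradient(w, points):
    """Gradient of mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in points) / len(points)


def batch_gd(points, lr=0.01, steps=200):
    w = 0.0
    for _ in range(steps):
        w -= lr * gradient(w, points)  # every update uses all points
    return w


def sgd(points, lr=0.01, steps=2000, seed=0):
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        w -= lr * gradient(w, [rng.choice(points)])  # one random point per update
    return w


# Noiseless toy data generated from y = 3x.
points = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]
```

Both functions approach w = 3 on this data; SGD takes more, cheaper steps to get there.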

Question 9: When would you use a decision tree versus a random forest?

Answer: Decision trees are simple and easy to interpret but are prone to overfitting. Random forests, which are ensembles of decision trees, reduce overfitting by averaging predictions from multiple trees and provide higher accuracy and robustness, especially for complex datasets with many features.

Question 10: What is the purpose of hyperparameter tuning in machine learning algorithms?

Answer: Hyperparameter tuning involves selecting the optimal values for hyperparameters, which are parameters that control the learning process of machine learning algorithms. It helps improve model performance by finding the best configuration of hyperparameters through techniques like grid search, random search, or Bayesian optimization.

Unsupervised Learning Algorithms

This section might involve questions about unsupervised learning algorithms like k-means clustering, hierarchical clustering, DBSCAN (Density-Based Spatial Clustering of Applications with Noise), Gaussian Mixture Models (GMM), and dimensionality reduction techniques like t-SNE (t-distributed Stochastic Neighbor Embedding).

Question 1: Explain the k-means clustering algorithm and its steps.

Answer: K-means clustering is a partitioning algorithm that divides a dataset into k clusters by minimizing the sum of squared distances between data points and their respective cluster centroids. The steps include initializing cluster centroids, assigning data points to the nearest centroid, updating centroids, and iterating until convergence.
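The four steps map directly onto code. Below is a bare-bones sketch for points given as tuples of floats, with random initialization from the data; real implementations typically add smarter initialization (such as k-means++) and multiple restarts.

```python
import random


def k_means(points, k, iterations=100, seed=0):
    """Return (centroids, clusters) for a list of equal-length float tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # 1. initialize centroids from the data
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:  # 2. assign each point to its nearest centroid
            nearest = min(
                range(k),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])),
            )
            clusters[nearest].append(p)
        new_centroids = [  # 3. move each centroid to the mean of its cluster
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[j]
            for j, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # 4. stop once nothing changes
            break
        centroids = new_centroids
    return centroids, clusters
```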

Question 2: What is the difference between k-means and hierarchical clustering?

Answer: K-means clustering partitions the dataset into a predefined number of clusters (k) by minimizing the within-cluster variance, while hierarchical clustering builds a hierarchy of clusters by recursively merging or splitting clusters based on similarity or dissimilarity measures.

Question 3: How does DBSCAN clustering work, and what are its advantages?

Answer: DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is a density-based clustering algorithm that groups data points that are closely packed while marking outliers as noise. Its advantages include the ability to discover clusters of arbitrary shapes, robustness to noise and outliers, and not requiring the number of clusters as input.

Question 4: Explain the working principle of Gaussian Mixture Models (GMM).

Answer: Gaussian Mixture Models (GMM) represent the probability distribution of a dataset as a mixture of multiple Gaussian distributions, each associated with a cluster. The model parameters, including means and covariances of the Gaussians, are estimated using the Expectation-Maximization (EM) algorithm.

Question 5: When would you use hierarchical clustering over k-means clustering?

Answer: Hierarchical clustering is preferred when the number of clusters is unknown or when the data exhibits a hierarchical structure, as it produces a dendrogram that shows the relationships between clusters at different levels of granularity. In contrast, k-means clustering requires specifying the number of clusters in advance and may not handle non-spherical clusters well.

Question 6: What are the advantages and disadvantages of unsupervised learning?

Answer: The advantages of unsupervised learning include its ability to discover hidden patterns or structures in data without labeled examples, making it useful for exploratory data analysis and feature extraction. However, its disadvantages include the lack of ground truth labels for evaluation and the potential for subjective interpretation of results.

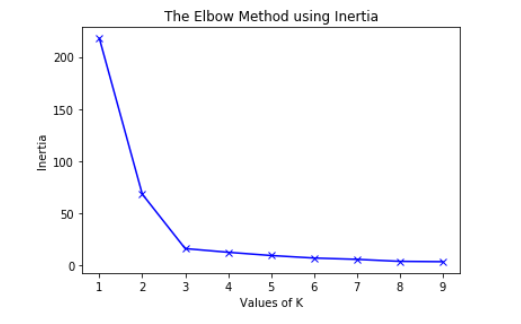

Question 7: How do you determine the optimal number of clusters in a clustering algorithm?

Answer: The optimal number of clusters can be determined using techniques like the elbow method, silhouette analysis, or the gap statistic. These methods aim to find the point where adding more clusters does not significantly improve the clustering quality or where the silhouette score is maximized.

Question 8: What is the purpose of dimensionality reduction in unsupervised learning?

Answer: In unsupervised learning, dimensionality reduction is used to reduce the number of features in a dataset while preserving its essential characteristics. PCA (Principal Component Analysis) is commonly used for data compression and for speeding up the training of machine learning models, while t-SNE (t-distributed Stochastic Neighbor Embedding) is used mainly for visualization.

Question 9: How do you handle missing values in unsupervised learning?

Answer: In unsupervised learning, missing values can be handled by imputation techniques like mean, median, or mode imputation, or by using advanced methods like k-nearest neighbors (KNN) imputation or matrix factorization.

Question 10: What are some applications of unsupervised learning in real-world scenarios?

Answer: Some applications of unsupervised learning include customer segmentation for targeted marketing, anomaly detection in cybersecurity, topic modeling for text analysis, image clustering for visual content organization, and recommendation systems for personalized content delivery.

Deep Learning

Questions in this section may cover topics related to deep learning architectures such as convolutional neural networks (CNNs) for image data, recurrent neural networks (RNNs) for sequential data, long short-term memory networks (LSTMs), attention mechanisms, transfer learning, and popular deep learning frameworks like TensorFlow and PyTorch.

Question 1: What are the key components of a neural network?

Answer: The key components of a neural network include an input layer, one or more hidden layers, each consisting of neurons or nodes, and an output layer. Each neuron applies an activation function to the weighted sum of its inputs to produce an output.

Question 2: Explain the working principle of convolutional neural networks (CNNs).

Answer: Convolutional Neural Networks (CNNs) are specialized neural networks designed for processing structured grid-like data, such as images. They consist of convolutional layers that extract features from input images, pooling layers that downsample feature maps, and fully connected layers that classify the extracted features.

Question 3: What is the purpose of activation functions in neural networks?

Answer: Activation functions introduce non-linearity into the neural network, enabling it to learn complex patterns and relationships in the data. Common activation functions include ReLU (Rectified Linear Unit), sigmoid, tanh (hyperbolic tangent), and softmax.
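For reference, the activation functions named above are simple to write down. This sketch uses only the standard library (tanh is available directly as `math.tanh`); the softmax subtracts the maximum input before exponentiating, a common trick to avoid overflow.

```python
import math


def relu(x):
    """Rectified Linear Unit: pass positives through, clamp negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(xs):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]
```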

Question 4: How do you prevent overfitting in deep learning models?

Answer: Techniques for preventing overfitting in deep learning models include using dropout layers to randomly deactivate neurons during training, adding L1 or L2 regularization to penalize large weights, collecting more training data, and early stopping based on validation performance.

Question 5: Explain the concept of transfer learning in deep learning.

Answer: Transfer learning is a technique where a pre-trained neural network model is reused for a different but related task. By leveraging knowledge learned from a large dataset or task, transfer learning allows the model to achieve better performance with less training data and computational resources.

Question 6: What is the difference between shallow and deep neural networks?

Answer: Shallow neural networks have only one hidden layer between the input and output layers, while deep neural networks have multiple hidden layers. Deep neural networks can learn hierarchical representations of data, capturing complex patterns and relationships, but they require more computational resources and may suffer from vanishing or exploding gradients.

Question 7: How do recurrent neural networks (RNNs) handle sequential data?

Answer: Recurrent Neural Networks (RNNs) process sequential data by maintaining a hidden state that captures information from previous time steps and updates it recursively as new input is fed into the network. This allows RNNs to model temporal dependencies and sequences of variable length.

Question 8: What is the vanishing gradient problem, and how does it affect deep learning?

Answer: The vanishing gradient problem occurs when gradients become increasingly small as they propagate backward through layers in deep neural networks during training, making it difficult to update the weights of early layers effectively. It can lead to slow convergence or stagnation in learning.

Question 9: What are some popular deep learning frameworks, and why are they used?

Answer: Popular deep learning frameworks include TensorFlow, PyTorch, Keras, and MXNet. These frameworks provide high-level APIs and abstractions for building and training neural networks, allowing researchers and practitioners to focus on model design and experimentation rather than low-level implementation details.

Question 10: How do you choose the appropriate neural network architecture for a given problem?

Answer: Choosing the appropriate neural network architecture depends on factors such as the nature of the data (e.g., structured, unstructured), the complexity of the problem, computational resources available, and the trade-off between model performance and interpretability. Experimentation and validation on a held-out dataset are essential for selecting the best architecture.

Model Evaluation and Performance Tuning

This section may include questions about techniques for evaluating model performance such as accuracy, precision, recall, F1-score, ROC curve, AUC-ROC score, and strategies for hyperparameter tuning using techniques like grid search, random search, and Bayesian optimization.

Question 1: What evaluation metrics would you use for a binary classification problem, and why?

Answer: For a binary classification problem, common evaluation metrics include accuracy, precision, recall, F1-score, ROC curve, and AUC-ROC score. These metrics provide insights into different aspects of the model's performance, such as overall correctness, class-wise performance, and trade-offs between true positive and false positive rates.
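The threshold-based metrics above all derive from the four confusion-matrix counts. A minimal sketch, assuming labels are encoded as 0 and 1 (the function name is illustrative):

```python
def binary_metrics(y_true, y_pred):
    """Computes accuracy, precision, recall, and F1 from 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted/present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

In an interview it helps to say which of these the business cares about: precision when false positives are costly, recall when false negatives are.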

Question 2: How do you interpret the ROC curve and AUC-ROC score?

Answer: The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1-specificity) at various threshold settings, showing the trade-offs between sensitivity and specificity. The AUC-ROC score represents the area under the ROC curve, with higher values indicating better discrimination performance of the model.
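One useful way to interpret AUC-ROC is as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below computes it that way by brute force; it is quadratic in the number of examples, so it is meant for intuition rather than production use.

```python
def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

A value of 0.5 corresponds to random ranking and 1.0 to a perfect ranking, independent of any particular decision threshold.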

Question 3: What is the purpose of cross-validation, and how does it work?

Answer: Cross-validation is a technique used to assess how well a predictive model generalizes to unseen data by splitting the dataset into multiple subsets for training and testing. It works by iteratively training the model on a subset of the data (training set) and evaluating its performance on the remaining data (validation set), rotating the subsets until each subset has been used as both training and validation data.
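The rotation described above is usually implemented as k-fold cross-validation. Here is a minimal sketch of the index bookkeeping, without shuffling or stratification (both of which you would normally add):

```python
def k_fold_indices(n_samples, k):
    """Yields (train_indices, validation_indices) for each of k folds.

    Every sample lands in the validation set exactly once.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size
```

The model is trained and scored once per fold, and the k scores are averaged to estimate generalization performance.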

Question 4: What is hyperparameter tuning, and why is it important?

Answer: Hyperparameter tuning involves selecting the optimal values for hyperparameters, which are parameters that control the learning process of machine learning algorithms. It is important because the choice of hyperparameters can significantly affect the performance of the model, and finding the best configuration can improve predictive accuracy and generalization.

Question 5: How would you approach model selection for a given problem?

Answer: Model selection involves comparing the performance of different models on a validation dataset and selecting the one with the best performance based on evaluation metrics relevant to the problem at hand. It requires experimentation with different algorithms, architectures, and hyperparameter settings to identify the model that generalizes well to unseen data.

Question 6: What is overfitting, and how can it be detected and prevented?

Answer: Overfitting occurs when a model learns the training data too well, capturing noise and irrelevant patterns, leading to poor generalization on unseen data. It can be detected by comparing the performance of the model on training and validation datasets or using techniques like cross-validation. To prevent overfitting, regularization techniques like L1 or L2 regularization, dropout, and early stopping can be applied.

Question 7: How do you perform feature selection to improve model performance?

Answer: Feature selection involves choosing the most relevant features for building predictive models while discarding irrelevant or redundant ones. It can be performed using techniques like univariate feature selection, recursive feature elimination, or model-based feature selection, based on criteria such as feature importance scores or statistical tests.

Question 8: What is grid search, and how does it work?

Answer: Grid search is a hyperparameter tuning technique that exhaustively searches through a specified grid of hyperparameter values, evaluating the model's performance using cross-validation for each combination of hyperparameters. It helps identify the optimal hyperparameter values that maximize the model's performance.
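The exhaustive search can be sketched in a few lines. In this example `toy_score` is a made-up stand-in for a cross-validated score, and the hyperparameter names and values are purely illustrative:

```python
from itertools import product

def grid_search(param_grid, score_fn):
    """Tries every combination in param_grid and returns the best one.

    param_grid maps hyperparameter names to lists of candidate values;
    score_fn takes a dict of hyperparameters and returns a score to maximize.
    """
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    # Hypothetical score surface that peaks at learning_rate=0.1, max_depth=4.
    return -(params["learning_rate"] - 0.1) ** 2 - (params["max_depth"] - 4) ** 2

grid = {"learning_rate": [0.01, 0.1, 1.0], "max_depth": [2, 4, 8]}
best_params, best_score = grid_search(grid, toy_score)
```

The cost grows multiplicatively with each added hyperparameter (here 3 x 3 = 9 evaluations), which is the usual argument for random search or Bayesian optimization on larger search spaces.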

Question 9: How would you handle class imbalance in a classification problem?

Answer: Techniques for handling class imbalance in classification problems include resampling methods such as oversampling the minority class (for example with SMOTE, the Synthetic Minority Over-sampling Technique) or undersampling the majority class, using evaluation metrics that are informative under imbalance, such as precision-recall curves or AUC-ROC score, and using class weights or cost-sensitive learning so that errors on the minority class are penalized more heavily.
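As an illustration of the resampling idea, here is a minimal sketch of plain random oversampling. Note that this duplicates existing minority rows; it is not SMOTE, which synthesizes new points by interpolation. The function name and seed parameter are choices made for this example.

```python
import random
from collections import Counter

def random_oversample(rows, labels, seed=0):
    """Duplicates randomly chosen rows of smaller classes until every
    class has as many rows as the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for label, count in counts.items():
        pool = [r for r, l in zip(rows, labels) if l == label]
        for _ in range(target - count):
            out_rows.append(rng.choice(pool))
            out_labels.append(label)
    return out_rows, out_labels
```

Resampling should be applied to the training split only; resampling before splitting leaks duplicated rows into the validation or test data and inflates the scores.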

Question 10: What is early stopping, and how does it prevent overfitting?

Answer: Early stopping is a technique used to prevent overfitting by monitoring the model's performance on a validation dataset during training and stopping the training process when the performance starts deteriorating. It works by halting training before the model becomes overly specialized to the training data, thus improving generalization.
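The stopping rule is often implemented with a "patience" counter. The sketch below replays a list of validation losses instead of training a real model; `patience` and `min_delta` are common parameter names, but the exact signature is an assumption of this example.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Returns (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once `patience` epochs have passed without the loss
    improving on the best value by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses) - 1
```

In practice you would also save a checkpoint at each new best epoch and restore it after stopping, so the final model is the one from `best_epoch` rather than the last one trained.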

Real-World Applications and Case Studies

Candidates may be asked to discuss real-world machine learning applications they have worked on, challenges faced during projects, how they approached problem-solving, and their understanding of the broader implications and ethical considerations of deploying machine learning systems.

Question 1: Can you describe a machine learning project you worked on and the challenges you faced?

Answer: Candidate's response about a specific project, including the problem statement, data used, algorithms employed, challenges encountered, and how they addressed them.

Question 2: What are some ethical considerations to keep in mind when deploying machine learning systems in real-world applications?

Answer: Candidate's response discussing ethical considerations such as bias and fairness, privacy and data protection, transparency and accountability, and potential societal impacts of machine learning systems.

Question 3: How would you approach building a recommendation system for an e-commerce platform?

Answer: Candidate's response outlining the steps involved in building a recommendation system, including data collection, preprocessing, algorithm selection, evaluation metrics, and deployment considerations.

Question 4: Can you discuss a time when you had to work with a large dataset and how you handled it?

Answer: Candidate's response describing their experience working with large datasets, including data preprocessing, optimization techniques, distributed computing frameworks, and strategies for efficient data storage and retrieval.

Question 5: What are some challenges you foresee in deploying a machine-learning model into production?

Answer: Candidate's response discussing challenges such as model scalability, performance monitoring, version control, model drift, security considerations, and integration with existing systems.

Question 6: Can you explain a situation where feature engineering played a crucial role in improving model performance?

Answer: Candidate's response providing an example of feature engineering techniques applied to a specific problem, including feature selection, transformation, creation of new features, and their impact on model performance.

Question 7: How would you evaluate the impact of a machine learning model on a business outcome?

Answer: Candidate's response discussing metrics for evaluating the business impact of a machine learning model, such as return on investment (ROI), cost savings, revenue generation, customer satisfaction, and user engagement.

Question 8: What are some considerations for deploying a machine learning model in a resource-constrained environment?

Answer: Candidate's response addressing considerations such as model size and complexity, computational resource requirements, latency and throughput constraints, energy efficiency, and trade-offs between model performance and deployment feasibility.

Question 9: Can you describe a scenario where you had to explain complex machine-learning concepts to a non-technical audience?

Answer: Candidate's response describing their experience communicating complex machine learning concepts clearly and understandably to stakeholders, clients, or team members with varying levels of technical expertise.

Question 10: How do you stay updated with the latest advancements and trends in machine learning?

Answer: Candidate's response discussing their strategies for staying updated with the latest advancements and trends in machine learning, such as attending conferences, reading research papers, participating in online courses, and experimenting with new techniques and frameworks.

Additional Resources

Need more resources? I HIGHLY recommend my Ace the Data Job Hunt video course. This course is filled with 25+ videos as well as downloadable resources, that will help you get the job you want.

BTW, companies also go HARD on technical interviews – it's not just Machine Learning interviews that you need to prepare for. Test yourself and solve 200+ SQL questions on DataLemur which come from companies like Facebook, Google, and VC-backed startups.

But if your SQL coding skills are weak, forget about going right into solving questions – refresh your SQL knowledge with this DataLemur SQL Tutorial.

I'm a bit biased, but I also recommend the book Ace the Data Science Interview because it has multiple FAANG technical Interview questions with solutions in it.


[2023] Machine Learning Interview Prep

Got a machine learning interview lined up? Chances are that you are interviewing for an ML engineering and/or data scientist position. Companies that have ML interview portions include Google, Meta, Stripe, McKinsey, and startups. The ML questions are peppered throughout the technical screen, take-home, and on-site rounds. So, what does the ML engineering interview entail? There are generally five areas👇

📚 ML Interview Areas

Area 1 – ML Coding

ML coding is similar to LeetCode style, but the main difference is that it is the application of machine learning using coding. Expect to write ML functions from scratch. In some cases, you will not be allowed to import third-party libraries like SkLearn as the questions are designed to assess your conceptual understanding and coding ability.
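To give a feel for what "from scratch" can mean, here is a sketch of one plausible ML coding exercise: logistic regression trained with batch gradient descent using only the standard library. The function names, learning rate, and epoch count are illustrative assumptions, not a question taken from any particular company.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic_regression(X, y, lr=0.5, epochs=200):
    """Fits weights w and bias b by minimizing mean log loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of log loss w.r.t. the logit
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_proba(X, w, b):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) for xi in X]
```

Interviewers typically follow up on the pieces: why the gradient is `p - y`, how you would add regularization, and how you would vectorize the loops.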

Area 2 – ML Theory (“Breadth”)

These assess the candidate’s breadth of knowledge in machine learning. ML theory interviews assess conceptual understanding of topics including the bias-variance trade-off, handling imbalanced labels, and accuracy vs. interpretability.

Area 3 – ML Algorithms (“Depth”)

Don’t confuse ML algorithms (sometimes called “Depth”) with ML “Breadth”. While ML breadth covers the general understanding of machine learning, ML depth assesses an in-depth understanding of a particular algorithm. For instance, you may have a dedicated round focusing just on the random forest. E.g. Here’s a sample question set you could be asked in a single round at Amazon.

Area 4 – Applied ML / Business Case

These involve solving ML cases in the context of a business problem. Scalability and productionization are not the main concern, as they are more relevant in the ML system design portion. The business case can be assessed in various forms: it could be a verbal explanation or hands-on coding in Jupyter or Colab.

Area 5 – ML System Design

These assess the soundness and scalability of the ML system design. They are often assessed in the ML engineering interview, and you will be required to discuss the functional & non-functional requirements, architecture overview, data preparation, model training, model evaluation, and model productionization.

📚 ML Questions x Track (e.g. product analyst, data scientist, MLE)

Depending on the tracks, the type of ML questions you will be exposed to will vary. Here are some examples. Consider the following questions posed in various roles:

- Product Analyst – Build a model that can predict the lifetime value of a customer

- Data Scientist (Generalist) – Build a fraud detection model using credit card transactions

- ML Engineering – Build a recommender system that can scale to 10 million daily active users

For product analyst roles, the emphasis is on the application of ML to product analysis, user segmentation, and feature improvement. Rigor in scalable systems is not required, as most of the analysis is conducted on offline datasets.

For data scientist roles, you will most likely be assessed on ML breadth, depth, and business case challenges. Understanding scalable systems is not required unless the role is more of a “full-stack” data science role.

For ML engineering roles, you will be asked coding, ML breadth & depth, and ML system design questions. You will most likely have dedicated rounds on ML coding and ML system design, with ML breadth & depth questions peppered throughout the interview process.

✍️ 7 Algorithms You Should Know

In general, you should have an in-depth understanding of the following algorithms. Understand the assumptions, applications, trade-offs, and parameter tuning of these 7 ML algorithms. The most important aspect isn’t whether you understand 20+ ML algorithms. What’s more important is that you understand how to leverage 7 algorithms in 20 different situations.

- Linear Regression

- Logistic Regression

- Decision Tree

- Random Forest

- Gradient Boosted Trees

- Dense Neural Networks

📝 More Questions

- What is the difference between supervised and unsupervised learning?

- Can you explain the concept of overfitting and underfitting in machine learning models?

- What is cross-validation? Why is it important?

- Describe how a decision tree works. When would you use it over other algorithms?

- What is the difference between bagging and boosting?

- How would you validate a model you created to generate a predictive analysis?

- How does KNN work?

- What is PCA?

- How would you perform feature selection?

- What are the advantages and disadvantages of a neural network?

💡 Prep Tips

Tip 1 – Understand How ML Interviews are Screened

The typical format is 20 to 40 minutes embedded in a technical phone screen or a dedicated ML round within an onsite. You will be assessed by a Sr./Staff-level data scientist or ML engineer. Here’s a sample video. You can also get coaching with an ML interviewer at FAANG companies: https://www.datainterview.com/coaching

Tip 2 – Practice Explaining Verbally

Interviewing is not a written exercise; it’s a verbal exercise. Whether the interviewer asks about conceptual ML knowledge, a coding question, or ML system design, you will be expected to explain clearly and in detail. As you practice interview questions, practice verbally.

Tip 3 – Join the Ultimate Prep

Get access to ML questions, cases and machine learning mock interview recordings when you join the interview program: Join the Data Science Ultimate Prep created by FAANG engineers/Interviewers

Elevate Your Interview Game

Essential insights and practical strategies to help you excel in machine learning interviews.

What's Inside?

- Comprehensive breakdown of the ML interview process, including all the major interview sessions: ML Fundamentals, ML Coding, ML System Design, & ML Infrastructure.

- Proven strategies for approaching and solving a wide range of ML problems, drawing from real-world scenarios.

- Step-by-step guidance on tackling ML coding challenges, system design questions, and infrastructure design problems.

- Deep dive into the mindset of interviewers, understanding what they value and how to effectively demonstrate your expertise.

- Practical examples and case studies showcasing the history of solutions to ML problems, from pioneering approaches to the state of the art.

Peng Shao has 15 years of ML leadership experience in social media, ad-tech, fintech, and e-commerce. Having interviewed nearly a thousand candidates, he has a comprehensive understanding of the skills that make a strong ML candidate. At Twitter, he served as a Staff ML Engineer, designing ML systems behind Twitter's recommendation algorithms and ads prediction. Prior to that, he co-founded a venture-backed AI startup (Roxy) which was acquired in 2019. Earlier in his career, he led ML teams at Amazon and FactSet. In these roles, he oversaw the development of ML systems including machine translation, tabular information extraction, named entity recognition, and topic modeling.


Top 10 Data Science Case Study Interview Questions for 2024

Data Science Case Study Interview Questions and Answers to Crack Your next Data Science Interview.

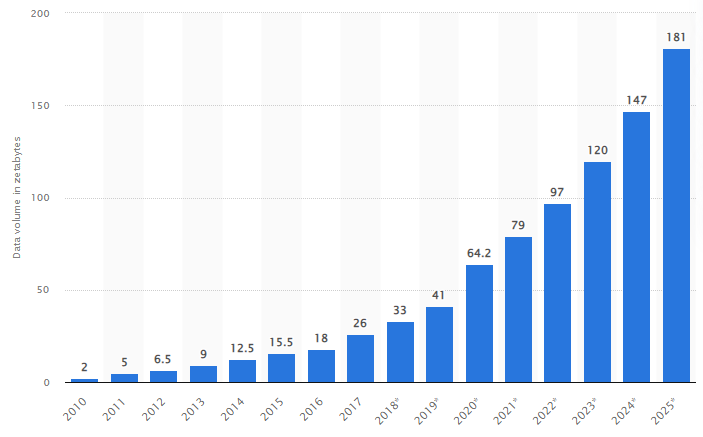

According to Harvard Business Review, data scientist has been termed “the sexiest job of the 21st century”. Data science has gained widespread importance due to the abundance of available data. Worldwide data is expected to reach 181 zettabytes by 2025 (source: Statista, 2021).


“Data is the new oil. It’s valuable, but if unrefined it cannot really be used. It has to be changed into gas, plastic, chemicals, etc. to create a valuable entity that drives profitable activity; so must data be broken down, analyzed for it to have value.” — Clive Humby, 2006

Table of Contents

- What is a data science case study

- Why are data scientists tested on case study-based interview questions

- Research about the company

- Ask questions

- Discuss assumptions and hypothesis

- Explaining the data science workflow

- 10 data science case study interview questions and answers

A data science case study is an in-depth, detailed examination of a particular case (or cases) within a real-world context. It is a real-world business problem that you would have worked on as a data scientist, building machine learning or deep learning models to construct an optimal solution. For aspiring data professionals, this would be a portfolio project on which they spend at least 10-16 weeks solving a real-world data science problem. Data science use cases can be found in almost every industry: e-commerce, music streaming, the stock market, etc. The possibilities are endless.

Ace Your Next Job Interview with Mock Interviews from Experts to Improve Your Skills and Boost Confidence!

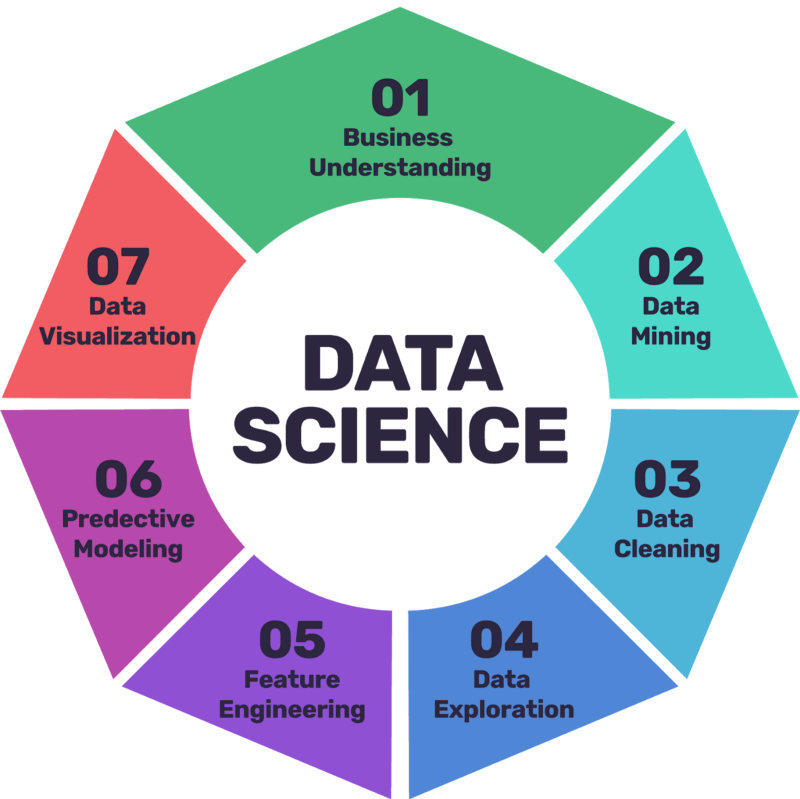

A case study evaluation allows the interviewer to understand your thought process. Questions on case studies can be open-ended; hence you should be flexible enough to accept and appreciate approaches you might not have taken to solve the business problem. All interviews are different, but the below framework is applicable for most data science interviews. It can be a good starting point that will allow you to make a solid first impression in your next data science job interview. In a data science interview, you are expected to explain your data science project lifecycle , and you must choose an approach that would broadly cover all the data science lifecycle activities. The below seven steps would help you get started in the right direction.

Source: mindsbs

Business Understanding: Explain the business problem and the objectives for the problem you solved.

Data Mining: How did you collect the required data? Here you can talk about the connections you set up to source your data (for example, database connections such as Oracle or SAP).

Data Cleaning: Explain the data inconsistencies you found and how you handled them.

Data Exploration: Talk about the exploratory data analysis you performed for the initial investigation of your data to spot patterns and anomalies.

Feature Engineering: Talk about the approach you took to select the essential features and how you derived new ones to add more meaning to the dataset.

Predictive Modeling: Explain the machine learning model you trained, how you finalized your choice of algorithm, and the evaluation techniques you used to assess its accuracy.

Data Visualization: Communicate the findings through visualization and describe the feedback you received.


How to Answer Case Study-Based Data Science Interview Questions?

During the interview, you can also be asked to solve and explain open-ended, real-world case studies. This case study can be relevant to the organization you are interviewing for. The key to answering this is to have a well-defined framework in your mind that you can implement in any case study, and we uncover that framework here.

Ensure that you read about the company and its work on its official website before appearing for the data science job interview. Also, research the position you are interviewing for and understand the JD (job description). Read about the domain and businesses they are associated with. This will give you a good idea of what questions to expect.

As case study interviews are usually open-ended, you can solve the problem in many ways. A general mistake is jumping to the answer straight away.

Try to understand the context of the business case and the key objective. Uncover the details kept intentionally hidden by the interviewer. Here is a list of questions you might ask if you are being interviewed for a financial institution -

Does the dataset include all transactions from Bank or transactions from some specific department like loans, insurance, etc.?

Is the customer data provided pre-processed, or do I need to run a statistical test to check data quality?

Which segment of borrowers is your business targeting or focusing on? Which parameters can be used to avoid bias during loan disbursement?


Make informed, well-thought-out assumptions to simplify the problem. Talk about your assumptions with the interviewer and explain why you would want to make them. Try to narrow down to the key objectives that you can solve. Here are a few examples:

As car sales increase consistently over time with no significant spikes, I assume seasonal changes do not impact your car sales. Hence I would prefer a model that excludes the seasonality component.

As confirmed by you, the incoming data does not require any preprocessing. Hence I will skip running statistical tests to check data quality and proceed directly to feature selection.

As IoT devices capture temperature data every minute but I am required to predict the weather daily, I would average the minute-level data over each day to obtain daily data.
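The last assumption, averaging minute-level readings into daily values, can be sketched as follows. The input format (pairs of timestamp and temperature) is an assumption for illustration; with real data you would more likely use a dataframe library's resampling.

```python
from collections import defaultdict
from datetime import date, datetime

def daily_mean(readings):
    """Averages (datetime, temperature) readings per calendar day.

    Returns a dict mapping each date to that day's mean temperature.
    """
    totals = defaultdict(lambda: [0.0, 0])  # date -> [sum, count]
    for timestamp, temperature in readings:
        bucket = totals[timestamp.date()]
        bucket[0] += temperature
        bucket[1] += 1
    return {day: s / n for day, (s, n) in sorted(totals.items())}
```

It is worth stating the trade-off to the interviewer: a daily mean discards within-day variation, so you might also keep the daily minimum and maximum as extra features.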


Now that you have a clear and focused objective for the business case, you can start leveraging the 7-step framework we briefed upon above. Think of the mining and cleaning activities that you are required to perform. Talk about feature selection and why you would prefer some features over others, and lastly, how you would select the right machine learning model for the business problem. Here is an example for car purchase prediction from auctions:

First, I will prepare the relevant data by accessing the data available from various auctions, selecting only auctions that have completed. While selecting the data, I also need to check that the classes are not heavily imbalanced.

Next, I will apply feature engineering and selection to create and select relevant features such as car manufacturer, year of purchase, and automatic or manual transmission. I will iterate on this step if the results on the validation set are not good.

Since this is a classification problem, I will try decision trees and random forests, as tree-based models tend to do well on tabular classification problems. If the scores are unsatisfactory, I can perform hyperparameter tuning to fine-tune the model and achieve better accuracy.

In the end, summarise the answer and explain how your solution is best suited for this business case and how the team can leverage it to gain more customers. For instance, building on the car purchase prediction example, your response can be:

For cars predicted to be good purchases at auction, dealers can buy those cars and minimize the overall losses they incur from buying bad cars.

Often, the company you are being interviewed for would select case study questions based on a business problem they are trying to solve or have already solved. Here we list down a few case study-based data science interview questions and the approach to answering those in the interviews. Note that these case studies are often open-ended, so there is no one specific way to approach the problem statement.

1. How would you improve the bank's existing state-of-the-art credit scoring of borrowers? How will you predict someone can face financial distress in the next couple of years?

Consider the interviewer has given you access to the dataset. As explained earlier, you can think of taking the following approach.

Ask Questions —

Q: What parameters does the bank consider for borrowers when calculating credit scores? Do these parameters vary among borrowers of different categories based on age group, income level, etc.?

Q: How do you define financial distress? What features are taken into consideration?

Q: Banks can lend different types of loans like car loans, personal loans, bike loans, etc. Do you want me to focus on any one loan category?

Discuss the Assumptions  —

Because the debt ratio is measured relative to monthly income, we assume that borrowers with a very high debt ratio (i.e., their loan value is much higher than their monthly income) are outliers.

Monthly income tends to vary (mainly on the upside) over two years. Cases where the monthly income is constant can be considered data entry issues and should be excluded from the analysis. I will use a regression model to fill in the missing values.


Building end-to-end Data Science Workflows —

Firstly, I will carefully select the relevant data for my analysis. I will exclude records with implausible values, such as people with extremely high debt ratios or inconsistent monthly income.

Next, I will identify the essential features and ensure they do not contain missing values, filling them in if they do. For instance, age seems to be a necessary feature for accepting or denying a mortgage. I will also check for class imbalance, since only a small percentage of borrowers in the complete dataset will be defaulters.

As this is a binary classification problem, I will start with logistic regression and slowly progress towards complex models like decision trees and random forests.

Conclude —

Banks play a crucial role in country economies. They decide who can get finance and on what terms and can make or break investment decisions. Individuals and companies need access to credit for markets and society to function.

You can leverage this credit scoring algorithm to determine whether or not a loan should be granted by predicting the probability that somebody will experience financial distress in the next two years.

2. At an e-commerce platform, how would you classify fruits and vegetables from the image data?

Q: Do the images in the dataset contain multiple fruits and vegetables, or would each image have a single fruit or a vegetable?

Q: Can you help me understand the number of estimated classes for this classification problem?

Q: What would be an ideal dimension of an image? Do the images vary within the dataset? Are these color images or grey images?

Upon asking the above questions, let us assume the interviewer confirms that each image would contain either one fruit or one vegetable. Hence there won't be multiple classes in a single image, and our website has roughly 100 different varieties of fruits and vegetables. For simplicity, the dataset contains 50,000 images, each with dimensions of 100 x 100 pixels.

Assumptions and Preprocessing—

I need to evaluate the training and testing sets, so I will check for any imbalance within the dataset. The number of training images per class should be consistent: if there are n images for class A, then class B should also have about n training images (within a variance of 5 to 10%). With 50,000 images across 100 classes, the average is close to 500 images per class.
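The balance check can be sketched as below. The choice of the median class size as the reference point and the 10% default tolerance are assumptions made for this example; the class names in the usage note are made up.

```python
from collections import Counter
from statistics import median

def imbalanced_classes(labels, tolerance=0.10):
    """Returns {class: count} for classes whose image count deviates from
    the median class size by more than `tolerance` (as a fraction)."""
    counts = Counter(labels)
    reference = median(counts.values())
    return {cls: n for cls, n in counts.items()
            if abs(n - reference) > tolerance * reference}
```

For instance, with 500 apple, 510 banana, 490 carrot, and 300 kiwi images, only kiwi would be flagged, which tells you where to collect or augment more data.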

I will then split the data into training and testing sets in an 80:20 ratio (or 70:30, whichever suits you best). I assume that the images provided might not cover all possible angles of the fruits and vegetables, so the model may overfit. I will keep techniques like data augmentation handy in case I face overfitting issues while training the model.
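One way to do the 80:20 split while preserving the per-class balance is a stratified split. Stratification is an addition of this sketch rather than something the answer above requires, and the function name and seed are illustrative.

```python
import random
from collections import defaultdict

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Splits sample indices into train and test sets so that each class
    contributes roughly `test_fraction` of its samples to the test set."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)
```

Any data augmentation should then be applied to the training indices only, so the test set still reflects the original image distribution.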

End to End Data Science Workflow —

As this is a large dataset, I would first check the availability of GPUs, as processing 50,000 images is computationally intensive. I will use CUDA to move the training data to the GPU for training.

I choose to develop a convolutional neural network (CNN), as these networks tend to extract better features from images than a feed-forward neural network. Feature extraction is essential when building a deep neural network. Also, because convolutional layers share weights across the image, a CNN needs far fewer parameters than a fully connected feed-forward network on the same input.
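A quick parameter count shows why convolutional layers are attractive for images. The sketch assumes 100 x 100 RGB inputs (the number of color channels is not stated in the problem), a hypothetical first dense layer of 128 units, and a hypothetical first convolutional layer of 32 filters of size 3 x 3.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

def conv2d_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights plus biases of a 2D convolutional layer.

    The count does not depend on the image size, because the same
    kernels are slid across the whole image.
    """
    return kernel_h * kernel_w * in_channels * out_channels + out_channels

dense_first_layer = dense_params(100 * 100 * 3, 128)  # 3,840,128 parameters
conv_first_layer = conv2d_params(3, 3, 3, 32)         # 896 parameters
```

Under these assumptions the dense layer has over four thousand times as many parameters as the convolutional layer, which also makes it more prone to overfitting on 50,000 images.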

I will also consider techniques like batch normalization and learning rate scheduling to improve the accuracy and overall performance of the model. If I face overfitting issues on the validation set, I will use techniques like dropout and color normalization to overcome them.

Once the model is trained, I will test it on sample test images to see its behavior. It is quite common for a model that does well on the training set to perform poorly on the test set. Hence, evaluating the model on the test set is an important part of the evaluation.

The fruit classification model can be helpful to the e-commerce industry, as it would help them classify the images and tag the fruits and vegetables with their categories. The fruit and vegetable processing industries can use the model to sort produce into the correct categories and accordingly instruct the device to place them on the conveyor belts involved in packaging and shipping to customers.


3. How would you determine whether Netflix focuses more on TV shows or Movies?

Q: Should I include animation series and movies while doing this analysis?

Q: What is the business objective? Do you want me to analyze a particular genre like action, thriller, etc.?

Q: What is the targeted audience? Is this focus on children below a certain age or for adults?

Let us assume the interviewer responds by confirming that you must include animation series and movies in the analysis. The business intends to perform this analysis over all the genres, and the targeted audience includes both adults and children.

Assumptions —

It would be convenient to break this analysis down by geography. Assuming the US and India are among the largest content producers globally, I would restrict the initial analysis to these two countries. Once the initial hypothesis is established, the analysis can be scaled to other countries.

While analyzing movies in India, the distribution of releases across months can be an important metric. For example, there tend to be many releases around the holiday season (Diwali and Christmas) in November and December, which should be taken into account.

End to End Data Science Workflow —

Firstly, we need to select only the data related to movies and TV shows from the entire dataset. I would also need to check the completeness of the data, for example that each record has a release year, a release month, country information, etc.

After preprocessing the dataset, I will filter the data down to the countries/geographies I am interested in. Then I can perform EDA to understand how movies and TV shows relate to ratings, categories (drama, comedy, etc.), actors, and so on.
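The filtering and the first EDA step can be sketched as below. The rows and the field names (`type`, `country`, `release_year`) are hypothetical stand-ins for whatever the real catalogue provides; the sketch only counts titles per content type in the chosen countries and skips records with a missing release year.

```python
from collections import Counter

# Hypothetical catalogue rows; field names and values are assumptions.
catalogue = [
    {"title": "A", "type": "Movie", "country": "India", "release_year": 2019},
    {"title": "B", "type": "TV Show", "country": "India", "release_year": 2020},
    {"title": "C", "type": "Movie", "country": "India", "release_year": None},
    {"title": "D", "type": "Movie", "country": "United States", "release_year": 2018},
    {"title": "E", "type": "TV Show", "country": "United States", "release_year": 2021},
    {"title": "F", "type": "TV Show", "country": "United States", "release_year": 2021},
    {"title": "G", "type": "Movie", "country": "Japan", "release_year": 2020},
    {"title": "H", "type": "Movie", "country": "India", "release_year": 2021},
]


def type_counts_by_country(rows, countries):
    """Count titles per content type for the selected countries only."""
    counts = {country: Counter() for country in countries}
    for row in rows:
        # Skip other countries and incomplete records (missing release year).
        if row["country"] in counts and row["release_year"] is not None:
            counts[row["country"]][row["type"]] += 1
    return counts


def type_shares(counter):
    """Convert raw counts into shares that sum to 1 (empty dict if no rows)."""
    total = sum(counter.values())
    return {kind: n / total for kind, n in counter.items()} if total else {}


counts = type_counts_by_country(catalogue, ["India", "United States"])
india_shares = type_shares(counts["India"])
```

Comparing shares rather than raw counts keeps a large catalogue in one country from dominating the comparison with a smaller one.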

Lastly, I would focus on recommendation clicks and revenue to understand which of the two generates more revenue. The company would likely prefer whichever category (TV shows or movies) generates the higher revenue.

This analysis would help the company invest in the right venture and generate more revenue based on their customer preference. This analysis would also help understand the best or preferred categories, time in the year to release, movie directors, and actors that their customers would like to see.


4. How would you detect fake news on social media?

Q: When you say social media, does it mean all the apps available on the internet like Facebook, Instagram, Twitter, YouTube, etc.?

Q: Does the analysis include news titles? Does the news description carry significance?

Q: These platforms contain content in multiple languages. Should the analysis be multilingual?

Let us assume the interviewer responds by confirming that the news feeds are available only from Facebook. The news title and the news details are available in the same block and are not segregated. For simplicity, we would prefer to categorize only the news available in the English language.

Assumptions and Data Preprocessing —

I would first segregate the news title from the description. The news title usually contains the key phrases and the intent behind the news. Also, processing only the titles requires less computation than processing the whole text, which leads to a more efficient solution.

I would also check for data imbalance. An imbalanced dataset can bias the model toward a particular class.

I would also like to start with a subset of news that focuses on a specific category like sports, finance, etc. This subset would help me set up a baseline model, which can be tweaked later based on the requirement, and I will gradually widen the model's scope from there.

Firstly, it would be essential to select the data based on the chosen category. I take up sports as a category I want to start my analysis with.

I will first clean the dataset by checking for null records. Once this check is done, the data must be formatted before it can be fed to a neural network. I will write a function to remove characters like !”#$%&’()*+,-./:;<=>?@[]^_`{|}~, as these characters do not add any value for deep neural network learning. I will also apply stopword removal to drop words like ‘and’, ‘is’, etc. from the vocabulary.
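A minimal sketch of such a cleaning function is shown below, using only the standard library. The stopword set is a tiny illustrative assumption (a real project would use a fuller list), and `string.punctuation` covers ASCII punctuation only, so typographic quotes would need to be added separately.

```python
import string

# Tiny illustrative stopword list (an assumption, not a complete list).
STOPWORDS = {"a", "an", "and", "is", "the", "of", "to", "in"}

# Translation table that deletes every ASCII punctuation character.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def clean_title(text):
    """Lowercase a title, strip ASCII punctuation, and drop stopwords."""
    if not text:  # handles None and empty strings (null records)
        return []
    no_punct = text.lower().translate(_PUNCT_TABLE)
    return [token for token in no_punct.split() if token not in STOPWORDS]


tokens = clean_title("Breaking: The striker is OUT of the final!!!")
print(tokens)  # ['breaking', 'striker', 'out', 'final']
```

Returning a list of tokens rather than a joined string makes the output directly usable by a bag-of-words or TF-IDF step.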

Then I will employ NLP techniques like bag of words or TF-IDF, depending on which suits the data. Bag of words can be faster, while TF-IDF is somewhat slower but can be more accurate because it down-weights words that appear in most documents. The choice of technique would also depend upon the business inputs.
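The difference between the two representations can be sketched in a few lines. This uses one common textbook variant, tf = count / document length and idf = log(N / document frequency); library implementations often apply smoothing, so their numbers will differ. The example documents are made up for illustration.

```python
import math
from collections import Counter


def bag_of_words(tokens):
    """Raw term counts for a single tokenized document."""
    return Counter(tokens)


def tf_idf(documents):
    """TF-IDF weights for a list of tokenized documents.

    Uses tf = count / document_length and idf = log(N / document_frequency).
    Returns one {term: weight} dict per document.
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for tokens in documents:
        doc_freq.update(set(tokens))  # count each term once per document

    weights = []
    for tokens in documents:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical, already-cleaned sports headlines.
docs = [
    ["striker", "scores", "goal"],
    ["striker", "injured"],
    ["team", "wins", "final", "goal"],
]
weights = tf_idf(docs)
```

In the second headline, "injured" gets a higher weight than "striker" because "striker" also appears in another document, which is the down-weighting of common words that raw counts cannot express.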

I will now split the data into training and testing sets, train a machine learning model, and check its performance. Since the dataset is text-heavy, models like naive Bayes tend to perform well in these situations.

Conclude  —

Fake news is published on social media and by some news outlets to increase readership or as part of psychological warfare. Often the goal is to profit through clickbait: flashy headlines or designs that pique users' curiosity and lure them into clicking links, which increases advertising revenue. The trained model will help curb such news and add value to the reader's time.


5. How would you forecast the price of a Nifty 50 stock?

Q: Do you want me to forecast the Nifty 50 index/tracker or the price of a specific stock within the Nifty 50?

Q: What do you want me to forecast? Is it the opening price, closing price, VWAP, highest of the day, etc.?

Q: Do you want me to forecast daily, weekly, or monthly prices?

Q: Can you tell me more about the historical data available? Do we have ten years or 15 years of recorded data?

With all these questions asked, let us assume the interviewer responds by saying that you should pick one stock from the Nifty 50 and forecast its daily average price. The company has historical data for the last 20 years.

Assumptions and Data preprocessing —

As we forecast the daily average price, I would take VWAP as my target variable. VWAP stands for Volume Weighted Average Price: the total traded value (the sum of price times volume over all trades) divided by the total volume traded over a given time.
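For reference, VWAP over a period is the sum of price times volume across trades, divided by the sum of volume. A minimal sketch, with made-up trade prices and volumes:

```python
def vwap(prices, volumes):
    """Volume Weighted Average Price: sum(price * volume) / sum(volume)."""
    if len(prices) != len(volumes):
        raise ValueError("prices and volumes must have the same length")
    total_volume = sum(volumes)
    if total_volume == 0:
        raise ValueError("total volume must be positive")
    traded_value = sum(p * v for p, v in zip(prices, volumes))
    return traded_value / total_volume


# Hypothetical trades within one day.
day_vwap = vwap([100.0, 102.0, 101.0], [10, 30, 60])
```

Here the result sits closer to 101 than the simple average of the three prices would suggest, because most of the volume traded at 101.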

Solving this data science case study requires tracking the average price over a period, which makes it a classical time series problem. Hence I would refrain from using a classical regression model on the time series data, as there is a separate family of models (like ARIMA, Auto ARIMA, SARIMA, etc.) for such datasets.

Like any other dataset, I will first check for null values and work out what percentage of the records they affect. If that percentage is small, I would prefer to drop those records.

Now I will perform exploratory data analysis to understand how the average price has varied over the last 20 years. This would also help me understand the trend and seasonality components of the time series data. In addition, I will use techniques like the Dickey-Fuller test to check whether the time series is stationary.

Usually, such a time series is not stationary, so I can decompose it to understand whether it is additive or multiplicative in nature. I can then use existing techniques like differencing, rolling statistics, or transformations to make the time series stationary.
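The three techniques named above can be sketched with the standard library alone. The function names and the toy series are assumptions for illustration; in practice these steps are usually done with a data analysis library.

```python
import math


def difference(series, lag=1):
    """Differencing: subtract the value `lag` steps earlier to remove trend."""
    return [series[i] - series[i - lag] for i in range(lag, len(series))]


def log_transform(series):
    """Log transform: dampens growth in variance (requires positive values)."""
    return [math.log(x) for x in series]


def rolling_mean(series, window):
    """Rolling mean over a fixed window, useful for inspecting the trend."""
    return [
        sum(series[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(series))
    ]


# A toy series with an accelerating upward trend.
series = [1, 3, 6, 10]
first_diff = difference(series)        # [2, 3, 4]
second_diff = difference(first_diff)   # [1, 1]
```

In this toy case the second difference is constant, which is why differencing is sometimes applied more than once before the series looks stable.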

Lastly, once the time series is stationary, I will split the data into train and test sets based on the dates and use techniques like ARIMA or Facebook Prophet to train the forecasting model.
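A date-based split differs from a random split in that every training observation must come before every test observation. A minimal sketch, with hypothetical daily prices and an arbitrary cutoff date:

```python
from datetime import date


def chronological_split(rows, cutoff):
    """Split (date, value) rows: train is strictly before cutoff, test is the rest."""
    ordered = sorted(rows, key=lambda row: row[0])
    train = [row for row in ordered if row[0] < cutoff]
    test = [row for row in ordered if row[0] >= cutoff]
    return train, test


# Hypothetical daily average prices, deliberately out of order.
prices = [
    (date(2023, 1, 3), 101.0),
    (date(2023, 1, 2), 100.0),
    (date(2023, 1, 4), 102.5),
    (date(2023, 1, 5), 101.8),
    (date(2023, 1, 6), 103.1),
]
train, test = chronological_split(prices, cutoff=date(2023, 1, 5))
```

Shuffling before splitting would leak future prices into the training set, which makes the test score look better than the model would perform on genuinely unseen dates.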

Some of the major applications of such time series prediction are in stocks and financial trading, online and offline retail sales analysis, and medical records such as heart rate, ECG (EKG), and MRI.

Time series datasets generate a lot of enthusiasm among data scientists. There are many different ways to approach a time series problem, and the process mentioned above is only one of the known techniques.


6. How would you forecast the weekly sales of Walmart? Which department is impacted most during the holidays?

Q: Walmart usually operates three different types of stores: supermarkets, discount stores, and neighborhood stores. Which store type's data shall I pick to get started with my analysis? Are the sales tracked in US dollars?

Q: How would I identify holidays in the historical data provided? Is the store closed during Black Friday week, Super Bowl week, or Christmas week?

Q: What are the evaluation or the loss criteria? How many departments are present across all store types?

Let us assume the interviewer responds by saying you must forecast weekly sales department-wise and not store type-wise, in US dollars. You would be provided with a flag within the dataset that marks the weeks containing holidays. There are over 80 departments across three types of stores.

As we predict the weekly sales, I would take weekly sales to be the target variable for our model.

Since we are tracking sales weekly, we will use a regression model to predict our target variable, “Weekly_Sales,” which forms a grouped/hierarchical time series. We will explore the following categories of models, engineer features, and tune hyperparameters to choose the model with the best fit.

- Linear models

- Tree models

- Ensemble models

I will consider MAE, RMSE, and R2 as evaluation criteria.
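For reference, the three criteria are mean absolute error (MAE), root mean squared error (RMSE), and the coefficient of determination (R2). A minimal sketch of each, using made-up actual and predicted sales values:

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average size of the errors, in sales units."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: penalizes large errors more than MAE does."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


def r2(y_true, y_pred):
    """R2: share of variance explained (undefined if y_true is constant)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Hypothetical actual vs. predicted weekly sales (in thousands of dollars).
actual = [3.0, 5.0, 7.0]
predicted = [2.0, 5.0, 9.0]
print(mae(actual, predicted), r2(actual, predicted))  # 1.0 0.375
```

MAE and RMSE are in the same units as the sales figures, which makes them easy to explain to the business, while R2 is unitless and easier to compare across departments of very different sizes.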

End to End Data Science Workflow-

The foremost step is to figure out essential features within the dataset. I would explore store information regarding their size, type, and the total number of stores present within the historical dataset.

The next step would be to perform feature engineering; as we have weekly sales data available, I would prefer to extract features like ‘WeekofYear’, ‘Month’, ‘Year’, and ‘Day’. This would help the model to learn general trends.
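Extracting these calendar features can be sketched with the standard library. The feature names follow the ones listed above; the use of ISO week numbering for ‘WeekofYear’ is an assumption, since the original does not say which week convention is intended.

```python
from datetime import date


def date_features(d):
    """Calendar features for one sales date (ISO week numbering assumed)."""
    return {
        "WeekofYear": d.isocalendar()[1],
        "Month": d.month,
        "Year": d.year,
        "Day": d.day,
    }


# Example: the Friday of Black Friday week in 2012.
features = date_features(date(2012, 11, 23))
print(features)  # {'WeekofYear': 47, 'Month': 11, 'Year': 2012, 'Day': 23}
```

The week-of-year feature is the one most likely to capture recurring holiday peaks, because the same holiday tends to fall in the same week number each year.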

Now I will create store and dept rank features as this is one of the end goals of the given problem. I would create these features by calculating the average weekly sales.
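One way to build such rank features is to average weekly sales per store or department and rank the averages. The rows and the `Store` and `Dept` column names below are hypothetical; only “Weekly_Sales” is named in the text above.

```python
from collections import defaultdict


def average_sales_by(rows, key):
    """Average Weekly_Sales grouped by the given column (e.g. 'Dept')."""
    totals, counts = defaultdict(float), defaultdict(int)
    for row in rows:
        totals[row[key]] += row["Weekly_Sales"]
        counts[row[key]] += 1
    return {group: totals[group] / counts[group] for group in totals}


def rank_by_average(averages):
    """Map each group to its rank; rank 1 has the highest average sales."""
    ordered = sorted(averages, key=averages.get, reverse=True)
    return {group: rank for rank, group in enumerate(ordered, start=1)}


# Hypothetical weekly sales rows.
sales = [
    {"Store": 1, "Dept": 1, "Weekly_Sales": 20000.0},
    {"Store": 1, "Dept": 2, "Weekly_Sales": 50000.0},
    {"Store": 2, "Dept": 1, "Weekly_Sales": 10000.0},
    {"Store": 2, "Dept": 2, "Weekly_Sales": 30000.0},
    {"Store": 2, "Dept": 3, "Weekly_Sales": 5000.0},
]
dept_rank = rank_by_average(average_sales_by(sales, "Dept"))
store_rank = rank_by_average(average_sales_by(sales, "Store"))
```

To avoid leaking information, the averages should be computed on the training period only and then applied to the test period.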

Now I will perform exploratory data analysis (EDA) to understand what story the data has to tell. I will analyze the store-level and department-level weekly sales in the historical data to identify seasonality and trends. I will also look at weekly sales against the store and against the department to understand the significance of these features and decide whether they should be retained and passed to the machine learning models.

After feature engineering and selection, I will set up a baseline model and run the evaluation considering MAE, RMSE, and R2. As this is a regression problem, I will begin with simple models like linear regression and an SGD regressor. Later, if the need arises, I will move towards more complex models such as a decision tree regressor, an LGBM regressor, and an SGB regressor.

Sales forecasting can play a significant role in the company’s success. Accurate sales forecasts allow salespeople and business leaders to make smarter decisions when setting goals, hiring, budgeting, prospecting, and other revenue-impacting factors. The solution mentioned above is one of the many ways to approach this problem statement.

With this, we come to the end of the post. But let us do a quick summary of the techniques we learned and how they can be implemented. We would also like to provide you with some practice case study questions to help you build up your thought process for the interview.

7. Considering an organization has a high attrition rate, how would you predict if an employee is likely to leave the organization?

8. How would you identify the best cities and countries for startups in the world?

9. How would you estimate the impact on air quality across geographies during COVID-19?

10. A Company often faces machine failures at its factory. How would you develop a model for predictive maintenance?

Do not get intimidated by the problem statement; focus on your approach:

Ask questions to get clarity

Discuss assumptions, don't assume things. Let the data tell the story or get it verified by the interviewer.

Build Workflows — Take a few minutes to put together your thoughts; start with a more straightforward approach.

Conclude — Summarize your answer and explain how it best suits the use case provided.

We hope these case study-based data scientist interview questions will give you more confidence to crack your next data science interview.


About the Author

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,

© 2024 Iconiq Inc.


Machine Learning Interviews from FAANG, Snapchat, LinkedIn. I have offers from Snapchat, Coupang, Stitchfix etc. Blog: mlengineer.io.

khangich/machine-learning-interview


Minimum viable study plan for machine learning interviews.

Follow News about AI projects

- Most popular post: One lesson I learned after solving 500 leetcode questions

- Oct 10th: Machine Learning System Design course became the number 1 ML course on educative.