The complete guide to regression analysis.

What is regression analysis and why is it useful? While most of us have heard the term, understanding regression analysis in detail may be something you need to brush up on. Here’s what you need to know about this popular method of analysis.

When you rely on data to drive and guide business decisions, as well as predict market trends, just gathering and analyzing what you find isn’t enough — you need to ensure it’s relevant and valuable.

The challenge, however, is that so many variables can influence business data: market conditions, economic disruption, even the weather! As such, it’s essential you know which variables are affecting your data and forecasts, and what data you can discard.

And one of the most effective ways to determine data value and monitor trends (and the relationships between them) is to use regression analysis, a set of statistical methods used for the estimation of relationships between independent and dependent variables.

In this guide, we’ll cover the fundamentals of regression analysis, from what it is and how it works to its benefits and practical applications.


What is regression analysis?

Regression analysis is a statistical method. It’s used for analyzing different factors that might influence an objective – such as the success of a product launch, business growth, a new marketing campaign – and determining which factors are important and which ones can be ignored.

Regression analysis can also help leaders understand how different variables impact each other and what the outcomes are. For example, when forecasting financial performance, regression analysis can help leaders determine how changes in the business can influence revenue or expenses in the future.

Running an analysis of this kind, you might find that there’s a high correlation between the number of marketers employed by the company, the leads generated, and the opportunities closed.

This seems to suggest that a high number of marketers and a high number of leads generated both influence sales success. But do you need both factors to close those sales? By analyzing the effects of these variables on your outcome, you might learn that when leads increase but the number of marketers employed stays constant, there is no impact on the number of opportunities closed, but if the number of marketers increases, leads and closed opportunities both rise.

Regression analysis can help you tease out these complex relationships so you can determine which areas you need to focus on in order to get your desired results, and avoid wasting time with those that have little or no impact. In this example, that might mean hiring more marketers rather than trying to increase leads generated.

How does regression analysis work?

Regression analysis starts with variables that are categorized into two types: dependent and independent variables. The variables you select depend on the outcomes you’re analyzing.

Understanding variables:

1. Dependent variable

This is the main variable that you want to analyze and predict: for example, operational (O) data such as your quarterly or annual sales, or experience (X) data such as your net promoter score (NPS) or customer satisfaction score (CSAT).

These variables are also called response variables, outcome variables, or left-hand-side variables (because they appear on the left-hand side of a regression equation).

There are three easy ways to identify them:

- Is the variable measured as an outcome of the study?

- Does the variable depend on another in the study?

- Do you measure the variable only after other variables are altered?

2. Independent variable

Independent variables are the factors that could affect your dependent variables. For example, a price rise in the second quarter could make an impact on your sales figures.

You can identify independent variables with the following list of questions:

- Is the variable manipulated, controlled, or used as a subject grouping method by the researcher?

- Does this variable come before the other variable in time?

- Are you trying to understand whether or how this variable affects another?

Independent variables are often referred to differently in regression depending on the purpose of the analysis. You might hear them called:

Explanatory variables

Explanatory variables are those which explain an event or an outcome in your study. For example, explaining why your sales dropped or increased.

Predictor variables

Predictor variables are used to predict the value of the dependent variable. For example, predicting how much sales will increase when new product features are rolled out.

Experimental variables

These are variables that can be manipulated or changed directly by researchers to assess the impact. For example, assessing how different product pricing ($10 vs $15 vs $20) will impact the likelihood to purchase.

Subject variables (also called fixed effects)

Subject variables can’t be changed directly, but vary across the sample. For example, age, gender, or income of consumers.

Unlike experimental variables, you can’t randomly assign or change subject variables, but you can design your regression analysis to determine the different outcomes of groups of participants with the same characteristics. For example, ‘how do price rises impact sales based on income?’

Carrying out regression analysis

So regression is about the relationships between dependent and independent variables. But how exactly do you do it?

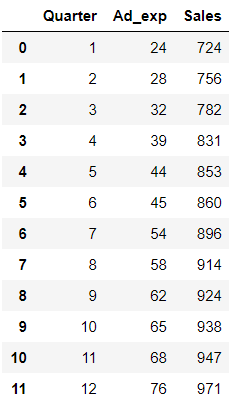

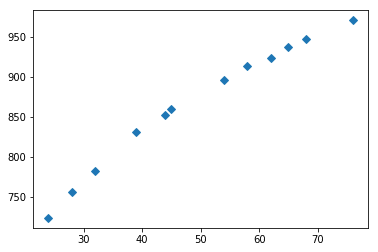

Assuming you have your data collection done already, the first and foremost thing you need to do is plot your results on a graph. Doing this makes interpreting regression analysis results much easier as you can clearly see the correlations between dependent and independent variables.

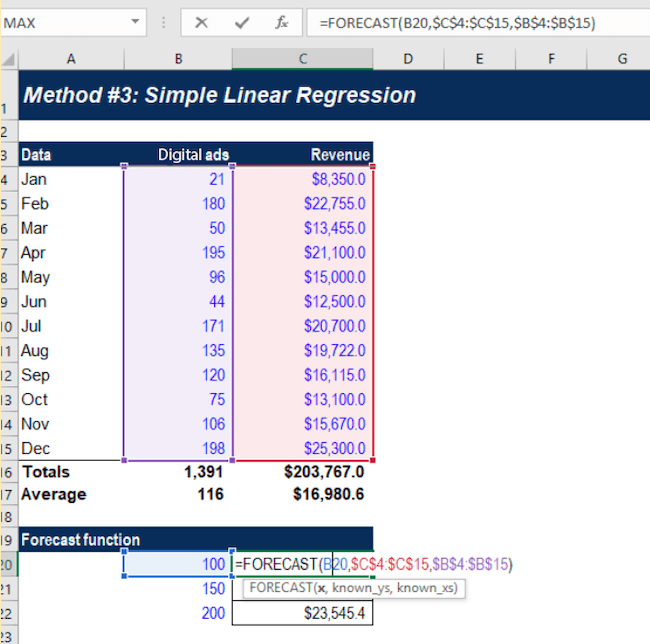

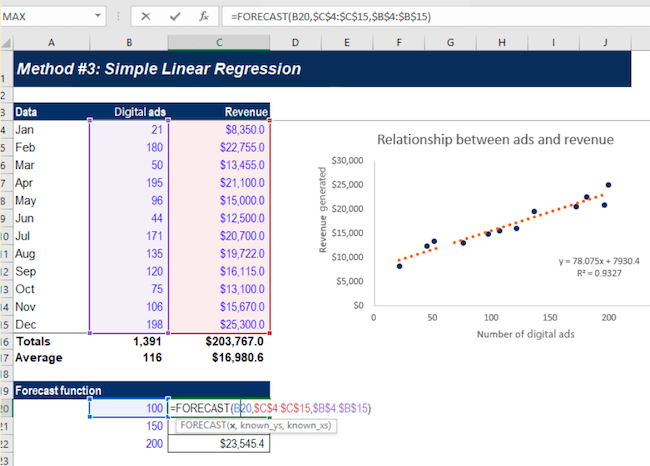

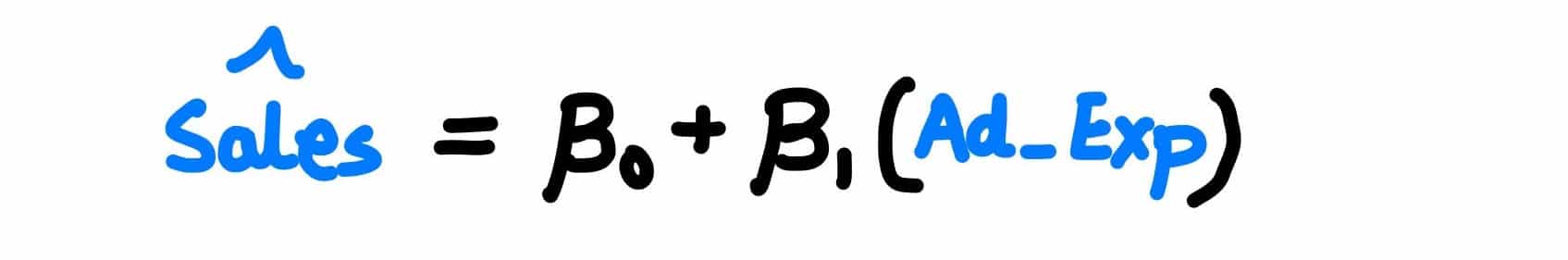

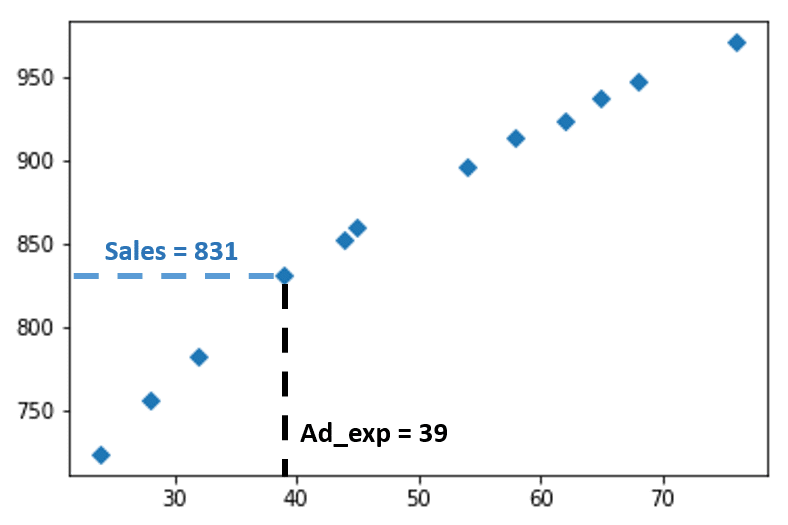

Let’s say you want to carry out a regression analysis to understand the relationship between the number of ads placed and revenue generated.

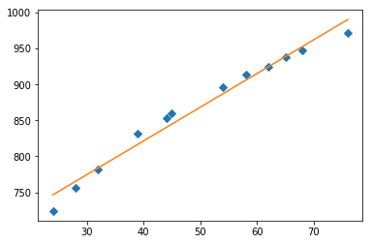

On the Y-axis, you place the revenue generated. On the X-axis, the number of digital ads. By plotting the information on the graph, and drawing a line (called the regression line) through the middle of the data, you can see the relationship between the number of digital ads placed and revenue generated.

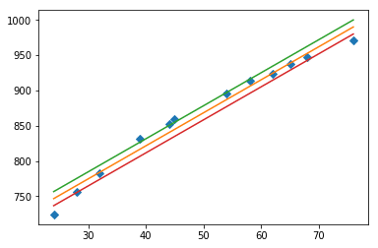

This regression line is the line that provides the best description of the relationship between your independent variables and your dependent variable. In this example, we’ve used a simple linear regression model.

Statistical analysis software can draw this line for you and precisely calculate the regression line. The software then provides a formula for the slope of the line, adding further context to the relationship between your dependent and independent variables.
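As a rough sketch of what such software computes behind the scenes, the ordinary least-squares slope and intercept of the regression line can be worked out directly. The `fit_line` helper and the ad and revenue figures below are hypothetical and chosen only for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares with one independent variable.

    Returns (slope, intercept) of the line that minimizes the sum of
    squared vertical distances between the line and the data points.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Co-variation of x and y, and variation of x, around their means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: digital ads placed (X-axis) and revenue generated (Y-axis)
ads = [1, 2, 3, 4, 5]
revenue = [12.0, 19.0, 31.0, 42.0, 51.0]

slope, intercept = fit_line(ads, revenue)
print(f"revenue = {intercept:.1f} + {slope:.1f} * ads")
```

Here the slope is the estimated change in revenue for each additional ad, and the intercept is the revenue the line predicts when no ads are placed.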

Simple linear regression analysis

A simple linear model uses a single straight line to determine the relationship between a single independent variable and a dependent variable.

This regression model is mostly used when you want to determine the relationship between two variables (like price increases and sales) or the value of the dependent variable at certain points of the independent variable (for example the sales levels at a certain price rise).

While linear regression is useful, it does require you to make some assumptions.

For example, it requires you to assume that:

- the data was collected using a statistically valid sample collection method that is representative of the target population

- the observed relationship between the variables can’t be explained by a ‘hidden’ third variable – in other words, there are no spurious correlations

- the relationship between the independent variable and dependent variable is linear – meaning that the best fit along the data points is a straight line and not a curved one

Multiple regression analysis

As the name suggests, multiple regression analysis is a type of regression that uses multiple variables. It uses multiple independent variables to predict the outcome of a single dependent variable. Of the various kinds of multiple regression, multiple linear regression is one of the best-known.

Multiple linear regression is a close relative of the simple linear regression model in that it looks at the impact of several independent variables on one dependent variable. However, like simple linear regression, multiple regression analysis also requires you to make some basic assumptions.

For example, you will be assuming that:

- there is a linear relationship between the dependent and independent variables (it creates a straight line and not a curve through the data points)

- the independent variables aren’t highly correlated in their own right
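One informal way to check the second assumption is to compute the correlation between each pair of independent variables before fitting the model. The sketch below uses the Pearson correlation coefficient. The variable names, the data, and the 0.8 cut-off are all hypothetical, and the threshold is a rule of thumb rather than a fixed standard.

```python
import math
from itertools import combinations


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def highly_correlated_pairs(variables, threshold=0.8):
    """Return pairs of variable names whose |r| exceeds the threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(variables.items(), 2):
        if abs(pearson_r(a, b)) > threshold:
            flagged.append((name_a, name_b))
    return flagged


# Hypothetical independent variables
variables = {
    "ad_spend": [10, 20, 30, 40, 50],
    "email_sends": [1.0, 2.1, 2.9, 4.2, 5.0],  # moves almost in lockstep with ad_spend
    "sentiment": [3, 1, 4, 1, 5],
}

flagged = highly_correlated_pairs(variables)
print(flagged)
```

A flagged pair suggests the two variables carry overlapping information, so their individual effects may be hard to separate in the regression.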

An example of multiple linear regression would be an analysis of how marketing spend, revenue growth, and general market sentiment affect the share price of a company.

With multiple linear regression models you can estimate how these variables will influence the share price, and to what extent.
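To make the idea concrete, here is a minimal sketch of multiple linear regression fitted with the normal equations, using only the Python standard library. The two independent variables, the data, and the helper names `solve` and `fit_multiple` are assumptions for illustration. The data is constructed without noise so that the recovered coefficients are easy to verify, and dedicated statistical software would normally be used instead.

```python
def solve(a, b):
    """Solve the linear system a * x = b by Gauss-Jordan elimination.

    Uses partial pivoting. Assumes the system has a unique solution
    (that is, the independent variables are not perfectly collinear).
    """
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_multiple(rows, ys):
    """Least-squares coefficients [intercept, b1, b2, ...]."""
    X = [[1.0] + list(r) for r in rows]  # leading 1.0 models the intercept
    k = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * y for x, y in zip(X, ys)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical observations: (marketing_spend, market_sentiment) per period
rows = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 6), (6, 5)]
# Share price built as 5 + 2 * spend + 3 * sentiment (no noise, for clarity)
share_price = [5 + 2 * s + 3 * m for s, m in rows]

coefficients = fit_multiple(rows, share_price)
print([round(c, 6) for c in coefficients])
```

Each coefficient estimates how much the dependent variable changes when that independent variable rises by one unit while the others are held constant.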

Multivariate linear regression

Multivariate linear regression involves more than one dependent variable as well as multiple independent variables, making it more complicated than linear or multiple linear regressions. However, this also makes it much more powerful and capable of making predictions about complex real-world situations.

For example, if an organization wants to establish or estimate how the COVID-19 pandemic has affected employees in its different markets, it can use multivariate linear regression, with the different geographical regions as dependent variables and the different facets of the pandemic as independent variables (such as mental health self-rating scores, proportion of employees working at home, lockdown durations and employee sick days).

Through multivariate linear regression, you can look at relationships between variables in a holistic way and quantify the relationships between them. As you can clearly visualize those relationships, you can make adjustments to dependent and independent variables to see which conditions influence them. Overall, multivariate linear regression provides a more realistic picture than looking at a single variable.

However, because multivariate techniques are complex, they involve high-level mathematics that require a statistical program to analyze the data.

Logistic regression

Logistic regression models the probability of a binary outcome based on independent variables.

So, what is a binary outcome? It’s when there are only two possible scenarios: either the event happens (1) or it doesn’t (0). Examples include yes/no outcomes, pass/fail outcomes, and so on. In other words, the outcome can be described as falling into one of two categories.

Logistic regression makes predictions based on independent variables that are assumed or known to have an influence on the outcome. For example, the probability of a sports team winning their game might be affected by independent variables like weather, day of the week, whether they are playing at home or away and how they fared in previous matches.
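The sports example can be sketched as a small logistic regression fitted by gradient descent. Everything here is hypothetical: the two features (home game, wins in the last five matches), the match records, and the learning rate and iteration count. A statistics package would typically fit the model with a more robust optimizer.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, x):
    """Probability of the outcome being 1; weights[0] is the intercept."""
    return sigmoid(weights[0] + sum(w * xi for w, xi in zip(weights[1:], x)))


def fit_logistic(rows, labels, learning_rate=0.1, epochs=5000):
    """Fit logistic regression by batch gradient descent on the log-loss."""
    n = len(rows)
    weights = [0.0] * (len(rows[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for x, y in zip(rows, labels):
            error = predict_proba(weights, x) - y
            grad[0] += error
            for j, xi in enumerate(x):
                grad[j + 1] += error * xi
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad)]
    return weights


# Hypothetical match records: (home_game, wins_in_last_five) and result (1 = win)
games = [(1, 4), (1, 3), (0, 4), (1, 1), (0, 2), (0, 1),
         (1, 2), (0, 0), (0, 3), (1, 5), (1, 4), (0, 1)]
won = [1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 0, 1]

weights = fit_logistic(games, won)
p_strong = predict_proba(weights, (1, 5))  # at home, five recent wins
p_weak = predict_proba(weights, (0, 0))    # away, no recent wins
print(f"strong form: {p_strong:.2f}, weak form: {p_weak:.2f}")
```

Unlike linear regression, the output is a probability between 0 and 1, which can then be compared against a cut-off to classify each case as a likely win or loss.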

What are some common mistakes with regression analysis?

Across the globe, businesses are increasingly relying on quality data and insights to drive decision-making — but to make accurate decisions, it’s important that the data collected and statistical methods used to analyze it are reliable and accurate.

Using the wrong data or the wrong assumptions can result in poor decision-making, lead to missed opportunities to improve efficiency and savings, and — ultimately — damage your business long term.

- Assumptions

When running regression analysis, be it a simple linear or multiple regression, it’s really important to check that the assumptions your chosen method requires have been met. If your data points don’t conform to a straight line of best fit, for example, you need to apply additional statistical modifications to accommodate the non-linear data. For example, if you are looking at income data, which is typically heavily skewed and often closer to linear on a logarithmic scale, you can take the natural log of income as your variable and then transform the results back to the original scale after the model is created.
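A minimal sketch of the log-transform approach, assuming income is the dependent variable and years of experience is the independent variable (both hypothetical). The income figures are generated to grow by exactly 10% per year, so a straight line fits the logged values and the back-transformed prediction can be checked.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares with one independent variable: (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data: income grows multiplicatively, so it curves on a raw scale
years = [0, 2, 4, 6, 8]
income = [30000 * 1.1 ** t for t in years]

# Step 1: model the natural log of income, which is linear in years
log_income = [math.log(v) for v in income]
slope, intercept = fit_line(years, log_income)


# Step 2: transform predictions back to the original income scale
def predict_income(t):
    return math.exp(intercept + slope * t)


print(round(predict_income(10)))
```

On the log scale the slope is interpreted as an approximate proportional change: `math.exp(slope)` is the estimated growth factor per year.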

- Correlation vs. causation

It’s a well-worn phrase that bears repeating – correlation does not equal causation. While variables that are linked by causality will always show correlation, the reverse is not always true. Moreover, there is no statistic that can determine causality (although the design of your study overall can).

If you observe a correlation in your results, such as in the first example we gave in this article where there was a correlation between leads and sales, you can’t assume that one thing has influenced the other. Instead, you should use it as a starting point for investigating the relationship between the variables in more depth.

- Choosing the wrong variables to analyze

Before you use any kind of statistical method, it’s important to understand the subject you’re researching in detail. Doing so means you’re making informed choices of variables and you’re not overlooking something important that might have a significant bearing on your dependent variable.

- Model building

The variables you include in your analysis are just as important as the variables you choose to exclude. That’s because the strength of each independent variable is influenced by the other variables in the model. Other techniques, such as Key Drivers Analysis, are able to account for these variable interdependencies.

Benefits of using regression analysis

There are several benefits to using regression analysis to judge how changing variables will affect your business and to ensure you focus on the right things when forecasting.

Here are just a few of those benefits:

Make accurate predictions

Regression analysis is commonly used when forecasting and forward planning for a business. For example, when predicting sales for the year ahead, a number of different variables will come into play to determine the eventual result.

Regression analysis can help you determine which of these variables are likely to have the biggest impact based on previous events and help you make more accurate forecasts and predictions.

Identify inefficiencies

Using a regression equation a business can identify areas for improvement when it comes to efficiency, either in terms of people, processes, or equipment.

For example, regression analysis can help a car manufacturer determine order numbers based on external factors like the economy or environment.

They can then use the same regression equation to determine how many members of staff and how much equipment they need to meet orders.

Drive better decisions

Improving processes or business outcomes is always on the minds of owners and business leaders, but without actionable data, they’re simply relying on instinct, and this doesn’t always work out.

This is particularly true when it comes to issues of price. For example, to what extent will raising the price (and to what level) affect next quarter’s sales?

There’s no way to know this without data analysis. Regression analysis can help provide insights into the correlation between price rises and sales based on historical data.

How do businesses use regression? A real-life example

Marketing and advertising spending are common topics for regression analysis. Companies use regression when trying to assess the value of ad spend and marketing spend on revenue.

A typical example is using a regression equation to assess the correlation between ad costs and conversions of new customers. In this instance,

- our dependent variable (the factor we’re trying to assess the outcomes of) will be our conversions

- the independent variable (the factor we’ll change to assess how it changes the outcome) will be the daily ad spend

- the regression equation will try to determine whether an increase in ad spend has a direct correlation with the number of conversions we have

The analysis is relatively straightforward — using historical data from an ad account, we can use daily data to judge ad spend vs conversions and how changes to the spend alter the conversions.

By assessing this data over time, we can make predictions not only on whether increasing ad spend will lead to increased conversions but also what level of spending will lead to what increase in conversions. This can help to optimize campaign spend and ensure marketing delivers good ROI.
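The sketch below illustrates that workflow on hypothetical daily figures: fit a line to ad spend versus conversions, check how much of the variation the line explains (R²), and forecast conversions at a spend level inside the observed range. The helper names and the numbers are assumptions for illustration only.

```python
def fit_line(xs, ys):
    """Ordinary least squares with one independent variable: (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(xs, ys, slope, intercept):
    """Share of the variation in ys explained by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Hypothetical history from an ad account: daily spend and daily conversions
daily_spend = [100, 150, 200, 250, 300]
conversions = [12, 17, 21, 27, 31]

slope, intercept = fit_line(daily_spend, conversions)
fit_quality = r_squared(daily_spend, conversions, slope, intercept)

# Forecast within the observed spend range; extrapolating beyond it is riskier
forecast = intercept + slope * 275
print(f"extra conversions per extra unit of spend: {slope:.3f}")
print(f"R^2: {fit_quality:.3f}, forecast at 275: {forecast:.1f}")
```

A high R² here only says the line describes the historical data well. It does not by itself show that spend causes the conversions.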

This is an example of a simple linear model. If we wanted to build a more complex regression model, we could also factor in other independent variables such as seasonality, GDP, and the current reach of our chosen advertising networks.

By increasing the number of independent variables, we can get a better understanding of whether ad spend is resulting in an increase in conversions, whether it’s exerting an influence in combination with another set of variables, or if we’re dealing with a correlation with no causal impact – which might be useful for predictions anyway, but isn’t a lever we can use to increase sales.

Using the estimated effect of each independent variable, we can more accurately predict how spend will change the conversion rate of advertising.

Regression analysis tools

Regression analysis is an important tool when it comes to better decision-making and improved business outcomes. To get the best out of it, you need to invest in the right kind of statistical analysis software.

The best option is likely to be one that sits at the intersection of powerful statistical analysis and intuitive ease of use, as this will empower everyone from beginners to expert analysts to uncover meaning from data, identify hidden trends and produce predictive models without statistical training being required.

To help prevent costly errors, choose a tool that automatically runs the right statistical tests and visualizations and then translates the results into simple language that anyone can put into action.

With software that’s both powerful and user-friendly, you can isolate key experience drivers, understand what influences the business, apply the most appropriate regression methods, identify data issues, and much more.

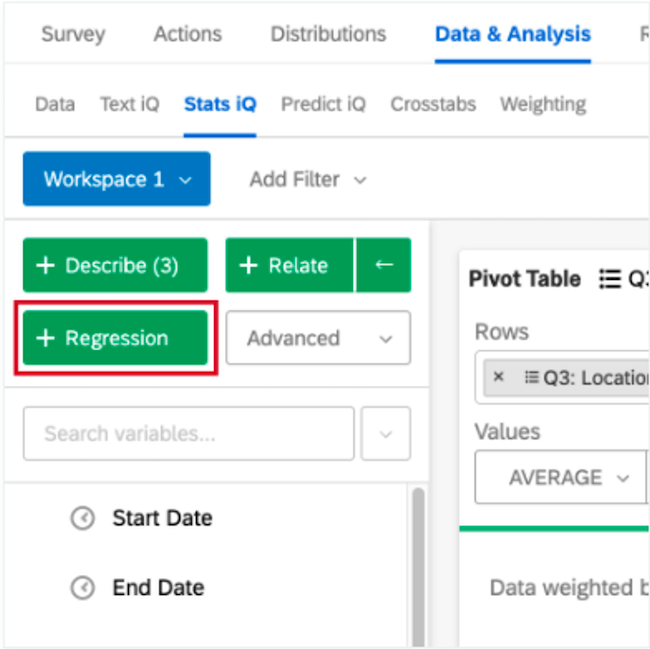

With Qualtrics’ Stats iQ™, you don’t have to worry about the regression equation because our statistical software will run the appropriate equation for you automatically based on the variable type you want to monitor. You can also use several equations, including linear regression and logistic regression, to gain deeper insights into business outcomes and make more accurate, data-driven decisions.


The Strategic Value of Regression Analysis in Marketing Research

by Michael Lieberman, on December 14, 2023

Regression analysis offers significant value in modern business and research contexts. This article explores the strategic importance of regression analysis to shed light on its diverse applications and benefits. Included are several different case studies to help bring the concept to life.

Understanding Regression Analysis in Marketing

Regression analysis in marketing is used to examine how independent variables—such as advertising spend, demographics, pricing, and product features—influence a dependent variable, typically a measure of consumer behavior or business performance. The goal is to create models that capture these relationships accurately, allowing marketers to make informed decisions.

Benefits of Regression Analysis in Marketing

- Data-driven decisions: Regression analysis empowers marketers to make data-driven decisions, reducing reliance on intuition and guesswork. This approach leads to more accurate and strategic marketing efforts.

- Efficiency and cost savings: By optimizing marketing campaigns and resource allocation, regression analysis can significantly improve efficiency and cost-effectiveness. Companies can achieve better results with the same or fewer resources.

- Personalization: Understanding consumer behavior through regression analysis allows for personalized marketing efforts. Tailored messages and offers can lead to higher engagement and conversion rates.

- Competitive advantage: Marketers who employ regression analysis are better equipped to adapt to changing market conditions, outperform competitors, and stay ahead of industry trends.

- Continuous improvement: Regression analysis is an iterative process. As new data becomes available, models can be updated and refined, ensuring that marketing strategies remain effective over time.

Strategic Applications

- Consumer behavior prediction: Regression analysis helps marketers predict consumer behavior. By analyzing historical data and considering various factors, such as past purchases, online behavior, and demographic information, companies can build models to anticipate customer preferences, buying patterns, and churn rates.

- Marketing campaign optimization: Businesses invest heavily in marketing campaigns. Regression analysis aids in optimizing these efforts by identifying which marketing channels, messages, or strategies have the greatest impact on key performance indicators (KPIs) like sales, click-through rates, or conversion rates.

- Pricing strategy: Pricing is a critical aspect of marketing. Regression analysis can reveal the relationship between pricing strategies and sales volume, helping companies determine the optimal price points for their products or services.

- Product development: In product development, regression analysis can be used to understand the relationship between product features and consumer satisfaction. Companies can then prioritize product enhancements based on customer preferences.

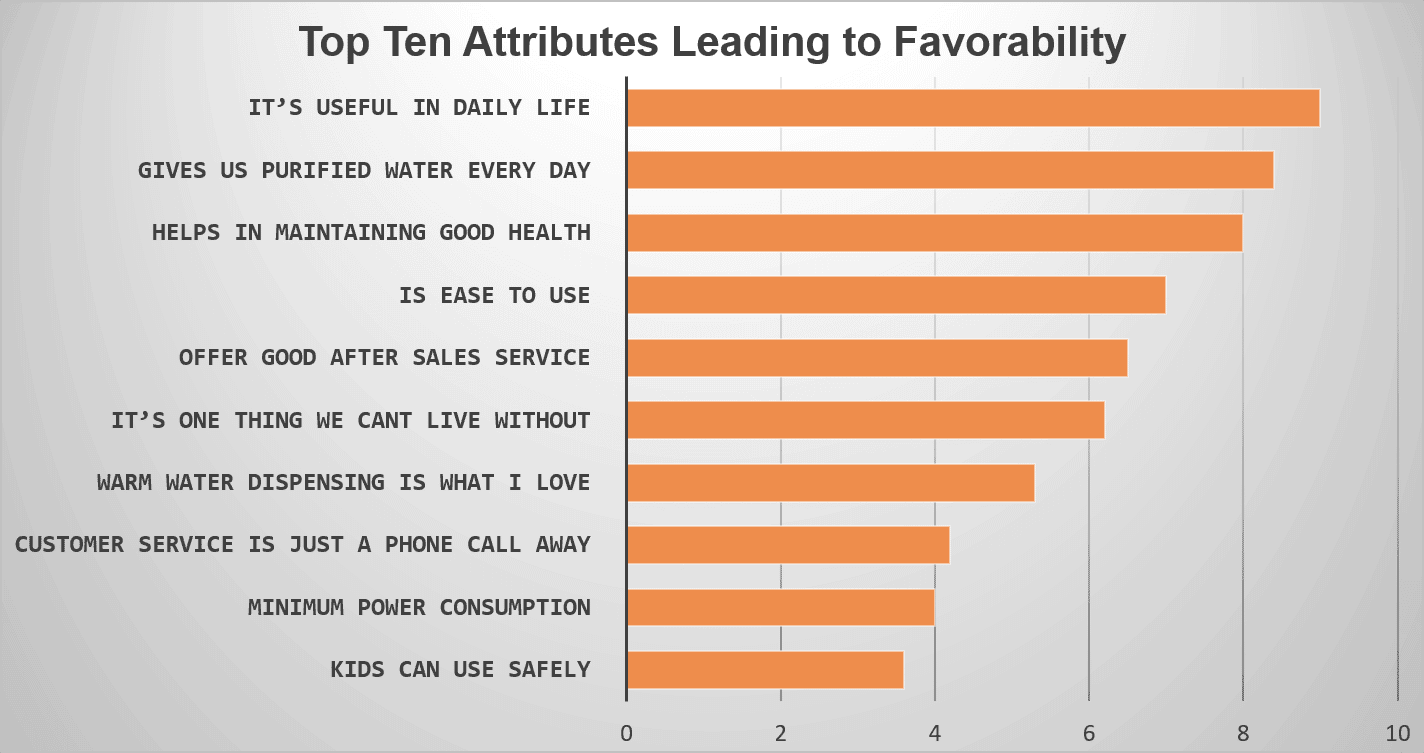

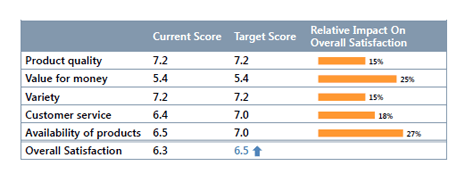

Case Study – Regression Analysis for Ranking Key Attributes

Let’s explore a specific example in a category known as Casual Dining Restaurants (CDR). In a survey, respondents are asked to rate several casual dining restaurants on a variety of attributes. For the purposes of this article, we will keep the number of demonstrated attributes to the top eight. The data for each restaurant is stacked into one regression. We are seeking to rank the attributes based on a regression analysis against an industry standard overall measurement: Net Promoter Score.

Table 1 shows the leading Casual Dining Restaurant chains in the United States to be used to ‘rank’ the key reasons that patrons visit this restaurant category, not specific to one restaurant brand.

Table 1 - List of Leading Casual Dining Restaurant Chains in the United States

In Figure 1 we see a graphic example of key drivers across the CDR category.

The category-wide drivers of CDR visits are not particularly surprising. Good food. Good value. Cleanliness. Staff energy. There is one attribute, however, that may be more important than restaurant executives would intuitively expect. Make sure your servers thank departing customers. Diners seek not just delicious cuisine at a reasonable price, but they also desire a sense of appreciation.

Case Study – Regression and Brand Response to Crisis

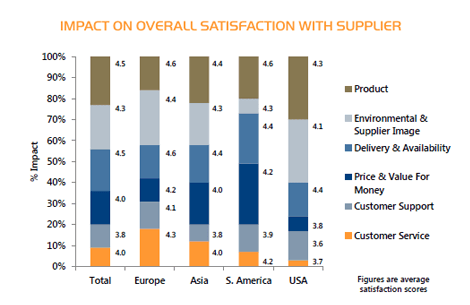

A major automobile company has a public relations disaster. In order to regain trust in their brand equity, the company commissions a series of regression analyses to gauge how buyers are viewing their brand image. However, what they really want to know is how American auto buyers view trust—the most valuable brand perception of this company’s automotive product.

The disaster is fresh (a nationwide recall of thousands of cars over safety issues regarding airbags), so our company would like a composite of which values go into “Is this a Company I Trust.” Thus, it surveyed decision makers, stakeholders, owners, and prospects. We then stack the data into one dataset and run a strategic regression. Once performed, the regression beta values are summed, and each is then reported as a percentage of influence on the dependent variable. What we see are the major components of “Trust.”
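The percentage-of-influence step described above can be sketched as a simple normalization. The attribute names and beta values below are hypothetical, not the study's results, and taking absolute values before summing is an assumption made here so that negative coefficients do not cancel positive ones.

```python
def percent_influence(betas):
    """Convert regression beta values into percentage shares of their total.

    Assumption: absolute values are used so that negative and positive
    betas do not offset each other in the total.
    """
    total = sum(abs(b) for b in betas.values())
    return {name: 100 * abs(b) / total for name, b in betas.items()}


# Hypothetical standardized betas from a regression on "A Company I Trust"
betas = {
    "Family safety": 0.40,
    "Reliability": 0.25,
    "Honest communication": 0.20,
    "Value for money": 0.15,
}

influence = percent_influence(betas)
for name, share in sorted(influence.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {share:.0f}%")
```

Reporting shares rather than raw betas makes the drivers easier to compare at a glance, at the cost of hiding the sign and absolute size of each effect.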

Figure 2 - Percentage Influence of "A Company I Trust"

Not surprisingly, family safety is the leading driver of Trust. However, we now have Shapley Values of the major components. These findings would normally be handed over to the public relations team to begin damage control. Within days the company began to run advertisements in major markets to reverse the negative narrative of the recall.

Case Study - Regression Analysis/Maximizing Product Lines

SparkleSquad Studios is a fictional startup hoping to find a niche among tween and teen girls to help reverse the tide of social media addiction. Though funded through venture capital investment, they found that, of their 40 potential product areas, they only have capacity to produce eight. In order to determine the top 8 hobby products in demand, they fielded a study.

Table 2 - List of Potential Product Area Development

SparkleSquad Studios then conducted a large study gathering data from thousands of web-based surveys conducted among girls aged 10 to 16 across the United States. The key construct of the study is simple—not more than 5 minutes—and concise to cater to respondents' shorter attention spans. Below are the key questions.

- How much money do you typically allocate to hobbies unrelated to social media in a given month?

- Please check off the hobbies that interest you from the list of 40 potential options below.

Question 1 serves as the dependent variable in the regression. Question 2 responses are coded into categorical variables, 1=Checked, 0=Not Checked. These are the independent variables.
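The coding scheme can be sketched as follows. The three hobby names stand in for the full list of 40 and, like the survey responses, are hypothetical. The resulting 0/1 columns and spend figures are what would then be passed to a multiple regression routine.

```python
# Hypothetical subset of the 40 hobby options
HOBBIES = ["Painting", "Skateboarding", "Baking"]


def encode(checked, options):
    """Code a respondent's checked boxes as 1 = Checked, 0 = Not Checked."""
    return [1 if option in checked else 0 for option in options]


# Hypothetical survey responses: monthly hobby spend (Question 1)
# and the set of hobbies checked (Question 2)
responses = [
    {"spend": 25.0, "checked": {"Painting", "Baking"}},
    {"spend": 40.0, "checked": {"Skateboarding"}},
    {"spend": 10.0, "checked": set()},
    {"spend": 55.0, "checked": {"Painting", "Skateboarding", "Baking"}},
]

# Independent variables: one 0/1 column per hobby
X = [encode(r["checked"], HOBBIES) for r in responses]
# Dependent variable: monthly spend
y = [r["spend"] for r in responses]

for row, spend in zip(X, y):
    print(row, spend)
```

With this coding, each hobby's regression coefficient estimates the difference in monthly spend between respondents who checked that hobby and those who did not, holding the other hobbies constant.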

Results are shown below in Table 3.

Table 3 - Top 10 Hobby Products for Production Determined Through Regression Analysis

Based on the resulting regression analysis, SparkleSquad will commence production of ten statistically significant products. The data-driven approach ensures these offerings target the strongest measured market demand.

Regression analysis gives businesses the ability to predict consumer behavior, optimize marketing efforts, and drive results through data-driven decision-making. By leveraging regression analysis, businesses can gain a competitive advantage and increase their efficiency and effectiveness. In an era where consumer preferences and market conditions are in constant flux, regression analysis remains an essential tool for marketers looking to stay ahead of the curve.

Michael Lieberman is the Founder and President of Multivariate Solutions, a statistical and market research consulting firm that works with major advertising, public relations, and political strategy firms. He can be reached at +1 646 257 3794, or [email protected].


Understanding regression analysis: overview and key uses

Last updated: 22 August 2024

Reviewed by: Miroslav Damyanov

Regression analysis is a fundamental statistical method that helps us predict and understand how different factors (aka independent variables) influence a specific outcome (aka dependent variable).

Imagine you're trying to predict the value of a house. Regression analysis can help you create a formula to estimate the house's value by looking at variables like the home's size and the neighborhood's average income. This method is crucial because it allows us to predict and analyze trends based on data.

While that example is straightforward, the technique can be applied to more complex situations, offering valuable insights into fields such as economics, healthcare, marketing, and more.

3 uses for regression analysis in business

Businesses can use regression analysis to improve nearly every aspect of their operations. When used correctly, it's a powerful tool for learning how adjusting variables can improve outcomes. Here are three applications:

1. Prediction and forecasting

Predicting future scenarios can give businesses significant advantages. No method can guarantee absolute certainty, but regression analysis offers a reliable framework for forecasting future trends based on past data. Companies can apply this method to anticipate future sales for financial planning purposes and predict inventory requirements for more efficient space and cost management. Similarly, an insurance company can employ regression analysis to predict the likelihood of claims for more accurate underwriting.

2. Identifying inefficiencies and opportunities

Regression analysis can help us understand how the relationships between different business processes affect outcomes. Its ability to model complex relationships means that regression analysis can accurately highlight variables that lead to inefficiencies, which intuition alone may not do. Regression analysis allows businesses to improve performance significantly through targeted interventions. For instance, a manufacturing plant experiencing production delays, machine downtime, or labor shortages can use regression analysis to determine the underlying causes of these issues.

3. Making data-driven decisions

Regression analysis can enhance decision-making for any situation that relies on dependent variables. For example, a company can analyze the impact of various price points on sales volume to find the best pricing strategy for its products. Understanding buying behavior factors can help segment customers into buyer personas for improved targeting and messaging.

- Types of regression models

There are several types of regression models, each suited to a particular purpose. Picking the right one is vital to getting the correct results.

Simple linear regression analysis is the simplest form of regression analysis. It examines the relationship between exactly one dependent variable and one independent variable, fitting a straight line to the data points on a graph.
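As a minimal sketch of what "fitting a straight line" means, the snippet below computes the least-squares slope and intercept in plain Python. The function name `fit_line` and the house-size figures are illustrative assumptions, not data from this article.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    # Slope = co-variation of x and y divided by the variation of x.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Hypothetical data: home size (square metres) and sale price (thousands).
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 250, 300, 350]

intercept, slope = fit_line(sizes, prices)
predicted = intercept + slope * 100  # estimated price of a 100 m2 home
```

With this made-up data the points lie exactly on a line, so the fit recovers it perfectly. Real data would scatter around the line.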

Multiple regression analysis examines how two or more independent variables affect a single dependent variable. It extends simple linear regression and requires a more complex algorithm.

Multivariate linear regression is suitable for multiple dependent variables. It allows the analysis of how independent variables influence multiple outcomes.

Logistic regression is relevant when the dependent variable is categorical, such as binary outcomes (e.g., true/false or yes/no). Logistic regression estimates the probability of a category based on the independent variables.
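To illustrate how logistic regression turns inputs into a probability, here is a rough sketch that fits a one-variable model by gradient descent. The data, the learning rate, and the step count are all assumptions chosen for illustration. In practice, a library such as scikit-learn or statsmodels would do this fitting.

```python
import math

def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.1, steps=5000):
    """Fit p(y=1 | x) = sigmoid(b0 + b1*x) by gradient descent on the log-loss."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(b0 + b1 * x) - y  # predicted probability minus label
            g0 += err
            g1 += err * x
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    return b0, b1

# Hypothetical data: weekly hours of product use, and whether the
# customer renewed (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
renewed = [0, 0, 0, 1, 0, 1, 1, 1]

b0, b1 = fit_logistic(hours, renewed)
p_low = sigmoid(b0 + b1 * 1.0)   # estimated renewal probability at 1 hour
p_high = sigmoid(b0 + b1 * 4.0)  # estimated renewal probability at 4 hours
```

The output is a probability rather than a category, so a threshold (often 0.5) is applied afterwards to turn it into a yes/no prediction.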

- 6 mistakes people make with regression analysis

Ignoring key variables is a common mistake when working with regression analysis. Here are a few more pitfalls to try and avoid:

1. Overfitting the model

If a model is too complex, it can become overly powerful and lead to a problem known as overfitting. This mistake is an especially significant problem when the independent variables don't impact the dependent data, though it can happen whenever the model over-adjusts to fit all the variables. In such cases, the model starts memorizing noise rather than meaningful data. When this happens, the model’s results will fit the training data perfectly but fail to generalize to new, unseen data, rendering the model ineffective for prediction or inference.

2. Underfitting the model

A less complex model is less likely to mistake noise for signal. However, if the model is too simplistic, it will face the opposite problem: underfitting. In this case, the model will fail to capture the underlying patterns in the data, meaning it won't perform well on either the training data or new, unseen data. This lack of complexity prevents the model from making accurate predictions or drawing meaningful inferences.

3. Neglecting model validation

Model validation is how you can be sure that a model isn't overfitting or underfitting. Imagine teaching a child to read. If you always read the same book to the child, they might memorize it and recite it perfectly, making it seem like they’ve learned to read. However, if you give them a new book, they might struggle and be unable to read it.

This scenario is similar to a model that performs well on its training data but fails with new data. Model validation involves testing the model with data it hasn’t seen before. If the model performs well on this new data, that indicates it has truly learned to generalize. On the other hand, if the model only performs well on the training data and poorly on new data, it has overfitted to the training data, much like the child who can only recite the memorized book.
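One simple form of validation is a holdout split: fit the model on part of the data and measure its error on the rest. The sketch below assumes a one-variable linear model and made-up data. The helper names, the 15/5 split, and the fixed seed are illustrative choices.

```python
import random

def fit_line(xs, ys):
    """Least-squares intercept and slope for one predictor."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def mean_squared_error(xs, ys, intercept, slope):
    """Average squared gap between actual and predicted values."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data: y is roughly 3 + 2x plus a small repeating disturbance.
noise = [0.4, -0.5, 0.1, -0.2, 0.3]
xs = list(range(20))
ys = [3 + 2 * x + noise[i % 5] for i, x in enumerate(xs)]

# Hold out 5 of the 20 points; the fixed seed keeps the split reproducible.
indices = list(range(20))
random.Random(0).shuffle(indices)
test_idx, train_idx = indices[:5], indices[5:]

train_x = [xs[i] for i in train_idx]
train_y = [ys[i] for i in train_idx]
test_x = [xs[i] for i in test_idx]
test_y = [ys[i] for i in test_idx]

intercept, slope = fit_line(train_x, train_y)  # fit on training data only
mse_train = mean_squared_error(train_x, train_y, intercept, slope)
mse_test = mean_squared_error(test_x, test_y, intercept, slope)  # unseen data
```

If `mse_test` were far larger than `mse_train`, that gap would be a warning sign of overfitting. If both were large, underfitting would be the more likely explanation.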

4. Multicollinearity

Regression analysis works best when the independent variables are genuinely independent. However, sometimes, two or more variables are highly correlated. This multicollinearity can make it hard for the model to accurately determine each variable's impact.

If a model gives poor results, checking for correlated variables may reveal the issue. You can fix it by removing one or more correlated variables or using a principal component analysis (PCA) technique, which transforms the correlated variables into a set of uncorrelated components.
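A quick way to check for correlated predictors is to compute their correlation and, from it, a variance inflation factor (VIF). The sketch below covers only the two-predictor case, where the VIF reduces to 1 / (1 - r²). The variable names and figures are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical predictors: ad spend and ad impressions move almost in lockstep.
ad_spend = [10, 12, 15, 18, 20, 25]
impressions = [101, 119, 152, 179, 203, 248]

r = pearson_r(ad_spend, impressions)
vif = 1 / (1 - r ** 2)  # valid shortcut only when there are exactly two predictors
```

A common rule of thumb treats a VIF above roughly 5 to 10 as a sign of problematic multicollinearity, though the exact cutoff is a judgment call.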

5. Misinterpreting coefficients

Errors are not always due to the model itself; human error is common. These mistakes often involve misinterpreting the results. For example, someone might misunderstand the units of measure and draw incorrect conclusions. Another frequent issue in scientific analysis is confusing correlation and causation. Regression analysis can only provide insights into correlation, not causation.

6. Poor data quality

The adage “garbage in, garbage out” strongly applies to regression analysis. When low-quality data is input into a model, it analyzes noise rather than meaningful patterns. Poor data quality can manifest as missing values, unrepresentative data, outliers, and measurement errors. Additionally, the model may have excluded essential variables significantly impacting the results. All these issues can distort the relationships between variables and lead to misleading results.

- What are the assumptions that must hold for regression models?

To correctly interpret the output of a regression model, the following key assumptions about the underlying data process must hold:

The relationship between variables is linear.

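There must be homoscedasticity, meaning the variance of the error term must remain constant across all values of the independent variables.

The explanatory variables are not highly correlated with one another (no strong multicollinearity).

The error terms (residuals) are normally distributed. The variables themselves do not need to be.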

- Real-life examples of regression analysis

Let's turn our attention to how a few industries use regression analysis to improve their outcomes:

Regression analysis has many applications in healthcare, but two of the most common are improving patient outcomes and optimizing resources.

Hospitals need to use resources effectively to ensure the best patient outcomes. Regression models can help forecast patient admissions, equipment and supply usage, and more. These models allow hospitals to plan and maximize their resources.

Predicting stock prices, economic trends, and financial risks benefits the finance industry. Regression analysis can help finance professionals make informed decisions about these topics.

For example, analysts often use regression analysis to assess how changes to GDP, interest rates, and unemployment rates impact stock prices. Armed with this information, they can make more informed portfolio decisions.

The banking industry also uses regression analysis. When a loan underwriter determines whether to grant a loan, regression analysis allows them to calculate the probability that a potential borrower will repay the loan.

Imagine how much more effective a company's marketing efforts could be if they could predict customer behavior. Regression analysis allows them to do so with a degree of accuracy. For example, marketers can analyze how price, advertising spend, and product features (combined) influence sales. Once they've identified key sales drivers, they can adjust their strategy to maximize revenue. They may approach this analysis in stages.

For instance, if they determine that ad spend is the biggest driver, they can apply regression analysis to data specific to advertising efforts. Doing so allows them to improve the ROI of ads. The opposite may also be true. If ad spending has little to no impact on sales, something is wrong that regression analysis might help identify.

- Regression analysis tools and software

Regression analysis by hand isn't practical. The process requires large numbers and complex calculations. Computers make even the most complex regression analysis possible. Even the most complicated AI algorithms can be considered fancy regression calculations. Many tools exist to help users create these regressions.

MATLAB is another programming language used for regression. While MATLAB is a commercial tool, the open-source project Octave aims to implement much of its functionality. Both languages are designed for complex mathematical operations, including regression analysis. MATLAB's tools for computation and visualization have made it very popular in academia, engineering, and industry for calculating regressions and displaying the results. MATLAB also integrates with add-on toolboxes, so developers can extend its functionality for application-specific solutions.

Python is a more general programming language than the previous examples, but many libraries are available that extend its functionality. For regression analysis, packages like Scikit-Learn and StatsModels provide the computational tools necessary for the job, while packages like Pandas and Matplotlib can handle large amounts of data and display the results. Python is a simple-to-learn, easy-to-read programming language, which can give it a leg up over the more dedicated math and statistics languages.

SAS (Statistical Analysis System) is a commercial software suite for advanced analytics, multivariate analysis, business intelligence, and data management. It includes a procedure called PROC REG that allows users to efficiently perform regression analysis on their data. The software is well-known for its data-handling capabilities, extensive documentation, and technical support. These factors make it a common choice for large-scale enterprise use and industries requiring rigorous statistical analysis.

Stata is another statistical software package. It provides an integrated data analysis, management, and graphics environment and includes tools for performing a range of regression analysis tasks. Its popularity is due to its ease of use, reproducibility, and ability to handle complex datasets intuitively. The extensive documentation helps beginners get started quickly. Stata is widely used in academic research, economics, sociology, and political science.

Most people know Excel, but you might not know that Microsoft's spreadsheet software has an add-in called Analysis ToolPak that can perform basic linear regression and visualize the results. Excel is not an excellent choice for more complex regression or very large datasets. But for those with basic needs who only want to analyze smaller datasets quickly, it's a convenient option already in many tech stacks.

SPSS (Statistical Package for the Social Sciences) is a versatile statistical analysis software widely used in social science, business, and health. It offers tools for various analyses, including regression, making it accessible to users through its user-friendly interface. SPSS enables users to manage and visualize data, perform complex analyses, and generate reports without coding. Its extensive documentation and support make it popular in academia and industry, allowing for efficient handling of large datasets and reliable results.

What is a regression analysis in simple terms?

Regression analysis is a statistical method used to estimate and quantify the relationship between a dependent variable and one or more independent variables. It helps determine the strength and direction of these relationships, allowing predictions about the dependent variable based on the independent variables and providing insights into how each independent variable impacts the dependent variable.

What are the main types of variables used in regression analysis?

Dependent variables: typically continuous (e.g., house price) or binary (e.g., yes/no outcomes).

Independent variables: can be continuous, categorical, binary, or ordinal.

What does a regression analysis tell you?

Regression analysis identifies the relationships between a dependent variable and one or more independent variables. It quantifies the strength and direction of these relationships, allowing you to predict the dependent variable based on the independent variables and understand the impact of each independent variable on the dependent variable.


What Is Regression Analysis in Business Analytics?

- 14 Dec 2021

Countless factors impact every facet of business. How can you consider those factors and know their true impact?

Imagine you seek to understand the factors that influence people’s decision to buy your company’s product. They range from customers’ physical locations to satisfaction levels among sales representatives to your competitors' Black Friday sales.

Understanding the relationships between each factor and product sales can enable you to pinpoint areas for improvement, helping you drive more sales.

To learn how each factor influences sales, you need to use a statistical analysis method called regression analysis.

If you aren’t a business or data analyst, you may not run regressions yourself, but knowing how analysis works can provide important insight into which factors impact product sales and, thus, which are worth improving.


Foundational Concepts for Regression Analysis

Before diving into regression analysis, you need to build foundational knowledge of statistical concepts and relationships.

Independent and Dependent Variables

Start with the basics. What relationship are you aiming to explore? Try formatting your answer like this: “I want to understand the impact of [the independent variable] on [the dependent variable].”

The independent variable is the factor that could impact the dependent variable. For example, “I want to understand the impact of employee satisfaction on product sales.”

In this case, employee satisfaction is the independent variable, and product sales is the dependent variable. Identifying the dependent and independent variables is the first step toward regression analysis.

Correlation vs. Causation

One of the cardinal rules of statistically exploring relationships is to never assume correlation implies causation. In other words, just because two variables move in the same direction doesn’t mean one caused the other to occur.

If two or more variables are correlated, their directional movements are related. If two variables are positively correlated, it means that as one goes up or down, so does the other. Alternatively, if two variables are negatively correlated, one goes up while the other goes down.

A correlation’s strength can be quantified by calculating the correlation coefficient, sometimes represented by r. The correlation coefficient falls between negative one and positive one.

r = -1 indicates a perfect negative correlation.

r = 1 indicates a perfect positive correlation.

r = 0 indicates no correlation.
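For reference, the most widely used version of this coefficient is Pearson's r. The article does not give a formula, so the standard definition is shown here on the assumption that this is the coefficient meant. It applies to n paired observations (x_i, y_i) with sample means x̄ and ȳ:

$$
r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}
$$

The numerator measures how the two variables move together, and the denominator rescales that quantity so the result always lies between -1 and 1.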

Causation means that one variable caused the other to occur. Proving a causal relationship between variables requires a true experiment with a control group (which doesn’t receive the independent variable) and an experimental group (which receives the independent variable).

While regression analysis provides insights into relationships between variables, it doesn’t prove causation. It can be tempting to assume that one variable caused the other—especially if you want it to be true—which is why you need to keep this in mind any time you run regressions or analyze relationships between variables.

With the basics under your belt, here’s a deeper explanation of regression analysis so you can leverage it to drive strategic planning and decision-making.


What Is Regression Analysis?

Regression analysis is the statistical method used to determine the structure of a relationship between two variables (single linear regression) or three or more variables (multiple regression).

According to the Harvard Business School Online course Business Analytics, regression is used for two primary purposes:

- To study the magnitude and structure of the relationship between variables

- To forecast a variable based on its relationship with another variable

Both of these insights can inform strategic business decisions.

“Regression allows us to gain insights into the structure of that relationship and provides measures of how well the data fit that relationship,” says HBS Professor Jan Hammond, who teaches Business Analytics, one of three courses that comprise the Credential of Readiness (CORe) program. “Such insights can prove extremely valuable for analyzing historical trends and developing forecasts.”

One way to think of regression is by visualizing a scatter plot of your data with the independent variable on the X-axis and the dependent variable on the Y-axis. The regression line is the line that best fits the scatter plot data. The regression equation represents the line’s slope and the relationship between the two variables, along with an estimation of error.

Physically creating this scatter plot can be a natural starting point for parsing out the relationships between variables.

Types of Regression Analysis

There are two types of regression analysis: single variable linear regression and multiple regression.

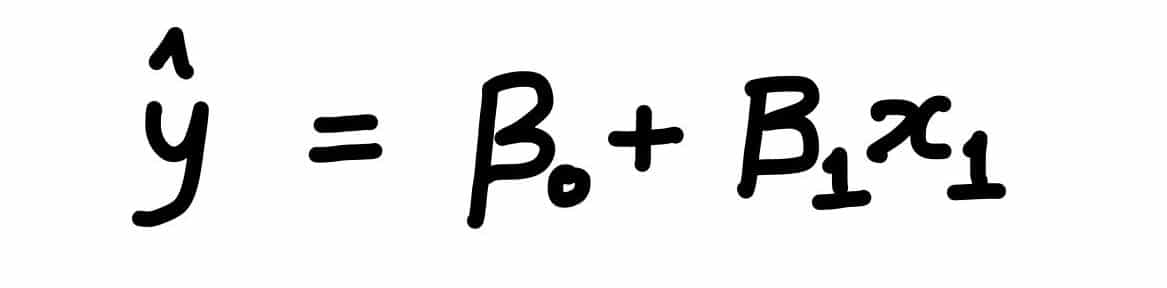

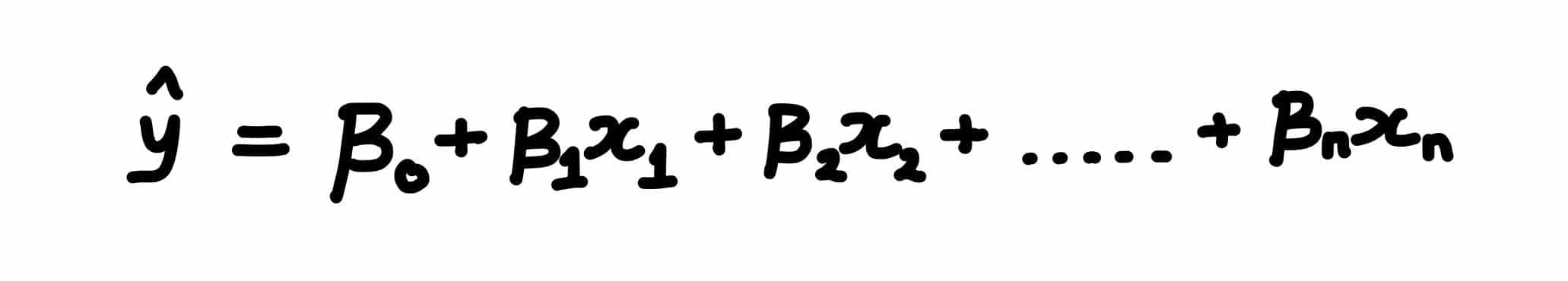

Single variable linear regression is used to determine the relationship between two variables: the independent and dependent. The equation for a single variable linear regression looks like this:

$$\hat{y} = \alpha + \beta x, \qquad Y = \hat{y} + \varepsilon = \alpha + \beta x + \varepsilon$$

In the equation:

- ŷ is the expected value of Y (the dependent variable) for a given value of X (the independent variable).

- x is the independent variable.

- α is the Y-intercept, the point at which the regression line intersects with the vertical axis.

- β is the slope of the regression line, or the average change in the dependent variable as the independent variable increases by one.

- ε is the error term, equal to Y – ŷ, or the difference between the actual value of the dependent variable and its expected value.
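To make these terms concrete, the short sketch below computes the expected value ŷ and the error term ε for a few observations. The coefficient values and the data are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
def predict(alpha, beta, x):
    """Expected value y-hat for a given x: intercept plus slope times x."""
    return alpha + beta * x

# Hypothetical coefficients relating employee satisfaction (x, on a 1-10 scale)
# to product sales (y, in thousands).
alpha, beta = 20.0, 5.0

# Hypothetical observations as (x, actual y) pairs.
observations = [(6, 52.0), (7, 54.0), (8, 61.0)]

# Error term for each observation: actual value minus expected value.
residuals = [y - predict(alpha, beta, x) for x, y in observations]
```

A positive residual means the observed value was above the regression line, and a negative one means it fell below.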

Multiple regression, on the other hand, is used to determine the relationship between three or more variables: the dependent variable and at least two independent variables. The multiple regression equation looks complex but is similar to the single variable linear regression equation:

$$\hat{y} = \alpha + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k, \qquad Y = \hat{y} + \varepsilon$$

Each component of this equation represents the same thing as in the previous equation, with the addition of the subscript k, which is the total number of independent variables being examined. For each independent variable you include in the regression, multiply the slope of the regression line by the value of the independent variable, and add it to the rest of the equation.
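As a rough sketch of how the intercept and slopes can be estimated with several independent variables, the code below solves the least-squares normal equations in plain Python. The article does not specify an estimation method, so ordinary least squares is an assumption here. The function name `fit_multiple` and the data are also illustrative.

```python
def fit_multiple(rows, ys):
    """Least-squares fit of y = alpha + b1*x1 + ... + bk*xk.

    Returns [alpha, b1, ..., bk] by solving the normal equations
    (X^T X) b = X^T y with Gauss-Jordan elimination.
    """
    X = [[1.0] + list(row) for row in rows]  # leading 1 gives the intercept
    p = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(p)]
        + [sum(x[i] * y for x, y in zip(X, ys))]
        for i in range(p)
    ]
    for col in range(p):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]

# Hypothetical data with k = 2 independent variables, generated from
# y = 10 + 2*x1 - 3*x2 so the expected answer is known in advance.
rows = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)]
ys = [6, 11, 4, 9, 5]

coefficients = fit_multiple(rows, ys)  # approximately [10, 2, -3]
```

Statistical packages use more numerically robust methods than this, but the idea is the same: choose the coefficients that minimize the sum of squared errors.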

How to Run Regressions

You can use a host of statistical programs—such as Microsoft Excel, SPSS, and STATA—to run both single variable linear and multiple regressions. If you’re interested in hands-on practice with this skill, Business Analytics teaches learners how to create scatter plots and run regressions in Microsoft Excel, as well as make sense of the output and use it to drive business decisions.

Calculating Confidence and Accounting for Error

It’s important to note: This overview of regression analysis is introductory and doesn’t delve into calculations of confidence level, significance, variance, and error. When working in a statistical program, these calculations may be provided or require that you implement a function. When conducting regression analysis, these metrics are important for gauging how significant your results are and how much importance to place on them.

Why Use Regression Analysis?

Once you’ve generated a regression equation for a set of variables, you effectively have a roadmap for the relationship between your independent and dependent variables. If you input a specific X value into the equation, you can see the expected Y value.

This can be critical for predicting the outcome of potential changes, allowing you to ask, “What would happen if this factor changed by a specific amount?”

Returning to the earlier example, running a regression analysis could allow you to find the equation representing the relationship between employee satisfaction and product sales. You could input a higher level of employee satisfaction and see how sales might change accordingly. This information could lead to improved working conditions for employees, backed by data that shows the tie between high employee satisfaction and sales.

Whether predicting future outcomes, determining areas for improvement, or identifying relationships between seemingly unconnected variables, understanding regression analysis can enable you to craft data-driven strategies and determine the best course of action with all factors in mind.



What is Regression Analysis & How Is It Used?

by George Kuhn

Posted at: 3/8/2023 1:30 PM

Regression analysis helps organizations make sense of priority areas and what factors have the most impact and influence on their customer relationships.

It allows researchers and brands to read between the lines of the survey data.

This article will help you understand the definition of regression analysis, how it is commonly used, and the benefits of using regression research.


Regression Analysis: Definition

Regression analysis is a common statistical method that helps organizations understand the relationship between independent variables and dependent variables.

- Dependent variable: The main factor you want to measure or understand.

- Independent variables: The secondary factors you believe to have an influence on your dependent variable.

More specifically, regression analysis tells you which factors are most important, which to disregard, and how the factors affect one another.

In a simple example, say you want to find out how pricing, customer service, and product quality (independent variables) impact customer retention (dependent variable).

Regression analysis of the survey data can then show whether increasing prices will have any impact on repeat customer purchases.

Importance of Regression Analysis

There are several benefits of regression analysis, most of which center around using it to achieve data-driven decision-making.

The advantages of using regression analysis in research include:

1. Great tool for forecasting

While there is no such thing as a magic crystal ball, regression analysis is a strong approach to predictive analytics and forecasting.

For instance, our market research company worked with a manufacturing company to understand the impact that key index scores from the markets had on sales projections.

Regression analysis was used to understand how revenue would be impacted by independent variables such as:

- The ups and downs of oil prices

- The consumer price index (CPI)

- The gross domestic product (GDP)

We used reports and predictive analytic forecasts on these key independent variable statistics to understand how their revenue might be impacted in future quarters.

Keep in mind, though, that the further into the future you predict, the less reliable the forecast becomes and the wider its margin of error.

2. Focus attention on priority areas of improvement

Regression statistical analysis helps businesses and organizations prioritize efforts to improve customer satisfaction metrics such as net promoter score, customer effort score, and customer loyalty.

Using regression analysis in quantitative research provides the opportunity to take corrective actions on the items that will most positively improve overall satisfaction.

When to Use Regression Analysis

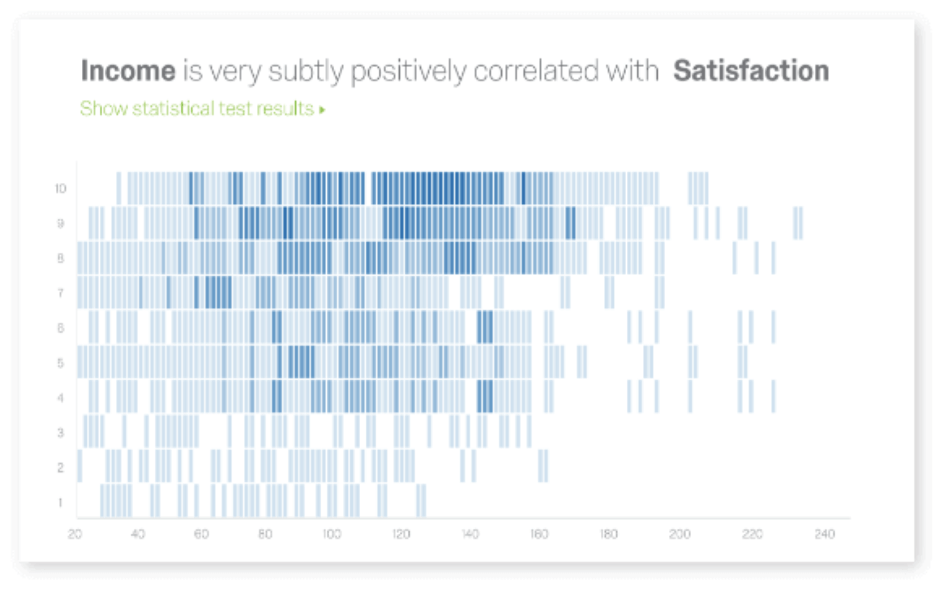

A common use of regression analysis is understanding how the likelihood to recommend a product or service (dependent variable) is impacted by changes in wait time, price, and quantity purchased (presumably independent variables).

A popular way to measure this is with net promoter score (NPS) as it is one of the most commonly used metrics in market research.

The calculation or score is based on a simple 'likelihood to recommend' question.

It is a 0 to 10 scale where “10” indicates very likely to recommend and “0” indicates not at all likely to recommend.

It groups your customers into 3 buckets:

- Promoters or those who rate your brand a 9 or 10

- Passives or those who rate your brand a 7 or 8

- Detractors or those who rate your brand a 0 to 6

The NPS is calculated as the difference between the percentage of promoters and the percentage of detractors (e.g., 75% promoters - 15% detractors = +60 NPS).
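The calculation can be sketched in a few lines of Python. The function name and the sample ratings are hypothetical. The ratings are chosen so that 75% are promoters and 15% are detractors, matching the example above.

```python
def net_promoter_score(ratings):
    """NPS = percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    # Passives (7-8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)

# Hypothetical survey of 20 customers:
# 15 promoters, 2 passives, and 3 detractors.
ratings = [10] * 10 + [9] * 5 + [8] * 2 + [5] * 3

nps = net_promoter_score(ratings)
```

Because the score is a difference of percentages, it can range from -100 (all detractors) to +100 (all promoters).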

The score helps your business understand how many raving fans your brand has compared with your key competitors and industry benchmarks.

While our online survey company always recommends using an open-ended question after NPS to gather context to help understand the driving forces behind the score, sometimes it does not tell the whole story.

For instance, if you were to ask a customer why they rated your restaurant a “10” on the likelihood to recommend scale, they may say something like “good prices” or “good food” in an open-ended comment.

But is that what is really driving your high NPS rating?

Customers are often not experts at expressing their emotions and feelings in a survey.

This is where regression analysis can help.

Regression Analysis Example in Business

Keeping with the restaurant survey from above, let’s say in the same survey you ask a series of customer satisfaction questions related to respondents’ dining experience at your restaurant.

You believe the price and food are good at your restaurant but you think there might be some underlying drivers really pushing your high NPS.

In this example, likelihood to recommend, or NPS, is your dependent variable A.

Your more specific follow-up satisfaction questions are independent variables B, C, D, E, F, G.

You ask on a scale of 1 to 5 where “5” indicates very satisfied and “1” indicates not at all satisfied, how satisfied are you with the following?

- Cleanliness of the restaurant (INDEPENDENT VARIABLE B)

- Friendliness of the staff (INDEPENDENT VARIABLE C)

- Price of the food (INDEPENDENT VARIABLE D)

- Taste of the food (INDEPENDENT VARIABLE E)

- Speed of your order (INDEPENDENT VARIABLE F)

- Check-out process (INDEPENDENT VARIABLE G)

Through your regression analysis, you find out that INDEPENDENT VARIABLE C (friendliness of the staff) has the most significant effect on NPS.

This means how the customer rates the friendliness of the staff members will have the largest overall impact on how likely they would be to recommend your restaurant.

This is much different than what customers said in the open-ended comment about price and food.

However, as the regression analysis shows, staff friendliness is essential.

This is likely driven by subconscious undertones of the customer experience and by customers not recognizing what actually shapes their overall experience.

If this facet of your business can be improved so all customers rate your staff a “5” on satisfaction, it is significantly more likely your NPS score will push higher than +60.
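As a simplified stand-in for the driver analysis described above, the sketch below ranks satisfaction questions by how strongly each one correlates with likelihood to recommend. This is a one-variable-at-a-time screen, not the multiple regression the example refers to, and the survey responses are invented for illustration.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical responses from six customers.
recommend = [10, 9, 6, 8, 4, 9]          # likelihood to recommend, 0-10
drivers = {                               # satisfaction ratings, 1-5
    "friendliness": [5, 5, 3, 4, 2, 5],
    "price": [4, 3, 4, 4, 3, 3],
    "taste": [5, 4, 4, 4, 4, 5],
}

# Rank drivers from strongest to weakest association with recommending.
ranked = sorted(
    ((name, pearson_r(scores, recommend)) for name, scores in drivers.items()),
    key=lambda item: abs(item[1]),
    reverse=True,
)
top_driver = ranked[0][0]
```

A full regression would estimate all drivers together and account for overlap between them, so this ranking is only a first look.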

Contact Our Market Research Company

Regression analysis is another tool market research firms use on a daily basis with their clients to help brands understand survey data from customers.

The benefit of using a third-party market research firm is that you can leverage their expertise to tell you the “so what” of your customer survey data.

Drive Research is a market research company in Syracuse, NY.


George Kuhn

George is the Owner & President of Drive Research. He has consulted for hundreds of regional, national, and global organizations over the past 15 years. He is a CX certified VoC professional with a focus on innovation and new product management.

A Refresher on Regression Analysis

Understanding one of the most important types of data analysis.

You probably know by now that whenever possible you should be making data-driven decisions at work. But do you know how to parse through all the data available to you? The good news is that you probably don’t need to do the number crunching yourself (hallelujah!) but you do need to correctly understand and interpret the analysis created by your colleagues. One of the most important types of data analysis is called regression analysis.

- Amy Gallo is a contributing editor at Harvard Business Review, cohost of the Women at Work podcast, and the author of two books: Getting Along: How to Work with Anyone (Even Difficult People) and the HBR Guide to Dealing with Conflict. She writes and speaks about workplace dynamics.

Regression Analysis – Methods, Types and Examples

Regression Analysis

Regression analysis is a set of statistical processes for estimating the relationships among variables. It includes many techniques for modeling and analyzing several variables when the focus is on the relationship between a dependent variable and one or more independent variables (or ‘predictors’).

Regression Analysis Methodology

Here is a general methodology for performing regression analysis:

- Define the research question: Clearly state the research question or hypothesis you want to investigate. Identify the dependent variable (also called the response variable or outcome variable) and the independent variables (also called predictor variables or explanatory variables) that you believe are related to the dependent variable.

- Collect data: Gather the data for the dependent variable and independent variables. Ensure that the data is relevant, accurate, and representative of the population or phenomenon you are studying.

- Explore the data: Perform exploratory data analysis to understand the characteristics of the data, identify any missing values or outliers, and assess the relationships between variables through scatter plots, histograms, or summary statistics.

- Choose the regression model: Select an appropriate regression model based on the nature of the variables and the research question. Common regression models include linear regression, multiple regression, logistic regression, polynomial regression, and time series regression, among others.

- Assess assumptions: Check the assumptions of the regression model. Some common assumptions include linearity (the relationship between variables is linear), independence of errors, homoscedasticity (constant variance of errors), and normality of errors. Violation of these assumptions may require additional steps or alternative models.

- Estimate the model: Use a suitable method to estimate the parameters of the regression model. The most common method is ordinary least squares (OLS), which minimizes the sum of squared differences between the observed and predicted values of the dependent variable.

- Interpret the results: Analyze the estimated coefficients, p-values, confidence intervals, and goodness-of-fit measures (e.g., R-squared) to interpret the results. Determine the significance and direction of the relationships between the independent variables and the dependent variable.

- Evaluate model performance: Assess the overall performance of the regression model using appropriate measures, such as R-squared, adjusted R-squared, and root mean squared error (RMSE). These measures indicate how well the model fits the data and how much of the variation in the dependent variable is explained by the independent variables.

- Test assumptions and diagnose problems: Check the residuals (the differences between observed and predicted values) for any patterns or deviations from assumptions. Conduct diagnostic tests, such as examining residual plots, testing for multicollinearity among independent variables, and assessing heteroscedasticity or autocorrelation, if applicable.

- Make predictions and draw conclusions: Once you have a satisfactory model, use it to make predictions on new or unseen data. Draw conclusions based on the results of the analysis, considering the limitations and potential implications of the findings.
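The estimation and evaluation steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a full workflow: the data values are made up, the helper names `fit_simple_ols` and `evaluate` are hypothetical, and only one predictor is used so the OLS solution can be written in closed form.

```python
# Made-up illustrative data: one predictor x and one outcome y.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]


def fit_simple_ols(x, y):
    """Return (intercept, slope) that minimize the sum of squared residuals."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def evaluate(x, y, intercept, slope):
    """Return residuals, R-squared, and RMSE for a fitted line."""
    predictions = [intercept + slope * xi for xi in x]
    residuals = [yi - pi for yi, pi in zip(y, predictions)]
    ss_res = sum(r ** 2 for r in residuals)          # unexplained variation
    mean_y = sum(y) / len(y)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)     # total variation
    r_squared = 1 - ss_res / ss_tot
    rmse = (ss_res / len(y)) ** 0.5
    return residuals, r_squared, rmse


intercept, slope = fit_simple_ols(x, y)
residuals, r_squared, rmse = evaluate(x, y, intercept, slope)

print(f"intercept={intercept:.3f}, slope={slope:.3f}")
print(f"R-squared={r_squared:.4f}, RMSE={rmse:.4f}")

# Predict for a new, unseen value of x.
new_x = 6.0
print(f"prediction at x={new_x}: {intercept + slope * new_x:.3f}")
```

In practice the residuals returned by `evaluate` would also be plotted against the predictions to check for patterns, as the diagnostic step describes.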

Types of Regression Analysis

The main types of regression analysis are as follows:

Linear Regression

Linear regression is the most basic and widely used form of regression analysis. It models the linear relationship between a dependent variable and one or more independent variables. The goal is to find the best-fitting line that minimizes the sum of squared differences between observed and predicted values.

Multiple Regression

Multiple regression extends linear regression by incorporating two or more independent variables to predict the dependent variable. It allows for examining the simultaneous effects of multiple predictors on the outcome variable.

Polynomial Regression

Polynomial regression models non-linear relationships between variables by adding polynomial terms (e.g., squared or cubic terms) to the regression equation. It can capture curved or nonlinear patterns in the data.

Logistic Regression

Logistic regression is used when the dependent variable is binary or categorical. It models the probability of the occurrence of a certain event or outcome based on the independent variables. Logistic regression estimates the coefficients using the logistic function, which transforms the linear combination of predictors into a probability.

Ridge Regression and Lasso Regression

Ridge regression and Lasso regression are techniques used for addressing multicollinearity (high correlation between independent variables) and variable selection. Both methods add a penalty term to the regression objective that shrinks the coefficients. Ridge regression uses L2 regularization, which shrinks coefficients toward zero without setting them exactly to zero. Lasso regression uses L1 regularization, which can set the coefficients of less important variables exactly to zero and so performs variable selection.
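The difference between the two penalties is easiest to see with a single predictor, where both estimates have a closed form. The sketch below assumes centered data and no intercept, uses made-up numbers, and the function names (`ridge_coef`, `lasso_coef`, `soft_threshold`) are illustrative rather than taken from any library.

```python
def soft_threshold(z, t):
    """Move z toward zero by t; return exactly 0.0 when |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def ridge_coef(x, y, lam):
    """Minimize sum((y - b*x)^2) + lam * b^2 (L2 penalty)."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return sxy / (sxx + lam)


def lasso_coef(x, y, lam):
    """Minimize sum((y - b*x)^2) + lam * |b| (L1 penalty)."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return soft_threshold(sxy, lam / 2) / sxx


# Made-up, centered data: one outcome strongly related to x, one barely related.
x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y_strong = [-4.1, -1.9, 0.0, 2.1, 3.9]
y_weak = [0.3, -0.2, -0.1, -0.2, 0.2]
lam = 2.0  # penalty strength, chosen arbitrarily for illustration

ridge_strong = ridge_coef(x, y_strong, lam)
lasso_strong = lasso_coef(x, y_strong, lam)
ridge_weak = ridge_coef(x, y_weak, lam)
lasso_weak = lasso_coef(x, y_weak, lam)

print("strong predictor:", ridge_strong, lasso_strong)
print("weak predictor:  ", ridge_weak, lasso_weak)
```

With these numbers both methods shrink the strong coefficient below its unpenalized value of about 2.0, while only Lasso returns exactly zero for the weak one.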

Time Series Regression

Time series regression analyzes the relationship between a dependent variable and independent variables when the data is collected over time. It accounts for autocorrelation and trends in the data and is used in forecasting and studying temporal relationships.

Nonlinear Regression

Nonlinear regression models are used when the relationship between the dependent variable and independent variables is not linear. These models can take various functional forms and require estimation techniques different from those used in linear regression.

Poisson Regression

Poisson regression is employed when the dependent variable represents count data. It models the relationship between the independent variables and the expected count, assuming a Poisson distribution for the dependent variable.

Generalized Linear Models (GLM)

GLMs are a flexible class of regression models that extend the linear regression framework to handle different types of dependent variables, including binary, count, and continuous variables. GLMs incorporate various probability distributions and link functions.

Regression Analysis Formulas

Regression analysis involves estimating the parameters of a regression model to describe the relationship between the dependent variable (Y) and one or more independent variables (X). Here are the basic formulas for linear regression, multiple regression, and logistic regression:

Linear Regression:

Simple Linear Regression Model: Y = β0 + β1X + ε

Multiple Linear Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

In both formulas:

- Y represents the dependent variable (response variable).

- X represents the independent variable(s) (predictor variable(s)).

- β0, β1, β2, …, βn are the regression coefficients or parameters that need to be estimated.

- ε represents the error term, the part of Y not explained by the predictors. Its estimate from fitted data is the residual (the difference between the observed and predicted values).

Multiple Regression:

Multiple regression extends the concept of simple linear regression by including multiple independent variables.

Multiple Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

The formulas are similar to those in linear regression, with the addition of more independent variables.
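As a rough sketch of how the coefficients β0, β1, …, βn can be estimated, the code below solves the OLS normal equations (XᵀX)β = XᵀY with plain Gaussian elimination. The data are made up and noise-free (generated from Y = 1 + 2·X1 + 3·X2), so the fit recovers those coefficients up to rounding. The function names are hypothetical, and real analyses would normally use a statistics library instead.

```python
def solve_linear_system(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_multiple_ols(rows, y):
    """rows: one list of predictor values per observation. Returns [b0, b1, ..., bn]."""
    design = [[1.0] + list(r) for r in rows]  # leading 1.0 gives the intercept b0
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Made-up observations of (X1, X2) and Y = 1 + 2*X1 + 3*X2 with no noise.
rows = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 6.0]]
y = [9.0, 8.0, 19.0, 18.0, 29.0]

coefficients = fit_multiple_ols(rows, y)
print([round(b, 6) for b in coefficients])
```

This approach breaks down when predictors are perfectly collinear, because XᵀX then has no unique solution.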

Logistic Regression:

Logistic regression is used when the dependent variable is binary or categorical. The logistic regression model applies a logistic or sigmoid function to the linear combination of the independent variables.

Logistic Regression Model: p = 1 / (1 + e^-(β0 + β1X1 + β2X2 + … + βnXn))

In the formula:

- p represents the probability of the event occurring (e.g., the probability of success or belonging to a certain category).

- X1, X2, …, Xn represent the independent variables.

- e is the base of the natural logarithm.

The logistic function ensures that the predicted probabilities lie between 0 and 1, allowing for binary classification.
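The formula can be evaluated directly once coefficients are known. The sketch below assumes the coefficients have already been estimated; the values shown are made up for illustration, and `predict_probability` is a hypothetical helper name. The 0.5 cutoff used for classification is a common convention, not something fixed by the model.

```python
import math


def predict_probability(coefficients, features):
    """Apply p = 1 / (1 + e^-(b0 + b1*x1 + ... + bn*xn))."""
    b0, *slopes = coefficients
    linear = b0 + sum(b * x for b, x in zip(slopes, features))
    return 1.0 / (1.0 + math.exp(-linear))


# Hypothetical coefficients [b0, b1, b2], not fitted to any real data.
coefficients = [-3.0, 0.8, 1.2]

p_low = predict_probability(coefficients, [0.0, 0.0])   # linear part is -3
p_mid = predict_probability(coefficients, [1.5, 1.5])   # linear part is about 0
p_high = predict_probability(coefficients, [4.0, 3.0])  # linear part is about 3.8

# Turn a probability into a binary class with a 0.5 cutoff.
label_high = 1 if p_high >= 0.5 else 0

print(p_low, p_mid, p_high, label_high)
```

Whatever the value of the linear combination, the output stays strictly between 0 and 1, which is what lets it be read as a probability.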

Regression Analysis Examples

Examples of regression analysis include:

- Stock Market Prediction: Regression analysis can be used to predict stock prices based on various factors such as historical prices, trading volume, news sentiment, and economic indicators. Traders and investors can use this analysis to make informed decisions about buying or selling stocks.

- Demand Forecasting: In retail and e-commerce, regression analysis can help forecast demand for products. By analyzing historical sales data along with real-time data such as website traffic, promotional activities, and market trends, businesses can adjust their inventory levels and production schedules to meet customer demand more effectively.

- Energy Load Forecasting: Utility companies often use real-time regression analysis to forecast electricity demand. By analyzing historical energy consumption data, weather conditions, and other relevant factors, they can predict future energy loads. This information helps them optimize power generation and distribution, ensuring a stable and efficient energy supply.

- Online Advertising Performance: Regression analysis can be used to assess the performance of online advertising campaigns. By analyzing real-time data on ad impressions, click-through rates, conversion rates, and other metrics, advertisers can adjust their targeting, messaging, and ad placement strategies to maximize their return on investment.

- Predictive Maintenance: Regression analysis can be applied to predict equipment failures or maintenance needs. By continuously monitoring sensor data from machines or vehicles, regression models can identify patterns or anomalies that indicate potential failures. This enables proactive maintenance, reducing downtime and optimizing maintenance schedules.

- Financial Risk Assessment: Real-time regression analysis can help financial institutions assess the risk associated with lending or investment decisions. By analyzing real-time data on factors such as borrower financials, market conditions, and macroeconomic indicators, regression models can estimate the likelihood of default or assess the risk-return tradeoff for investment portfolios.

Importance of Regression Analysis

Regression analysis is important for the following reasons:

- Relationship Identification: Regression analysis helps in identifying and quantifying the relationship between a dependent variable and one or more independent variables. It allows us to determine how changes in independent variables impact the dependent variable. This information is crucial for decision-making, planning, and forecasting.

- Prediction and Forecasting: Regression analysis enables us to make predictions and forecasts based on the relationships identified. By estimating the values of the dependent variable using known values of independent variables, regression models can provide valuable insights into future outcomes. This is particularly useful in business, economics, finance, and other fields where forecasting is vital for planning and strategy development.