What Is a Research Design | Types, Guide & Examples

Published on June 7, 2021 by Shona McCombes. Revised on November 20, 2023 by Pritha Bhandari.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall research objectives and approach

- Whether you’ll rely on primary research or secondary research

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research objectives and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions about research design

Step 1: Consider your aims and approach

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities—start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

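| Qualitative approach | Quantitative approach |
|---|---|
| Gain a rich, detailed understanding of a specific context or phenomenon | Measure variables and describe frequencies, averages, and correlations; test hypotheses about relationships between variables |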

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed-methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by traveling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.

Step 2: Choose a type of research design

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

Quantitative designs can be split into four main types.

- Experimental and quasi-experimental designs allow you to test cause-and-effect relationships.

- Descriptive and correlational designs allow you to measure variables and describe relationships between them.

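| Type of design | Purpose and characteristics |
|---|---|
| Experimental | Tests cause-and-effect relationships by manipulating one variable and measuring its effect on another |
| Quasi-experimental | Tests cause-and-effect relationships when a true experiment is not feasible or ethical |
| Correlational | Measures variables and describes relationships between them without testing cause and effect |
| Descriptive | Measures variables to describe characteristics, averages, and trends |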

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships because they minimize the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re also often more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analyzing the data.

| Type of design | Purpose and characteristics |
|---|---|
| Grounded theory | |
| Phenomenology | |

Step 3: Identify your population and sampling method

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study—plants, animals, organizations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalize your results to the population as a whole.

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
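To make the idea of probability sampling more concrete, here is a minimal Python sketch of two common methods, simple random sampling and proportionate stratified sampling. The sampling frame of 1,000 students, the `year` field, and both function names are hypothetical and chosen only for illustration.

```python
import random
from collections import defaultdict


def simple_random_sample(population, n, seed=None):
    """Draw n units without replacement; every unit has the same chance."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def stratified_sample(population, key, fraction, seed=None):
    """Draw the same fraction from every stratum (proportionate allocation)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[key(unit)].append(unit)
    sample = []
    for stratum in sorted(strata):
        units = strata[stratum]
        k = max(1, round(len(units) * fraction))  # at least one unit per stratum
        sample.extend(rng.sample(units, k))
    return sample


# Hypothetical sampling frame: 1,000 students spread evenly over four year groups.
population = [{"id": i, "year": 1 + i % 4} for i in range(1000)]

srs = simple_random_sample(population, 100, seed=42)
strat = stratified_sample(population, key=lambda s: s["year"], fraction=0.1, seed=42)
```

Fixing the seed makes the draw reproducible, which helps when you need to document exactly how the sample was selected. A simple random sample can over- or under-represent a year group by chance, while the stratified version draws the same share from each one.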

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalize to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Step 4: Choose your data collection methods

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviors, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

Observation methods

Observational studies allow you to collect data unobtrusively, observing characteristics, behaviors or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

| Field | Examples of data collection methods |
|---|---|
| Media & communication | Collecting a sample of texts (e.g., speeches, articles, or social media posts) for data on cultural norms and narratives |
| Psychology | Using technologies like neuroimaging, eye-tracking, or computer-based tasks to collect data on things like attention, emotional response, or reaction time |
| Education | Using tests or assignments to collect data on knowledge and skills |
| Physical sciences | Using scientific instruments to collect data on things like weight, blood pressure, or chemical composition |

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what kinds of data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected—for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

Step 5: Plan your data collection procedures

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

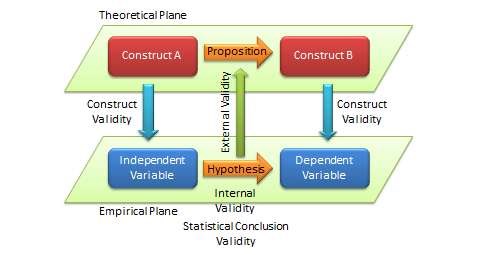

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are high in reliability and validity.

Operationalization

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalization means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in, such as questionnaires or inventories whose reliability and validity have already been established.
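As a rough illustration of operationalization, the sketch below turns an abstract concept (job satisfaction) into a single number by averaging responses to survey items. The 5-point scale, the four items, and the choice of `q3` as a negatively worded item are all assumptions made for the example, not part of any established instrument.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # assumed 5-point Likert scale


def score_respondent(answers, reverse_items=()):
    """Return the mean item score after reverse-coding negatively worded items."""
    scored = []
    for item, value in answers.items():
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{item}: response {value} is outside the scale")
        if item in reverse_items:
            # On a 1-5 scale this maps 1 -> 5, 2 -> 4, and so on.
            value = SCALE_MAX + SCALE_MIN - value
        scored.append(value)
    return mean(scored)


# Hypothetical responses from one participant; q3 is negatively worded.
answers = {"q1": 4, "q2": 5, "q3": 2, "q4": 4}
satisfaction = score_respondent(answers, reverse_items={"q3"})
```

Writing the scoring rule down before data collection forces you to decide which items count, how they are coded, and what range of responses is valid.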

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.

For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.
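One way to check a new questionnaire during a pilot study is to estimate its internal consistency, which is one of several forms of reliability. The sketch below computes Cronbach's alpha with the Python standard library. The pilot responses are invented, and alpha is offered here as an example of a reliability check rather than the only or required one.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of item scores (one row per participant)."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two participants")
    items = list(zip(*responses))  # one tuple of scores per item
    item_variance = sum(variance(column) for column in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance / total_variance)


# Hypothetical pilot data: five participants answering three items.
pilot = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 2],
]
alpha = cronbach_alpha(pilot)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Higher values suggest that the items vary together, but alpha says nothing about validity: a set of items can be highly consistent and still measure the wrong concept.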

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample—by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid research bias and ensure a representative sample?
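The question of how many participants you need can be roughed out before recruitment. The sketch below assumes you want to estimate a proportion (for example, the share of respondents who agree with a statement) and uses Cochran's formula with an optional finite population correction. The margin of error, the population of 5,000, and the 40% expected response rate are illustrative assumptions.

```python
import math
from statistics import NormalDist


def required_sample_size(margin, confidence=0.95, p=0.5, population=None):
    """Sample size for estimating a proportion within +/- margin."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n0 = z**2 * p * (1 - p) / margin**2
    if population is not None:
        # Finite population correction: smaller populations need fewer responses.
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)


def invitations_needed(n, expected_response_rate):
    """Number of people to contact so that about n of them respond."""
    return math.ceil(n / expected_response_rate)


n = required_sample_size(margin=0.05, population=5000)
invites = invitations_needed(n, expected_response_rate=0.4)
print(f"responses needed: {n}, invitations to send: {invites}")
```

Using `p=0.5` is the conservative choice because it gives the largest required sample. Other goals, such as detecting a difference between groups, call for a power analysis instead.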

Data management

It’s also important to create a data management plan for organizing and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymize and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well-organized will save time when it comes to analyzing it. It can also help other researchers validate and add to your findings (high replicability).
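As one possible way to safeguard sensitive data, the sketch below removes direct identifiers from a record and replaces them with a keyed pseudonym. The field names, the record, and the key are hypothetical. Note that this is pseudonymization rather than full anonymization: anyone who holds the key can recompute the pseudonym, so the key must be stored separately from the dataset.

```python
import hashlib
import hmac


def pseudonymize(participant_id, secret_key):
    """Derive a stable pseudonym from an identifier using a keyed hash (HMAC)."""
    digest = hmac.new(secret_key, participant_id.encode("utf-8"), hashlib.sha256)
    return "P-" + digest.hexdigest()[:12]


def strip_identifiers(record, secret_key, direct_identifiers=("name", "email")):
    """Drop direct identifiers and add a pseudonymous participant code."""
    cleaned = {k: v for k, v in record.items() if k not in direct_identifiers}
    cleaned["participant"] = pseudonymize(record["email"], secret_key)
    return cleaned


# Placeholder key for illustration only; keep a real key outside the dataset.
SECRET_KEY = b"replace-with-a-key-stored-outside-the-dataset"

record = {"name": "Ada Example", "email": "ada@example.org", "age": 34, "score": 17}
safe = strip_identifiers(record, SECRET_KEY)
```

Because the same input always yields the same pseudonym, you can still link a participant's records across data collection waves without storing their name or email in the analysis file.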

Step 6: Decide on your data analysis strategies

On its own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyze the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis. With statistics, you can summarize your sample data, make estimates, and test hypotheses.

Using descriptive statistics, you can summarize your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
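The three kinds of summary listed above can be computed with Python's standard library. This is a minimal sketch using invented test scores, and it assumes the scores are measured at an interval or ratio level, where a mean and standard deviation are meaningful.

```python
from collections import Counter
from statistics import mean, median, stdev

scores = [7, 8, 5, 9, 7, 6, 8, 7, 10, 4]  # hypothetical test scores

distribution = Counter(scores)  # frequency of each score
central = {"mean": mean(scores), "median": median(scores)}
spread = stdev(scores)  # sample standard deviation

# Print a small text histogram followed by the summary statistics.
for score in sorted(distribution):
    print(f"{score:>2}: {'#' * distribution[score]}")
print(f"mean={central['mean']:.2f} median={central['median']} sd={spread:.2f}")
```

For ordinal data, such as single Likert items, the median and the frequency table are usually the safer summaries.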

Using inferential statistics, you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.
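The sketch below illustrates both uses of inferential statistics on invented data: an interval estimate for a population mean, and a test of whether two groups differ. To stay within the standard library it swaps in a permutation test for the t test mentioned above, and the confidence interval uses a normal approximation, which is only rough for samples this small.

```python
import random
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    half_width = z * stdev(sample) / len(sample) ** 0.5
    m = mean(sample)
    return m - half_width, m + half_width


def permutation_test(group_a, group_b, n_resamples=2000, seed=0):
    """Two-sided p-value for a difference in means under random relabelling."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        a, b = pooled[:len(group_a)], pooled[len(group_a):]
        if abs(mean(a) - mean(b)) >= observed:
            extreme += 1
    # Add one to both counts so the estimated p-value is never exactly zero.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical outcome scores for two groups of eight participants.
group_a = [72, 85, 78, 90, 88, 76, 81, 84]
group_b = [65, 70, 74, 68, 72, 66, 71, 69]

low, high = mean_confidence_interval(group_a)
p_value = permutation_test(group_a, group_b)
print(f"95% CI for group A mean: ({low:.1f}, {high:.1f}); p = {p_value:.4f}")
```

A permutation test makes few distributional assumptions, which makes it a reasonable fallback when you are unsure whether your data meet the requirements of a parametric test.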

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis.

| Approach | Characteristics |
|---|---|
| Thematic analysis | |
| Discourse analysis | |

There are many other ways of analyzing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

Frequently asked questions about research design

A research design is a strategy for answering your research question. It defines your overall approach and determines how you will collect and analyze data.

A well-planned research design helps ensure that your methods match your research aims, that you collect high-quality data from credible sources, and that you use the right kind of analysis to answer your questions. This allows you to draw valid, trustworthy conclusions.

Quantitative research designs can be divided into two main categories:

- Correlational and descriptive designs are used to investigate characteristics, averages, trends, and associations between variables.

- Experimental and quasi-experimental designs are used to test causal relationships.

Qualitative research designs tend to be more flexible. Common types of qualitative design include case study, ethnography, and grounded theory designs.

The priorities of a research design can vary depending on the field, but you usually have to specify:

- Your research questions and/or hypotheses

- Your overall approach (e.g., qualitative or quantitative)

- The type of design you’re using (e.g., a survey, experiment, or case study)

- Your data collection methods (e.g., questionnaires, observations)

- Your data collection procedures (e.g., operationalization, timing, and data management)

- Your data analysis methods (e.g., statistical tests or thematic analysis)

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research. For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

In statistics, sampling allows you to test a hypothesis about the characteristics of a population.

Operationalization means turning abstract concepts into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioral avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalize the variables that you want to measure.

A research project is an academic, scientific, or professional undertaking to answer a research question. Research projects can take many forms, such as qualitative or quantitative, descriptive, longitudinal, experimental, or correlational. What kind of research approach you choose will depend on your topic.

McCombes, S. (2023, November 20). What Is a Research Design | Types, Guide & Examples. Scribbr. Retrieved August 24, 2024, from https://www.scribbr.com/methodology/research-design/

Research Design – Types, Methods and Examples

Research Design

Definition:

Research design refers to the overall strategy or plan for conducting a research study. It outlines the methods and procedures that will be used to collect and analyze data, as well as the goals and objectives of the study. Research design is important because it guides the entire research process and ensures that the study is conducted in a systematic and rigorous manner.

Types of Research Design

The main types of research design are as follows:

Descriptive Research Design

This type of research design is used to describe a phenomenon or situation. It involves collecting data through surveys, questionnaires, interviews, and observations. The aim of descriptive research is to provide an accurate and detailed portrayal of a particular group, event, or situation. It can be useful in identifying patterns, trends, and relationships in the data.

Correlational Research Design

Correlational research design is used to determine if there is a relationship between two or more variables. This type of research design involves collecting data from participants and analyzing the relationship between the variables using statistical methods. The aim of correlational research is to identify the strength and direction of the relationship between the variables.
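As a small illustration of measuring the strength and direction of a relationship, the sketch below computes Pearson's correlation coefficient by hand. The two variables and their values are invented, and Pearson's r is only one option: it assumes a roughly linear relationship between two numeric variables.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / sqrt(sxx * syy)


# Hypothetical data: daily hours online and exam score for six students.
hours_online = [1, 2, 3, 4, 5, 6]
exam_score = [88, 85, 80, 78, 70, 65]

r = pearson_r(hours_online, exam_score)
print(f"r = {r:.2f}")
```

The sign of r gives the direction of the relationship and its absolute value gives the strength. Even a strong coefficient does not show that one variable causes the other.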

Experimental Research Design

Experimental research design is used to investigate cause-and-effect relationships between variables. This type of research design involves manipulating one variable and measuring the effect on another variable. It usually involves randomly assigning participants to groups and manipulating an independent variable to determine its effect on a dependent variable. The aim of experimental research is to establish causality.
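Random assignment is simple to carry out in code. The sketch below shuffles a list of participant codes and deals them alternately into groups. The participant codes, the group names, and the function name are hypothetical.

```python
import random


def randomly_assign(participants, groups=("treatment", "control"), seed=None):
    """Shuffle participants, then deal them into groups of near-equal size."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, person in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(person)
    return assignment


# Hypothetical participant codes P001 to P040.
participants = [f"P{i:03d}" for i in range(1, 41)]
assignment = randomly_assign(participants, seed=7)
```

Because chance alone decides who ends up in which group, systematic differences between the groups are unlikely, which is what supports causal conclusions. Recording the seed lets you document and reproduce the allocation.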

Quasi-experimental Research Design

Quasi-experimental research design is similar to experimental research design, but it lacks one or more of the features of a true experiment. For example, there may not be random assignment to groups or a control group. This type of research design is used when it is not feasible or ethical to conduct a true experiment.

Case Study Research Design

Case study research design is used to investigate a single case or a small number of cases in depth. It involves collecting data through various methods, such as interviews, observations, and document analysis. The aim of case study research is to provide an in-depth understanding of a particular case or situation.

Longitudinal Research Design

Longitudinal research design is used to study changes in a particular phenomenon over time. It involves collecting data at multiple time points and analyzing the changes that occur. The aim of longitudinal research is to provide insights into the development, growth, or decline of a particular phenomenon over time.

Structure of Research Design

The format of a research design typically includes the following sections:

- Introduction: This section provides an overview of the research problem, the research questions, and the importance of the study. It also includes a brief literature review that summarizes previous research on the topic and identifies gaps in the existing knowledge.

- Research Questions or Hypotheses: This section identifies the specific research questions or hypotheses that the study will address. These questions should be clear, specific, and testable.

- Research Methods: This section describes the methods that will be used to collect and analyze data. It includes details about the study design, the sampling strategy, the data collection instruments, and the data analysis techniques.

- Data Collection: This section describes how the data will be collected, including the sample size, data collection procedures, and any ethical considerations.

- Data Analysis: This section describes how the data will be analyzed, including the statistical techniques that will be used to test the research questions or hypotheses.

- Results: This section presents the findings of the study, including descriptive statistics and statistical tests.

- Discussion and Conclusion: This section summarizes the key findings of the study, interprets the results, and discusses the implications of the findings. It also includes recommendations for future research.

- References: This section lists the sources cited in the research design.

Example of Research Design

An example of a research design could be:

Research question: Does the use of social media affect the academic performance of high school students?

Research design:

- Research approach: The research approach will be quantitative, as it involves collecting numerical data to test the hypothesis.

- Research design: The study will use a quasi-experimental pretest-posttest design with a control group.

- Sample: The sample will be 200 high school students from two schools, with 100 students in the experimental group and 100 students in the control group.

- Data collection: The data will be collected through surveys administered to the students at the beginning and end of the academic year. The surveys will include questions about their social media usage and academic performance.

- Data analysis: The data collected will be analyzed using statistical software. The mean scores of the experimental and control groups will be compared to determine whether there is a significant difference in academic performance between the two groups.

- Limitations: The limitations of the study will be acknowledged, including the fact that social media usage can vary greatly among individuals, and that the study only focuses on two schools, which may not be representative of the entire population.

- Ethical considerations: Ethical considerations will be taken into account, such as obtaining informed consent from the participants and ensuring their anonymity and confidentiality.
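The data analysis step of this example could be sketched as follows. The code compares the change in scores from pretest to posttest between the two groups and reports Welch's t statistic. All scores are invented, only six students per group are shown instead of 100, and the choice of gain scores with Welch's t is an assumption about how the comparison might be done, not a method stated in the example.

```python
from math import sqrt
from statistics import mean, variance


def gains(pre, post):
    """Change from pretest to posttest for each student."""
    return [after - before for before, after in zip(pre, post)]


def welch_t(a, b):
    """Welch's t statistic for two independent samples with unequal variances."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


# Hypothetical grade averages (0-100) at the start and end of the year.
experimental_pre = [70, 65, 80, 75, 68, 72]
experimental_post = [66, 63, 75, 74, 62, 70]
control_pre = [71, 66, 78, 74, 69, 73]
control_post = [72, 65, 79, 75, 68, 74]

experimental_gain = gains(experimental_pre, experimental_post)
control_gain = gains(control_pre, control_post)

difference = mean(experimental_gain) - mean(control_gain)
t_stat = welch_t(experimental_gain, control_gain)
print(f"difference in mean gain: {difference:.2f}, Welch t: {t_stat:.2f}")
```

Comparing gains rather than raw posttest scores partly adjusts for differences between the schools at baseline, which matters in a quasi-experimental design without random assignment. Statistical software would also convert the t statistic into a p-value.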

How to Write Research Design

Writing a research design involves planning and outlining the methodology and approach that will be used to answer a research question or hypothesis. Here are some steps to help you write a research design:

- Define the research question or hypothesis: Before beginning your research design, you should clearly define your research question or hypothesis. This will guide your research design and help you select appropriate methods.

- Select a research design: There are many different research designs to choose from, including experimental, survey, case study, and qualitative designs. Choose a design that best fits your research question and objectives.

- Develop a sampling plan: If your research involves collecting data from a sample, you will need to develop a sampling plan. This should outline how you will select participants and how many participants you will include.

- Define variables: Clearly define the variables you will be measuring or manipulating in your study. This will help ensure that your results are meaningful and relevant to your research question.

- Choose data collection methods: Decide on the data collection methods you will use to gather information. This may include surveys, interviews, observations, experiments, or secondary data sources.

- Create a data analysis plan: Develop a plan for analyzing your data, including the statistical or qualitative techniques you will use.

- Consider ethical concerns: Finally, be sure to consider any ethical concerns related to your research, such as participant confidentiality or potential harm.

When to Write Research Design

A research design should be written before the study begins. It is an important planning phase that outlines the research methodology, data collection methods, and data analysis techniques that will be used to investigate a research question or problem. The research design helps to ensure that the research is conducted in a systematic and logical manner, and that the data collected is relevant and reliable.

Ideally, the research design should be developed as early as possible in the research process, before any data is collected. This allows the researcher to carefully consider the research question, identify the most appropriate research methodology, and plan the data collection and analysis procedures in advance. By doing so, the research can be conducted in a more efficient and effective manner, and the results are more likely to be valid and reliable.

Purpose of Research Design

The purpose of research design is to plan and structure a research study in a way that enables the researcher to achieve the desired research goals with accuracy, validity, and reliability. Research design is the blueprint or the framework for conducting a study that outlines the methods, procedures, techniques, and tools for data collection and analysis.

Some of the key purposes of research design include:

- Providing a clear and concise plan of action for the research study.

- Ensuring that the research is conducted ethically and with rigor.

- Maximizing the accuracy and reliability of the research findings.

- Minimizing the possibility of errors, biases, or confounding variables.

- Ensuring that the research is feasible, practical, and cost-effective.

- Determining the appropriate research methodology to answer the research question(s).

- Identifying the sample size, sampling method, and data collection techniques.

- Determining the data analysis method and statistical tests to be used.

- Facilitating the replication of the study by other researchers.

- Enhancing the validity and generalizability of the research findings.

Applications of Research Design

There are numerous applications of research design in various fields, some of which are:

- Social sciences: In fields such as psychology, sociology, and anthropology, research design is used to investigate human behavior and social phenomena. Researchers use various research designs, such as experimental, quasi-experimental, and correlational designs, to study different aspects of social behavior.

- Education: Research design is essential in the field of education to investigate the effectiveness of different teaching methods and learning strategies. Researchers use various designs such as experimental, quasi-experimental, and case study designs to understand how students learn and how to improve teaching practices.

- Health sciences: In the health sciences, research design is used to investigate the causes, prevention, and treatment of diseases. Researchers use various designs, such as randomized controlled trials, cohort studies, and case-control studies, to study different aspects of health and healthcare.

- Business: Research design is used in the field of business to investigate consumer behavior, marketing strategies, and the impact of different business practices. Researchers use various designs, such as survey research, experimental research, and case studies, to study different aspects of the business world.

- Engineering: In the field of engineering, research design is used to investigate the development and implementation of new technologies. Researchers use various designs, such as experimental research and case studies, to study the effectiveness of new technologies and to identify areas for improvement.

Advantages of Research Design

Here are some advantages of research design:

- Systematic and organized approach: A well-designed research plan ensures that the research is conducted in a systematic and organized manner, which makes it easier to manage and analyze the data.

- Clear objectives: The research design helps to clarify the objectives of the study, which makes it easier to identify the variables that need to be measured, and the methods that need to be used to collect and analyze data.

- Minimizes bias: A well-designed research plan minimizes the chances of bias, by ensuring that the data is collected and analyzed objectively, and that the results are not influenced by the researcher’s personal biases or preferences.

- Efficient use of resources: A well-designed research plan helps to ensure that the resources (time, money, and personnel) are used efficiently and effectively, by focusing on the most important variables and methods.

- Replicability: A well-designed research plan makes it easier for other researchers to replicate the study, which enhances the credibility and reliability of the findings.

- Validity: A well-designed research plan helps to ensure that the findings are valid, by ensuring that the methods used to collect and analyze data are appropriate for the research question.

- Generalizability: A well-designed research plan helps to ensure that the findings can be generalized to other populations, settings, or situations, which increases the external validity of the study.

Research Design Vs Research Methodology

| Research Design | Research Methodology |
|---|---|
| The plan and structure for conducting research that outlines the procedures to be followed to collect and analyze data. | The set of principles, techniques, and tools used to carry out the research plan and achieve research objectives. |
| Describes the overall approach and strategy used to conduct research, including the type of data to be collected, the sources of data, and the methods for collecting and analyzing data. | Refers to the techniques and methods used to gather, analyze and interpret data, including sampling techniques, data collection methods, and data analysis techniques. |
| Helps to ensure that the research is conducted in a systematic, rigorous, and valid way, so that the results are reliable and can be used to make sound conclusions. | Includes a set of procedures and tools that enable researchers to collect and analyze data in a consistent and valid manner, regardless of the research design used. |
| Common research designs include experimental, quasi-experimental, correlational, and descriptive studies. | Common research methodologies include qualitative, quantitative, and mixed-methods approaches. |
| Determines the overall structure of the research project and sets the stage for the selection of appropriate research methodologies. | Guides the researcher in selecting the most appropriate research methods based on the research question, research design, and other contextual factors. |
| Helps to ensure that the research project is feasible, relevant, and ethical. | Helps to ensure that the data collected is accurate, valid, and reliable, and that the research findings can be interpreted and generalized to the population of interest. |

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer



The Ohio State University


Basic Research Design

What Is Research Design?

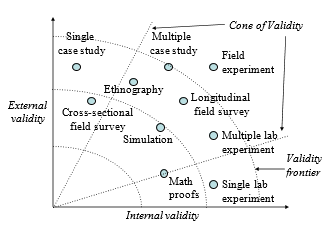

- Definition of Research Design: A procedure for generating answers to questions, crucial in determining the reliability and relevance of research outcomes.

- Importance of Strong Designs: Strong designs lead to answers that are accurate and close to their targets, while weak designs may result in misleading or irrelevant outcomes.

- Criteria for Assessing Design Strength: Evaluating a design’s strength involves understanding the research question and how the design will yield reliable empirical information.

The Four Elements of Research Design (Blair et al., 2023)

- The MIDA Framework: Research designs consist of four interconnected elements – Model (M), Inquiry (I), Data strategy (D), and Answer strategy (A), collectively referred to as MIDA.

- Theoretical Side (M and I): This encompasses the researcher’s beliefs about the world (Model) and the target of inference or the primary question to be answered (Inquiry).

- Empirical Side (D and A): This includes the strategies for collecting (Data strategy) and analyzing or summarizing information (Answer strategy).

- Interplay between Theoretical and Empirical Sides: The theoretical side sets the research challenges, while the empirical side represents the researcher’s responses to these challenges.

- Relation among MIDA Components: The diagram above shows how the four elements of a design are interconnected and how they relate to both real-world and simulated quantities.

- Parallelism in Design Representation: The illustration highlights two key parallelisms in research design – between actual and simulated processes, and between the theoretical (M, I) and empirical (D, A) sides.

- Importance of Simulated Processes: The parallelism between actual and simulated processes is crucial for understanding and evaluating research designs.

- Balancing Theoretical and Empirical Aspects: Effective research design requires a balance between theoretical considerations (models and inquiries) and empirical methodologies (data and answer strategies).
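The four MIDA elements can be made concrete with a small simulation. The sketch below is a hypothetical illustration, not code from Blair et al. (2023): the function names (`model`, `inquiry`, `data_strategy`, `answer_strategy`, `diagnose`), the true effect of 0.5, and the sample sizes are all assumptions chosen for the example. It declares one simple design, runs it many times on simulated data, and compares the estimates with the true answer to the inquiry.

```python
import random
import statistics

def model(n_units, true_effect, rng):
    """M: beliefs about the world, here two potential outcomes per unit."""
    units = []
    for _ in range(n_units):
        y0 = rng.gauss(0.0, 1.0)  # outcome if the unit is not treated
        units.append({"y0": y0, "y1": y0 + true_effect})  # outcome if treated
    return units

def inquiry(units):
    """I: the target of inference, here the average treatment effect."""
    return statistics.mean(u["y1"] - u["y0"] for u in units)

def data_strategy(units, n_sample, rng):
    """D: sample units, randomly assign half to treatment, record outcomes."""
    sample = rng.sample(units, n_sample)
    treated_ids = set(rng.sample(range(n_sample), n_sample // 2))
    data = []
    for i, u in enumerate(sample):
        treated = i in treated_ids
        data.append({"treated": treated, "y": u["y1"] if treated else u["y0"]})
    return data

def answer_strategy(data):
    """A: difference in mean outcomes between treated and control units."""
    treated = [d["y"] for d in data if d["treated"]]
    control = [d["y"] for d in data if not d["treated"]]
    return statistics.mean(treated) - statistics.mean(control)

def diagnose(n_sims=500, seed=1):
    """Run the design on many simulated worlds and summarise its errors."""
    rng = random.Random(seed)
    errors = []
    for _ in range(n_sims):
        units = model(n_units=1000, true_effect=0.5, rng=rng)
        truth = inquiry(units)
        estimate = answer_strategy(data_strategy(units, n_sample=200, rng=rng))
        errors.append(estimate - truth)
    return {
        "bias": statistics.mean(errors),
        "sd_of_error": statistics.pstdev(errors),
    }

result = diagnose()
print(result)
```

A bias close to zero suggests that, under this assumed model, the data and answer strategies target the inquiry well. Changing `model` (for example, making the effect vary across units) and re-running `diagnose` is one way to see how the same design behaves under different beliefs about the world.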

Research Design Principles (Blair et al., 2023)

- Integration of Components: Designs are effective not merely due to their individual components but how these components work together.

- Focus on Entire Design: Assessing a design requires examining how each part, such as the question, estimator, and sampling method, fits into the overall design.

- Importance of Diagnosis: The evaluation of a design’s strength lies in diagnosing the whole design, not just its parts.

- Strong Design Characteristics: Designs with parallel theoretical and empirical aspects tend to be stronger.

- The M:I:D:A Analogy: Effective designs often align data strategies with models and answer strategies with inquiries.

- Flexibility in Models: Good designs should perform well even under varying world scenarios, not just under expected conditions.

- Broadening Model Scope: Designers should consider a wide range of models, assessing the design’s effectiveness across these.

- Robustness of Inquiries and Strategies: Inquiries should yield answers and strategies should be applicable regardless of variations in real-world events.

- Diagnosis Across Models: It’s important to understand for which models a design excels and for which it falters.

- Specificity of Purpose: A design is deemed good when it aligns with a specific purpose or goal.

- Balancing Multiple Criteria: Designs should balance scientific precision, logistical constraints, policy goals, and ethical considerations.

- Diverse Goals and Assessments: Different designs may be optimal for different goals; the purpose dictates the design evaluation.

- Early Planning Benefits: Designing early allows for learning and improving design properties before data collection.

- Avoiding Post-Hoc Regrets: Early design helps avoid regrets related to data collection or question formulation.

- Iterative Improvement: The process of declaration, diagnosis, and redesign improves designs, ideally done before data collection.

- Adaptability to Changes: Designs should be flexible to adapt to unforeseen circumstances or new information.

- Expanding or Contracting Feasibility: The scope of feasible designs may change due to various practical factors.

- Continual Redesign: The principle advocates for ongoing design modification, even post research completion, for robustness and response to criticism.

- Improvement Through Sharing: Sharing designs via a formalized declaration makes it easier for others to understand and critique.

- Enhancing Scientific Communication: Well-documented designs facilitate better communication and justification of research decisions.

- Building a Design Library: The idea is to contribute designs to a shared library, allowing others to learn from and build upon existing work.

The Basics of Social Science Research Designs (Panke, 2018)

Deductive and Inductive Research

Inductive research:

- Starting Point: Begins with empirical observations or exploratory studies.

- Development of Hypotheses: Hypotheses are formulated after initial empirical analysis.

- Case Study Analysis: Involves conducting explorative case studies and analyzing dynamics at play.

- Generalization of Findings: Insights are then generalized across multiple cases to verify their applicability.

- Application: Suitable for novel phenomena or where existing theories are not easily applicable.

- Example Cases: Exploring new events like Donald Trump’s 2016 nomination or Russia’s annexation of Crimea in 2014.

Deductive research:

- Theory-Based: Starts with existing theories to develop scientific answers to research questions.

- Hypothesis Development: Hypotheses are specified and then empirically examined.

- Empirical Examination: Involves a thorough empirical analysis of hypotheses using sound methods.

- Theory Refinement: Results can refine existing theories or contribute to new theoretical insights.

- Application: Preferred when existing theories relate to the research question.

- Example Projects: Usually explanatory projects asking ‘why’ questions to uncover relationships.

Explanatory and Interpretative Research Designs

- Definition: Explanatory research aims to explain the relationships between variables, often addressing ‘why’ questions. It is primarily concerned with identifying cause-and-effect dynamics and is typically quantitative in nature. The goal is to test hypotheses derived from theories and to establish patterns that can predict future occurrences.

- Definition: Interpretative research focuses on understanding the deeper meaning or underlying context of social phenomena. It often addresses ‘how is this possible’ questions, seeking to comprehend how certain outcomes or behaviors are produced within specific contexts. This type of research is usually qualitative and prioritizes individual experiences and perceptions.

- Explanatory Research: Poses ‘why’ questions to explore causal relationships and understand what factors influence certain outcomes.

- Interpretative Research: Asks ‘how is this possible’ questions to delve into the processes and meanings behind social phenomena.

- Explanatory Research: Relies on established theories to form hypotheses about causal relationships between variables. These theories are then tested through empirical research.

- Interpretative Research: Uses theories to provide a framework for understanding the social context and meanings. The focus is on constitutive relationships rather than causal ones.

- Explanatory Research: Often involves studying multiple cases to allow for comparison and generalization. It seeks patterns across different scenarios.

- Interpretative Research: Typically concentrates on single case studies, providing an in-depth understanding of that particular case without necessarily aiming for generalization.

- Explanatory Research: Aims to produce findings that can be generalized to other similar cases or populations. It seeks universal or broad patterns.

- Interpretative Research: Offers detailed insights specific to a single case or context. These findings are not necessarily intended to be generalized but to provide a deep understanding of the particular case.

Qualitative, Quantitative, and Mixed-method Projects

- Definition: Qualitative research is exploratory and aims to understand human behavior, beliefs, feelings, and experiences. It involves collecting non-numerical data, often through interviews, focus groups, or textual analysis. This method is ideal for gaining in-depth insights into specific phenomena.

- Example in Education: A qualitative study might involve conducting in-depth interviews with teachers to explore their experiences and challenges with remote teaching during the pandemic. This research would aim to understand the nuances of their experiences, challenges, and adaptations in a detailed and descriptive manner.

- Definition: Quantitative research seeks to quantify data and generalize results from a sample to the population of interest. It involves measurable, numerical data and often uses statistical methods for analysis. This approach is suitable for testing hypotheses or examining relationships between variables.

- Example in Education: A quantitative study could involve surveying a large number of students to determine the correlation between the amount of time spent on homework and their academic achievement. This would involve collecting numerical data (hours of homework, grades) and applying statistical analysis to examine relationships or differences.

- Definition: Mixed-method research combines both qualitative and quantitative approaches, providing a more comprehensive understanding of the research problem. It allows for the exploration of complex research questions by integrating numerical data analysis with detailed narrative data.

- Example in Education: A mixed-method study might investigate the impact of a new teaching method. The research could start with quantitative methods, like administering standardized tests to measure learning outcomes, followed by qualitative methods, such as conducting focus groups with students and teachers to understand their perceptions and experiences with the new teaching method. This combination provides both statistical results and in-depth understanding.

- Research Questions: What kind of information is needed to answer the questions? Qualitative for “how” and “why”, quantitative for “how many” or “how much”, and mixed methods for a comprehensive understanding of both the breadth and depth of a phenomenon.

- Nature of the Study: Is the study aiming to explore a new area (qualitative), confirm hypotheses (quantitative), or achieve both (mixed-method)?

- Resources Available: Time, funding, and expertise available can influence the choice. Qualitative research can be more time-consuming, while quantitative research may require specific statistical skills.

- Data Sources: Availability and type of data also guide the methodology. Existing numerical data might lean towards quantitative, while studies requiring personal experiences or opinions might be qualitative.

References:

Blair, G., Coppock, A., & Humphreys, M. (2023). Research Design in the Social Sciences: Declaration, Diagnosis, and Redesign. Princeton University Press.

Panke, D. (2018). Research design & method selection: Making good choices in the social sciences. Research Design & Method Selection, 1-368.

Research Design 101

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Eunice Rautenbach (DTech) & Kerryn Warren (PhD) | April 2023

Navigating the world of research can be daunting, especially if you’re a first-time researcher. One concept you’re bound to run into fairly early in your research journey is that of “research design”. Here, we’ll guide you through the basics using practical examples, so that you can approach your research with confidence.

Overview: Research Design 101

- What is research design?

- Research design types for quantitative studies

- Video explainer : quantitative research design

- Research design types for qualitative studies

- Video explainer : qualitative research design

- How to choose a research design

- Key takeaways

Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final data analysis. A good research design serves as the blueprint for how you, as the researcher, will collect and analyse data while ensuring consistency, reliability and validity throughout your study.

Understanding different types of research designs is essential as it helps ensure that your approach is suitable given your research aims, objectives and questions, as well as the resources you have available to you. Without a clear big-picture view of how you’ll design your research, you run the risk of making misaligned choices in terms of your methodology – especially your sampling, data collection and data analysis decisions.

The problem with defining research design…

One of the reasons students struggle with a clear definition of research design is because the term is used very loosely across the internet, and even within academia.

Some sources claim that the three research design types are qualitative, quantitative and mixed methods, which isn’t quite accurate (these just refer to the type of data that you’ll collect and analyse). Other sources state that research design refers to the sum of all your design choices, suggesting it’s more like a research methodology. Others run off on other less common tangents. No wonder there’s confusion!

In this article, we’ll clear up the confusion. We’ll explain the most common research design types for both qualitative and quantitative research projects, whether that is for a full dissertation or thesis, or a smaller research paper or article.

Research Design: Quantitative Studies

Quantitative research involves collecting and analysing data in a numerical form. Broadly speaking, there are four types of quantitative research designs: descriptive, correlational, experimental, and quasi-experimental.

Descriptive Research Design

As the name suggests, descriptive research design focuses on describing existing conditions, behaviours, or characteristics by systematically gathering information without manipulating any variables. In other words, there is no intervention on the researcher’s part – only data collection.

For example, if you’re studying smartphone addiction among adolescents in your community, you could deploy a survey to a sample of teens asking them to rate their agreement with certain statements that relate to smartphone addiction. The collected data would then provide insight regarding how widespread the issue may be – in other words, it would describe the situation.

The key defining attribute of this type of research design is that it purely describes the situation. In other words, descriptive research design does not explore potential relationships between different variables or the causes that may underlie those relationships. Descriptive research is therefore useful for generating insight into a research problem by describing its characteristics, and it is often used as a precursor to other research design types.
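To make the smartphone survey example concrete, here is a minimal sketch of what “describing the situation” might look like in practice. The responses are made-up values on an assumed 1 to 5 agreement scale, and the `describe` function and the “agree” cut-off of 4 are hypothetical choices for illustration only.

```python
from collections import Counter
import statistics

# Hypothetical responses to one survey statement (1 = strongly disagree,
# 5 = strongly agree). These values are invented for the example.
responses = [4, 5, 3, 4, 2, 5, 4, 1, 3, 4]

def describe(scores):
    """Summarise a list of Likert-style scores without testing any relationship."""
    counts = Counter(scores)
    n = len(scores)
    return {
        "n": n,
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        # Share of respondents who chose 4 or 5 (assumed cut-off for "agree").
        "share_agree": sum(1 for s in scores if s >= 4) / n,
        "frequencies": dict(sorted(counts.items())),
    }

summary = describe(responses)
print(summary)
```

Note that nothing here compares groups or links one variable to another. The output only characterises how responses are distributed, which is exactly the scope of a descriptive design.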

Correlational Research Design

Correlational design is a popular choice for researchers aiming to identify and measure the relationship between two or more variables without manipulating them. In other words, this type of research design is useful when you want to know whether a change in one thing tends to be accompanied by a change in another thing.

For example, if you wanted to explore the relationship between exercise frequency and overall health, you could use a correlational design to help you achieve this. In this case, you might gather data on participants’ exercise habits, as well as records of their health indicators like blood pressure, heart rate, or body mass index. Thereafter, you’d use a statistical test to assess whether there’s a relationship between the two variables (exercise frequency and health).

As you can see, correlational research design is useful when you want to explore potential relationships between variables that cannot be manipulated or controlled for ethical, practical, or logistical reasons. It is particularly helpful in terms of developing predictions, and given that it doesn’t involve the manipulation of variables, it can be implemented at a large scale more easily than experimental designs (which we’ll look at next).

That said, it’s important to keep in mind that correlational research design has limitations – most notably that it cannot be used to establish causality. In other words, correlation does not equal causation. To establish causality, you’ll need to move into the realm of experimental design, coming up next…
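As a rough illustration of the statistical step in the exercise example, the sketch below computes a Pearson correlation coefficient by hand. Pearson’s r is only one of several tests you might use, and the data (weekly exercise sessions and resting heart rate) are invented for the example.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variation_x = sum((x - mean_x) ** 2 for x in xs)
    variation_y = sum((y - mean_y) ** 2 for y in ys)
    if variation_x == 0 or variation_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return covariation / math.sqrt(variation_x * variation_y)

# Hypothetical data for seven participants.
exercise_sessions_per_week = [0, 1, 2, 3, 4, 5, 6]
resting_heart_rate = [78, 76, 74, 71, 70, 66, 65]

r = pearson_r(exercise_sessions_per_week, resting_heart_rate)
print(round(r, 3))
```

A value of r near -1 or +1 indicates a strong linear relationship and a value near 0 a weak one. Even a very strong r in data like this would not show that exercise causes a lower heart rate, for the reasons given above.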


Experimental Research Design

Experimental research design is used to determine if there is a causal relationship between two or more variables. With this type of research design, you, as the researcher, manipulate one variable (the independent variable) and measure its effect on another (the dependent variable), while holding other variables constant (control variables). Doing so allows you to observe the effect of the former on the latter and draw conclusions about potential causality.

For example, if you wanted to measure if/how different types of fertiliser affect plant growth, you could set up several groups of plants, with each group receiving a different type of fertiliser, as well as one with no fertiliser at all. You could then measure how much each plant group grew (on average) over time and compare the results from the different groups to see which fertiliser was most effective.

Overall, experimental research design provides researchers with a powerful way to identify and measure causal relationships (and the direction of causality) between variables. However, developing a rigorous experimental design can be challenging as it’s not always easy to control all the variables in a study. This often results in smaller sample sizes, which can reduce the statistical power and generalisability of the results.

Moreover, experimental research design requires random assignment. This means that the researcher needs to assign participants to different groups or conditions in a way that each participant has an equal chance of being assigned to any group (note that this is not the same as random sampling). Doing so helps reduce the potential for bias and confounding variables. This need for random assignment can lead to ethics-related issues. For example, withholding a potentially beneficial medical treatment from a control group may be considered unethical in certain situations.
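Random assignment is straightforward to sketch in code. The example below uses the fertiliser scenario from above; the function name `randomly_assign`, the plant labels and the condition names are all hypothetical. It shuffles the units and then deals them into conditions in turn, so every unit has the same chance of landing in any group and group sizes differ by at most one.

```python
import random

def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them round-robin into the conditions."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, participant in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(participant)
    return groups

# Hypothetical units and conditions for the fertiliser example.
plants = [f"plant_{i:02d}" for i in range(12)]
conditions = ["no_fertiliser", "fertiliser_A", "fertiliser_B"]

groups = randomly_assign(plants, conditions, seed=42)
for condition, members in groups.items():
    print(condition, members)
```

Random sampling would be a separate, earlier step: choosing which 12 plants (or which participants) enter the study at all. The function above only decides which condition each already-selected unit receives.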

Quasi-Experimental Research Design

Quasi-experimental research design is used when the research aims involve identifying causal relations, but one cannot (or doesn’t want to) randomly assign participants to different groups (for practical or ethical reasons). Instead, with a quasi-experimental research design, the researcher relies on existing groups or pre-existing conditions to form groups for comparison.

For example, if you were studying the effects of a new teaching method on student achievement in a particular school district, you may be unable to randomly assign students to either group and instead have to choose classes or schools that already use different teaching methods. This way, you still achieve separate groups, without having to assign participants to specific groups yourself.

Naturally, quasi-experimental research designs have limitations when compared to experimental designs. Given that participant assignment is not random, it’s more difficult to confidently establish causality between variables, and, as a researcher, you have less control over other variables that may impact findings.

All that said, quasi-experimental designs can still be valuable in research contexts where random assignment is not possible and can often be undertaken on a much larger scale than experimental research, thus increasing the statistical power of the results. What’s important is that you, as the researcher, understand the limitations of the design and conduct your quasi-experiment as rigorously as possible, paying careful attention to any potential confounding variables.
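To see why non-random assignment makes causal claims harder, consider the simulated sketch below. Everything in it is an assumption made for illustration: the true effect of 5 points, the score distributions, and the rule that students with higher prior achievement are more likely to already be in classes using the new teaching method. It compares a simple difference in group means under random assignment and under pre-existing groups.

```python
import random
import statistics

def simulate(n=2000, true_effect=5.0, randomised=True, seed=0):
    """Return the difference in mean scores between treated and control groups."""
    rng = random.Random(seed)
    treated_scores, control_scores = [], []
    for _ in range(n):
        prior = rng.gauss(50.0, 10.0)  # prior achievement (the confounder)
        if randomised:
            treated = rng.random() < 0.5
        else:
            # Pre-existing groups: stronger students are more likely to be
            # in classes that already use the new method (assumed rule).
            treated = prior + rng.gauss(0.0, 10.0) > 50.0
        score = prior + (true_effect if treated else 0.0) + rng.gauss(0.0, 5.0)
        (treated_scores if treated else control_scores).append(score)
    return statistics.mean(treated_scores) - statistics.mean(control_scores)

experimental_estimate = simulate(randomised=True)
quasi_estimate = simulate(randomised=False)
print(f"randomised groups:   {experimental_estimate:.1f}")
print(f"pre-existing groups: {quasi_estimate:.1f}")
```

In this made-up world, the randomised comparison lands near the true effect, while the comparison of pre-existing groups overstates it because the groups already differed in prior achievement. A careful quasi-experiment would measure such variables and account for them in the analysis.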

Research Design: Qualitative Studies

There are many different research design types when it comes to qualitative studies, but here we’ll narrow our focus to explore the “Big 4”. Specifically, we’ll look at phenomenological design, grounded theory design, ethnographic design, and case study design.

Phenomenological Research Design

Phenomenological design involves exploring the meaning of lived experiences and how they are perceived by individuals. This type of research design seeks to understand people’s perspectives, emotions, and behaviours in specific situations. Here, the aim for researchers is to uncover the essence of human experience without making any assumptions or imposing preconceived ideas on their subjects.

For example, you could adopt a phenomenological design to study why cancer survivors have such varied perceptions of their lives after overcoming their disease. This could be achieved by interviewing survivors and then analysing the data using a qualitative analysis method such as thematic analysis to identify commonalities and differences.

Phenomenological research design typically involves in-depth interviews or open-ended questionnaires to collect rich, detailed data about participants’ subjective experiences. This richness is one of the key strengths of phenomenological research design but, naturally, it also has limitations. These include potential biases in data collection and interpretation and the lack of generalisability of findings to broader populations.

Grounded Theory Research Design

Grounded theory (also referred to as “GT”) aims to develop theories by continuously and iteratively analysing and comparing data collected from a relatively large number of participants in a study. It takes an inductive (bottom-up) approach, with a focus on letting the data “speak for itself”, without being influenced by preexisting theories or the researcher’s preconceptions.

As an example, let’s assume your research aims involved understanding how people cope with chronic pain from a specific medical condition, with a view to developing a theory around this. In this case, grounded theory design would allow you to explore this concept thoroughly without preconceptions about what coping mechanisms might exist. You may find that some patients prefer cognitive-behavioural therapy (CBT) while others prefer to rely on herbal remedies. Based on multiple, iterative rounds of analysis, you could then develop a theory in this regard, derived directly from the data (as opposed to other preexisting theories and models).

Grounded theory typically involves collecting data through interviews or observations and then analysing it to identify patterns and themes that emerge from the data. These emerging ideas are then validated by collecting more data until a saturation point is reached (i.e., no new information can be squeezed from the data). From that base, a theory can then be developed.
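The idea of a saturation point can be sketched as a simple stopping rule. In the hypothetical example below, each interview has already been coded into a set of themes, and saturation is declared once a chosen number of consecutive interviews (`patience`, an assumed parameter) add no new codes. In real projects, judging saturation is an interpretive decision rather than a mechanical count, so treat this purely as an illustration.

```python
def find_saturation_point(coded_interviews, patience=2):
    """Return the 1-based interview number at which saturation is declared.

    Saturation here means `patience` consecutive interviews produced no
    codes that had not been seen before. Returns None if never reached.
    """
    seen_codes = set()
    interviews_without_new = 0
    for number, codes in enumerate(coded_interviews, start=1):
        new_codes = set(codes) - seen_codes
        seen_codes |= new_codes
        interviews_without_new = 0 if new_codes else interviews_without_new + 1
        if interviews_without_new >= patience:
            return number
    return None

# Hypothetical codes from five interviews about coping with chronic pain.
interviews = [
    {"medication", "exercise"},
    {"exercise", "CBT"},
    {"herbal remedies", "medication"},
    {"CBT", "exercise"},
    {"medication", "herbal remedies"},
]

saturation_at = find_saturation_point(interviews, patience=2)
print(saturation_at)
```

With these invented codes, the fourth and fifth interviews add nothing new, so the rule stops at interview five. A return value of `None` would signal that more data collection is needed before theory development.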

As you can see, grounded theory is ideally suited to studies where the research aims involve theory generation, especially in under-researched areas. Keep in mind though that this type of research design can be quite time-intensive, given the need for multiple rounds of data collection and analysis.

Ethnographic Research Design

Ethnographic design involves observing and studying a culture-sharing group of people in their natural setting to gain insight into their behaviours, beliefs, and values. The focus here is on observing participants in their natural environment (as opposed to a controlled environment). This typically involves the researcher spending an extended period of time with the participants in their environment, carefully observing and taking field notes.

All of this is not to say that ethnographic research design relies purely on observation. On the contrary, this design typically also involves in-depth interviews to explore participants’ views, beliefs, etc. However, unobtrusive observation is a core component of the ethnographic approach.

As an example, an ethnographer may study how different communities celebrate traditional festivals or how individuals from different generations interact with technology differently. This may involve a lengthy period of observation, combined with in-depth interviews to further explore specific areas of interest that emerge as a result of the observations that the researcher has made.

As you can probably imagine, ethnographic research design has the ability to provide rich, contextually embedded insights into the socio-cultural dynamics of human behaviour within a natural, uncontrived setting. Naturally, however, it does come with its own set of challenges, including researcher bias (since the researcher can become quite immersed in the group), participant confidentiality and, predictably, ethical complexities. All of these need to be carefully managed if you choose to adopt this type of research design.

Case Study Design

With case study research design, you, as the researcher, investigate a single individual (or a single group of individuals) to gain an in-depth understanding of their experiences, behaviours or outcomes. Unlike other research designs that are aimed at larger sample sizes, case studies offer a deep dive into the specific circumstances surrounding a person, group of people, event or phenomenon, generally within a bounded setting or context.

As an example, a case study design could be used to explore the factors influencing the success of a specific small business. This would involve diving deeply into the organisation to explore and understand what makes it tick – from marketing to HR to finance. In terms of data collection, this could include interviews with staff and management, review of policy documents and financial statements, surveying customers, etc.

While the above example is focused squarely on one organisation, it’s worth noting that case study research designs can have different variations, including single-case, multiple-case and longitudinal designs. As you can see in the example, a single-case design involves intensely examining a single entity to understand its unique characteristics and complexities. Conversely, in a multiple-case design, multiple cases are compared and contrasted to identify patterns and commonalities. Lastly, in a longitudinal case design, a single case or multiple cases are studied over an extended period of time to understand how factors develop over time.

As you can see, a case study research design is particularly useful where a deep and contextualised understanding of a specific phenomenon or issue is desired. However, this strength is also its weakness. In other words, you can’t generalise the findings from a case study to the broader population. So, keep this in mind if you’re considering going the case study route.

How To Choose A Research Design

Having worked through all of these potential research designs, you’d be forgiven for feeling a little overwhelmed and wondering, “But how do I decide which research design to use?”. While we could write an entire post covering that alone, here are a few factors to consider that will help you choose a suitable research design for your study.

Data type: The first determining factor is naturally the type of data you plan to be collecting – i.e., qualitative or quantitative. This may sound obvious, but we have to be clear about this – don’t try to use a quantitative research design on qualitative data (or vice versa)!

Research aim(s) and question(s): As with all methodological decisions, your research aim and research questions will heavily influence your research design. For example, if your research aims involve developing a theory from qualitative data, grounded theory would be a strong option. Similarly, if your research aims involve identifying and measuring relationships between variables, a correlational or experimental design would likely be a better option, depending on whether you need to establish causality.

Time: It’s essential that you consider any time constraints you have, as this will impact the type of research design you can choose. For example, if you’ve only got a month to complete your project, a lengthy design such as ethnography wouldn’t be a good fit.

Resources: Take into account the resources realistically available to you, as these need to factor into your research design choice. For example, if you require highly specialised lab equipment to execute an experimental design, you need to be sure that you’ll have access to that before you make a decision.

Keep in mind that when it comes to research, it’s important to manage your risks and play as conservatively as possible. If your entire project relies on you achieving a huge sample, having access to niche equipment or holding interviews with very difficult-to-reach participants, you’re creating risks that could kill your project. So, be sure to think through your choices carefully and make sure that you have backup plans for any existential risks. Remember that a relatively simple methodology executed well will typically earn better marks than a highly complex methodology executed poorly.

Recap: Key Takeaways

We’ve covered a lot of ground here. Let’s recap by looking at the key takeaways:

- Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final analysis of data.

- Research designs for quantitative studies include descriptive, correlational, experimental and quasi-experimental designs.

- Research designs for qualitative studies include phenomenological, grounded theory, ethnographic and case study designs.

- When choosing a research design, you need to consider a variety of factors, including the type of data you’ll be working with, your research aims and questions, your time and the resources available to you.




Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions


Step 1: Consider your aims and approach

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

| Qualitative approach | Quantitative approach |
|---|---|

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.


Step 2: Choose a type of research design

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

| Type of design | Purpose and characteristics |
|---|---|
| Experimental | |
| Quasi-experimental | |
| Correlational | |
| Descriptive | |

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).
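To make the idea of describing variables and measuring a relationship concrete, here is a minimal Python sketch. The data (hours studied and exam scores) and the helper name `pearson_r` are invented for illustration and do not come from any real study. The sketch computes simple averages and a Pearson correlation coefficient; a high value only describes how the two variables move together and says nothing about which one, if either, causes the other.

```python
import statistics


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (var_x * var_y) ** 0.5


# Hypothetical data for five students (illustrative only).
hours_studied = [2, 4, 5, 7, 9]
exam_scores = [52, 58, 65, 71, 80]

# Descriptive step: summarise each variable on its own.
mean_hours = statistics.fmean(hours_studied)
mean_score = statistics.fmean(exam_scores)

# Correlational step: measure how strongly the two variables move together.
r = pearson_r(hours_studied, exam_scores)

print(f"mean hours: {mean_hours:.1f}, mean score: {mean_score:.1f}, r = {r:.2f}")
```

A strong positive `r` here would be consistent with many explanations (for example, a third variable such as prior knowledge), which is why a correlational design alone cannot establish cause and effect.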

Experiments are the strongest way to test cause-and-effect relationships without the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

| Type of design | Purpose and characteristics |
|---|---|
| Grounded theory | |
| Phenomenology | |

Step 3: Identify your population and sampling method

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.

| Probability sampling | Non-probability sampling |
|---|---|

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
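The difference between the two approaches can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the population is a made-up list of 200 student IDs, and the function names `simple_random_sample` and `convenience_sample` are hypothetical. Simple random sampling stands in for probability sampling, and "take whoever is first on the list" stands in for one kind of non-probability (convenience) sampling.

```python
import random


def simple_random_sample(population, n, seed=None):
    """Probability sampling: every member has an equal chance of selection."""
    rng = random.Random(seed)  # a seed makes the draw reproducible
    return rng.sample(population, n)


def convenience_sample(population, n):
    """Non-probability sampling: take the first n members that are easy to reach."""
    return list(population[:n])


# Hypothetical sampling frame of 200 students (illustrative only).
population = [f"student_{i:03d}" for i in range(1, 201)]

prob_sample = simple_random_sample(population, 20, seed=42)
conv_sample = convenience_sample(population, 20)

print(prob_sample[:5])
print(conv_sample[:5])
```

The convenience sample always contains the same first 20 IDs, so any pattern in how the list is ordered (for example, by enrolment date) carries straight into the sample. The random draw avoids that particular bias, but it requires a complete list of the population to draw from, which is often the hard part in practice.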

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Step 4: Choose your data collection methods

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

| Questionnaires | Interviews |
|---|---|

Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

| Quantitative observation | |
|---|---|

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

| Field | Examples of data collection methods |
|---|---|
| Media & communication | Collecting a sample of texts (e.g., speeches, articles, or social media posts) for data on cultural norms and narratives |
| Psychology | Using technologies like neuroimaging, eye-tracking, or computer-based tasks to collect data on things like attention, emotional response, or reaction time |
| Education | Using tests or assignments to collect data on knowledge and skills |
| Physical sciences | Using scientific instruments to collect data on things like weight, blood pressure, or chemical composition |

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

Step 5: Plan your data collection procedures

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity have already been established.
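As a rough illustration of operationalisation, the sketch below turns the abstract concept of "satisfaction" into a number. Everything in it is an assumption made for the example: the three survey items (`q1` to `q3`), the 1 to 5 response scale, the choice of `q3` as a negatively worded (reverse-coded) item, and the function name `satisfaction_score`. An established questionnaire would come with its own scoring rules, which should be followed instead.

```python
LIKERT_MIN, LIKERT_MAX = 1, 5  # assumed response range: 1 = strongly disagree, 5 = strongly agree
REVERSE_CODED = {"q3"}         # hypothetical negatively worded item, e.g. "I often feel frustrated"


def satisfaction_score(responses):
    """Average a respondent's item ratings into one score, flipping reverse-coded items."""
    if not responses:
        raise ValueError("no responses to score")
    scored = []
    for item, value in responses.items():
        if not LIKERT_MIN <= value <= LIKERT_MAX:
            raise ValueError(f"{item}: rating {value} is outside the allowed range")
        if item in REVERSE_CODED:
            # On a 1-5 scale this maps 1 -> 5, 2 -> 4, 3 -> 3, 4 -> 2, 5 -> 1.
            value = LIKERT_MIN + LIKERT_MAX - value
        scored.append(value)
    return sum(scored) / len(scored)


# One hypothetical respondent's answers.
respondent = {"q1": 4, "q2": 5, "q3": 2}
score = satisfaction_score(respondent)

print(f"satisfaction score: {score:.2f}")
```

Deciding in advance which items count, what range of answers is offered, and how they are combined is what makes the measurement repeatable across respondents.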

Reliability and validity