
Critical thinking in healthcare and education


- Jonathan M Sharples, professor 1,

- Andrew D Oxman, research director 2,

- Kamal R Mahtani, clinical lecturer 3,

- Iain Chalmers, coordinator 4,

- Sandy Oliver, professor 1,

- Kevan Collins, chief executive 5,

- Astrid Austvoll-Dahlgren, senior researcher 2,

- Tammy Hoffmann, professor 6

- 1 EPPI-Centre, UCL Department of Social Science, London, UK

- 2 Global Health Unit, Norwegian Institute of Public Health, Oslo, Norway

- 3 Centre for Evidence-Based Medicine, Oxford University, Oxford, UK

- 4 James Lind Initiative, Oxford, UK

- 5 Education Endowment Foundation, London, UK

- 6 Centre for Research in Evidence-Based Practice, Bond University, Gold Coast, Australia

- Correspondence to: J M Sharples Jonathan.Sharples{at}eefoundation.org.uk

Critical thinking is just one skill crucial to evidence based practice in healthcare and education, write Jonathan Sharples and colleagues, who see exciting opportunities for cross sector collaboration

Imagine you are a primary care doctor. A patient comes into your office with acute, atypical chest pain. Immediately you consider the patient’s sex and age, and you begin to think about what questions to ask and what diagnoses and diagnostic tests to consider. You will also need to think about what treatments to consider and how to communicate with the patient and potentially with the patient’s family and other healthcare providers. Some of what you do will be done reflexively, with little explicit thought, but caring for most patients also requires you to think critically about what you are going to do.

Critical thinking, the ability to think clearly and rationally about what to do or what to believe, is essential for the practice of medicine. Few doctors are likely to argue with this. Yet, until recently, the UK regulator the General Medical Council and similar bodies in North America did not mention “critical thinking” anywhere in their standards for licensing and accreditation, 1 and critical thinking is not explicitly taught or assessed in most education programmes for health professionals. 2

Moreover, although more than 2800 articles indexed by PubMed have “critical thinking” in the title or abstract, most are about nursing. We argue that it is important for clinicians and patients to learn to think critically and that the teaching and learning of these skills should be considered explicitly. Given the shared interest in critical thinking with broader education, we also highlight why healthcare and education professionals and researchers need to work together to enable people to think critically about the health choices they make throughout life.

Essential skills for doctors and patients

Critical thinking …


ANA Nursing Resources Hub


What is Evidence-Based Practice in Nursing?

5 min read • June, 01 2023

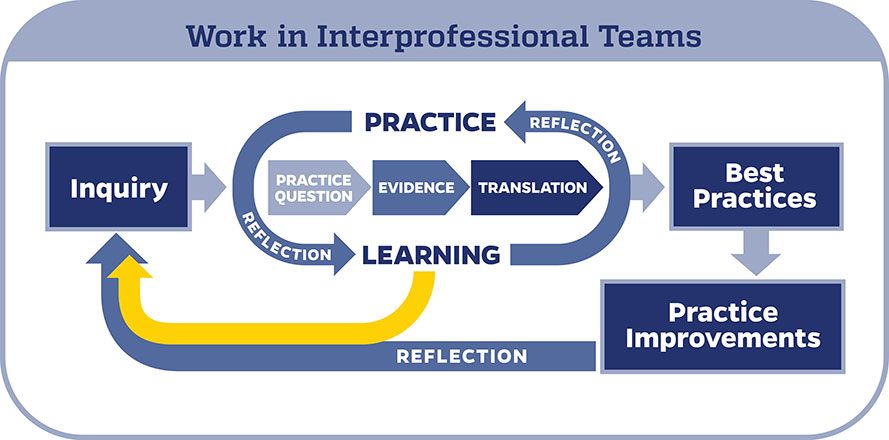

Evidence-based practice in nursing involves providing holistic, quality care based on the most up-to-date research and knowledge rather than traditional methods, advice from colleagues, or personal beliefs.

Nurses can expand their knowledge and improve their clinical practice experience by collecting, processing, and implementing research findings. Evidence-based practice focuses on what's at the heart of nursing — your patient. Learn what evidence-based practice in nursing is, why it's essential, and how to incorporate it into your daily patient care.

How to Use Evidence-Based Practice in Nursing

Evidence-based practice requires you to review and assess the latest research. The knowledge gained from evidence-based research in nursing may indicate that a standard nursing care policy in your practice should change. Discuss your findings with your nurse manager and team before implementation. Once you've gained their support and ensured compliance with your facility's policies and procedures, merge nursing implementations based on this information with your patient's values to provide the most effective care.

You may already be using evidence-based nursing practices without knowing it. Research findings support a significant percentage of nursing practices, and ongoing studies anticipate this will continue to increase.

Evidence-Based Practice in Nursing Examples

There are various examples of evidence-based practice in nursing, such as:

- Use of oxygen to help with hypoxia and organ failure in patients with COPD

- Management of angina

- Protocols regarding alarm fatigue

- Recognition of a family member's influence on a patient's presentation of symptoms

- Noninvasive measurement of blood pressure in children

Improving patient care begins by asking how you can make it a safer, more compassionate, and personal experience.

Learn about pertinent evidence-based practice information on our Clinical Practice Material page.

Five Steps to Implement Evidence-Based Practice in Nursing

Evidence-based nursing draws upon critical reasoning and judgment skills developed through experience and training. You can practice evidence-based nursing interventions by following five crucial steps that serve as guidelines for making patient care decisions. This process includes incorporating the best external evidence, your clinical expertise, and the patient's values and expectations.

- Ask a clear question about the patient's issue and determine an ultimate goal, such as improving a procedure to help their specific condition.

- Acquire the best evidence by searching relevant clinical articles from legitimate sources.

- Appraise the resources gathered to determine if the information is valid, of optimal quality compared to the evidence levels, and relevant for the patient.

- Apply the evidence to clinical practice by making decisions based on your nursing expertise and the new information.

- Assess outcomes to determine if the treatment was effective and should be considered for other patients.

Analyzing Evidence-Based Research Levels

You can compare current professional and clinical practices with new research outcomes when evaluating evidence-based research. But how do you know what's considered the best information?

Use critical thinking skills and consider levels of evidence to establish the reliability of the information when you analyze evidence-based research. These levels can help you determine how much emphasis to place on a study, report, or clinical practice guideline when making decisions about patient care.

The Levels of Evidence-Based Practice

Four primary levels of evidence come into play when you're making clinical decisions.

- Level A acquires evidence from randomized, controlled trials and is considered the most reliable.

- Level B evidence is obtained from quality-designed control trials without randomization.

- Level C typically gets implemented when there is limited information about a condition and acquires evidence from a consensus viewpoint or expert opinion.

- Level ML (multi-level) is usually applied to complex cases and gets its evidence from more than one of the other levels.
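The four levels above can be read as a simple lookup from study design to evidence level. The following is a minimal sketch of that idea, not a clinical tool: the study-design labels and the `classify` helper are hypothetical names introduced here for illustration, and the rule that mixed designs map to Level ML is one reading of "gets its evidence from more than one of the other levels".

```python
from enum import Enum


class EvidenceLevel(Enum):
    A = "randomized, controlled trials"
    B = "quality-designed control trials without randomization"
    C = "consensus viewpoint or expert opinion"
    ML = "multi-level: evidence from more than one of the other levels"


# Hypothetical study-design labels; the article does not define these strings.
DESIGN_TO_LEVEL = {
    "randomized_controlled_trial": EvidenceLevel.A,
    "nonrandomized_controlled_trial": EvidenceLevel.B,
    "expert_opinion": EvidenceLevel.C,
    "consensus_statement": EvidenceLevel.C,
}


def classify(designs):
    """Return the evidence level for a collection of study-design labels.

    An unknown label raises KeyError; an empty collection raises ValueError.
    """
    levels = {DESIGN_TO_LEVEL[d] for d in designs}
    if not levels:
        raise ValueError("at least one study design is required")
    if len(levels) > 1:
        # Evidence drawn from more than one level is treated as multi-level.
        return EvidenceLevel.ML
    return levels.pop()
```

For example, `classify(["randomized_controlled_trial", "expert_opinion"])` would return `EvidenceLevel.ML` under these assumptions, while a single randomized trial would return `EvidenceLevel.A`.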

Why Is Evidence-Based Practice in Nursing Essential?

Implementing evidence-based practice in nursing bridges the theory-to-practice gap and delivers innovative patient care using the most current health care findings. The topic of evidence-based practice will likely come up throughout your nursing career. Its origins trace back to Florence Nightingale. This iconic founder of modern nursing gathered data and conclusions regarding the relationship between unsanitary conditions and failing health. Its application remains essential today.

Other Benefits of Evidence-Based Practice in Nursing

Besides keeping health care practices relevant and current, evidence-based practice in nursing offers a range of other benefits to you and your patients:

- Promotes positive patient outcomes

- Reduces health care costs by preventing complications

- Contributes to the growth of the science of nursing

- Allows for incorporation of new technologies into health care practice

- Increases nurse autonomy and confidence in decision-making

- Ensures relevancy of nursing practice with new interventions and care protocols

- Provides scientifically supported research to help make well-informed decisions

- Fosters shared decision-making with patients in care planning

- Enhances critical thinking

- Encourages lifelong learning

When you use the principles of evidence-based practice in nursing to make decisions about your patient's care, it results in better outcomes, higher satisfaction, and reduced costs. Implementing this method promotes lifelong learning and lets you strive for continuous quality improvement in your clinical care and nursing practice to achieve nursing excellence.

Images sourced from Getty Images


What Is Critical Thinking? | Definition & Examples

Published on May 30, 2022 by Eoghan Ryan. Revised on May 31, 2023.

Critical thinking is the ability to effectively analyze information and form a judgment.

To think critically, you must be aware of your own biases and assumptions when encountering information, and apply consistent standards when evaluating sources.

Critical thinking skills help you to:

- Identify credible sources

- Evaluate and respond to arguments

- Assess alternative viewpoints

- Test hypotheses against relevant criteria

Table of contents

- Why is critical thinking important?

- Critical thinking examples

- How to think critically

- Other interesting articles

- Frequently asked questions about critical thinking

Critical thinking is important for making judgments about sources of information and forming your own arguments. It emphasizes a rational, objective, and self-aware approach that can help you to identify credible sources and strengthen your conclusions.

Critical thinking is important in all disciplines and throughout all stages of the research process. The types of evidence used in the sciences and in the humanities may differ, but critical thinking skills are relevant to both.

In academic writing, critical thinking can help you to determine whether a source:

- Is free from research bias

- Provides evidence to support its research findings

- Considers alternative viewpoints

Outside of academia, critical thinking goes hand in hand with information literacy to help you form opinions rationally and engage independently and critically with popular media.


Critical thinking can help you to identify reliable sources of information that you can cite in your research paper. It can also guide your own research methods and inform your own arguments.

Outside of academia, critical thinking can help you to be aware of both your own and others’ biases and assumptions.

Academic examples

However, when you compare the findings of the study with other current research, you determine that the results seem improbable. You analyze the paper again, consulting the sources it cites.

You notice that the research was funded by the pharmaceutical company that created the treatment. Because of this, you view its results skeptically and determine that more independent research is necessary to confirm or refute them.

Example: Poor critical thinking in an academic context

You’re researching a paper on the impact wireless technology has had on developing countries that previously did not have large-scale communications infrastructure. You read an article that seems to confirm your hypothesis: the impact is mainly positive. Rather than evaluating the research methodology, you accept the findings uncritically.

Nonacademic examples

However, you decide to compare this review article with consumer reviews on a different site. You find that these reviews are not as positive. Some customers have had problems installing the alarm, and some have noted that it activates for no apparent reason.

You revisit the original review article. You notice that the words “sponsored content” appear in small print under the article title. Based on this, you conclude that the review is advertising and is therefore not an unbiased source.

Example: Poor critical thinking in a nonacademic context

You support a candidate in an upcoming election. You visit an online news site affiliated with their political party and read an article that criticizes their opponent. The article claims that the opponent is inexperienced in politics. You accept this without evidence, because it fits your preconceptions about the opponent.

There is no single way to think critically. How you engage with information will depend on the type of source you’re using and the information you need.

However, you can engage with sources in a systematic and critical way by asking certain questions when you encounter information. Like the CRAAP test, these questions focus on the currency, relevance, authority, accuracy, and purpose of a source of information.

When encountering information, ask:

- Who is the author? Are they an expert in their field?

- What do they say? Is their argument clear? Can you summarize it?

- When did they say this? Is the source current?

- Where is the information published? Is it an academic article? Is it peer-reviewed?

- Why did the author publish it? What is their motivation?

- How do they make their argument? Is it backed up by evidence? Does it rely on opinion, speculation, or appeals to emotion? Do they address alternative arguments?
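One way to apply the questions above consistently is to record a yes/no judgment for each CRAAP criterion and list the ones a source fails. The sketch below is illustrative only: `SourceCheck` and `failed_criteria` are hypothetical names, and the pairing of each question with a criterion in the comments is an assumption rather than something the article specifies.

```python
from dataclasses import dataclass, fields


@dataclass
class SourceCheck:
    currency: bool   # When did they say this? Is the source current?
    relevance: bool  # What do they say? Is it clear and relevant to your need?
    authority: bool  # Who is the author, and where is it published?
    accuracy: bool   # How is the argument made? Is it backed up by evidence?
    purpose: bool    # Why did the author publish it?


def failed_criteria(check):
    """Return the names of the criteria the source did not satisfy,
    in the order currency, relevance, authority, accuracy, purpose."""
    return [f.name for f in fields(check) if not getattr(check, f.name)]
```

A sponsored review with an unnamed author might be recorded as `SourceCheck(True, True, False, True, False)`, which flags authority and purpose for a closer look. The booleans still depend on your own judgment; the structure only makes sure no criterion is skipped.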

Critical thinking also involves being aware of your own biases, not only those of others. When you make an argument or draw your own conclusions, you can ask similar questions about your own writing:

- Am I only considering evidence that supports my preconceptions?

- Is my argument expressed clearly and backed up with credible sources?

- Would I be convinced by this argument coming from someone else?


Critical thinking refers to the ability to evaluate information and to be aware of biases or assumptions, including your own.

Like information literacy, it involves evaluating arguments, identifying and solving problems in an objective and systematic way, and clearly communicating your ideas.


You can assess information and arguments critically by asking certain questions about the source. You can use the CRAAP test, focusing on the currency, relevance, authority, accuracy, and purpose of a source of information.

Ask questions such as:

- Who is the author? Are they an expert?

- How do they make their argument? Is it backed up by evidence?

A credible source should pass the CRAAP test and follow these guidelines:

- The information should be up to date and current.

- The author and publication should be a trusted authority on the subject you are researching.

- The sources the author cited should be easy to find, clear, and unbiased.

- For a web source, the URL and layout should signify that it is trustworthy.

Information literacy refers to a broad range of skills, including the ability to find, evaluate, and use sources of information effectively.

Being information literate means that you:

- Know how to find credible sources

- Use relevant sources to inform your research

- Understand what constitutes plagiarism

- Know how to cite your sources correctly

Confirmation bias is the tendency to search, interpret, and recall information in a way that aligns with our pre-existing values, opinions, or beliefs. It refers to the ability to recollect information best when it amplifies what we already believe. Relatedly, we tend to forget information that contradicts our opinions.

Although selective recall is a component of confirmation bias, it should not be confused with recall bias.

On the other hand, recall bias refers to the differences in the ability between study participants to recall past events when self-reporting is used. This difference in accuracy or completeness of recollection is not related to beliefs or opinions. Rather, recall bias relates to other factors, such as the length of the recall period, age, and the characteristics of the disease under investigation.


Ryan, E. (2023, May 31). What Is Critical Thinking? | Definition & Examples. Scribbr. Retrieved August 12, 2024, from https://www.scribbr.com/working-with-sources/critical-thinking/


- Volume 24, Issue 3

- Evidence-based practice education for healthcare professions: an expert view


- Elaine Lehane 1,

- Patricia Leahy-Warren 1,

- Cliona O’Riordan 1,

- Eileen Savage 1,

- Jonathan Drennan 1,

- Colm O’Tuathaigh 2,

- Michael O’Connor 3,

- Mark Corrigan 4,

- Francis Burke 5,

- Martina Hayes 5,

- Helen Lynch 6,

- Laura Sahm 7,

- Elizabeth Heffernan 8,

- Elizabeth O’Keeffe 9,

- Catherine Blake 10,

- Frances Horgan 11,

- Josephine Hegarty 1

- 1 Catherine McAuley School of Nursing and Midwifery, University College Cork, Cork, Ireland

- 2 School of Medicine, University College Cork, Cork, Ireland

- 3 Postgraduate Medical Training, Cork University Hospital/Royal College of Physicians, Cork, Ireland

- 4 Postgraduate Surgical Training, Breast Cancer Centre, Cork University Hospital/Royal College of Surgeons, Cork, Ireland

- 5 School of Dentistry, University College Cork, Cork, Ireland

- 6 School of Clinical Therapies, University College Cork, Cork, Ireland

- 7 School of Pharmacy, University College Cork, Cork, Ireland

- 8 Nursing and Midwifery Planning and Development Unit, Kerry Centre for Nurse and Midwifery Education, Cork, Ireland

- 9 Symptomatic Breast Imaging Unit, Cork University Hospital, Cork, Ireland

- 10 School of Public Health, Physiotherapy and Sports Science, University College Dublin, Dublin, Ireland

- 11 School of Physiotherapy, Royal College of Surgeons in Ireland, Dublin, Ireland

- Correspondence to Dr Elaine Lehane, Catherine McAuley School of Nursing and Midwifery, University College Cork, Cork T12 K8AF, Ireland; e.lehane{at}ucc.ie

https://doi.org/10.1136/bmjebm-2018-111019


- qualitative research

Introduction

To highlight and advance clinical effectiveness and evidence-based practice (EBP) agendas, the Institute of Medicine set a goal that by 2020, 90% of clinical decisions will be supported by accurate, timely and up-to-date clinical information and will reflect the best available evidence to achieve the best patient outcomes. 1 To ensure that future healthcare users can be assured of receiving such care, healthcare professions must effectively incorporate the necessary knowledge, skills and attitudes required for EBP into education programmes.

The application of EBP continues to be observed irregularly at the point of patient contact. 2 5 7 The effective development and implementation of professional education to facilitate EBP remains a major and immediate challenge. 2 3 6 8 Momentum for continued improvement in EBP education in the form of investigations which can provide direction and structure to developments in this field is recommended. 6

As part of a larger national project looking at current practice and provision of EBP education across healthcare professions at undergraduate, postgraduate and continuing professional development programme levels, we sought key perspectives from international EBP education experts on the provision of EBP education for healthcare professionals. The two other components of this study, namely a rapid review synthesis of EBP literature and a descriptive, cross-sectional, national, online survey relating to the current provision and practice of EBP education to healthcare professionals at third-level institutions and professional training/regulatory bodies in Ireland, will be described in later publications.

EBP expert interviews were conducted to ascertain current and nuanced information on EBP education from an international perspective. Experts from the UK, Canada, New Zealand and Australia were invited by email to participate based on their contribution to peer-reviewed literature on the subject area and recognised innovation in EBP education. Over a 2-month period, individual ‘Skype’ interviews were conducted and recorded. The interview guide (online supplementary appendix A) focused on current practice and provision of EBP education with specific attention given to EBP curricula, core EBP competencies, assessment methods, teaching initiatives and key challenges to EBP education within respective countries. Qualitative content analysis techniques as advised by Bogner et al 9 for examination of expert interviews were used. Specifically, a six-step process was applied, namely transcription, reading through/paraphrasing, coding, thematic comparison, sociological conceptualisation and theoretical generalisation. To ensure trustworthiness, a number of practices were undertaken, including explicit description of the methods and participant profile, extensive use of interview transcripts by way of representative quotations, peer review (PL-W) of the data analysis process and inviting interviewees to give feedback on the overall findings.


Five EBP experts participated in the interviews (table 1). All experts waived their right to anonymity.


EBP education expert profile

Three main categories emerged, namely (1) ‘EBP curriculum considerations’, (2) ‘Teaching EBP’ and (3) ‘Stakeholder engagement in EBP education’. These categories informed the overarching theme of ‘Improving healthcare through enhanced teaching and application of EBP’ (figure 1).


Figure 1: Summary of data analysis findings from evidence-based practice (EBP) expert interviews: theme, categories and subcategories.

EBP curriculum considerations

Definitive advice in relation to curriculum considerations was provided with a clear emphasis on the need for EBP principles to be integrated throughout all elements of healthcare professions curricula. Educators, regardless of teaching setting, need to be able to ‘draw out evidence-based components’ from any and all aspects of curriculum content, including its incorporation into assessments and examinations. Integration of EBP into clinical curricula in particular was considered essential to successful learning and practice outcomes. If students perceive a dichotomy between EBP and actual clinical care, then “never the twain shall meet” (GG) requiring integration in such a way that it is “seen as part of the basics of optimal clinical care” (GG). Situating EBP as a core element within the professional curriculum and linking it to professional accreditation processes places further emphasis on the necessity of teaching EBP:

…it is also core in residency programmes. So every residency programme has a curriculum on evidence-based practice where again, the residency programmes are accredited…They have to show that they’re teaching evidence-based practice. (GG)

In terms of the focus of curriculum content, all experts emphasised the oft-cited steps of asking questions, acquiring, appraising and applying evidence to patient care decisions. With regard to identifying and retrieving information, the following in particular was noted:

…the key competencies would be to identify evidence-based sources of information, and one of the key things is there should be no expectation that clinicians are going to go to primary research and evaluate primary research. That is simply not a realistic expectation. In teaching it…they have to be able to identify the pre-processed sources and they have to be able to understand the evidence and they have to be able to use it… (GG)

In addition to attaining proficiency in the fundamental EBP steps, developing competence in communicating evidence to others, including the patient, and facilitating shared decision-making were also highlighted:

…So our ability to communicate risks, benefits, understand uncertainty is so poor…that’s a key area we could improve… (CH)

…and a big emphasis [is needed] on the applicability of that information on patient care, how do you use and share the decision making, which is becoming a bigger and bigger deal. (GG)

It was suggested that these EBP ‘basics’ can be taught “from the start in very similar ways” (GG), regardless of whether the student is at an undergraduate or postgraduate level. The concept of ‘developmental milestones’ was raised by one expert. This related to different levels of expectations in learning and assessing EBP skills and knowledge throughout a programme of study with an incremental approach to teaching and learning advocated over a course of study:

…in terms of developmental milestones. So for the novice…it’s really trying to get them aware of what the structure of evidence-based practice is and knowing what the process of asking a question and the PICO process and learning about that…in their final year…they’re asked to do critically appraised topics and relate it to clinical cases…It’s a developmental process… (LT)

Teaching EBP

Adoption of effective strategies and practical methods to realise successful student learning and understanding was emphasised. Of particular note was the grounding of teaching strategy and associated methods from a clinically relevant perspective with student exposure to EBP facilitated in a dynamic and interesting manner. The use of patient examples and clinical scenarios was repeatedly expressed as one of the most effective instructional practices:

…ultimately trying to get people to teach in a way where they go, “Look, this is really relevant, dynamic and interesting”…so we teach them in loads of different ways…you’re teaching and feeding the ideas as opposed to “Here’s a definitive course in this way”. (CH)

…It’s pretty obscure stuff, but then I get them to do three examples…when they have done that they have pretty well got their heads around it…I build them lots of practical examples…clinical examples otherwise they think it’s all didactic garbage… (BA)

EBP role models were emphasised as being integral to demonstrating the application of EBP in clinical decision-making and facilitating the contextualisation of EBP within a specific setting/organisation.

…where we’ve seen success is where organisations have said, “There’s going to be two or three people who are going to be the champions and lead where we’re going”…the issue about evidence, it’s complex, it needs to be contextualised and it’s different for each setting… (CH)

It was further suggested that these healthcare professionals have the ‘X-factor’ required of EBP. The acquisition of such expertise which enables a practitioner to integrate individual EBP components culminating in evidence-based decisions was proposed as a definitive target for all healthcare professionals.

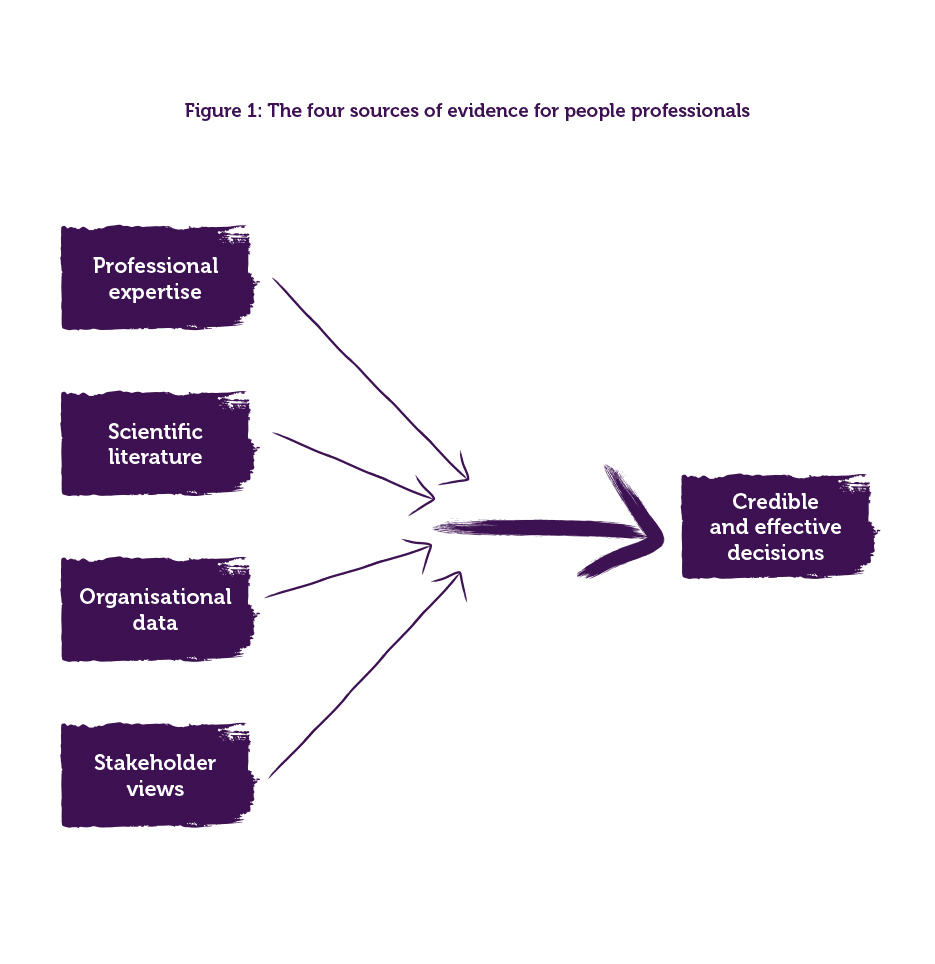

And we call it the X factor…the idea is that the clinician who has the X factor is the good clinician. It’s actually integrating the evidence, the patient values, the patient’s pathophysiology, etc. It could be behavioural issues, systems issues…Those are the four quadrants and the clinical expertise is about integrating those together…You’re not actually adding clinical expertise. It seems to me that the clinical expertise is the ability to integrate those four quadrants. (RJ)

The provision of training for educators to aid the further development of skills and use of resources necessary for effective EBP teaching was recommended:

…so we choose the option to train people as really good teachers and give them really high level skills so that they can then seed it across their organisation… (CH)

Attaining a critical mass of people who are ‘trained’ was also deemed important in making a sustained change:

…and it requires getting the teachers trained and getting enough of them. You don’t need everybody to be doing it to make an impression, but you need enough of them really doing it. (GG)

Stakeholder engagement in EBP education

Engagement of national policy makers, healthcare professionals and patients with EBP was considered to have significant potential to advance its teaching and application in clinical care. The lack of a coherent government and national policy on EBP teaching was cited as a barrier to the implementation of the EBP agenda, resulting in a somewhat ‘ad-hoc’ approach, dependent on individual educational or research institutions:

…there’s no cohesive or coherent policy that exists…It’s not been a consistent approach. What we’ve tended to see is that people have started going around particular initiatives…but there’s never been any coordinated approach even from a college perspective, to say we are about improving the uptake and use of evidence in practice and/or generating evidence in practice. And so largely, it’s been left to research institutions… (CH)

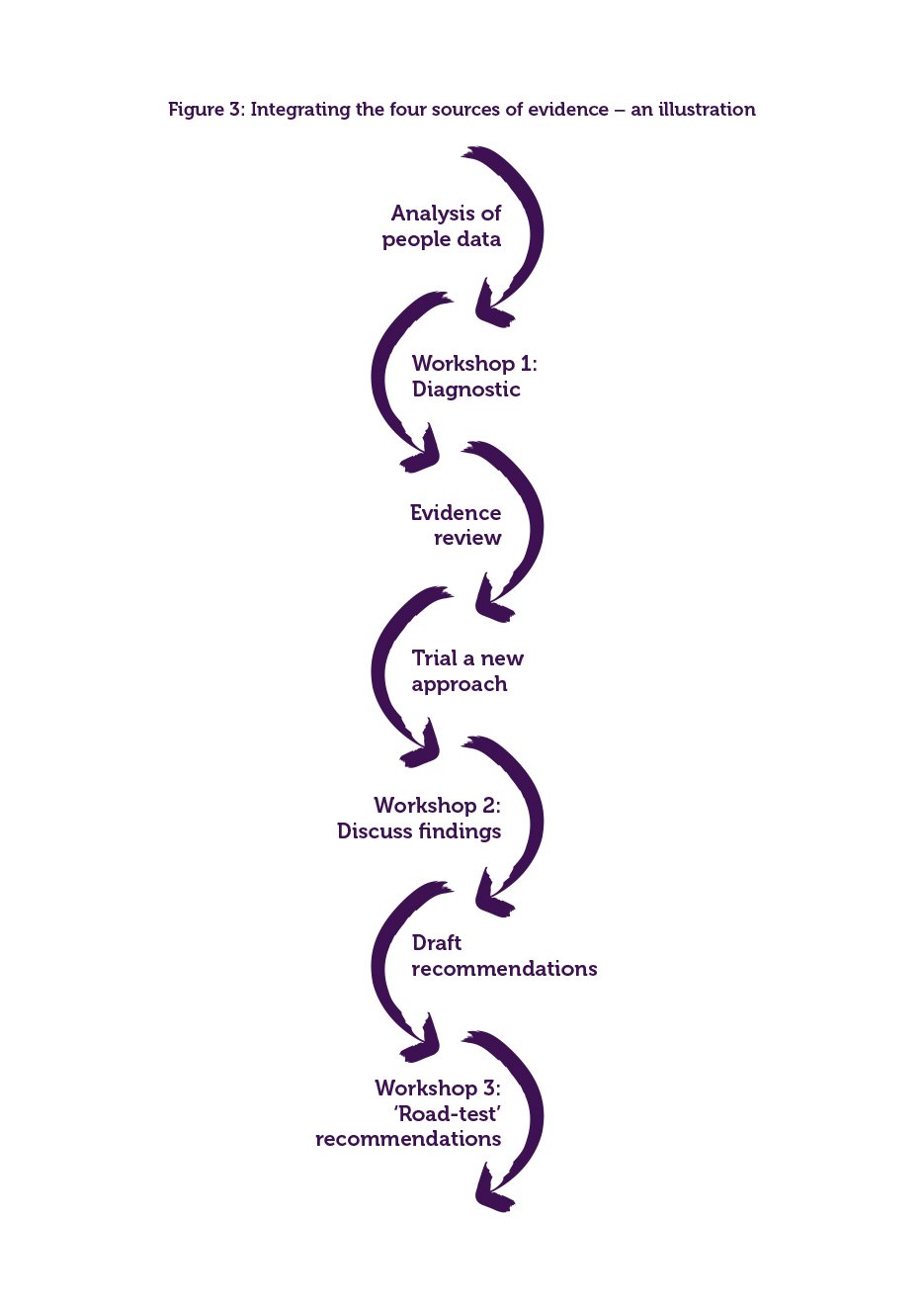

To further ingrain EBP within healthcare professional practice, it was suggested that EBP processes, whether related to developing, disseminating or implementing evidence, be embedded in a more structured way into everyday clinical care to promote active and consistent engagement with EBP on a continuous basis:

…we think it should be embedded into care…we’ve got to have people being active in developing, disseminating and implementing evidence…developing can come in a number of formats. It can be an audit. It can be about a practice improvement. It can be about doing some aspect like a systematic review, but it’s very clearly close to healthcare. (CH)

Enabling patients to engage with evidence with a view to informing healthcare professional/patient interactions and care decisions was also advocated:

…I think we really need to put some energy into…this whole idea of patient-driven care, patient-led care and putting some of these tools in the hands of the consumers so that they’re enabled to be able to ask the right questions and to go into an interaction with some background knowledge about what treatments they should be expecting. (LT)

If patients are considered as recipients of EBP rather than key stakeholders, the premise of shared decision-making for care cannot be achieved.

Successful EBP education is necessary so that learners not only understand the importance of EBP and become competent in its fundamental steps, but also apply EBP in their professional practice, so that it ultimately influences their decision-making. In essence, it serves the function of developing practitioners who value EBP and have the knowledge and skills to implement such practice. The ultimate goal of this agenda is to enhance the delivery of healthcare for improved patient outcomes. The overarching theme of ‘Improving healthcare through enhanced teaching and application of EBP’ represents the focus and purpose of the effort required to optimally structure healthcare professional (HCP) curricula, promote effective EBP teaching and learning strategies, and engage with key stakeholders for the overall advancement of EBP education, as noted:

…we think that everyone in training should be in the game of improving healthcare…It’s not just saying I want to do some evidence-based practice…it’s ultimately about…improving healthcare. (CH)

Discussion and recommendations

Education programmes and associated curricula act as a key medium for shaping healthcare professional knowledge, skills and attitudes, and therefore play an essential role in determining the quality of care provided. 10 Unequivocal recommendations were made in relation to the pervasive integration of EBP throughout the academic and clinical curricula. Such integration is facilitated by the explicit inclusion of EBP as a core competency within professional standards and requirements in addition to accreditation processes. 11

Further emphasis on communication skills was also noted as being key to enhancing EBP competency, particularly in relation to realising shared decision-making between patients and healthcare practitioners in making evidence-based decisions. A systematic review by Galbraith et al , 12 which examined a ‘real-world’ approach to evidence-based medicine in general practice, corroborates this recommendation by calling for further attention to be given to communication skills of healthcare practitioners within the context of being an evidence-based practitioner. This resonates with recommendations by Gorgon et al 13 for the need to expose students to the intricacies of ‘real world’ contexts in which EBP is applied.

Experts in EBP, together with trends throughout empirical research and recognised educational theory, repeatedly make a number of recommendations for enhancing EBP teaching and learning strategies. These include (1) clinical integration of EBP teaching and learning, (2) a conscious effort on behalf of educators to embed EBP throughout all elements of healthcare professional programmes, (3) the use of multifaceted, dynamic teaching and assessment strategies which are context-specific and relevant to the individual learner/professional cohort, and (4) ‘scaffolding’ of learning.

At a practical level this requires a more concerted effort to move away from a predominant reliance on stand-alone didactic teaching towards clinically integrative and interactive teaching. 10 14–17 An example provided by one of the EBP experts represents such integrated teaching and experiential learning through the performance of GATE/CATs (Graphic Appraisal Tool for Epidemiological studies/Critically Appraised Topics) while on clinical rotation, with assessment conducted by a clinician in practice. Such an activity fulfils the criteria of being reflective of practice, facilitating the identification of gaps between current and desired levels of competence, identifying solutions for clinical issues and allowing re-evaluation and opportunity for reflection of decisions made with a practitioner. This level of interactivity facilitates ‘deeper’ learning, which is essential for knowledge transfer. 8 Such practices are also essential to bridge the gap between academic and clinical worlds, enabling students to experience ‘real’ translation of EBP in the clinical context. 6 ‘Scaffolding’ of learning, whereby EBP concepts and their application increase in complexity and are reinforced throughout a programme, was also highlighted as an essential instructional approach which is in keeping with recent literature specific both to EBP education and from a broader curriculum development perspective. 3 6 18 19

In addition to addressing challenges such as curriculum organisation and programme content/structure, identifying salient barriers to implementing optimal EBP education is recommended as an expedient approach to effecting positive change. 20 Highlighted strategies to overcome such barriers included (1) ‘Training the trainers’, (2) development of and investment in a national coherent approach to EBP education, and (3) structural incorporation of EBP learning into workplace settings.

National surveys of EBP education delivery 21 22 found that a lack of academic and clinical staff knowledgeable in teaching EBP was a barrier to effective and efficient student learning. This was echoed by findings from EBP expert interviews, which correspond with assertions by Hitch and Nicola-Richmond 6 that while recommended educational practices and resources are available, their uptake is somewhat limited. Effective teacher/leader education is required to improve EBP teaching quality. 10 16 23 24 Such formal training should extend to academic and clinical educators. Supporting staff to have confidence and competence in teaching EBP and providing opportunities for learning throughout education programmes is necessary to facilitate tangible change in this area.

A national and coherent plan with associated investment in healthcare education specific to the integration of EBP was highlighted as having an important impact on educational outcomes. The lack of a coordinated and cohesive approach and perceived value of EBP in the midst of competing interests, particularly within the context of the healthcare agenda, was suggested to lead to an ‘ad-hoc’ approach to the implementation of and investment in EBP education and related core EBP resources. Findings from a systematic scoping review of recommendations for the implementation of EBP 16 draw attention to a number of interventions at a national level that have potential to further promote and facilitate EBP education. Such interventions include government-level policy direction in relation to EBP education requirements across health profession programmes and the establishment and financing of a national institute for the development of evidence-based guidelines.

Incorporating EBP activities into routine clinical practice has potential to promote the consistent participation and implementation of EBP. Such incorporation can be facilitated at various different levels and settings. At a health service level, the provision of computer and internet facilities at the point of care with associated content management/decision support systems allowing access to guidelines, protocols, critically appraised topics and condensed recommendations was endorsed. At a local workplace level, access to EBP mentors, implementation of consistent and regular journal clubs, grand rounds, audit and regular research meetings are important to embed EBP within the healthcare and education environments. This in turn can nurture a culture which practically supports the observation and actualisation of EBP in day-to-day practice 16 and could in theory allow the coherent development of cohorts of EBP leaders.

There are study limitations which must be acknowledged. Four of the five interviewees were medical professionals. Further inclusion of allied healthcare professionals may have increased the representativeness of the findings. However, the primary selection criteria for participants were extensive and recognised expertise in relation to EBP education, the fundamental premises of which traverse specific professional boundaries.

Despite positive attitudes towards EBP and a predominant recognition of its necessity for the delivery of quality and safe healthcare, its consistent translation at the point of care remains elusive. To this end, continued investigations which seek to provide further direction and structure to developments in EBP education are recommended. 6 Although the quality of evidence has remained variable regarding the efficacy of individual EBP teaching interventions, consistent trends in relation to valuable andragogically sound educational approaches, fundamental curricular content and preferential instructional practices are evident within the literature in the past decade. The adoption of such trends is far from prevalent, which brings into question the extent of awareness that exists in relation to such recommendations and accompanying resources. There is a need to translate EBP into an active clinical resolution, which will have a positive impact on the delivery of patient care. In particular, an examination of current discourse between academic and clinical educators across healthcare professions is required to progress a ‘real world’ pragmatic approach to the integration of EBP education which has meaningful relevance to students and engenders active engagement from educators, clinicians and policy makers alike. Further attention is needed on strategies that not only focus on issues such as curricula structure, content and programme delivery but which support educators, education institutions, health services and clinicians to have the capacity and competence to meet the challenge of providing such EBP education.

Summary Box

What is already known?

Evidence-based practice (EBP) is established as a fundamental element and key indicator of high-quality patient care.

Both achieving competency and delivering instruction in EBP are complex processes requiring a multimodal approach.

Currently there exists only a modest utilisation of existing resources available to further develop EBP education.

What are the new findings?

In addition to developing competence in the fundamental EBP steps of ‘Ask’, ‘Acquire’, ‘Appraise’, ‘Apply’ and ‘Assess’, developing competence in effectively communicating evidence to others, in particular patients/service users, is an area newly emphasised as requiring additional attention by healthcare educators.

The successful expansion of the assessment and evaluation of EBP requires a pragmatic amplification of the discourse between academic and clinical educators.

How might it impact on clinical practice in the foreseeable future?

Quality of care is improved through the integration of the best available evidence into decision-making as routine practice and not in the extemporised manner often currently practised.

Acknowledgments

Special thanks to Professor Leanne Togher, Professor Carl Heneghan, Professor Bruce Arroll, Professor Rodney Jackson and Professor Gordon Guyatt, who provided key insights on EBP education from an international perspective. Thank you to Dr Niamh O’Rourke, Dr Eve O’Toole, Dr Sarah Condell and Professor Dermot Malone for their helpful direction throughout the project.

- 1. Institute of Medicine (IOM) (US) Roundtable on Evidence-Based Medicine. Leadership Commitments to Improve Value in Healthcare: Finding Common Ground: Workshop Summary. Washington (DC): National Academies Press (US), 2009.

- Summerskill W ,

- Glasziou P , et al

- Saroyan A ,

- Dauphinee WD

- Thangaratinam S ,

- Barnfield G ,

- Weinbrenner S , et al

- Barends E ,

- Nicola-Richmond K

- Zeleníková R ,

- Ren D , et al

- Menz W , et al

- Volmink J , et al

- Bhutta ZA , et al

- Galbraith K ,

- Gorgon EJ ,

- Fiddes P , et al

- Coomarasamy A ,

- Ubbink DT ,

- Guyatt GH ,

- Vermeulen H

- Kortekaas MF ,

- Bartelink ML ,

- van der Heijden GJ , et al

- Odabaşı O , et al

- Camosso-Stefinovic J ,

- Gillies C , et al

- Blanco MA ,

- Capello CF ,

- Dorsch JL , et al

- Heneghan C ,

- Crilly M , et al

- Ingvarson L ,

- Walczak J ,

- Gabryś E , et al

- Ahmadi SF ,

- Baradaran HR ,

- Tilson JK ,

- Kaplan SL ,

- Harris JL , et al

Contributors This project formed part of a national project on EBP education in Ireland of which all named authors are members. The authors named on this paper made substantial contributions to both the acquisition and analysis of data, in addition to reviewing the report and paper for submission.

Funding This research was funded by the Clinical Effectiveness Unit of the National Patient Safety Office (NPSO), Department of Health, Ireland.

Competing interests None declared.

Patient consent Not required.

Ethics approval All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional ethical committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. Ethical approval was granted by the Social Research Ethics Committee, University College Cork (Log 2016–140).

Provenance and peer review Not commissioned; externally peer reviewed.

Data sharing statement The full report entitled ‘Research on Teaching EBP in Ireland to healthcare professionals and healthcare students’ is available on the National Clinical Effectiveness, Department of Health website.

Critical thinking: knowledge and skills for evidence-based practice

Affiliation

- 1 Communication Sciences and Special Education, 514 Aderhold Hall, University of Georgia, Athens, GA 30602, USA.

- PMID: 20601532

- DOI: 10.1044/0161-1461(2010/09-0037)

Purpose: I respond to Kamhi's (2011) conclusion in his article "Balancing Certainty and Uncertainty in Clinical Practice" that rational or critical thinking is an essential complement to evidence-based practice (EBP).

Method: I expand on Kamhi's conclusion and briefly describe what clinicians might need to know to think critically within an EBP profession. Specifically, I suggest how critical thinking is relevant to EBP, broadly summarize the relevant skills, indicate the importance of thinking dispositions, and outline the various ways our thinking can go wrong.

Conclusion: I finish the commentary by suggesting that critical thinking skills should be considered a required outcome of our professional training programs.

- Balancing certainty and uncertainty in clinical practice. Kamhi AG. Lang Speech Hear Serv Sch. 2011 Jan;42(1):59-64. doi: 10.1044/0161-1461(2009/09-0034). Epub 2009 Oct 15. PMID: 19833828

Similar articles

- Some pragmatic tips for dealing with clinical uncertainty. Bernstein Ratner N. Lang Speech Hear Serv Sch. 2011 Jan;42(1):77-80; discussion 88-93. doi: 10.1044/0161-1461(2009/09-0033). Epub 2009 Oct 15. PMID: 19833829

- Rational thinking in school-based practice. Clark MK, Flynn P. Lang Speech Hear Serv Sch. 2011 Jan;42(1):73-6; discussion 88-93. doi: 10.1044/0161-1461(2010/09-0043). Epub 2010 Jul 2. PMID: 20601529

- Questions about certainty and uncertainty in clinical practice. Nelson NW. Lang Speech Hear Serv Sch. 2011 Jan;42(1):81-7; discussion 88-93. doi: 10.1044/0161-1461(2010/09-0046). Epub 2010 Jul 2. PMID: 20601528

- Critical thinking a new approach to patient care. Sullivan DL, Chumbley C. JEMS. 2010 Apr;35(4):48-53. doi: 10.1016/S0197-2510(10)70094-2. PMID: 20399376 Review.

- Identifying and managing common childhood language and speech impairments. Reilly S, McKean C, Morgan A, Wake M. BMJ. 2015 May 14;350:h2318. doi: 10.1136/bmj.h2318. PMID: 25976972 Review. No abstract available.

- Synchronous online lecturing or blended flipped classroom with jigsaw: an educational intervention during the Covid-19 pandemic. Mohebbi Z, Mortezaei-Haftador A, Mehrabi M. BMC Med Educ. 2022 Dec 7;22(1):845. doi: 10.1186/s12909-022-03915-5. PMID: 36476447 Free PMC article.

What is Evidence-Based Practice in Nursing? (With Examples, Benefits, & Challenges)

Are you a nurse looking for ways to increase patient satisfaction, improve patient outcomes, and impact the profession? Have you found yourself caught between traditional nursing approaches and new patient care practices? Although evidence-based practices have been used for years, this concept is the focus of patient care today more than ever. Perhaps you are wondering, “What is evidence-based practice in nursing?” In this article, I will share information to help you begin understanding evidence-based practice in nursing, along with 10 examples of how to implement EBP.

What is Evidence-Based Practice in Nursing?

7. Are There Different Levels Of Evidence-Based Practices In Nursing?

- Level One: Meta-analysis of randomized clinical trials and experimental studies.

- Level Two: Quasi-experimental studies. These are focused studies used to evaluate interventions.

- Level Three: Non-experimental or qualitative studies.

- Level Four: Opinions of nationally recognized experts based on research.

- Level Five: Opinions of individual experts based on non-research evidence such as literature reviews, case studies, organizational experiences, and personal experiences.

8. How Can I Assess My Evidence-Based Knowledge In Nursing Practice?

Promoting critical thinking through an evidence-based skills fair intervention

Journal of Research in Innovative Teaching & Learning

ISSN : 2397-7604

Article publication date: 23 November 2020

Issue publication date: 1 April 2022

The lack of critical thinking in new graduates has been a concern to the nursing profession. The purpose of this study was to investigate the effects of an innovative, evidence-based skills fair intervention on nursing students' achievements and perceptions of critical thinking skills development.

Design/methodology/approach

The explanatory sequential mixed-methods design was employed for this study.

The findings indicated participants perceived the intervention as a strategy for developing critical thinking.

Originality/value

The study provides educators helpful information in planning their own teaching practice in educating students.

Keywords: Critical thinking, Evidence-based practice, Skills fair intervention.

Gonzalez, H.C. , Hsiao, E.-L. , Dees, D.C. , Noviello, S.R. and Gerber, B.L. (2022), "Promoting critical thinking through an evidence-based skills fair intervention", Journal of Research in Innovative Teaching & Learning , Vol. 15 No. 1, pp. 41-54. https://doi.org/10.1108/JRIT-08-2020-0041

Emerald Publishing Limited

Copyright © 2020, Heidi C. Gonzalez, E-Ling Hsiao, Dianne C. Dees, Sherri R. Noviello and Brian L. Gerber

Published in Journal of Research in Innovative Teaching & Learning . Published by Emerald Publishing Limited. This article is published under the Creative Commons Attribution (CC BY 4.0) licence. Anyone may reproduce, distribute, translate and create derivative works of this article (for both commercial and non-commercial purposes), subject to full attribution to the original publication and authors. The full terms of this licence may be seen at http://creativecommons.org/licences/by/4.0/legalcode

Introduction

Critical thinking (CT) was defined as “cognitive skills of analyzing, applying standards, discriminating, information seeking, logical reasoning, predicting, and transforming knowledge” (Scheffer and Rubenfeld, 2000, p. 357). Critical thinking is the basis for all professional decision-making (Moore, 2007). The lack of critical thinking in student nurses and new graduates has been a concern to the nursing profession. It negatively affects the quality of service and is directly related to the high error rates in novice nurses that influence patient safety (Arli et al., 2017; Saintsing et al., 2011). It was reported that as many as 88% of novice nurses commit medication errors, with 30% of these errors due to a lack of critical thinking (Ebright et al., 2004). Failure to rescue is another type of error common for novice nurses, reported as high as 37% (Saintsing et al., 2011). The failure to recognize trends or complications promptly or take action to stabilize the patient occurs when health-care providers do not recognize signs and symptoms of the early warnings of distress (Garvey and CNE series, 2015). Internationally, this lack of preparedness and critical thinking contributes to the reported 35–60% attrition rate of new graduate nurses in their first two years of practice (Goodare, 2015). The high attrition rate of new nurses has expensive professional and economic costs of $82,000 or more per nurse and negatively affects patient care (Twibell et al., 2012). Facione and Facione (2013) reported that the failure to utilize critical thinking skills not only interferes with learning but also results in poor decision-making and unclear communication between health-care professionals, which ultimately leads to patient deaths.

Due to the importance of critical thinking, many nursing programs strive to infuse critical thinking into their curriculum to better prepare graduates for the realities of clinical practice that involves ever-changing, complex clinical situations and bridge the gap between education and practice in nursing ( Benner et al. , 2010 ; Kim et al. , 2019 ; Park et al. , 2016 ; Newton and Moore, 2013 ; Nibert, 2011 ). To help develop students' critical thinking skills, nurse educators must change the way they teach nursing, so they can prepare future nurses to be effective communicators, critical thinkers and creative problem solvers ( Rieger et al. , 2015 ). Nursing leaders also need to redefine teaching practice and educational guidelines that drive innovation in undergraduate nursing programs.

Evidence-based practice has been advocated to promote critical thinking and help reduce the research-practice gap (Profetto-McGrath, 2005; Stanley and Dougherty, 2010). Evidence-based practice was defined as “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of the individual patient” (Sackett et al., 1996, p. 71). Skills fair intervention, one type of evidence-based practice, can be used to engage students, promote active learning and develop critical thinking (McCausland and Meyers, 2013; Roberts et al., 2009). Skills fair intervention helps promote consistent teaching of psychomotor skills to novice nurses, which decreased anxiety, clarified expectations for students in the clinical setting and increased students' critical thinking skills (Roberts et al., 2009). The researchers of this study had an opportunity to create an active, innovative skills fair intervention for a baccalaureate nursing program in one southeastern state. This intervention incorporated evidence-based practice rationale with critical thinking prompts using Socratic questioning, evidence-based practice videos to the psychomotor skill rubrics, group work, guided discussions, expert demonstration followed by guided practice and blended learning in an attempt to promote and develop critical thinking in nursing students (Hsu and Hsieh, 2013; Oermann et al., 2011; Roberts et al., 2009). The effects of an innovative skills fair intervention on senior baccalaureate nursing students' achievements and their perceptions of critical thinking development were examined in the study.

Literature review

The ability to use reasoned opinion focusing equally on processes and outcomes over emotions is called critical thinking ( Paul and Elder, 2008 ). Critical thinking skills are desired in almost every discipline and play a major role in decision-making and daily judgments. The roots of critical thinking date back to Socrates 2,500 years ago and can be traced to the ancient philosopher Aristotle ( Paul and Elder, 2012 ). Socrates challenged others by asking inquisitive questions in an attempt to challenge their knowledge. In the 1980s, critical thinking gained nationwide recognition as a behavioral science concept in the educational system ( Robert and Petersen, 2013 ). Many researchers in both education and nursing have attempted to define, measure and teach critical thinking for decades. However, a theoretical definition has yet to be accepted and established by the nursing profession ( Romeo, 2010 ). The terms critical literacy, CT, reflective thinking, systems thinking, clinical judgment and clinical reasoning are used synonymously in the reviewed literature ( Clarke and Whitney, 2009 ; Dykstra, 2008 ; Jones, 2010 ; Swing, 2014 ; Turner, 2005 ).

Watson and Glaser (1980) viewed critical thinking not only as cognitive skills but also as a combination of skills, knowledge and attitudes. Paul (1993), the founder of the Foundation for Critical Thinking, offered several definitions of critical thinking and identified three essential components of critical thinking: elements of thought, intellectual standards and affective traits. Brunt (2005) stated critical thinking is a process of being practical and considered it to be “the process of purposeful thinking and reflective reasoning where practitioners examine ideas, assumptions, principles, conclusions, beliefs, and actions in the contexts of nursing practice” (p. 61). In an updated definition, Ennis (2011) described critical thinking as “reasonable reflective thinking focused on deciding what to believe or do” (para. 1).

The most comprehensive attempt to define critical thinking was under the direction of Facione and sponsored by the American Philosophical Association (Scheffer and Rubenfeld, 2000). Facione (1990) surveyed 53 experts from the arts and sciences using the Delphi method to define critical thinking as a “purposeful, self-regulatory judgment which results in interpretation, analysis, evaluation, and inference, as well as an explanation of the evidential, conceptual, methodological, criteriological, or contextual considerations upon which judgment is based” (p. 2).

To come to a consensus definition for critical thinking, Scheffer and Rubenfeld (2000) also conducted a Delphi study. Their study consisted of an international panel of nurses who completed five rounds of sequenced questions to arrive at a consensus definition. Critical thinking was defined as “habits of mind” and “cognitive skills.” The elements of habits of mind included “confidence, contextual perspective, creativity, flexibility, inquisitiveness, intellectual integrity, intuition, open-mindedness, perseverance, and reflection” (Scheffer and Rubenfeld, 2000, p. 352). The elements of cognitive skills were recognized as “analyzing, applying standards, discriminating, information seeking, logical reasoning, predicting, and transforming knowledge” (Scheffer and Rubenfeld, 2000, p. 352). In addition, Ignatavicius (2001) defined the development of critical thinking as a long-term process that must be practiced, nurtured and reinforced over time. Ignatavicius believed that a critical thinker required six cognitive skills: interpretation, analysis, evaluation, inference, explanation and self-regulation (Chun-Chih et al., 2015). According to Ignatavicius (2001), the development of critical thinking is difficult to measure or describe because it is a formative rather than summative process.

Fero et al. (2009) noted that patient safety might be compromised if a nurse cannot provide clinically competent care due to a lack of critical thinking. The Institute of Medicine (2001) recommended five health care competencies: patient-centered care, interdisciplinary team care, evidence-based practice, informatics and quality improvement. Understanding the development and attainment of critical thinking is the key for gaining these future competencies (Scheffer and Rubenfeld, 2000). The development of a strong scientific foundation for nursing practice depends on habits such as contextual perspective, inquisitiveness, creativity, analysis and reasoning skills. Therefore, how these critical thinking habits are developed in nursing students needs to be explored through additional research (Fero et al., 2009). Although the National League for Nursing has listed critical thinking as an accreditation outcome criterion for baccalaureate programs since the 1980s, very little improvement has been observed in practice (McMullen and McMullen, 2009). James (2013) reported the number of patient harm incidents associated with hospital care is much higher than previously thought. James' study indicated that between 210,000 and 440,000 patients each year go to the hospital for care and end up suffering some preventable harm that contributes to their death. The preventable errors in James' study are attributed to sources other than nursing care as well, but having a nurse in place who can advocate and think critically for patients will make a positive impact on improving patient safety (James, 2013; Robert and Petersen, 2013).

Adopting teaching practices that promote critical thinking is a crucial component of nursing education. Research by Nadelson and Nadelson (2014) suggested evidence-based practice is best learned when integrated into multiple areas of the curriculum. Evidence-based practice developed its roots through evidence-based medicine, and the philosophical origins extend back to the mid-19th century (Longton, 2014). Florence Nightingale, the pioneer of modern nursing, used evidence-based practice during the Crimean War when she recognized a connection between poor sanitary conditions and rising mortality rates of wounded soldiers (Rahman and Applebaum, 2011). In professional nursing practice today, a commonly used definition of evidence-based practice is derived from Dr. David Sackett: the conscientious, explicit and judicious use of current best evidence in making decisions about the care of the individual patient (Sackett et al., 1996, p. 71). For patient safety, it is imperative that professional nurses remain inquisitive and ask whether the care provided is based on available evidence. One of the core beliefs of the American Nephrology Nurses' Association's (2019) 2019–2020 Strategic Plan is “ANNA must support research to develop evidence-based practice, as well as to advance nursing science, and that as individual members, we must support, participate in, and apply evidence-based research that advances our own skills, as well as nursing science” (p. 1). Longton (2014) reported the lack of evidence-based practice in nursing resulted in negative outcomes for patients. By contrast, when evidence-based practice was implemented, changes in policies and procedures occurred that resulted in decreased reports of patient harm and associated health-care costs.
The Institute of Medicine (2011) recommendations included nurses being leaders in the transformation of the health-care system and achieving higher levels of education that will provide the ability to critically analyze data to improve the quality of care for patients. Student nurses must be taught to connect and integrate critical thinking and evidence-based practice throughout their program of study and continue that practice throughout their careers.

One type of evidence-based practice that can be used to engage students, promote active learning and develop critical thinking is skills fair intervention (McCausland and Meyers, 2013; Roberts et al., 2009). Skills fair intervention promoted a consistent teaching approach of the psychomotor skills to the novice nurse that decreased anxiety, gave clarity of expectations to the students in the clinical setting and increased students' critical thinking skills (Roberts et al., 2009). The skills fair intervention used in this study is a teaching strategy that incorporated critical thinking prompts, Socratic questioning, group work, guided discussions, return demonstrations and blended learning in an attempt to develop critical thinking in nursing students (Hsu and Hsieh, 2013; Roberts et al., 2009). It melded evidence-based practice with simulated critical thinking opportunities while students practiced essential psychomotor skills.

Research methodology

Context – skills fair intervention.

According to Roberts et al. (2009), psychomotor skills decline over time, even among licensed, experienced professionals: decline can occur within as little as two weeks, and a skill may need to be relearned after two months without performing it. When applying this concept to student nurses for whom each skill is new, it is no wonder that their competency is diminished after a summer break from nursing school. This skills fair intervention was a one-day event to assist baccalaureate students who had taken the summer off from their studies in nursing, and all faculty participated in operating the stations. It incorporated evidence-based practice rationale with critical thinking prompts using Socratic questioning, evidence-based practice videos accompanying the psychomotor skill rubrics, group work, guided discussions, expert demonstration followed by guided practice and blended learning in an attempt to promote and develop critical thinking in baccalaureate students.

Students were scheduled and placed randomly into eight teams named for attributes of critical thinking as described by Wittmann-Price (2013): Team A – Perseverance, Team B – Flexibility, Team C – Confidence, Team D – Creativity, Team E – Inquisitiveness, Team F – Reflection, Team G – Analyzing and Team H – Intuition. The students rotated every 20 minutes through eight stations: Medication Administration: Intramuscular and Subcutaneous Injections, Initiating Intravenous Therapy, a ten-minute Focused Physical Assessment, Foley Catheter Insertion, Nasogastric Intubation, Skin Assessment/Braden Score and Restraints, Vital Signs and a Safety Station. When the students completed all eight stations, they went to the “Check-Out” booth to complete a simple evaluation to determine their perceptions of the effectiveness of the innovative intervention. When the evaluations were complete, each of the eight critical thinking attribute teams placed their index cards into a hat, and a student won a small prize. All Junior 2, Senior 1 and Senior 2 students were required to attend the Skills Fair. The Skills Fair Team strove to make the event as festive as possible, engaging nursing students with balloons, candy, tri-boards, signs and fun pre- and post-activities. The Skills Fair rubrics, scheduling and instructions were shared electronically with students and faculty before the skills fair intervention to ensure adequate preparation and continuous resource availability as students move forward into their future clinical settings.
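For readers who wish to replicate the logistics, the team assignment and station rotation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure the Skills Fair Team actually used: the source does not report the rotation order or how randomization was carried out, so the cyclic rotation, the shuffle-based assignment, the function names and the student identifiers are all hypothetical.

```python
import random

# Team and station names are taken from the text; everything else is assumed.
TEAMS = [
    "A (Perseverance)", "B (Flexibility)", "C (Confidence)", "D (Creativity)",
    "E (Inquisitiveness)", "F (Reflection)", "G (Analyzing)", "H (Intuition)",
]
STATIONS = [
    "Medication Administration: IM and Subcutaneous Injections",
    "Initiating Intravenous Therapy",
    "Focused Physical Assessment",
    "Foley Catheter Insertion",
    "Nasogastric Intubation",
    "Skin Assessment/Braden Score and Restraints",
    "Vital Signs",
    "Safety Station",
]


def assign_teams(student_ids, teams, seed=None):
    """Shuffle students and deal them into teams round-robin (assumed method)."""
    shuffled = list(student_ids)
    random.Random(seed).shuffle(shuffled)
    assignment = {team: [] for team in teams}
    for index, student in enumerate(shuffled):
        assignment[teams[index % len(teams)]].append(student)
    return assignment


def rotation_schedule(teams, stations, slot_minutes=20):
    """Return {team: [(start_minute, station), ...]} using a cyclic rotation.

    Team i starts at station i and moves one station forward each slot, so no
    two teams share a station and every team visits every station once.
    """
    if len(teams) != len(stations):
        raise ValueError("cyclic rotation needs as many teams as stations")
    n = len(stations)
    return {
        team: [(slot * slot_minutes, stations[(i + slot) % n]) for slot in range(n)]
        for i, team in enumerate(teams)
    }


if __name__ == "__main__":
    # Hypothetical identifiers, used only to show the call pattern.
    teams = assign_teams([f"student-{k}" for k in range(1, 41)], TEAMS, seed=1)
    schedule = rotation_schedule(TEAMS, STATIONS)
    for start, station in schedule[TEAMS[0]]:
        print(f"Team {TEAMS[0]}: minute {start:3d} -> {station}")
```

With eight 20-minute slots, one full rotation under this sketch occupies 160 minutes of station time, excluding check-out.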

Research design

Institutional review board (IRB) approval was obtained from XXX University to conduct this study and protect human subject rights. The explanatory sequential mixed-methods design was employed for this study. The design was chosen to identify what effects a skills fair intervention had on senior baccalaureate nursing students' achievements on the Kaplan Critical Thinking Integrated Test (KCTIT) and then follow up with individual interviews to explore those test results in more depth. In total, 52 senior nursing students completed the KCTIT; 30 of them participated in the skills fair intervention and 22 of them did not participate. The KCTIT is a computerized 85-item exam in which 85 equates to 100%, making each question worth one point. It has high reliability and validity (Kaplan Nursing, 2012; Swing, 2014). The reliability value of the KCTIT ranged from 0.72 to 0.89. A t-test was used to analyze the test results.
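The scoring rule and the group comparison described above can be illustrated with a short sketch. It assumes an independent-samples Student's t-test with pooled variance, since the text states only that "a t-test" was used; the function names are hypothetical, and the scores in the example are placeholders for illustration, not data from this study.

```python
from math import sqrt
from statistics import mean, variance

TOTAL_ITEMS = 85  # the KCTIT has 85 one-point items, so 85 correct equals 100%


def percent_score(raw_correct, total_items=TOTAL_ITEMS):
    """Convert a raw count of correct answers to a percentage."""
    return 100.0 * raw_correct / total_items


def pooled_t_statistic(group_a, group_b):
    """Return (t, degrees_of_freedom) for two independent samples.

    Assumes equal population variances (classic Student's t-test); the source
    does not say which t-test variant the authors ran.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("scores have no variability; t is undefined")
    return (mean(group_a) - mean(group_b)) / standard_error, df


if __name__ == "__main__":
    # Placeholder raw scores, NOT study data.
    attended = [62, 58, 70, 66, 61]
    did_not_attend = [55, 60, 57, 52, 59]
    t_value, df = pooled_t_statistic(attended, did_not_attend)
    print(f"t = {t_value:.3f}, df = {df}")
    print(f"A raw score of 68 corresponds to {percent_score(68):.1f}%")
```

For the group sizes reported here (30 participants and 22 non-participants), this form of the test has 30 + 22 − 2 = 50 degrees of freedom.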

A total of 11 participants, six high achievers and five low achievers on the KCTIT, were purposefully selected for open-ended one-on-one interviews. Each interview was conducted individually and lasted for about 60 minutes. An open-ended interview protocol was used to guide the flow of data collection. The interviewees' ages ranged from 21 to 30 years, with an average of 24 years. One of the 11 interviewees was male. Among them, seven were White, three were Black and one was Indian American. The data collected were used to answer the following research questions: (1) What was the difference in achievements on the KCTIT between senior baccalaureate nursing students who participated in the skills fair intervention and students who did not participate? (2) What were the senior baccalaureate nursing students' perceptions of internal and external factors impacting the development of critical thinking skills during the skills fair intervention? and (3) What were the senior baccalaureate nursing students' perceptions of the skills fair intervention as a critical thinking developmental strategy?