- IEEE Brain Career Center

- IEEE Brain Community

Podcasts Listen to industry and research specialists discuss cutting-edge neurotechnology and associated career paths.

Webinars Learn from top subject matter experts in brain research and neurotechnology.

eLearning Modules Dive deep with subject experts into key brain-related topic areas.

Video Series Access conversations with the industry's best of the best.

Presentations Discover more about the future of neurotechnology.

BrainInsight Featuring news and forward-looking commentary on neurotechnology research.

IEEE Neuroethics Framework Examining the ethical, legal, social, and cultural issues that arise with development and use of neurotechnologies.

IEEE Brain Talks Highlighting Q&As with brain experts and industry leaders.

Research & White Papers Identifying key challenges and advances required to successfully develop next generation neurotechnologies.

Brain Topics Learn more about the brain and neurotechnology research.

Standards Consider guidelines for neurotechnology development and use.

TED Talks Explore ground-breaking ideas in brain and neurotechnology development.

Career Center Find information on brain-related careers.

In by Adriel Carridice September 10, 2024

The 2025 IEEE International Symposium on Biomedical Imaging (ISBI) Call for Papers is now open! ISBI 2025 will be held in Houston, Texas, United States, from 14-17 April 2024.

This vibrant setting provides unparalleled opportunities to bridge the gap between cutting-edge biomedical imaging technologies and frontline clinicians, ultimately enhancing patient care.

ISBI 2025 is a scientific conference dedicated to the mathematical, algorithmic, and computational aspects of biological and biomedical imaging, across all scales of observation. It fosters knowledge transfer among different imaging communities, including technological, clinical and industrial communities, and contributes to an integrative approach to biomedical imaging.

| . To encourage attendance by a broader audience of imaging scientists and clinical professionals, ISBI 2024 will continue to have a second track featuring posters selected from 1-page abstract submissions without subsequent archival publication. 1-page abstracts will not be published in IEEE . High-quality papers are solicited containing original contributions in the topics of interest.

Submit your paper by 11 October 2024 to be considered. Learn more about the submission requirements and guidelines . |

To register for this event please visit the following URL: https://biomedicalimaging.org/2025/ →

Date And Time

Event types, event category, share with friends.

Over the last decade, the field of neuroscience has seen great advancements. Multiple efforts, both public and private, are underway to develop new tools to deepen our understanding of the brain and to create novel technologies that can record, decode, and sense brain signals as well as stimulate, modify, and augment brain function with improved efficacy and safety.

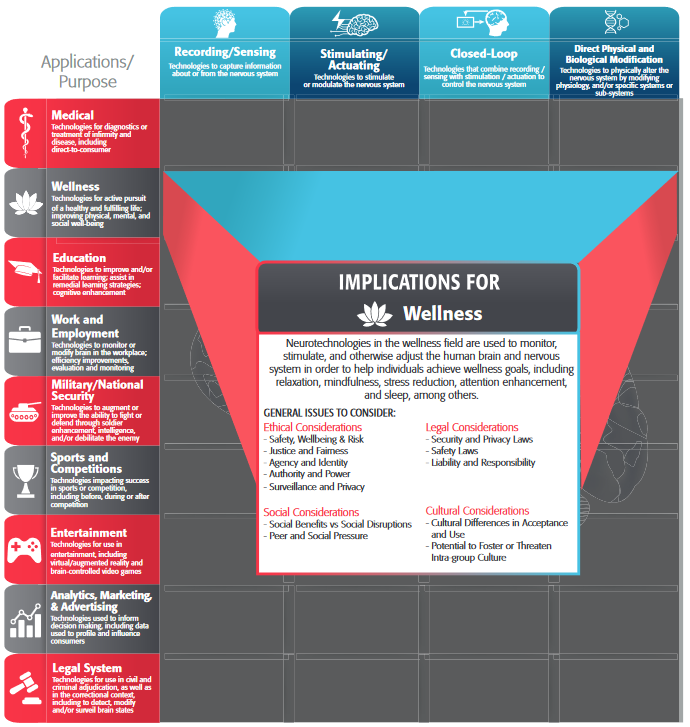

Although current research into and early deployment of neurotechnologies has predominantly focused on medical and therapeutic uses, there are already examples pointing to the push for the commercialization of these technologies for other applications, such as wellness, education, or gaming. As part of our effort to support the neuroengineering community, the IEEE Brain Neuroethics Subcommittee is developing a neuroethical framework for evaluating the ethical, legal, social, and cultural issues that may arise with the deployment of such neurotechnologies. The IEEE Brain neuroethical framework is organized as a matrix of specific types of contemporary neurotechnologies and their current and potential applications.

In this framework, we explore the ethical, legal, social, and cultural issues (ELSCI) that are generated by different types of neurotechnologies when used in specific applications. Key areas identified for potential neurotechnology implementation include medicine, wellness, education, work and employment, military and national security, sports and competitions, entertainment, the legal system, as well as marketing and advertising.

We recognize that neurotechnologies are constantly changing, both in terms of the translational pathway and the scope of applications for which they are used. A given neurotechnology might not flourish for a given application but may be used in ways not originally intended. Similarly, the ELSCI of a given device might change based on the particular social context and culture at hand. Accordingly, this framework is intended to serve as a living document, such that the themes and principles only capture a particular moment in time and will need to be revised as neuroscience, neurotechnologies, and their uses evolve. Furthermore, it is intended to facilitate further discussion by inviting input and new perspectives from a wide range of individuals with an interest in neurotechnologies.

While the focus is primarily on current technologies, we discuss potential risks and benefits of technologies for which only limited data is available. Our hope is for the proliferation of research in this field, and we look forward to issuing supplementary resources. Finally, while we acknowledge that there are different ways in which neurotechnologies can be conceptualized, here we focus on neurotechnologies as devices or physical modifications that interface with the human body, supplement pharmaceutical interventions, or that integrate with pharmaceutical agents. We focus on those interventions that use electricity, magnetic pulses, light, or other non-pharmacological agents to bring about their goal. In some cases, these techniques may incorporate genetic modification to the target tissue; however, pure gene therapies that do not involve an associated electronic device are outside the scope of this document.

Each application begins by defining the use case. Next, it identifies and describes existing key examples of the use of neurotechnology in the relevant application area as well as both near-term and long-term applications and the technologies that will enable them. After examining the ethical, legal, social, and cultural considerations for neurotechnologies in that given application, we highlight some examples of regulatory considerations, relevant standards, and a few case studies.

The documentation that supports this framework is the result of ongoing collaboration and dialogue among teams of engineers, scientists, clinicians, ethicists, sociologists, lawyers, and other stakeholders. This document has set the foundation for the ongoing development of socio-technical standards with a focus on neurotechnology (IEEE SA P7700) for engineers, researchers, applied scientists, practitioners, and neurotechnology companies that will help ensure the responsible development and use of new neurotechnologies. This framework will also be of interest to a wide range of audiences and stakeholders interested in neuroethics and the ethical, legal, social, and cultural implications (ELSCI) of these emerging technologies.

IEEE TRANSACTIONS ON MEDICAL IMAGING (TMI) encourages the submission of manuscripts on imaging of body structure, morphology and function, including cell and molecular imaging and all forms of microscopy. The journal publishes original contributions on medical imaging achieved by modalities including ultrasound, x-rays, magnetic resonance, radionuclides, microwaves, and optical methods. Contributions describing novel acquisition techniques, medical image processing and analysis, visualization and performance, pattern recognition, machine learning, and related methods are encouraged. Studies involving highly technical perspectives are most welcome.

The focus of the journal is on unifying the sciences of medicine, biology, and imaging. It emphasizes the common ground where instrumentation, hardware, software, mathematics, physics, biology, and medicine interact through new analysis methods. Strong application papers that describe novel methods are particularly encouraged. Papers describing important applications based on medically adopted and/or established methods without significant innovation in methodology will be directed to other journals.

A Publication of

Sponsor societies:.

Home / Publications / IEEE Photonics Journal

IEEE Photonics Journal

The society’s open access journal providing rapid publication of top-quality peer-reviewed papers at the forefront of photonics research..

IEEE Photonics Journal is an online-only rapid publication archival journal of top quality research at the forefront of photonics. Photonics integrates quantum electronics and optics to accelerate progress in the generation of novel photon sources and in their utilization in emerging applications at the micro and nano scales spanning from the far-infrared/THz to the x-ray region of the electromagnetic spectrum.

Time to Publication

Impact Factor

What's Popular

- Reconfigurable Integrated Photonic Unitary Neural Networks With Phase Encoding Enabled by In-Situ Training Source: IEEE Photonics Journal - popular articles Published on 2024-09-03

- High-Quality and Enhanced-Resolution Single-Pixel Imaging Based on Spiral Line Array Laser Source Source: IEEE Photonics Journal - popular articles Published on 2024-08-30

- Experimental Investigation of Si/SnOx Heterojunction for Its Tunable Optoelectronic Properties Source: IEEE Photonics Journal - popular articles Published on 2024-08-30

- Design and Demonstration of MOCVD-Grown p-Type AlxGa1-xN/GaN Quantum Well Infrared Photodetector Source: IEEE Photonics Journal - popular articles Published on 2024-08-29

- Design and Optimization of InAs Waveguide- Integrated Photodetectors on Silicon via Heteroepitaxial Integration for Mid- Infrared Silicon Photonics Source: IEEE Photonics Journal - popular articles Published on 2024-08-27

See all articles for this month >

OPEN CALLS FOR PAPERS

Information on current calls for papers for IEEE Photonics Society journals.

SPECIAL ISSUES

Further information on selected and published IEEE Photonics Society special issues.

Description

Technical areas, information for authors, editorial board, publication office, open access.

IEEE Photonics Journal is an open access, online-only rapid publication archival journal of top-quality research at the forefront of photonics. Contributions addressing issues ranging from fundamental understanding to emerging technologies and applications are within the scope of the Journal.

IEEE Photonics Journal is published online only. This platform offers capabilities to enhance published articles; all articles are published in color. Authors have the opportunity to submit supplemental material which may include but is not limited to: multimedia presentations, simulations, webinars, etc. Authors can also store their data in IEEE DataPort, and receive a DOI for their dataset https://ieee-dataport.org . In their final form, all articles contain a cover page with a “Graphic Abstract.”

The journal offers a thorough review process that is a signature of IEEE Publications. Upon acceptance papers receive a Digital Object Identifier and are published in the Early Access section on IEEE Xplore https://ieeexplore.ieee.org/ . At this stage, papers are fully citable. The final published version of the papers is copy edited by IEEE to ensure higher production quality.

Breakthroughs in the generation of light and its control and utilization have given rise to the field of Photonics; a rapidly expanding area of science and technology with major technological and economic impacts. Photonics integrates quantum electronics and optics to accelerate progress in the generation of novel photon sources and in their utilization in emerging applications at the micro- and nano-scales spanning from the far-infrared/THz to the x-ray region of the electromagnetic spectrum.

We welcome original contributions addressing issues ranging from fundamental understanding to emerging technologies and applications:

- Photon sources from far infrared to x-rays

- Photonics materials and engineered photonic structures

- Integrated optics and optoelectronic

- Ultrafast, attosecond, high field and short wavelength photonics

- Biophotonics including DNA photonics

- Nano-photonics

- Magneto-photonics

- Fundamentals of light propagation and interaction; nonlinear effects

- Optical data storage

- Fiber optics and optical communications devices, systems, and technologies

- Solar Cells

- Micro Opto Electro Mechanical Systems (MOEMS)

- Microwave photonics

- Optical sensors

Area 1: Optical Networks and Systems, Senior Editor: Ben Puttnam, Junior Editor: Andrea Sgambelluri

Optical core, metro, access, and data center networks; fiber optics links; free-space communications; underwater communications; optical cryptography.

Area 2: Fiber Optics Devices and Subsystems, Senior Editor: Fan Zhang, Junior Editor: Yang Du

Optical sources, devices, and subsystems for fiber communications; multimode and multicore fibers; optical frequency combs; amplifiers; multiplexers; interconnects; modulators; switches.

Area 3: Light Sources , Senior Editor: Paul Crump, Junior Editor: Xin Wang

Lasers; coherent optical sources; LED; OLED; QLED; lightning; incoherent optical sources; semiconductor lasers; visual perception.

Area 4: Detection, Sensing, and Energy, Senior Editor: Young Min Song, Junior Editor: Zunaid Omair

Optical detectors; sensors; solar cells; display technology; photovoltaics; thermophotovoltaics; vision; colors; visual optics; environmental optics; photonics measurements; energy optics (solar concentrators, daylighting design, solar fuels); measurement for industrial inspection.

Area 5: Integrated Systems, Circuits and Devices: Design, Fabrication and Materials, Senior Editor: Sylwester Latkowski, Junior Editor,

Integrated photonics systems; waveguides; integrated photonic devices; ring resonators; filters; multiplexers; liquid crystals; photonics manufacturing.

Area 6: Plasmonics and Metamaterials , Senior Editor: Jacob Khurgin, Junior Editor: Haifeng Hu

Micro photonics; nanophotonics; metamaterials; plasmonics; mid-Infrared and THz photonics; acoustic metamaterials; optomechanics; 2D material plasmonics and metasurfaces; nanowires; quantum dots; micro and nanoantennas; photonic bandgap structures.

Area 7: Biophotonics and Medical Optics, Senior Editor: Qiyin Fang

Biomedical optics, spectroscopy and microscopy; diffuse tomography; tissue imaging; nanoscopy; optical coherent tomography; bioimaging; optical biophysics; photophysics; photochemistry; biosensors; optical manipulation and molecular probes, imaging and drug delivery; photonics and the brain.

Area 8: Computational Photonics, Senior Editor: Jose Azana, Junior Editor: Maria del Rosario Fernandez Ruiz

Fourier optics; statistical optics; coherence; signal and image processing; microwave photonics; electromagnetics; artificial vision; lidar; computational imaging; diffractive optics.

Area 9: Propagation, Imaging, and Spectroscopy, Senior Editor : Stefan Stanciu , Junior Editor: Roxana Totu

Microscopy (diffraction-limited and super-resolution techniques); spectroscopy (UV/VIS, infrared, THz); nanoscopy; adaptive optics; holography; scattering; diffraction; gratings; physical optics; diffuse optics; polarization, luminescence, fluorescence, vibrational, nonlinear, photoacoustic, plasmonic and multimodal imaging; image processing and analysis (restoration, classification, and augmentation); methods for inspection, characterization, and imaging; photonics for arts, architecture, and archaeology.

Area 10: Quantum Photonics, Senior Editor: Niels Gregersen, Junior Editor: Jun Liu

Quantum sources and detection; single-photon emission and detection; entanglement; integrated quantum optics; quantum cryptography; quantum computation; quantum simulation.

Area 11: Nonlinear Photonics and Novel Optical Phenomena, Senior Editor: Michelle Sander, Junior Editor: Huanyu Song

Nonlinear photonics and phenomena; Terahertz; ultrahigh field and ultrafast photonics; nonlinear pulse propagation and interaction; high power systems; X-rays and plasma; attosecond science; high precision metrology and frequency comb technology; magnetophotonics; acoustophotonics; photoacoustic effects .

Area: 12 Optical Data Science and Machine Intelligence in Photonics , Senior Editor: Salah Obayya, Junior Editor: Jingxi Li

Machine learning-based solutions to inverse problems in optics; machine learning for life sciences imaging and microscopy; inverse design; materials for optical neural networks; photonic reservoir computing; photonic hardware accelerators; co-design of photonic systems and downstream algorithms; machine learning for ultrafast optics, for photonic material discovery and for optical storage.

Starting July 2021, papers are published in the IEEE two-column format. A template is available to guide Authors in the preparation of the manuscript and in estimating the total number of pages. Click here for the IEEE Template Selector .

Manuscripts are submitted in *PDF or in Microsoft Word form for review, and in LaTex for later processing by IEEE publications. Authors can submit supplemental multimedia files, such as animation, movies, data sets, sound files, and other forms of enhanced, multi-media content. Authors are encouraged to use high-quality color graphics. In addition, Authors can store their data in IEEE DataPort https://ieee-dataport.org.

The Journal uses a ‘Graphic Abstract’ as the cover page, and Authors are required to submit one piece of artwork or identify a figure in the paper that best describes the results of their work.

The use of artificial intelligence (AI)–generated text in an article shall be disclosed in the acknowledgments section of any paper submitted to an IEEE Conference or Periodical. The sections of the paper that use AI-generated text shall have a citation to the AI system used to generate the text.

IEEE Tools for Authors offers a reference validation tool to check the format and completeness of references. Analyze your article’s LaTeX files prior to submission to avoid delays in publishing. The IEEE LaTeX Analyzer will identify potential setbacks such as incomplete files or different versions of LaTeX. The use of these tools simplifies the copy-editing process which in turn reflects into a faster time-to-publication.

English language editing services can help refine the language of your article and reduce the risk of rejection without review. IEEE Authors are eligible for discounts at several language editing services; visit IEEE Author Center to learn more. Please note these services are fee-based and do not guarantee acceptance.

All IEEE Journals require an Open Researcher and Contributor ID (ORCID) for all Authors. ORCID is a persistent unique identifier for researchers and functions similarly to an article’s Digital Object Identifier (DOI). ORCIDs enable accurate attribution and improved discoverability of an Author’s published work. Researchers can sign up for an ORCID for free via an easy registration process on orcid.org . Learn more at http://orcid.org/content/about-orcid or in a video at https://vimeo.com/97150912. Authors who do not have an ORCID in their ScholarOne user account will be prompted to provide one during submission.

Author submissions are done through the IEEE Author Portal. Click here to the IEEE Author Portal .

The login and password for IEEE Photonics Technology Letters, IEEE Journal of Quantum Electronics, IEEE Journal of Selected Topics in Quantum Electronics, IEEE/Optic Publishing Group Journal of Light Wave Technology, or IEEE Journal of Display Technologies will also work on the IEEE Photonics Journal IEEE Author Portal site.

Submissions are reviewed by the Editorial Office for completeness and language proficiency. Submissions that are deficient will be sent back to the Authors. Articles are screened for plagiarism before being sent for review.

During the submission process, Authors select one of the 12 Technical Areas that best identifies the subject of their paper, and the manuscript is assigned to the corresponding Senior Editor (SE).

Authors are encouraged to suggest an Associate Editor (AE) to handle the review process. The Editor in Chief (EiC) will consider this suggestion, however, he/she reserves the option to use other AEs based on their loads. The file of the AE’s names and their area of expertise is available in the IEEE Author Portal https://ieee.atyponrex.com/journal/pj-ieee in the ‘Instructions and Forms’ tab.

Upon submission, your manuscript will be checked for formal template compliance before the Senior Editor examines the paper for scope compliance, language proficiency, as well as basic technical content and novelty. Out-of-scope papers, as well as papers of insufficient technical content or quality, may be immediately rejected upon consultation within the Editorial Board. Additional information on the journal scope and topic categories can be found here https://ieeephotonics.org/publications/photonics-journal/ .

After passing these initial editorial steps, the Senior Editor assigns your manuscript to an Associate Editor who is an expert in the respective paper’s topic area. Authors also have the opportunity to suggest a preferred Associate Editor (or to exclude certain Associate Editors as “non-preferred”) upon submission. We will always honor non-preferred Associate Editor selections if these are based on clear precedence that could lead to a potentially biased review process. (The mere fact that an Associate Editor may also be a competitor working in the exact same field as your paper is not a reason for exclusion.) We will try to honor preferred Associate Editor choices, but only if your preferences make technical sense and if the current Associate Editor workload permits the assignment.

The Associate Editor selects a minimum of two reviewers who are experts in the field of your paper. Authors can track the status of their submission at any time through their Author Portal . Please note that all technical work performed in this paper handling process, including all work performed by the Editor-in-Chief, the Senior Editors, the Associate Editors, and the Reviewers, is based on volunteers. While we constantly strive to keep reviewing times to a minimum, we place strong emphasis on technical quality. The average turn-around time (from submission to decision) is currently about 77 days.

PJ allows for one revision cycle. Should your manuscript require more than one revision, it may be rejected, but you are encouraged to resubmit so you can fully address all reviewer concerns. Once accepted, your paper will be placed on-line in the queue for the journal within 2-3 days. At that point it can be fully referenced using the digital object identifier (DOI), even if it hasn’t yet appeared in a printed issue. The articles in this journal are peer reviewed in accordance with the requirements set forth in the IEEE Publication Services and Products Board Operations Manual ( https://pspb.ieee.org/images/files/files/opsmanual.pdf , section 8.2.2). Each published article is reviewed by a minimum of two independent reviewers using a single-anonymous process, where the identities of the reviewers are not known to the authors, but the reviewers know the identities of the authors. Articles will be screened for plagiarism before acceptance.

Appeals must be directed to the EiC, in the form of a letter that clearly explains the rationale for the appeal. The submitted documentation should also include a copy of the rejection letter and of the reviews. The IEEE appeal process calls for the establishment of an independent group of evaluators that will review the Authors’ rebuttal, the decision of the AE, and the Reviewers comments. The process takes on average 6-8 weeks. Once the EiC has reached a decision, it will be communicated to the authors by email.

Publishing within IEEE is governed by Principles of Scholarly publishing developed in 2007 and found at: http://www.ieee.org/web/publications/rights/PublishingPrinciples.html

IEEE Statement on the Appropriate use of Bibliometrics: https://www.ieee.org/publications/rights/bibliometrics-statement.html

IEEE Photonics Journal Editor-in-Chief

Senior editors.

Jose Azana, INRS-EMT, Canada

Paul Crump, Ferdinand-Braun Institute for Hoechstfrequenztechnik, Germany

Qiyin Fang, McMaster University, Canada

Niels Gregersen, Technical University of Denmark, Denmark

Jacob Khurgin, John Hopkins University, USA

Sylwester Latkowski, Eindhoven University of Technology, Netherlands

Salah Obayya, Zewail City of Science and Technology, Egypt

Benjamin Puttnam, Dokuritsu Gyosei Hojin Joho Tsushin Kenkyu Kiko, Japan

Michelle Y. Sander, Boston University, USA

Young Min Song, Gwangju Institute of Science & Technology, Korea

Stefan Stanciu, University of “Politehnica” of Bucharest, Romania

Fan Zhang, Peking University, China

Associate Editors

Nicola Andriolli, CNR IEIIT, Italy

Amir Arbabi, University of Massachusetts Amherst, USA

Marco Bellini, Istituto Nazionale di Ottica Consiglio Nazionale delle Ricerche, Italy

Francesco Bertazzi, Politecnico di Torino, Italy

Paolo Bianchini, Istituto Italiano di Tecnologia, Italy

Thomas Bocklitz, Leibniz Institute of Photonic Technology, Germany

Luigi Bonacina, Universite’ de Geneve, Switzerland

Ahmed Bukhamseen, Saudi Aramco, Saudi Arabia

Chi Wai Chow, National Yang Ming Chiao Tung University, Taiwan

Caterina Ciminelli, Politecnico di Bari, Italy

Giulio Cossu, Scuola Superiore Sant‘Anna, Italy

Fei Ding, University of Southern Denmark, Denmark

Lu Ding, Institute of Materials Research & Engineering, Singapore

Hery S. Djie, Lumentum LLC, USA

Dror Fixler, Bar Ilan University, Israel

Lan Fu, Australian National University, Australia

Songnian Fu, Guangdong University of Technology, China

Fei Gao, ShanghaiTech University, China

Haoshuo Chen, Nokia Bell Labs, USA

Hao Huang, Lumentum Operations LLC, USA

Satoshi Ishii, Busshitsu Zairyo Kenkyu Kiko Kokusai Nanoarchitectonics Kenkyu Kyoten, Japan

Zhensheng Jia, Cable Television Laboratories, USA

Antonio Jurado-Navas, University of Malaga, Spain

Mukesh Kumar, Indian Institute of Technology, India

Jiun-Haw Lee, National Taiwan University, Taiwan

Yan Li, Shanghai Jiao Tong University, China

Peter Liu, State University of NY at Buffalo, USA

Muhammad Qasim Mehmood, Information Technology University (ITU) of the Punjab, Lahore, Pakistan

MD. Jarez Miah, Bangladesh University of Engineering and Technology, Bangladesh

S.M.Abdur Razzak, Rajshahi University of Engineering & Technology, Bangladesh

Anurag Sharma, Indian Institute of Technology Delhi, India

Chao Shen, Fudan University, China

Lei Shi, Fudan University, China

Zachary Smith, University of Science and Technology of China, China

Jingbo Sun, Tsinghua University, China

Eduward Tangdiongga, Eindhoven University of Technology, The Netherlands

Alberto Tibaldi, IEIIT-CNR, Italy

Georgios Veronis, Louisiana State University, USA

Luca Vincetti, University of Modena and Reggio Emilia, Italy

Yating Wan, KAUST, Saudi Arabia

Shang Wang, Stevens Institute of Technology, USA

Hai Xiao, Clemson University, USA

Shumin Xiao, Harbin Institute of Technology, China

He-Xiu Xu, Air Force Engineering University, China

Yu Yao, Arizona State University, USA

Shu-Chi Yeh, University of Rochester Medical Center, USA

Changyuan Yu, Hong Kong Polytechnic University, Hong Kong

Alessandro Zavatta, Consiglio Nazionale delle Ricerche, Italy

Jinwei Zeng, Huazhong University of Science and Technology, China

Junwen Zhang, Fudan University, China

Lin Zhang, Tianjin University, China

Xiaobei Zhang, Shanghai University, China

Chao Zhou, Washington University in Saint Louis, USA

Xian Zhou, University of Science and Technology Beijing and Beijing, China

Xinxing Zhou, Hunan Normal University, China

Yeyu Zhu, Lumentum Operations LLC, USA

Junior Editors

Jing Du, Huazhong University of Science and Technology, China

Xin Wang, Southeast University, China

Zunaid Omair, Standford University, USA

Haifeng Hu, University of Shanghai for Science and Technology, China

Maria Fernandez-Ruiz, Universidad de Alala, Spain

Roxana Totu, University Politehnica of Bucharest, Romania

Jingxi Li, University of California Los Angeles, USA

Andrea Sgambelluri, Scuola Superiore Sant’Anna, Italy

Huanyu Song, SLAC National Accelerator Laboratory, USA

Yang Du, Leibniz Institute of Photonic Technology, Germany

Jun Liu, Huazhong University of Science and Technology

Publications Coordinator

Yvette Charles IEEE Photonics Society 445 Hoes Lane Piscataway, NJ 08854-1331, USA Phone: +1 732 981 3457 Email: [email protected]

Publications Portfolio Manager

Laura A. Lander IEEE Photonics Society 445 Hoes Lane Piscataway, New Jersey 08854, USA Phone: +1 732 465 6479 Email: [email protected]

Open Access Rights Management

Article processing charge (apc): us$1995.

IEEE Photonics Journal is a fully Open Access Journal, compliant with funder mandates, including Plan S.

For papers submitted in 2024, the APC is US$1995 plus applicable local taxes.

- IEEE Members receive a 5% discount.

- IEEE Society Members receive a 20% discount.

Discounts do not apply to undergraduate and graduate students. These discounts cannot be combined.

Photonics Journal has a waiver policy for authors from low-income countries. Corresponding authors from low-income countries (as classified by the World Bank) are eligible for a 100% waiver on APCs. Corresponding authors from lower-middle-income countries are also eligible for a discount on APCs ranging from 25% to 50% based on the GDP of the country of the corresponding author.

author center

Resources and tools to help you write, prepare, and share your research work more effectively.

open access

Special issue in IEEE Transactions on Medical Imaging: Advancements in Foundation Models for Medical Imaging

- February 7, 2024

Foundation models, e.g., ChatGPT/GPT-4/GPT-4V, at the forefront of artificial intelligence (AI) and deep learning, represent a pivotal leap in the domain of computational intelligence. This special issue aims to explore and showcase cutting-edge research in the development and application of foundation models for medical imaging within the field of healthcare. Οriginal and innovative methodological contributions are invited, which address the key challenges in developing, validating and applying foundation models for medical imaging. This is an open call for papers. Stay tuned for more details!

Share this page:

Deep Learning Applications in Medical Image Analysis

The tremendous success of machine learning algorithms at image recognition tasks in recent years intersects with a time of dramatically increased use of electronic medical records and diagnostic imaging. This review introduces the machine learning algorithms as applied to medical image analysis, focusing on convolutional neural networks, and emphasizing clinical aspects of the field. The advantage of machine learning in an era of medical big data is that significant hierarchal relationships within the data can be discovered algorithmically without laborious hand-crafting of features. We cover key research areas and applications of medical image classification, localization, detection, segmentation, and registration. We conclude by discussing research obstacles, emerging trends, and possible future directions.

View this article on IEEE Xplore

At a Glance

- Journal: IEEE Access

- Format: Open Access

- Frequency: Continuous

- Submission to Publication: 4-6 weeks (typical)

- Topics: All topics in IEEE

- Average Acceptance Rate: 27%

- Impact Factor: 3.4

- Model: Binary Peer Review

- Article Processing Charge: US $1,995

Featured Articles

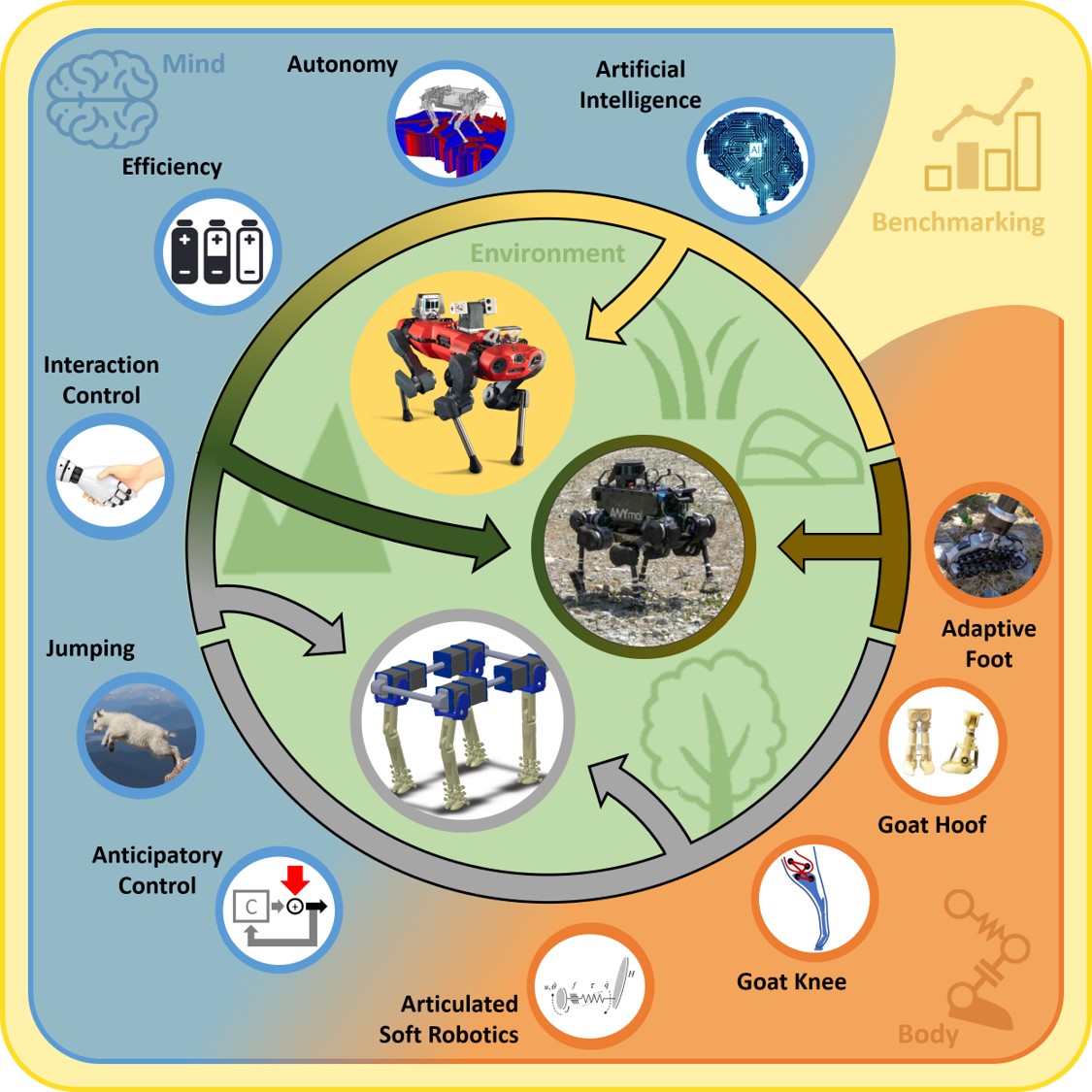

Robotic Monitoring of Habitats: The Natural Intelligence Approach

View in IEEE Xplore

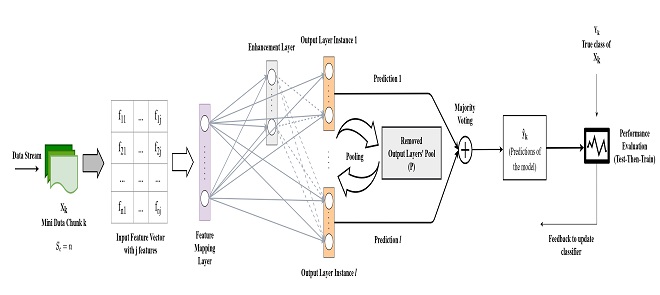

A Broad Ensemble Learning System for Drifting Stream Classification

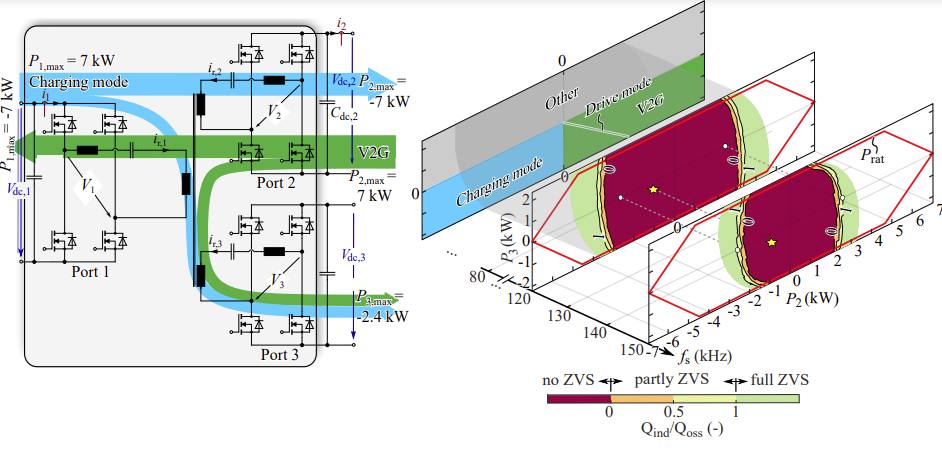

Increasing Light Load Efficiency in Phase-Shifted, Variable Frequency Multiport Series Resonant Converters

Submission guidelines.

© 2024 IEEE - All rights reserved. Use of this website signifies your agreement to the IEEE TERMS AND CONDITIONS.

A not-for-profit organization, IEEE is the world’s largest technical professional organization dedicated to advancing technology for the benefit of humanity.

AWARD RULES:

NO PURCHASE NECESSARY TO ENTER OR WIN. A PURCHASE WILL NOT INCREASE YOUR CHANCES OF WINNING.

These rules apply to the “2024 IEEE Access Best Video Award Part 2″ (the “Award”).

- Sponsor: The Sponsor of the Award is The Institute of Electrical and Electronics Engineers, Incorporated (“IEEE”) on behalf of IEEE Access , 445 Hoes Lane, Piscataway, NJ 08854-4141 USA (“Sponsor”).

- Eligibility: Award is open to residents of the United States of America and other countries, where permitted by local law, who are the age of eighteen (18) and older. Employees of Sponsor, its agents, affiliates and their immediate families are not eligible to enter Award. The Award is subject to all applicable state, local, federal and national laws and regulations. Entrants may be subject to rules imposed by their institution or employer relative to their participation in Awards and should check with their institution or employer for any relevant policies. Void in locations and countries where prohibited by law.

- Agreement to Official Rules : By participating in this Award, entrants agree to abide by the terms and conditions thereof as established by Sponsor. Sponsor reserves the right to alter any of these Official Rules at any time and for any reason. All decisions made by Sponsor concerning the Award including, but not limited to the cancellation of the Award, shall be final and at its sole discretion.

- How to Enter: This Award opens on July 1, 2024 at 12:00 AM ET and all entries must be received by 11:59 PM ET on December 31, 2024 (“Promotional Period”).

Entrant must submit a video with an article submission to IEEE Access . The video submission must clearly be relevant to the submitted manuscript. Only videos that accompany an article that is accepted for publication in IEEE Access will qualify. The video may be simulations, demonstrations, or interviews with other experts, for example. Your video file should not exceed 100 MB.

Entrants can enter the Award during Promotional Period through the following method:

- The IEEE Author Portal : Entrants can upload their video entries while submitting their article through the IEEE Author Portal submission site .

- Review and Complete the Terms and Conditions: After submitting your manuscript and video through the IEEE Author Portal, entrants should then review and sign the Terms and Conditions .

Entrants who have already submitted a manuscript to IEEE Access without a video can still submit a video for inclusion in this Award so long as the video is submitted within 7 days of the article submission date. The video can be submitted via email to the article administrator. All videos must undergo peer review and be accepted along with the article submission. Videos may not be submitted after an article has already been accepted for publication.

The criteria for an article to be accepted for publication in IEEE Access are:

- The article must be original writing that enhances the existing body of knowledge in the given subject area. Original review articles and surveys are acceptable even if new data/concepts are not presented.

- Results reported must not have been submitted or published elsewhere (although expanded versions of conference publications are eligible for submission).

- Experiments, statistics, and other analyses must be performed to a high technical standard and are described in sufficient detail.

- Conclusions must be presented in an appropriate fashion and are supported by the data.

- The article must be written in standard English with correct grammar.

- Appropriate references to related prior published works must be included.

- The article must fall within the scope of IEEE Access

- Must be in compliance with the IEEE PSPB Operations Manual.

- Completion of the required IEEE intellectual property documents for publication.

- At the discretion of the IEEE Access Editor-in-Chief.

- Disqualification: The following items will disqualify a video from being considered a valid submission:

- The video is not original work.

- A video that is not accompanied with an article submission.

- The article and/or video is rejected during the peer review process.

- The article and/or video topic does not fit into the scope of IEEE Access .

- The article and/or do not follow the criteria for publication in IEEE Access .

- Videos posted in a comment on IEEE Xplore .

- Content is off-topic, offensive, obscene, indecent, abusive or threatening to others.

- Infringes the copyright, trademark or other right of any third party.

- Uploads viruses or other contaminating or destructive features.

- Is in violation of any applicable laws or regulations.

- Is not in English.

- Is not provided within the designated submission time.

- Entrant does not agree and sign the Terms and Conditions document.

Entries must be original. Entries that copy other entries, or the intellectual property of anyone other than the Entrant, may be removed by Sponsor and the Entrant may be disqualified. Sponsor reserves the right to remove any entry and disqualify any Entrant if the entry is deemed, in Sponsor’s sole discretion, to be inappropriate.

- Entrant’s Warranty and Authorization to Sponsor: By entering the Award, entrants warrant and represent that the Award Entry has been created and submitted by the Entrant. Entrant certifies that they have the ability to use any image, text, video, or other intellectual property they may upload and that Entrant has obtained all necessary permissions. IEEE shall not indemnify Entrant for any infringement, violation of publicity rights, or other civil or criminal violations. Entrant agrees to hold IEEE harmless for all actions related to the submission of an Entry. Entrants further represent and warrant, if they reside outside of the United States of America, that their participation in this Award and acceptance of a prize will not violate their local laws.

- Intellectual Property Rights: Entrant grants Sponsor an irrevocable, worldwide, royalty free license to use, reproduce, distribute, and display the Entry for any lawful purpose in all media whether now known or hereinafter created. This may include, but is not limited to, the IEEE A ccess website, the IEEE Access YouTube channel, the IEEE Access IEEE TV channel, IEEE Access social media sites (LinkedIn, Facebook, Twitter, IEEE Access Collabratec Community), and the IEEE Access Xplore page. Facebook/Twitter/Microsite usernames will not be used in any promotional and advertising materials without the Entrants’ expressed approval.

- Number of Prizes Available, Prizes, Approximate Retail Value and Odds of winning Prizes: Two (2) promotional prizes of $350 USD Amazon gift cards. One (1) grand prize of a $500 USD Amazon gift card. Prizes will be distributed to the winners after the selection of winners is announced. Odds of winning a prize depend on the number of eligible entries received during the Promotional Period. Only the corresponding author of the submitted manuscript will receive the prize.

The grand prize winner may, at Sponsor’ discretion, have his/her article and video highlighted in media such as the IEEE Access Xplore page and the IEEE Access social media sites.

The prize(s) for the Award are being sponsored by IEEE. No cash in lieu of prize or substitution of prize permitted, except that Sponsor reserves the right to substitute a prize or prize component of equal or greater value in its sole discretion for any reason at time of award. Sponsor shall not be responsible for service obligations or warranty (if any) in relation to the prize(s). Prize may not be transferred prior to award. All other expenses associated with use of the prize, including, but not limited to local, state, or federal taxes on the Prize, are the sole responsibility of the winner. Winner(s) understand that delivery of a prize may be void where prohibited by law and agrees that Sponsor shall have no obligation to substitute an alternate prize when so prohibited. Amazon is not a sponsor or affiliated with this Award.

- Selection of Winners: Promotional prize winners will be selected based on entries received during the Promotional Period. The sponsor will utilize an Editorial Panel to vote on the best video submissions. Editorial Panel members are not eligible to participate in the Award. Entries will be ranked based on three (3) criteria:

- Presentation of Technical Content

- Quality of Video

Upon selecting a winner, the Sponsor will notify the winner via email. All potential winners will be notified via their email provided to the sponsor. Potential winners will have five (5) business days to respond after receiving initial prize notification or the prize may be forfeited and awarded to an alternate winner. Potential winners may be required to sign an affidavit of eligibility, a liability release, and a publicity release. If requested, these documents must be completed, signed, and returned within ten (10) business days from the date of issuance or the prize will be forfeited and may be awarded to an alternate winner. If prize or prize notification is returned as undeliverable or in the event of noncompliance with these Official Rules, prize will be forfeited and may be awarded to an alternate winner.

- General Prize Restrictions: No prize substitutions or transfer of prize permitted, except by the Sponsor. Import/Export taxes, VAT and country taxes on prizes are the sole responsibility of winners. Acceptance of a prize constitutes permission for the Sponsor and its designees to use winner’s name and likeness for advertising, promotional and other purposes in any and all media now and hereafter known without additional compensation unless prohibited by law. Winner acknowledges that neither Sponsor, Award Entities nor their directors, employees, or agents, have made nor are in any manner responsible or liable for any warranty, representation, or guarantee, express or implied, in fact or in law, relative to any prize, including but not limited to its quality, mechanical condition or fitness for a particular purpose. Any and all warranties and/or guarantees on a prize (if any) are subject to the respective manufacturers’ terms therefor, and winners agree to look solely to such manufacturers for any such warranty and/or guarantee.

11.Release, Publicity, and Privacy : By receipt of the Prize and/or, if requested, by signing an affidavit of eligibility and liability/publicity release, the Prize Winner consents to the use of his or her name, likeness, business name and address by Sponsor for advertising and promotional purposes, including but not limited to on Sponsor’s social media pages, without any additional compensation, except where prohibited. No entries will be returned. All entries become the property of Sponsor. The Prize Winner agrees to release and hold harmless Sponsor and its officers, directors, employees, affiliated companies, agents, successors and assigns from and against any claim or cause of action arising out of participation in the Award.

Sponsor assumes no responsibility for computer system, hardware, software or program malfunctions or other errors, failures, delayed computer transactions or network connections that are human or technical in nature, or for damaged, lost, late, illegible or misdirected entries; technical, hardware, software, electronic or telephone failures of any kind; lost or unavailable network connections; fraudulent, incomplete, garbled or delayed computer transmissions whether caused by Sponsor, the users, or by any of the equipment or programming associated with or utilized in this Award; or by any technical or human error that may occur in the processing of submissions or downloading, that may limit, delay or prevent an entrant’s ability to participate in the Award.

Sponsor reserves the right, in its sole discretion, to cancel or suspend this Award and award a prize from entries received up to the time of termination or suspension should virus, bugs or other causes beyond Sponsor’s control, unauthorized human intervention, malfunction, computer problems, phone line or network hardware or software malfunction, which, in the sole opinion of Sponsor, corrupt, compromise or materially affect the administration, fairness, security or proper play of the Award or proper submission of entries. Sponsor is not liable for any loss, injury or damage caused, whether directly or indirectly, in whole or in part, from downloading data or otherwise participating in this Award.

Representations and Warranties Regarding Entries: By submitting an Entry, you represent and warrant that your Entry does not and shall not comprise, contain, or describe, as determined in Sponsor’s sole discretion: (A) false statements or any misrepresentations of your affiliation with a person or entity; (B) personally identifying information about you or any other person; (C) statements or other content that is false, deceptive, misleading, scandalous, indecent, obscene, unlawful, defamatory, libelous, fraudulent, tortious, threatening, harassing, hateful, degrading, intimidating, or racially or ethnically offensive; (D) conduct that could be considered a criminal offense, could give rise to criminal or civil liability, or could violate any law; (E) any advertising, promotion or other solicitation, or any third party brand name or trademark; or (F) any virus, worm, Trojan horse, or other harmful code or component. By submitting an Entry, you represent and warrant that you own the full rights to the Entry and have obtained any and all necessary consents, permissions, approvals and licenses to submit the Entry and comply with all of these Official Rules, and that the submitted Entry is your sole original work, has not been previously published, released or distributed, and does not infringe any third-party rights or violate any laws or regulations.

12.Disputes: EACH ENTRANT AGREES THAT: (1) ANY AND ALL DISPUTES, CLAIMS, AND CAUSES OF ACTION ARISING OUT OF OR IN CONNECTION WITH THIS AWARD, OR ANY PRIZES AWARDED, SHALL BE RESOLVED INDIVIDUALLY, WITHOUT RESORTING TO ANY FORM OF CLASS ACTION, PURSUANT TO ARBITRATION CONDUCTED UNDER THE COMMERCIAL ARBITRATION RULES OF THE AMERICAN ARBITRATION ASSOCIATION THEN IN EFFECT, (2) ANY AND ALL CLAIMS, JUDGMENTS AND AWARDS SHALL BE LIMITED TO ACTUAL OUT-OF-POCKET COSTS INCURRED, INCLUDING COSTS ASSOCIATED WITH ENTERING THIS AWARD, BUT IN NO EVENT ATTORNEYS’ FEES; AND (3) UNDER NO CIRCUMSTANCES WILL ANY ENTRANT BE PERMITTED TO OBTAIN AWARDS FOR, AND ENTRANT HEREBY WAIVES ALL RIGHTS TO CLAIM, PUNITIVE, INCIDENTAL, AND CONSEQUENTIAL DAMAGES, AND ANY OTHER DAMAGES, OTHER THAN FOR ACTUAL OUT-OF-POCKET EXPENSES, AND ANY AND ALL RIGHTS TO HAVE DAMAGES MULTIPLIED OR OTHERWISE INCREASED. ALL ISSUES AND QUESTIONS CONCERNING THE CONSTRUCTION, VALIDITY, INTERPRETATION AND ENFORCEABILITY OF THESE OFFICIAL RULES, OR THE RIGHTS AND OBLIGATIONS OF ENTRANT AND SPONSOR IN CONNECTION WITH THE AWARD, SHALL BE GOVERNED BY, AND CONSTRUED IN ACCORDANCE WITH, THE LAWS OF THE STATE OF NEW JERSEY, WITHOUT GIVING EFFECT TO ANY CHOICE OF LAW OR CONFLICT OF LAW, RULES OR PROVISIONS (WHETHER OF THE STATE OF NEW JERSEY OR ANY OTHER JURISDICTION) THAT WOULD CAUSE THE APPLICATION OF THE LAWS OF ANY JURISDICTION OTHER THAN THE STATE OF NEW JERSEY. SPONSOR IS NOT RESPONSIBLE FOR ANY TYPOGRAPHICAL OR OTHER ERROR IN THE PRINTING OF THE OFFER OR ADMINISTRATION OF THE AWARD OR IN THE ANNOUNCEMENT OF THE PRIZES.

- Limitation of Liability: The Sponsor, Award Entities and their respective parents, affiliates, divisions, licensees, subsidiaries, and advertising and promotion agencies, and each of the foregoing entities’ respective employees, officers, directors, shareholders and agents (the “Released Parties”) are not responsible for incorrect or inaccurate transfer of entry information, human error, technical malfunction, lost/delayed data transmissions, omission, interruption, deletion, defect, line failures of any telephone network, computer equipment, software or any combination thereof, inability to access web sites, damage to a user’s computer system (hardware and/or software) due to participation in this Award or any other problem or error that may occur. By entering, participants agree to release and hold harmless the Released Parties from and against any and all claims, actions and/or liability for injuries, loss or damage of any kind arising from or in connection with participation in and/or liability for injuries, loss or damage of any kind, to person or property, arising from or in connection with participation in and/or entry into this Award, participation is any Award-related activity or use of any prize won. Entry materials that have been tampered with or altered are void. If for any reason this Award is not capable of running as planned, or if this Award or any website associated therewith (or any portion thereof) becomes corrupted or does not allow the proper playing of this Award and processing of entries per these rules, or if infection by computer virus, bugs, tampering, unauthorized intervention, affect the administration, security, fairness, integrity, or proper conduct of this Award, Sponsor reserves the right, at its sole discretion, to disqualify any individual implicated in such action, and/or to cancel, terminate, modify or suspend this Award or any portion thereof, or to amend these rules without notice. In the event of a dispute as to who submitted an online entry, the entry will be deemed submitted by the authorized account holder the email address submitted at the time of entry. “Authorized Account Holder” is defined as the person assigned to an email address by an Internet access provider, online service provider or other organization responsible for assigning email addresses for the domain associated with the email address in question. Any attempt by an entrant or any other individual to deliberately damage any web site or undermine the legitimate operation of the Award is a violation of criminal and civil laws and should such an attempt be made, the Sponsor reserves the right to seek damages and other remedies from any such person to the fullest extent permitted by law. This Award is governed by the laws of the State of New Jersey and all entrants hereby submit to the exclusive jurisdiction of federal or state courts located in the State of New Jersey for the resolution of all claims and disputes. Facebook, LinkedIn, Twitter, G+, YouTube, IEEE Xplore , and IEEE TV are not sponsors nor affiliated with this Award.

- Award Results and Official Rules: To obtain the identity of the prize winner and/or a copy of these Official Rules, send a self-addressed stamped envelope to Kimberly Rybczynski, IEEE, 445 Hoes Lane, Piscataway, NJ 08854-4141 USA.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Bioengineering (Basel)

- PMC10740686

How Artificial Intelligence Is Shaping Medical Imaging Technology: A Survey of Innovations and Applications

Luís pinto-coelho.

1 ISEP—School of Engineering, Polytechnic Institute of Porto, 4200-465 Porto, Portugal; tp.ppi.pesi@cfl

2 INESCTEC, Campus of the Engineering Faculty of the University of Porto, 4200-465 Porto, Portugal

Associated Data

Not applicable.

The integration of artificial intelligence (AI) into medical imaging has guided in an era of transformation in healthcare. This literature review explores the latest innovations and applications of AI in the field, highlighting its profound impact on medical diagnosis and patient care. The innovation segment explores cutting-edge developments in AI, such as deep learning algorithms, convolutional neural networks, and generative adversarial networks, which have significantly improved the accuracy and efficiency of medical image analysis. These innovations have enabled rapid and accurate detection of abnormalities, from identifying tumors during radiological examinations to detecting early signs of eye disease in retinal images. The article also highlights various applications of AI in medical imaging, including radiology, pathology, cardiology, and more. AI-based diagnostic tools not only speed up the interpretation of complex images but also improve early detection of disease, ultimately delivering better outcomes for patients. Additionally, AI-based image processing facilitates personalized treatment plans, thereby optimizing healthcare delivery. This literature review highlights the paradigm shift that AI has brought to medical imaging, highlighting its role in revolutionizing diagnosis and patient care. By combining cutting-edge AI techniques and their practical applications, it is clear that AI will continue shaping the future of healthcare in profound and positive ways.

1. Introduction

Advancements in medical imaging and artificial intelligence (AI) have ushered in a new era of possibilities in the field of healthcare. The fusion of these two domains has revolutionized various aspects of medical practice, ranging from early disease detection and accurate diagnosis to personalized treatment planning and improved patient outcomes.

Medical imaging techniques such as computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET) play a pivotal role in providing clinicians with detailed and comprehensive visual information about the human body. These imaging modalities generate vast amounts of data that require efficient analysis and interpretation, and this is where AI steps in.

AI, particularly deep learning algorithms, has demonstrated remarkable capabilities in extracting valuable insights from medical images [ 1 ]. Deep learning models, trained on large datasets, are capable of recognizing complex patterns and features that may not be readily discernible to the human eye [ 2 , 3 ]. These algorithms can even provide a new perspective about what image features should be valued to support decisions [ 4 ]. One of the key advantages of AI in medical imaging is its ability to enhance the accuracy and efficiency of disease diagnosis [ 1 , 5 ]. Through this process, AI can assist healthcare professionals in detecting abnormalities, identifying specific structures, and predicting disease outcomes [ 5 , 6 ].

By leveraging machine learning algorithms, AI systems can analyze medical images with speed and precision, aiding in the identification of early-stage diseases that may be difficult to detect through traditional methods. This early detection is crucial as it can lead to timely interventions, potentially saving lives and improving treatment outcomes [ 1 , 2 , 3 ].

Furthermore, AI has opened up new possibilities in image segmentation and quantification. By employing sophisticated algorithms, AI can accurately delineate structures of interest within medical images, such as tumors, blood vessels, or cells [ 7 , 8 , 9 ]. This segmentation capability is invaluable in treatment planning, as it enables clinicians to precisely target areas for intervention, optimize surgical procedures, and deliver targeted therapies [ 10 ].

The integration of AI and medical imaging has also facilitated the development of personalized medicine. Through the analysis of medical images and patient data, AI algorithms can generate patient-specific insights, enabling tailored treatment plans that consider individual variations in anatomy, physiology, and disease characteristics. This personalized approach to healthcare enhances treatment efficacy and minimizes the risk of adverse effects, leading to improved patient outcomes and quality of life [ 1 , 11 , 12 ].

Additionally, AI has paved the way for advancements in image-guided interventions and surgical procedures. By combining preoperative imaging data with real-time imaging during surgery, AI algorithms can provide surgeons with augmented visualization, navigation assistance, and decision support. These tools enhance surgical precision, reduce procedural risks, and enable minimally invasive techniques, ultimately improving patient safety and surgical outcomes [ 13 ].

Recently several cutting-edge articles have been published covering a wide variety of topics within the scope of medical imaging and AI. Many of these outstanding advancements are directed to cancer, a major cause of severe disease and mortality. The main contributions and fields will be addressed in the next sections.

2. Methodology

The primary aim of this review is to present a comprehensive overview of the influential artificial intelligence (AI) technological advancements that are shaping the landscape of medical imaging in recent years. The construction of the article dataset followed a two-stage methodology. Initially, to identify the most pertinent AI-supported clinical imaging application, searches were conducted on major scientific article repositories. In July 2023, queries were made on PubMed, IEEE, Scopus, ScienceDirect, Web of Science, and ACM, focusing on the Title and Abstract of articles. Filters for language (English only) and year of publication (2017 and onwards) were applied. Search terms encompassed key machine learning words and expressions (e.g., “machine learning”, “artificial intelligence”, “classification”, “segmentation”) combined with clinical image-related keywords (e.g., “image”, “pixel”, “resolution”, “MRI”, “PET”, “CT”). After article retrieval, duplicates were eliminated. It is also important to mention that preprint articles, such as arXiv, bioRxiv, medRxiv, among others, were also queried as part of the Scopus indexing system. These are major open-access article archives holding highly relevant manuscripts (considering the number of citations and widespread usage) but whose content was not peer reviewed.

In the second stage, the previously identified papers and their references were utilized as seeds to construct connection maps, employing the LitMaps [ 14 ] web tool to identify the most relevant technologies. The Iramuteq software [ 15 ] was also used to generate and explore word and concept networks using some of the included natural language processing tools [ 16 ]. The selection of technologies was based on manual observation of connection maps, with a focus on identifying healthcare-related keyword groups. The use of this methodology implied some ad hoc criteria since the mentioned tools are agnostic to the underlying clinical processes and not always are able to correctly group medical areas. With the described methodology, the ultimate aim was to encompass a broad spectrum of disease handling processes and support activities, emphasizing the most promising technological approaches to date while acknowledging identified limitations. Additionally, emphasis has been given to review articles that were specifically referenced when available for specific domains, as they offer an enhanced overview within a confined area of knowledge. The final article corpus showed a distribution by year of publication as depicted in Figure 1 . It can be observed that 2023 has the highest number of review/survey articles, which can evidence the interest in the area but can also be an indicator of the diversity of involved technologies, demanding for an overview article.

Distribution of the selected articles by year of publication.

3. Technological Innovations

Mathematical models and algorithms stand at the forefront of scientific exploration, serving as powerful tools that enable us to unravel complex phenomena, make predictions, and uncover hidden patterns in vast datasets. These essential components of modern research have not only revolutionized our understanding of the natural world but have also played a pivotal role in driving technological breakthroughs that open up numerous application possibilities across various domains. The synergy between mathematical models and algorithms has not only enhanced our understanding of the world but has also been a driving force behind technological advancements that have transformed our daily lives.

The earliest multilayer perceptron networks, while representing a crucial step in the evolution of neural networks, had notable limitations. One of the primary constraints was their shallow architecture, which consisted of only a few layers, limiting their ability to model complex patterns. Besides the model expansion restrictions imposed by the limited computing power, training these networks with multiple layers was also challenging. In particular, the earliest activation functions used in neural networks, including the sigmoid and hyperbolic tangent (tanh), led to the vanishing gradient problem [ 17 ] as their gradients became exceedingly small as inputs moved away from zero. This issue impeded the efficient propagation of gradients during training, resulting in slow convergence or training failures. Furthermore, the limited output range of these functions and their symmetric nature constrained the network’s ability to represent complex, high-dimensional data. Additionally, the computational complexity of these functions, particularly the exponential calculations, hindered training and inference in large networks. These shortcomings led to the development and widespread adoption of more suitable activation functions, such as the rectified linear unit (ReLU) [ 18 ] and its variants, which successfully addressed these issues and became integral components of modern deep learning architectures [ 19 ]. For these reasons, early multilayer perceptron networks struggled to capture complex patterns in data, making them unsuitable for tasks requiring the modeling of intricate relationships, ultimately leading to the necessity of exploration of more advanced architectures and training techniques.

Improvements in the artificial neurons’ functionality, more advanced architectures, and improved training algorithms supported by graphical computational units (GPU) came to open promising possibilities. The LeNet-5 architecture, developed for the recognition of handwritten digits [ 20 ], is a fundamental milestone for convolutional neural networks (CNNs) [ 21 , 22 ].

CNNs, inspired by the biological operation of animals’ vision system, assume that the input is the representation of image data. Current architectures follow a structured sequence of layers, each with specific functions to process and extract features from the input data [ 23 ]. The journey begins with the input layer, which receives raw image data, typically represented as a grid of pixel values, often with three color channels (red, green, blue) for color images. Following the input layer, the network employs convolutional layers, which are responsible for feature extraction. These layers use convolutional operations (of several types [ 22 ]) to detect local patterns and features in the input data. Early convolutional layers focus on detecting basic features like edges, corners, and textures. After each convolution operation, activation layers with rectified linear unit (ReLU) activation functions are applied to introduce nonlinearity. ReLU units help the network learn more complex patterns and enhance its ability to model the data effectively. Pooling (Subsampling) layers come next, reducing the spatial dimensions of the feature maps while preserving important information. Max pooling and average pooling are common operations that help make the network more robust to variations in scale and position. The sequence of convolutional layers continues, with additional layers stacked to capture increasingly complex and abstract features. These deeper layers are adept at detecting higher-level patterns, shapes, and objects in the data. Similar to the earlier convolutional layers, activation layers with ReLU functions are applied after each convolution operation, maintaining nonlinearity and enhancing feature learning. Pooling (subsampling) layers may be used again, further decreasing the spatial dimensions of the feature maps and retaining essential information. At the end of this sequence, after the network has extracted the most relevant information from the input data, a special set of vectors are obtained, designated by deep features [ 24 ]. These, located deep in the network, distill data into compact, meaningful forms that are highly discriminative. Or, in other words, after the progressive extraction of information, layer after layer, raw input data is refined into more condensed and abstract representations that are imbued with semantic meaning, encapsulating essential characteristics of the input. They are highly discriminative and have lower dimensionality than the raw input data, which not only conserves computational resources but also simplifies subsequent processing, making it especially beneficial in the analysis of high-dimensional data, such as images. This process also eliminates the tedious and error-prone process of handcrafted feature selection, leading to optimized feature sets and to the possibility of building the so-called “end-to-end” systems. Deep features can also help mitigate overfitting, a common challenge in machine learning, since by learning relevant representations, they prevent models from memorizing the training data and encourage more robust generalization.

Another great advantage of deep feature extraction pipelines is the possibility of using transfer learning techniques. In this case, a deep feature extraction network previously successfully developed on one task or dataset can be transferred and fine-tuned to another related task, significantly reducing the need for large, labeled datasets and speeding up model training. This versatility is a game changer in many applications.

After this extraction front end, continuing with the processing pipeline and moving towards the end of the network, fully connected layers are introduced. These layers come after the convolutional and pooling layers and play a pivotal role in feature aggregation and classification. The deep features extracted by the previous layers are flattened and processed through one or more fully connected layers.

Finally, the output layer emerges as the last layer of the network. The number of neurons in this layer corresponds to the number of classes in a classification task or the number of output units in a regression task. For classification tasks, a sigmoid or a softmax activation function is typically used to calculate class probabilities, providing the final output of the CNN [ 25 , 26 ]. A sigmoid function is commonly employed in binary classification, producing a single probability score indicating the likelihood of belonging to the positive class. The softmax function is favored for its ability to transform raw output scores into probability distributions across multiple classes. This conversion ensures that the computed probabilities represent the likelihood of the input belonging to each class, with the sum of probabilities equating to one, thereby constituting a valid probability distribution. Beyond this interpretability, both functions are differentiable, a critical attribute for the application of gradient-based optimization algorithms like backpropagation during training.

The described structured sequence of layers, from the input layer to the output layer, captures the hierarchical feature learning process in a CNN, allowing it to excel in image classification tasks (among others). Specific CNN architectures may introduce variations, additional components, or specialized layers based on the network’s design goals and requirements.

3.1. Transformers

CNNs are well suited for grid-like data, such as images, where local patterns can be captured efficiently. However, they struggle with sequential data because they lack a mechanism for modeling dependencies between distant elements (for example, in distinct time instants or far in the image). Also, CNNs do not inherently model the position or order of elements within the data. They rely on shared weight filters, which makes them translation invariant but can be problematic when absolute spatial relationships are important [ 27 ]. To overcome these limitations (handling sequential data, modeling long-range dependencies, incorporating positional information, and addressing tasks involving multimodal data, among others), transformers were introduced [ 28 ]. In the context of machine learning applied to images, transformers are a type of neural network architecture that extends the transformer model, originally designed for natural language processing [ 28 ], to handle computer vision tasks. These models are often referred to as vision transformers (ViTs) or image transformers [ 29 ] and come to introduce performance benefits, especially in noisy conditions [ 30 , 31 ]. In clinical settings, applications cover diagnosis and prognosis [ 32 ], encompassing classification, segmentation, and reconstruction tasks in distinct stages [ 31 , 33 ].

In vision transformers (ViT), the initial image undergoes a transformation process, wherein it is divided into a sequence of patches, as can be observed in Figure 2 . Each of these patches is associated with a positional encoding technique, which captures and encodes the spatial positions of the patches, thus preserving spatial information. These patches, together with a class token, are then input into a transformer model to perform multi-head self-attention (MHSA) and generate embeddings that represent the learned characteristics of the patches. The class token’s state in the ViT’s output underscores a pivotal aspect of the model’s architecture since it acts as a global aggregator of information from all patches, offering a comprehensive representation of the entire image. The token’s state is dynamically updated during processing, reflecting a holistic understanding that encapsulates both local details and also the broader context of the image. Finally, a multilayer perceptron (MLP) is employed for the purpose of classifying the learned image representation. Notably, in addition to using raw images, it is also possible to supply feature maps generated by convolutional neural networks (CNNs) as input into a vision transformer for the purpose of establishing relational mappings [ 34 ]. It is also possible to use the transformer’s encoding technique to explore the model’s explainability [ 35 ].

Pipeline for applying the transformer’s technique to images.

The attention mechanism is a fundamental component in transformers. It plays a pivotal role in enabling the model to selectively focus on different parts of the input data with varying degrees of attention. At its core, the attention mechanism allows the model to assign varying levels of importance to different elements within the input data. This means the model can “pay attention” to specific elements while processing the data, prioritizing those that are most relevant to the task at hand. This selective attention enhances the model’s ability to capture essential information and relationships within the input. The mechanism operates as follows: First, the input data is organized into a sequence of elements, such as tokens in a sentence for NLP or patches in an image for computer vision. Then, the mechanism introduces three sets of learnable parameters: query (Q), key (K), and value (V). The query represents the element of interest, while the key and value pairs are associated with each element in the input sequence. For each element in the input sequence, the attention mechanism calculates an attention score, reflecting the similarity between the query and the key for that element. The method used to measure this similarity can vary, with techniques like dot product and scaled dot product being common choices. These attention scores represent how relevant each element is to the query. The next step involves applying the softmax function to the attention scores. This converts them into weights that sum to one, effectively determining the importance of each input element concerning the query. The higher the weight, the more attention the model allocates to that specific element in the input data. Finally, the attention mechanism computes a weighted sum of the values, using the attention weights. The resulting output is a combination of information from all input elements, with elements more relevant to the query receiving higher weight in the final representation [ 36 , 37 ].

The attention mechanism can be used in various ways (attention gate [ 38 ], mixed attention [ 39 ], among others in the medical field), with one prominent variant being self-attention. In self-attention, the query, key, and value all originate from the same input sequence. This allows the architecture to model relationships and dependencies between elements within the same sequence, making it particularly useful for tasks that involve capturing long-range dependencies and context [ 7 , 40 , 41 ].

The original ViT architecture, as in Figure 3 a, was enhanced with the hierarchical vision transformer using shifted windows (SWIN transformer) [ 42 ] where a hierarchical partitioning of the image into patches is used. This means that the image is first divided into smaller patches, and then these patches are merged together as the network goes deeper, as in Figure 3 b. This hierarchical approach allows SWIN to capture both local and global features in the image, which can improve its performance on a variety of tasks. In the SWIN transformer, images of different resolutions belonging to outputs of different stages can be used to facilitate segmentation tasks.

Comparison of architecture operation when going deep in the network.

Another key difference between SWIN and ViT is that SWIN uses a shifted window self-attention mechanism, as depicted in Figure 4 . This means that the self-attention operation is only applied to a local window of patches, or in other words, to a limited number of neighbor patches (as represented in green in Figure 4 ) rather than the entire image. Then, in a second stage, the attention window focus location is shifted to a different location (by patch cyclic shifting). This shifted window approach comes to reduce the computational load and complexity of the self-attention operation, which can improve the efficiency of the SWIN architecture. These differences, when compared with the original ViT, allow a more efficient and scalable architecture, which were further refined in SWIN v2 [ 43 ].

Shifted window’s mechanism for the self-attention mechanism in the SWIN transformer.

The transformer-based approach has received a lot of attention due to its effectiveness, still with improvement opportunities [ 44 ]. The described innovations have been crucial in advancing the state of the art in medical image processing, covering machine learning tasks, such as classification, segmentation, synthesis (image or video), detection, and captioning [ 34 , 45 ]. By enhancing the model’s ability to focus on relevant information and understand complex relationships within the data, the attention mechanism represents a significant step in the improvement of the quality and effectiveness of various deep learning applications in the medical field.

Within the broad category of computer vision and artificial intelligence, the YOLO algorithm [ 46 ], which stands for “you only look once”, has gained a lot of popularity due to its performance in real-time object detection tasks. In the medical imaging field, the term “YOLO” is sometimes used more broadly to refer to implementations or systems that use one of the versions of the YOLO algorithm. It approaches object detection as a regression problem, predicting bounding box coordinates and class probabilities directly from the input image in a single pass through its underlying neural network (composed of backbone, neck, and head sections). This single-pass processing, where the image is divided into a grid for simultaneous predictions, distinguishes YOLO from other approaches and contributes to its exceptional speed. Postprediction, nonmaximum suppression is applied to filter redundant and low-confidence predictions, ensuring that each object is detected only once. In the medical field, YOLO has been used for a variety of imaging tasks, including cytology automation [ 47 ], detecting lung nodules in CT scans [ 48 ], segmentation of structures [ 49 ], detecting breast cancer in mammograms [ 50 ], or to track needles in ultrasound sequences [ 51 ], among others. YOLO’s fast and accurate object detection capabilities make it an excellent choice for many medical imaging applications.

Finally, it is noteworthy to highlight the emergence of hybrid approaches that combine the aforementioned algorithms, as observed in instances like TransU-net [ 52 ] or ViT-YOLO [ 53 ]. These combinations aim to leverage the strengths of each individual algorithm, with the objective of achieving performance enhancements. It is important to acknowledge, however, that these approaches are still in an early stage of development and are not explored here.

3.2. Generative Models